
NSTAT

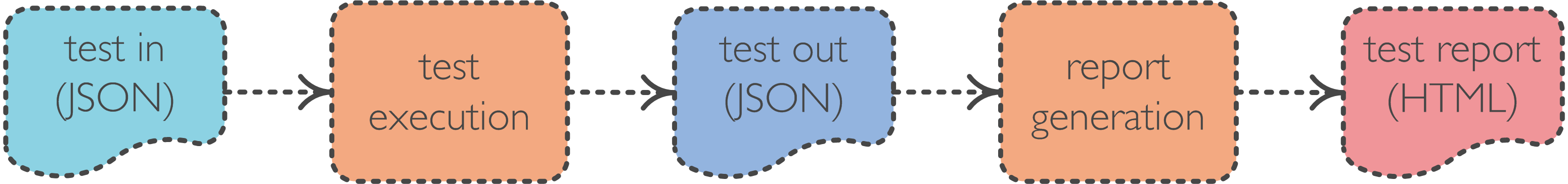

The general workflow of NSTAT is depicted below.

The user provides a JSON input file with the configuration parameters required by a test scenario. NSTAT parses these parameters, prepares and initiates the test components (controller, network emulators), and repeats the test for all different parameter combinations. Each repetition produces a set of performance results and statistics which are stored in an output JSON file. At the end, NSTAT scans this file and produces a detailed report and plots based on user configuration, all in a single HTML file.

NSTAT currently supports the following stress test scenarios:

- sb_active_scalability test, with switches in active mode (consistently initiating Packet-In events).
  - goal: explore the maximum number of switches the controller can sustain, and how the controller throughput scales with the topology size while traffic is present between the controller and switches.
  - network emulator: MT-Cbench, see the test description.
  - network emulator: Multinet, see the test description.
- sb_idle_scalability test, with switches in idle mode (not actively initiating traffic).
  - goal: explore the maximum number of switches the controller can sustain, and how a topology of a given size should be connected to the controller so that the latter can successfully discover it in the minimum time.
  - network emulator: MT-Cbench (switches are OF1.0 and the only available topology is disconnected), see the test description.
  - network emulator: Multinet, see the test description.
- sb_active_stability test, with switches in active mode.
- sb_idle_stability test, with switches in idle mode.
- nb_active_scalability flow scalability test, with NorthBound clients (apps) installing flows on the switches of a Multinet topology.

NSTAT provides fully automated controller and emulator lifecycle management. For this to be possible, it must be supplied with handling logic for those components.

This handling logic is responsible for getting (build handler) any controller, southbound, or northbound application software into the NSTAT workspace so that these components are available for the test, and finally for cleaning (clean handler) the NSTAT workspace of that software once test execution is over.

The code design section describes the conventions that the handling logic of a component should adhere to, in order for NSTAT to be able to execute tests with that component.
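The build/clean pairing described above can be sketched as a context manager. This is only an illustration under stated assumptions: `component_lifecycle` and the two zero-argument handler callables are hypothetical names, not part of NSTAT, and real handlers would fetch, build, and remove actual software.

```python
from contextlib import contextmanager


@contextmanager
def component_lifecycle(build_handler, clean_handler):
    """Run a build handler before the test body and a clean handler after it.

    Both handlers are hypothetical zero-argument callables standing in for
    whatever build/clean logic a component supplies.
    """
    build_handler()
    try:
        yield
    finally:
        # Clean the workspace even if the test body raised an exception.
        clean_handler()


# Record the order of events instead of touching a real workspace.
events = []
with component_lifecycle(lambda: events.append("build"),
                         lambda: events.append("clean")):
    events.append("test")

print(events)
```

The `try`/`finally` reflects the idea that the workspace should be cleaned once the test is over, whether or not the test succeeded.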

    $ python3.4 ./nstat --help
    usage: nstat.py [-h] --test TEST_TYPE [--bypass-execution]
                    --ctrl-base-dir CTRL_BASE_DIR
                    --sb-emulator-base-dir SB_EMU_BASE_DIR
                    [--nb-emulator-base-dir NB_EMU_BASE_DIR]
                    --json-config JSON_CONFIG --json-output
                    JSON_OUTPUT --html-report HTML_REPORT
                    [--log-file LOG_FILE] --output-dir OUTPUT_DIR
                    [--logging-level LOGGING_LEVEL]

      -h, --help            show this help message and exit
      --test TEST_TYPE      Test type:
                            {sb_active_scalability,
                            sb_idle_scalability,
                            sb_active_stability,
                            sb_idle_stability,
                            nb_active_scalability}
      --bypass-execution    bypass test execution and proceed to report
                            generation, based on an existing output.
      --ctrl-base-dir CTRL_BASE_DIR
                            controller base directory
      --sb-emulator-base-dir SB_EMU_BASE_DIR
                            southbound emulator base directory,
                            supported network emulators: MT-Cbench, Multinet
      --nb-emulator-base-dir NB_EMU_BASE_DIR
                            northbound emulator base directory
      --json-config JSON_CONFIG
                            json test input (configuration) file name
      --json-output JSON_OUTPUT
                            json test output (results) file name
      --html-report HTML_REPORT
                            html report file name
      --log-file LOG_FILE   log file name
      --output-dir OUTPUT_DIR
                            result files output directory
      --logging-level LOGGING_LEVEL
                            LOG level set. Possible values are:
                            INFO
                            DEBUG (default)
                            ERROR

The command-line options for NSTAT are:

- `--test` can be set to any of the supported test scenarios described above (sb_active_scalability, sb_idle_scalability, sb_active_stability, sb_idle_stability, nb_active_scalability).
- `--bypass-execution` (optional): if this option is specified, the test execution stage of the NSTAT workflow is bypassed and an existing JSON output is used to produce the `*.html` report.
- `--ctrl-base-dir` specifies the controller base directory, i.e. the directory containing the controller `build/clean` handlers.
- `--sb-emulator-base-dir` specifies the southbound emulator base directory, i.e. the directory containing the southbound emulator `build/clean` handlers.
- `--nb-emulator-base-dir` specifies the northbound emulator base directory, i.e. the directory containing the northbound emulator `build/clean` handlers.
- `--json-config` specifies the name of the JSON file containing the test configuration parameters.
- `--json-output` specifies the name of the JSON output file produced after test execution.
- `--html-report` specifies the name of the HTML report produced after result analysis.
- `--log-file` (optional) specifies the name of the log file used to capture all output from the test. If this option is not specified, all output is printed to the console.
- `--output-dir` specifies the name of the directory where all produced files (JSON output, plots, HTML report, controller logs) are stored at the end.
- `--logging-level` specifies the NSTAT logging level during test execution. Three logging levels are supported: INFO, DEBUG (default), and ERROR.
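As a rough illustration of how these options fit together, the sketch below assembles an NSTAT command line from a dictionary and checks that the options shown without brackets in the usage text are present. The helper `build_nstat_command` and all directory and file names are hypothetical placeholders, not part of NSTAT; only the option names and the `python3.4 ./nstat` invocation come from the text above.

```python
import shlex

# Options that the usage text lists without square brackets.
REQUIRED_OPTIONS = (
    "test", "ctrl-base-dir", "sb-emulator-base-dir",
    "json-config", "json-output", "html-report", "output-dir",
)


def build_nstat_command(options, bypass_execution=False):
    """Return an argument list for NSTAT (hypothetical helper)."""
    missing = [name for name in REQUIRED_OPTIONS if name not in options]
    if missing:
        raise ValueError("missing required options: " + ", ".join(missing))
    command = ["python3.4", "./nstat"]
    for name, value in options.items():
        command += ["--" + name, str(value)]
    if bypass_execution:
        command.append("--bypass-execution")
    return command


# All paths and file names below are placeholders chosen for illustration.
command = build_nstat_command({
    "test": "sb_active_scalability",
    "ctrl-base-dir": "./my_controller",
    "sb-emulator-base-dir": "./my_sb_emulator",
    "json-config": "./my_test_config.json",
    "json-output": "./my_results.json",
    "html-report": "./my_report.html",
    "output-dir": "./my_results_dir",
    "logging-level": "INFO",
})
print(" ".join(shlex.quote(part) for part in command))
```

The resulting list can be passed to `subprocess.run` as is; building a list rather than a single string avoids shell quoting problems with paths that contain spaces.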

A test is described end to end, from controller/emulator management to report generation, by an input JSON file that contains the test configuration keys.

The configuration keys are test parameters that describe every aspect of test execution, e.g. handling logic, execution environment parameters, test parameters, remote nodes deployment parameters etc.

The configuration keys for each test scenario are described in detail at the corresponding wiki pages.

Of particular importance are the array-valued configuration keys, i.e. those encoded as JSON arrays. These define the test dimensions of a test scenario. A test dimension affects the output of a stress test, which is why NSTAT repeats the test for every value of the array. In other words, the test dimensions determine the experimental space of a test scenario.
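The idea of test dimensions can be sketched as a Cartesian product over the array-valued keys. This is a minimal illustration, not NSTAT's actual code: the key names in `config_text` are invented for the example, and `expand_test_dimensions` is a hypothetical helper.

```python
import itertools
import json

# Hypothetical configuration: two array-valued keys (test dimensions)
# and one scalar key. The key names are made up for this example.
config_text = """
{
  "hypothetical_switch_counts": [100, 200],
  "hypothetical_thread_counts": [1, 2, 4],
  "hypothetical_timeout_ms": 5000
}
"""


def expand_test_dimensions(config):
    """Return one parameter dict per combination of array-valued keys."""
    array_keys = [key for key, value in config.items()
                  if isinstance(value, list)]
    scalars = {key: value for key, value in config.items()
               if not isinstance(value, list)}
    runs = []
    for values in itertools.product(*(config[key] for key in array_keys)):
        run = dict(scalars)                 # scalar keys are shared by all runs
        run.update(zip(array_keys, values)) # one value per test dimension
        runs.append(run)
    return runs


runs = expand_test_dimensions(json.loads(config_text))
print(len(runs))  # 2 * 3 = 6 combinations
print(runs[0])
```

Each additional array-valued key multiplies the number of repetitions, so the size of the experimental space is the product of the array lengths.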

The results produced by NSTAT are stored in a JSON output file and organized as an array of result objects (or samples). A result object is a set of result key-value pairs, i.e. a set of key-value pairs that fully describe the results of a test iteration. These values typically include:

- the values of the test dimensions for this test iteration,

- the actual performance results,

- secondary runtime statistics,

- other test configuration parameters.

Again, the result keys for each test scenario are described at the corresponding wiki pages.
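Because the output is a JSON array of result objects, it can be post-processed with a few lines of Python. The sketch below is illustrative only: the result keys are invented placeholders (the real keys are listed on the wiki pages for each scenario), and the output is embedded as a string so the example is self-contained.

```python
import json

# Hypothetical output file contents: an array of result objects (samples).
output_text = """
[
  {"hypothetical_switches": 100, "hypothetical_throughput": 1200.5},
  {"hypothetical_switches": 200, "hypothetical_throughput": 1100.0}
]
"""

samples = json.loads(output_text)


def column(samples, key):
    """Collect the value of one result key across all samples."""
    return [sample[key] for sample in samples]


# Pick the sample with the highest value of a (hypothetical) result key.
best = max(samples, key=lambda sample: sample["hypothetical_throughput"])

print(column(samples, "hypothetical_switches"))
print(best)
```

With a real output file, `json.loads(output_text)` would be replaced by `json.load` on the opened file.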

To produce customized plots on the results, refer to the plotting page for detailed info.
