
Error when using microk8s: failed to save outputs: Error response from daemon: No such container: #4302

Comments


Can you click the step and see what the error message is?


This step is in Error state with this message: failed to save outputs: Error response from daemon: No such container: d98f03a40af58bc2f1a623005d9da0a6b6838822ebf0d11fcf0894291d3d5782

```python
# Define a Python function
def add(a: float, b: float) -> float:
    '''Calculates sum of two arguments'''
    print("in add function")
    return a + b
```

Please see the container log file attached to the issue for more details.
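The component body is trivial, and the attached log comes from the wait container, which suggests the failure happens when the step's outputs are collected rather than inside add. The sketch below checks the function locally under a simplifying assumption: that a lightweight component roughly amounts to calling the function and writing its return value to an output file for the executor to pick up. The wrapper run_and_save_output and the output path are hypothetical, not the code KFP actually generates.

```python
import os
import tempfile


def add(a: float, b: float) -> float:
    '''Calculates sum of two arguments'''
    print("in add function")
    return a + b


def run_and_save_output(a: float, b: float, output_path: str) -> float:
    """Hypothetical stand-in for a component wrapper: call add() and write
    the result to a file that an executor would later collect."""
    result = add(a, b)
    os.makedirs(os.path.dirname(output_path), exist_ok=True)
    with open(output_path, "w") as f:
        f.write(str(result))
    return result


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        # Hypothetical output location; the real path is chosen by KFP/Argo.
        out = os.path.join(tmp, "outputs", "Output")
        run_and_save_output(1.0, 2.5, out)
        with open(out) as f:
            print(f.read())  # the value the executor would need to read back
```

If this runs cleanly, the function itself is unlikely to be the cause, which points back at the executor or the cluster runtime.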


Hi @tomalbrecht, I think I've managed to replicate the error; in my case it was due to missing capabilities in a pod security policy (PSP).

A quick & dirty test: if you have a PSP deployed, you can simply add:

@Bobgy maybe we should add this to the troubleshooting section for Jupyter/KFP? I can do that.


@alfsuse Thanks for your help. I deployed these manifests from kubeflow.org on microk8s. Within the kustomize directory (

I am running the code above as a user (not admin), added as suggested here:

Edit: Running the code as the 'admin@kubeflow.org' user yields the same results as described above, so running as admin is not a workaround.


@tomalbrecht I'm not sure the cluster roles or the user are related in this context. I think the issue depends on the presence of a PSP. Can you run

```yaml
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: privileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: '*'
spec:
  privileged: true
  allowPrivilegeEscalation: true
  # this section is probably missing in your PSP
  allowedCapabilities:
  - '*'
  ########## end of section
  volumes:
  - '*'
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  hostIPC: true
  hostPID: true
  runAsUser:
    rule: 'RunAsAny'
  seLinux:
    rule: 'RunAsAny'
  supplementalGroups:
    rule: 'RunAsAny'
  fsGroup:
    rule: 'RunAsAny'
```
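To check whether an existing PSP already has the allowedCapabilities section, one option is to inspect the policy as JSON, for example the output of kubectl get psp privileged -o json. A minimal sketch, assuming the policy has been loaded into a plain Python dict; the helper names are hypothetical, and NET_ADMIN below is just an arbitrary example capability.

```python
import json


def allowed_capabilities(psp: dict) -> list:
    """Return spec.allowedCapabilities of a PodSecurityPolicy dict (empty if absent)."""
    return psp.get("spec", {}).get("allowedCapabilities") or []


def permits_capability(psp: dict, capability: str) -> bool:
    """True if the policy allows adding `capability`, explicitly or via '*'."""
    caps = allowed_capabilities(psp)
    return "*" in caps or capability in caps


# Hypothetical input, trimmed to the fields used above.
psp_json = '''
{"apiVersion": "policy/v1beta1", "kind": "PodSecurityPolicy",
 "metadata": {"name": "privileged"},
 "spec": {"privileged": true, "allowedCapabilities": ["*"]}}
'''
psp = json.loads(psp_json)
print(permits_capability(psp, "NET_ADMIN"))  # any capability passes because of '*'
```

A policy without the section returns an empty list here, which is the case the YAML comment above points at.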


@alfsuse I installed Kubeflow with RBAC enabled, if that matters. But PodSecurityPolicy seems to be a cluster-level resource, so maybe not. Anyway, here's the complete output. I edited the field 'allowedCapabilities' as you suggested and then ran the code again. It took some time, during which I got this message. But then I got the same result. I created a fresh notebook server, so I assume the new policies were applied. Any ideas?


More information: this time it seems several different resources failed.

Because of this, I redeployed deployment/metadata-deployment, which at least fixed the failed metadata containers. Then I recreated the notebook and ran the test again. Nothing changed: the pipeline container still fails.


Thanks @tomalbrecht, a couple more questions:

I'm currently working on three different clusters (1.18.4, 1.18.2 and 1.16.9), with and without PSPs, and it works fine. I can try to redeploy with the same manifest as yours and see if anything changes.


Thanks, I will take a look at #4138 in case I stick with Kubernetes 1.18.6. I am not aware of any PSP deployments. Right now I am testing in a single-user environment with full access, so if there are any, they were deployed by microk8s (juju) or the Kubeflow manifests. My install process (scripted) is as follows:

I will install an older version of microk8s for now and see if that helps.


Older versions of microk8s in combination with Kubeflow 1.0.2 don't work either; the tutorial mentioned above still fails.

```
# list available versions of microk8s
snap info microk8s
channels:
  latest/stable:     v1.18.6          2020-07-25 (1551) 215MB classic
  latest/candidate:  v1.18.6          2020-07-16 (1551) 215MB classic
  latest/beta:       v1.18.6          2020-07-16 (1551) 215MB classic
  latest/edge:       v1.18.6          2020-07-31 (1584) 215MB classic
  dqlite/stable:     –
  dqlite/candidate:  –
  dqlite/beta:       –
  dqlite/edge:       v1.16.2          2019-11-07 (1038) 189MB classic
  1.19/stable:       –
  1.19/candidate:    v1.19.0-rc.3     2020-07-29 (1573) 220MB classic
  1.19/beta:         v1.19.0-beta.2   2020-06-13 (1478) 213MB classic
  1.19/edge:         v1.19.0-alpha.3  2020-06-05 (1457) 210MB classic
  1.18/stable:       v1.18.6          2020-07-26 (1550) 201MB classic
  1.18/candidate:    v1.18.6          2020-07-22 (1550) 201MB classic
  1.18/beta:         v1.18.6          2020-07-22 (1550) 201MB classic
  1.18/edge:         v1.18.6          2020-07-15 (1550) 201MB classic
  1.17/stable:       v1.17.9          2020-07-26 (1549) 179MB classic
  1.17/candidate:    v1.17.9          2020-07-21 (1549) 179MB classic
  1.17/beta:         v1.17.9          2020-07-21 (1549) 179MB classic
  1.17/edge:         v1.17.9          2020-07-31 (1586) 179MB classic
  1.16/stable:       v1.16.8          2020-03-27 (1302) 179MB classic
  1.16/candidate:    v1.16.13         2020-08-02 (1587) 179MB classic
  1.16/beta:         v1.16.13         2020-08-02 (1587) 179MB classic
  1.16/edge:         v1.16.13         2020-07-31 (1587) 179MB classic
  1.15/stable:       v1.15.11         2020-03-27 (1301) 171MB classic
  1.15/candidate:    v1.15.11         2020-03-27 (1301) 171MB classic
  1.15/beta:         v1.15.11         2020-03-27 (1301) 171MB classic
  1.15/edge:         v1.15.11         2020-03-26 (1301) 171MB classic
  1.14/stable:       v1.14.10         2020-01-06 (1120) 217MB classic
  ...
```

Test 1: microk8s 1.16/stable (v1.16.8): FAILED with "No such container".

Test 2: microk8s 1.15/stable (v1.15.11): installation FAILED. It gets stuck at "Waiting for kubernetes core services to be ready.." and kubectl cannot connect to the Kubernetes API.

Test 3: microk8s 1.14/stable (v1.14.10): FAILED with "No such container".

I'd like to take a deeper look into it. But what does the process look like, so I can check it? Where should I look?

Question: What other containers and services are involved in this process? I cannot see any difference using older versions of microk8s, so I'll keep debugging microk8s v1.18.6. Any help appreciated.
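Since the same tutorial is being rerun against several microk8s channels, the channel listing from snap info can be parsed to script such a sweep. A rough sketch, assuming the "channel: version date (revision) size notes" layout shown in the output above, with "–" marking channels that have no release; the helper names are hypothetical.

```python
import re

# Matches lines such as "1.16/stable: v1.16.8 2020-03-27 (1302) 179MB classic".
CHANNEL_LINE = re.compile(r"^\s*([\w.\-]+/[\w\-]+):\s+(\S+)")


def parse_channels(snap_info_output: str) -> dict:
    """Map each channel (e.g. '1.16/stable') to its version string."""
    channels = {}
    for line in snap_info_output.splitlines():
        match = CHANNEL_LINE.match(line)
        if match:
            channels[match.group(1)] = match.group(2)
    return channels


def stable_releases(channels: dict) -> dict:
    """Keep only */stable channels that actually carry a release."""
    return {
        name: version
        for name, version in channels.items()
        if name.endswith("/stable") and version.startswith("v")
    }


sample = """channels:
  latest/stable: v1.18.6 2020-07-25 (1551) 215MB classic
  dqlite/stable: –
  1.16/stable: v1.16.8 2020-03-27 (1302) 179MB classic
  1.15/stable: v1.15.11 2020-03-27 (1301) 171MB classic
  1.16/edge: v1.16.13 2020-07-31 (1587) 179MB classic
"""
for channel, version in stable_releases(parse_channels(sample)).items():
    # Each channel could then be installed with:
    #   sudo snap install microk8s --classic --channel=<channel>
    print(channel, version)
```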


Hi @tomalbrecht, I finally replicated the error on microk8s and found that it is directly related to microk8s itself. I found the same error here: jupyterhub/zero-to-jupyterhub-k8s#1189 (comment). The problem seems to be related to the way microk8s manages security contexts.


Wrap up for microk8s 1.18.6 and kubeflow 1.0.2.


https://github.com/kubeflow/manifests/blob/master/pipeline/upstream/base/cache-deployer/cache-deployer-deployment.yaml


So I reinstalled microk8s with Kubernetes v1.18.6 and enabled Kubeflow only through microk8s itself (microk8s enable dns kubeflow storage), which installs everything else, then deployed a notebook from within Kubeflow and ran the sample.

I think the issue at this point is that microk8s uses some specific configuration that does not permit the standard installation via manifests.


I wonder how to proceed from here. microk8s.enable kubeflow does not seem to be the right solution for us: Kubeflow is then managed by juju (within microk8s), so it's not easy to patch Kubeflow.


I don't know your specific use case, but if you need to manage the manifests yourself, minikube is probably a good solution (in case you need GPU support), or else kind or k3s.

There's a new manifest that has been released for this case: https://github.com/kubeflow/pipelines/tree/master/manifests/kustomize/env/platform-agnostic-pns |
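The platform-agnostic-pns manifest switches Argo to the PNS executor. For an existing Kubeflow 1.0.2 install, a similar change can be made by editing the Argo workflow-controller configmap by hand. The sketch below only rewrites the executor setting inside that configmap's text, assuming the text contains a line like "containerRuntimeExecutor: docker,"; that layout, the sample excerpt, and the function name are assumptions, so check your own configmap first. Note the caveat about PNS, outputs, and emptyDir volumes raised elsewhere in this thread.

```python
import re

# Matches the executor setting, e.g. "containerRuntimeExecutor: docker,".
EXECUTOR_SETTING = re.compile(r"(containerRuntimeExecutor:\s*)(\w+)")


def set_executor(config_text: str, executor: str = "pns") -> str:
    """Return config_text with the containerRuntimeExecutor value replaced.

    Raises ValueError if the setting is absent, so a silent no-op cannot be
    mistaken for a successful patch.
    """
    patched, count = EXECUTOR_SETTING.subn(
        lambda m: m.group(1) + executor, config_text
    )
    if count == 0:
        raise ValueError("containerRuntimeExecutor setting not found")
    return patched


# Hypothetical excerpt of the configmap's embedded config text.
original = """{
containerRuntimeExecutor: docker,
artifactRepository: {}
}"""
print(set_executor(original))
```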


Thanks again. Yes, I'd like to manage the manifests. I'll take a look.


Ping me on the KF Slack channels if you need any help with those.


Running the same tutorial code on minikube works (without the patches mentioned above). I opened an issue in the microk8s repository: canonical/microk8s#1472


@alfsuse Regarding patching: maybe it would help to extend the troubleshooting documentation. I patched the config map within the Kubernetes dashboard; I had no idea how to merge the manifest (#4302 (comment)) into my existing Kubeflow manifests (https://github.com/kubeflow/manifests/blob/master/kfdef/kfctl_istio_dex.v1.0.2.yaml).

Interesting. I wanted to propose PNS, but then I remembered that people trying to use the PNS executor get errors about outputs and emptyDir volumes, so I decided not to have you jump through that hoop. It's interesting that it worked for you.


@Ark-kun: It does work for me on microk8s v1.18.6 and k3s v1.16.13+k3s1. Minikube v1.2.0 runs without PNS when the driver is set to none during installation (which means minikube uses the host Docker installation). Everything was set up on Ubuntu 18.04 with the latest updates.
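One plausible reading of these results, offered as a hypothesis rather than a confirmed diagnosis: the default docker executor asks a Docker daemon about the step's container, which fits minikube with the none driver (pods run in the host Docker), while microk8s and k3s run pods under containerd, where a Docker daemon would not know the container ID ("No such container"). A hedged diagnostic sketch reads each node's status.nodeInfo.containerRuntimeVersion from the JSON printed by kubectl get nodes -o json; the helper names and the all-nodes-must-run-Docker heuristic are assumptions.

```python
import json


def container_runtimes(nodes_json: str) -> dict:
    """Map node name -> runtime name from `kubectl get nodes -o json` output.

    Uses status.nodeInfo.containerRuntimeVersion, e.g. "containerd://1.3.3".
    """
    runtimes = {}
    for node in json.loads(nodes_json).get("items", []):
        version = node["status"]["nodeInfo"]["containerRuntimeVersion"]
        runtimes[node["metadata"]["name"]] = version.split("://", 1)[0]
    return runtimes


def docker_executor_plausible(runtimes: dict) -> bool:
    """Heuristic (assumption): the docker executor needs every node on Docker."""
    return bool(runtimes) and all(r == "docker" for r in runtimes.values())


# Hypothetical, heavily trimmed output for a single microk8s node.
sample = json.dumps({"items": [{
    "metadata": {"name": "node-1"},
    "status": {"nodeInfo": {"containerRuntimeVersion": "containerd://1.3.3"}},
}]})
runtimes = container_runtimes(sample)
print(runtimes, docker_executor_plausible(runtimes))
```

If the check comes back False, switching to an executor that does not depend on Docker, such as PNS, is the direction discussed in this thread.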


FYI, some contributors have documented the PNS executor for local k8s clusters: kubeflow/website#2056.


Ohhh, they are actually already commenting above. |

What steps did you take:

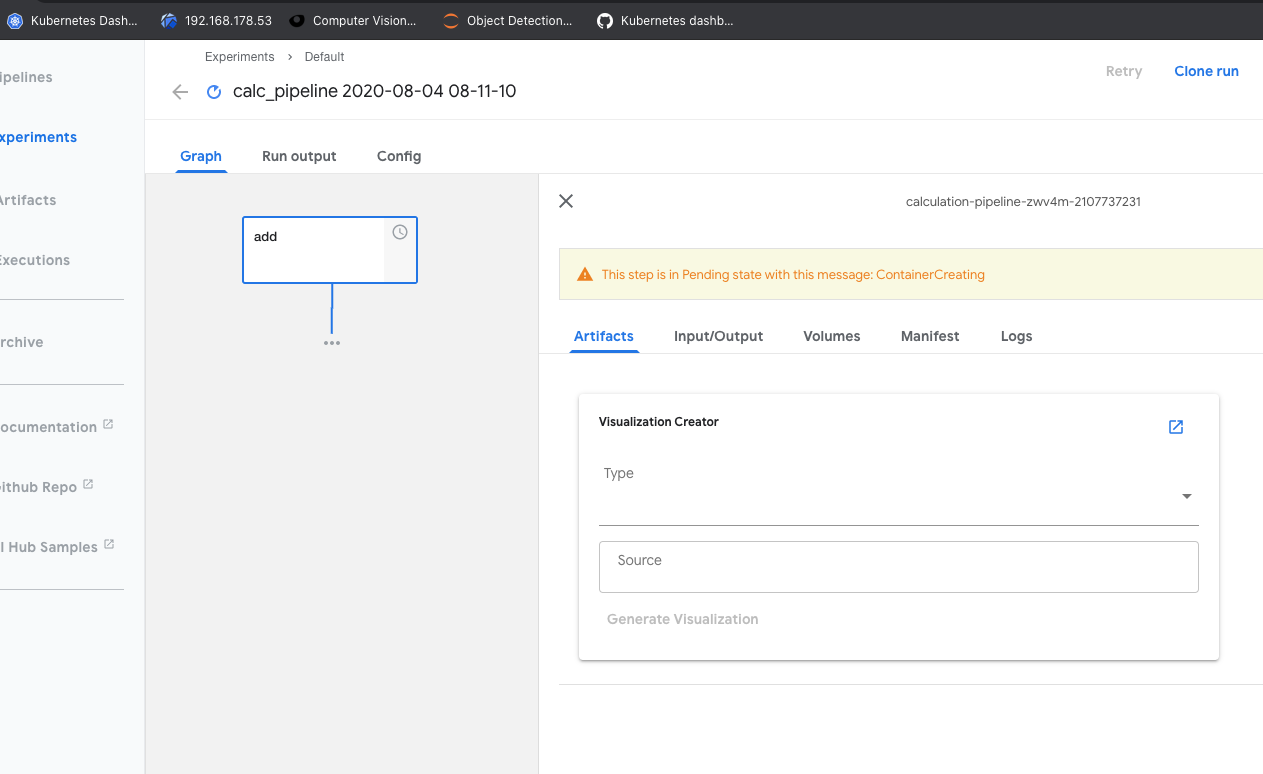

I ran /samples/core/lightweight_component/lightweight_component.ipynb. Running all cells seems ok. I did not change the tutorial code.

What happened:

When viewing the run, the pipeline crashed within the "add" function.

What did you expect to happen:

I expected the pipeline to complete without errors.

Environment:

How did you deploy Kubeflow Pipelines (KFP)?

As part of a full Kubeflow 1.0.2 deployment.

KFP version: Build commit: 743746b

KFP SDK version:

Anything else you would like to add:

logs-from-wait-in-calculation-pipeline-g7df4-1345834039.txt

Any workarounds or ideas how to further debug and fix it?

/kind bug
