etcd and kube-apiserver does not start after incorrect machine shutdown #88574

Comments

sorry but how?

What is the content of the etcd container logs? /cc @jpbetz

/sig api-machinery

Is this what you want?

is the

Yes, it is present. No, there is no side process or job that cleans it up. Should I clean it up now?

no. does this cluster have more etcd instances / control-plane Nodes? if yes, are you seeing the same issue on those?

no, it is a single node cluster

From the etcd log:

It seems the panic is by design if the recovery fails (from server.go):

I've tried to rename

If I understand correctly, the issue is due to a broken etcd database. Are there any best practices for periodically backing up the etcd database during cluster operation? Or is this type of issue normal when a cluster is shut down incorrectly? What is the best way to avoid this?

the folder contains the data of your existing cluster. deleting it would mean data loss.

this seems more like a bug in the etcd server where it cannot restore its previous state.

these docs provide some guidelines:

if you had multiple etcd members, it is possible to restore the overall cluster state from a healthy member.

if you have used

/assign @jingyih

@fedebongio: GitHub didn't allow me to assign the following users: jingyih. Note that only kubernetes members, repo collaborators and people who have commented on this issue/PR can be assigned. Additionally, issues/PRs can only have 10 assignees at the same time. In response to this:

Instructions for interacting with me using PR comments are available here. If you have questions or suggestions related to my behavior, please file an issue against the kubernetes/test-infra repository.

No, this is not normal. etcd is designed to be recoverable from an unexpected shutdown. While I am trying to reproduce this issue, could you add "--logger=zap" to the etcd start command (in etcd.yaml) on your side? It will print more info, such as exactly which snapshot file it fails to find.

@mcajkovs, the latest etcd log you provided suggests that the etcd server started successfully. The following log entry suggests that no snapshot file was missing. Has anything changed since the last panic?

Oh, I'm sorry - I've posted the etcd output that was created as a result of renaming

Here is the content of

Got it. The relevant log entries are:

So etcd is trying to open

cc @gyuho Could you also help take a look?

Not sure why it is looking for

@mcajkovs how reproducible is this issue on your VM? Let's say we create a fresh etcd (fresh k8s cluster), and then the VM is shut down unexpectedly (for example, due to a power cut). Does it always end up in this state, where the restarting etcd panics?

@mcajkovs Did you remove any files in the snap directory? Or it could be old snapshots that have already been discarded on the local node (but the old incoming snapshot handler should not even reach this code path...)

We encountered the same issue with etcd-3.4.3 after a power cut. Two of the three nodes in the cluster failed to recover, with the following message:

The snap dir of the failed nodes:

One of them can recover, but it can't reach consensus without one more healthy node. Here's its log:

and its snap directory tree:

Since one of the nodes can recover, we add

I'm wondering why it can recover with the same(?) snap db but the other two can't? The recovery process seems to be looking for a

Same here: after a power loss, one node of my 3-node cluster cannot start, with the same panic.

Same problem here with single node cluster with

{"level":"warn","ts":"2020-09-25T06:40:27.621Z","caller":"snap/db.go:92","msg":"failed to find [SNAPSHOT-INDEX].snap.db","snapshot-index":55435591,"snapshot-file-path":"/var/lib/etcd/member/snap/00000000034de147.snap.db","error":"snap: snapshot file doesn't exist"}
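The log line above pairs a snapshot index with a file name, and the two agree: 55435591 written as a zero-padded, 16-digit hexadecimal number is 00000000034de147. As a minimal sketch (the function name `snap_db_name` is made up for illustration, and it only mirrors the naming visible in this log line, not etcd's own code), the file name for a given index can be computed in the shell and compared with what is actually in the snap directory:

```shell
#!/bin/sh
# Hypothetical helper: derive the <index>.snap.db file name reported in the
# log message by printing the decimal snapshot index as 16 hex digits.
snap_db_name() {
    printf '%016x.snap.db\n' "$1"
}

# Index taken from the log line above.
snap_db_name 55435591
```

Listing /var/lib/etcd/member/snap (the path from the log) and looking for that name shows whether the file etcd is asking for is really absent.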

Can we recover part of the data? I really don't want to reinstall the environments, including other k8s-related projects, which took me many weeks.

I ended up doing a scheduled job as a workaround in the meantime with something like this to back up every 6 hours for 30 days:

    apiVersion: batch/v1beta1
    kind: CronJob
    metadata:
      name: backup
      namespace: kube-system
    spec:
      concurrencyPolicy: Allow
      failedJobsHistoryLimit: 1
      jobTemplate:
        spec:
          template:
            spec:
              containers:
              - args:
                - -c
                - etcdctl --endpoints=https://127.0.0.1:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt
                  --cert=/etc/kubernetes/pki/etcd/healthcheck-client.crt --key=/etc/kubernetes/pki/etcd/healthcheck-client.key
                  snapshot save /backup/etcd-snapshot-$(date +%Y-%m-%d_%H:%M:%S_%Z).db
                command:
                - /bin/sh
                env:
                - name: ETCDCTL_API
                  value: "3"
                image: k8s.gcr.io/etcd:3.4.3-0
                imagePullPolicy: IfNotPresent
                name: backup
                resources: {}
                terminationMessagePath: /dev/termination-log
                terminationMessagePolicy: File
                volumeMounts:
                - mountPath: /etc/kubernetes/pki/etcd
                  name: etcd-certs
                  readOnly: true
                - mountPath: /backup
                  name: backup
              - args:
                - -c
                - find /backup -type f -mtime +30 -exec rm -f {} \;
                command:
                - /bin/sh
                env:
                - name: ETCDCTL_API
                  value: "3"
                image: k8s.gcr.io/etcd:3.4.3-0
                imagePullPolicy: IfNotPresent
                name: cleanup
                resources: {}
                terminationMessagePath: /dev/termination-log
                terminationMessagePolicy: File
                volumeMounts:
                - mountPath: /backup
                  name: backup
              dnsPolicy: ClusterFirst
              hostNetwork: true
              nodeName: YOUR_MASTER_NODE_NAME
              restartPolicy: OnFailure
              schedulerName: default-scheduler
              securityContext: {}
              terminationGracePeriodSeconds: 30
              volumes:
              - hostPath:
                  path: /etc/kubernetes/pki/etcd
                  type: DirectoryOrCreate
                name: etcd-certs
              - hostPath:
                  path: /opt/etcd_backups
                  type: DirectoryOrCreate
                name: backup
      schedule: 0 */6 * * *
      successfulJobsHistoryLimit: 3
      suspend: false
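The cleanup container relies on `find ... -mtime +30`, which matches files whose age, counted in whole 24-hour periods, is greater than 30. Before trusting a delete like that, it can help to preview what it would match. A minimal sketch, assuming a POSIX shell and `find` (the function name `list_expired` is hypothetical, not part of the job above):

```shell
#!/bin/sh
# Hypothetical dry-run helper: print the files under a directory that
# `find -mtime +DAYS` would match, without deleting anything.
list_expired() {
    dir=$1
    days=${2:-30}
    find "$dir" -type f -mtime +"$days" -print
}

# Example: preview what the cleanup container would remove from /backup.
# Errors (for example a missing directory) are silenced here so the
# example is safe to run anywhere.
list_expired /backup 30 2>/dev/null || true
```

Swapping `-print` for `-exec rm -f {} \;` gives back the destructive form used in the cleanup container.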

This is great! I tried running it, but the output file name didn't have the date: any ideas why? Edit: I just ran a test and watched the logs: it seems the date command is not found.
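A file name without the timestamp is consistent with `date` being absent: when the command inside `$(date ...)` cannot be found, the substitution expands to an empty string and the rest of the command line still runs. One way to catch this early is a pre-flight check that fails loudly instead of silently writing a badly named file. This is only a sketch under assumptions: the function name `require_cmds` is made up, and which tools a given image ships is something to verify, not something this thread establishes.

```shell
#!/bin/sh
# Hypothetical pre-flight check: report every command that is missing from
# the container image, and return non-zero if any of them is absent.
require_cmds() {
    missing=0
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "missing command: $cmd" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example: check the tools the backup one-liner relies on before using them.
if require_cmds date etcdctl; then
    echo "all required commands found"
else
    echo "backup would produce a bad file name or fail" >&2
fi
```

Running this as the first step of the container's `/bin/sh -c` command would turn a silently wrong file name into a visible failure in the job logs.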

I installed Kubernetes through RKE, and the 3 etcd nodes of my k8s cluster are OK, but the etcd server on the Rancher host (rancher/rancher:v2.3.2) has this problem. All kubectl commands work and I can connect to the API to interact with it, but the Rancher interface is down.

This happened to me as well and I had to go through these steps and then reconfigure to use the new, restored, etcd cluster.

@mamiapatrick rancher problems, please contact rancher. etcd problems, please contact the etcd community. thanks!

Seems like I've found the reason for this behavior - the

The main problem is that what was corrupted was the

Same issue after a power-down. Can it be restored to some point using the .snap and .wal files, or should I just destroy the cluster?

@deeco unfortunately, in my case, I needed to set up my whole cluster again from scratch, because I had only 1

Same here, not ideal, and I will have to reimplement everything :(

Same issue with

for a temporary fix, and then I found that the cluster is really clean; only the static pods are left. Tip: do backups while the cluster is healthy!!

Issues go stale after 90d of inactivity. If this issue is safe to close now please do so with /close. Send feedback to sig-contributor-experience at kubernetes/community.

Stale issues rot after 30d of inactivity. If this issue is safe to close now please do so with /close. Send feedback to sig-contributor-experience at kubernetes/community.

Rotten issues close after 30d of inactivity. Send feedback to sig-contributor-experience at kubernetes/community.

@fejta-bot: Closing this issue. In response to this:


Why is this issue closed? No concrete solution has been found yet. I am facing the same issue today.

Same question: how to recover data when the etcd cluster has only one node?

Same question. Is there a solution now?

It's been many years. Here is my solution; I hope it can help someone.

Follow the instructions here: https://etcd.io/docs/v3.5/tutorials/how-to-deal-with-membership/

Still facing this issue on a RHEL 8.3 machine.

I was told that this is the correct issue tracker for my problem. I previously posted this issue here

Environment

Installation and setup

I've installed k8s on a VMware Workstation virtual machine (VM) using the following steps:

Problem

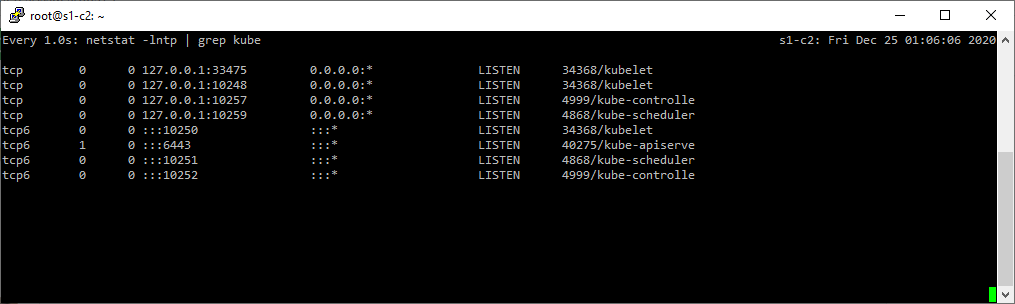

k8s does not start after boot. As a result, I cannot use kubectl to communicate with k8s. I think the main problem is that the apiserver keeps restarting and etcd does not start.

I've also tried the following, with the same result:

What you expected to happen?

Start k8s after VM boot.

How to reproduce it (as minimally and precisely as possible)?

Shut down VM incorrectly (e.g. kill VM process, power off host machine etc.) and start VM again.

Anything else we need to know?

I have NOT observed this problem when I shut down the VM correctly. But if the VM is shut down incorrectly (killed process etc.), then this happens. If I do kubeadm reset and set up k8s again according to the above steps, then it works.

content of /etc/kubernetes/manifests files

etcd.yaml

kube-apiserver.yaml

kube-controller-manager.yaml

kube-scheduler.yaml
