
/stats/summary endpoints failing with "Context Deadline Exceeded" on Windows nodes with very high CPU usage #95735

Comments

@marosset: This issue is currently awaiting triage. If a SIG or subproject determines this is a relevant issue, they will accept it by applying the appropriate label.

/sig windows

I was able to reproduce this.

metrics-server logs:

I do not see "context deadline" in the kubelet logs, but I did find some associated logs:

Once the pods are scheduled, the node remains at 100% CPU and I no longer see errors, but on the node (run after the pod deployment stabilized):

Adding

Also, without setting system reserved CPU, it looks like

I ran into this again during scaling; collecting logs for analysis here:

metrics-server logs:

Kubelet logs:

I believe @jingxu97 has seen high CPU issues before, though not this one specifically. I've heard there were kernel issues in the past that triggered high CPU usage.

Some investigations on our end are showing that the DiagTrack service running in the infra/pause container can consume non-trivial amounts of CPU.

#95840 tracks the DiagTrack issue.

The differences in behavior between 1.17.* and 1.18.* clusters look like they are caused by #86101 (which was NOT backported to any prior releases).

K8s version <= 1.17 behavior

The observed behavior on Windows when CPU weights were used was that, under high CPU load, pods/containers were not guaranteed their scheduling time. The good news is that system-critical services like kubelet/docker/etc. were still able to get enough CPU time for the nodes to function properly.

K8s version >= 1.18 behavior

Suggestion / possible fixes

I can work on this. I am wondering if we should do the same for the CRI (like docker) and CNI processes.

@ravisantoshgudimetla Sure, if you want to work on this, go ahead.

docker/containerd should probably have a flag that allows them to run at high priority as well, but those would likely be separate issues in the respective projects.

/reopen

@marosset: Reopened this issue.

Also related to #104283.

The Kubernetes project currently lacks enough contributors to adequately respond to all issues and PRs.

/lifecycle stale

/lifecycle rotten

/close

@k8s-triage-robot: Closing this issue.

What happened:

Windows nodes running with high (>90%) CPU usage are having calls to /stats/summary time out with "Context Deadline Exceeded".

When this happens, metrics-server cannot gather accurate CPU/memory usage for the node or the pods running on it, which results in kubectl top node / kubectl top pod not returning usage values.

What you expected to happen:

Windows nodes should be able to run with high CPU utilization without negatively impacting Kubernetes functionality

How to reproduce it (as minimally and precisely as possible):

kubectl top node

Anything else we need to know?:

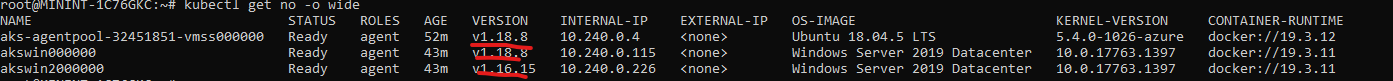

This issue appears to be easier to reproduce on 1.18.x nodes than on 1.16.x or 1.17.x nodes.

Ex:

metrics-server logs show

E1020 10:58:24.539304 1 manager.go:111] unable to fully collect metrics: unable to fully scrape metrics from source kubelet_summary:akswin000000: unable to fetch metrics from Kubelet akswin000000 (10.240.0.115): Get https://10.240.0.115:10250/stats/summary?only_cpu_and_memory=true: context deadline exceeded

Kubelet logs show

and

Environment:

Kubernetes version (kubectl version): 1.18.8
OS (cat /etc/os-release): Windows Server 2019
Kernel (uname -a):