-

Notifications

You must be signed in to change notification settings - Fork 571

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

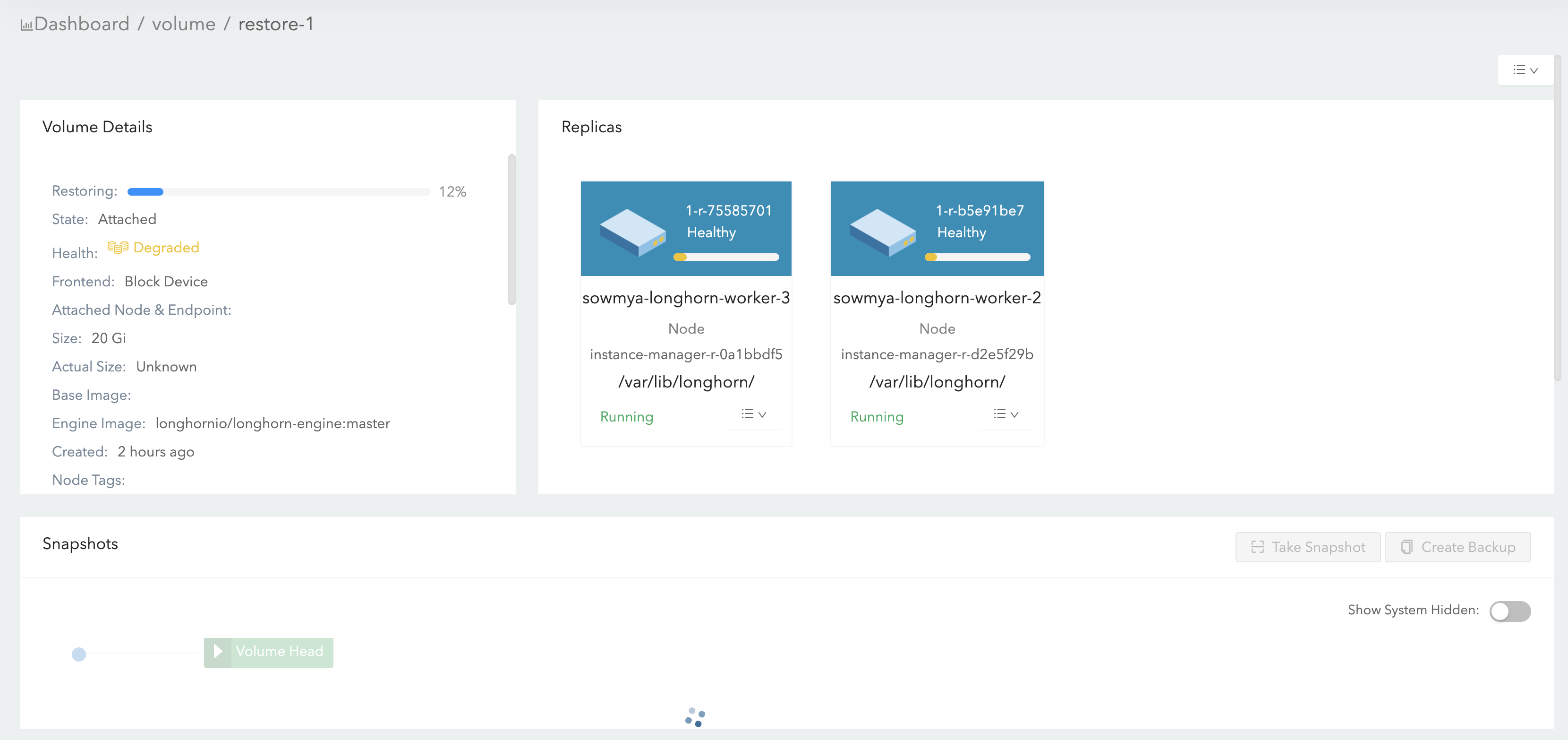

[BUG] Not able to attach a restored volume to a workload when the volume was interrupted during restoration process #1270

Comments

Possible solution: Actually I am worried what will happen if a replica is deleted and rebuilt when users keep writing data into the volume. I am thinking there is a flaw/gap between rebuild complete and starting to write data for the replica in that case. If YES, it may lead to data inconsistency. |

|

@shuo-wu replica rebuild and data writing should be fine. When we do replica rebuild there is a lock to prevent any writing until we've done snapshot. You can try it too. Regarding the replica rebuild during the restoration, we can disable the rebuild during restoration volumes for now. Though for the new replica, it should start the restoration process - not the rebuild process. The same applies to the DR volume. |

|

OK. I checked the implementation. The replica rebuild with data writing looks fine. I will disable the rebuild for this case in the manager part now. But when will we refactor/fix the rebuild? |

|

@shuo-wu can you check and give me an estimate of how long it will take to fix the rebuild during restoration? We can just let the new replica to do restoration rather than rebuild. |

|

Maybe 3~5 days(Review is not included). I think it depends on the complexity of the DR volume part. |

|

@shuo-wu OK, let's stop the rebuilding for failure during restoration or DR. Can you file a bug to track the rebuild during the restoration as an enhancement? Thanks. |

|

Verified in master - 05-04-2020 While restoring if there is any interruption, replica rebuild doesn't get triggered and volume later get attached to pod successfully. Steps for verification:

Scenario: 2

|

|

E2E test has been implemented by Shuo. |

|

|

|

Reopening this issue - Validated on the latest master - 05/11/2020 Steps:

Logs: |

|

There are actually 2 sub-cases for the node down:

I think what @sowmyav27 encountered is the 1st case. For a regular volume, the volume should be retained there if the attached node somehow gets disconnected hence the above behavior is what Longhorn currently expects. But for the restoring volume, I think Longhorn needs to directly mark the volume as Faulted. Since the restore volume won't be auto reattached again after the node is back. And the engine process doesn't know how to reuse the incomplete snapshot to continue the full restore, either. BTW, Longhorn also needs to mark DR volumes as Faulted for this node down case. [Updated]For the 2nd case, the replica on the down node should become failed and the restore can be done. After the restore complete, the restored data is correct. (Please use a big backup, e.g., 5Gi backup, to test this scenario. Otherwise, the restore can be done before detecting the node down and removing the down replica.) I will fix the 1st scenario then. |

|

Manually test 1:

Manually test 2:

|

|

@sowmyav27 Which worker node you powered down? Is it a replica one or the node that the volume attached to? |

|

@yasker I consciously powered down a node where a replica was deployed. I am not sure how to find out which node is the volume attached to. How can we check this? |

See the |

|

Logged bug #1355 to track this issue separately - #1270 (comment) as otherwise the original issue is seen fixed - #1270 (comment) |

|

test_rebuild_with_restoration failed in longhorn-tests/457 |

|

test_rebuild_with_restoration passed |

Describe the bug

Not able to attach a restored volume to a workload when the volume was interrupted during restoration process

To Reproduce

Expected behavior

User should be able to attach volume to a workload/pod successfully.

Note:

Similar issue is seen - when during restoration, one of the nodes is powered down, which causes a replica rebuild

Environment:

The text was updated successfully, but these errors were encountered: