Release 0.7.90 | That '90s Show Release


Data integration

New sources

New destinations

Full lists of available sources and destinations can be found here:

- Sources: https://docs.mage.ai/data-integrations/overview#available-sources

- Destinations: https://docs.mage.ai/data-integrations/overview#available-destinations

Improvements on existing sources and destinations

- Trino destination

  - Support the `MERGE` command in the Trino connector to handle conflicts.

  - Allow customizing `query_max_length` to adjust the batch size.

- MSSQL source

  - Fix datetime column conversion and comparison for MSSQL source.

- BigQuery destination

  - Fix BigQuery error “Deadline of 600.0s exceeded while calling target function”.

- Deltalake destination

  - Upgrade the delta library from version 0.6.4 to 0.7.0 to fix some errors.

- Allow datetime columns to be used as bookmark properties.

- When clicking the apply button in the data integration schema table, if a bookmark column is not a valid replication key for a table, or a unique column is not a valid key property for a table, don’t apply that change to that stream.
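As a rough illustration of how a maximum query length can cap batch size, the sketch below packs pre-rendered `VALUES` tuples into `INSERT` statements that stay within a character limit. The helper `build_insert_batches` and its parameters are hypothetical and only mirror the idea behind `query_max_length`; the Trino connector's actual batching logic may differ.

```python
from typing import List


def build_insert_batches(table: str, columns: List[str], value_rows: List[str],
                         query_max_length: int) -> List[str]:
    """Hypothetical sketch (not the Trino connector's code): group pre-rendered
    VALUES tuples into INSERT statements no longer than query_max_length."""
    prefix = f"INSERT INTO {table} ({', '.join(columns)}) VALUES "
    queries: List[str] = []
    batch: List[str] = []
    length = len(prefix)
    for row in value_rows:
        # Every row after the first in a batch is preceded by ", " (2 chars).
        added = len(row) + (2 if batch else 0)
        if batch and length + added > query_max_length:
            # Adding this row would exceed the limit: close the current statement.
            queries.append(prefix + ', '.join(batch))
            batch, length = [], len(prefix)
            added = len(row)
        batch.append(row)
        length += added
    if batch:
        queries.append(prefix + ', '.join(batch))
    return queries


if __name__ == '__main__':
    for query in build_insert_batches('t', ['a'], ['(1)', '(2)', '(3)'], 33):
        print(query)
```

Lowering the limit yields more, shorter statements. A single row longer than the limit still gets its own statement, because this sketch never splits a row.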

New command line tool

Mage has a newly revamped command line tool, with better formatting, clearer help commands, and more informative error messages. Kudos to community member @jlondonobo for your awesome contribution!

DBT block improvements

- Support running Redshift DBT models in Mage.

- Raise an error if there is a DBT compilation error when running DBT blocks in a pipeline.

- Fix the duplicate DBT source names error that occurred when the same source name appeared in multiple `mage_sources.yml` files in different model subfolders: Mage now uses a single sources file for all models instead of nesting them in subfolders.

Notebook improvements

- Support editing global variables in the UI: https://docs.mage.ai/production/configuring-production-settings/runtime-variable#in-mage-editor

- Support creating or editing global variables in code by editing the pipeline’s `metadata.yaml` file: https://docs.mage.ai/production/configuring-production-settings/runtime-variable#in-code

- Add a save file button when editing a file that is not in the pipeline notebook.

- Support Windows keyboard shortcuts, e.g., CTRL+S to save files.

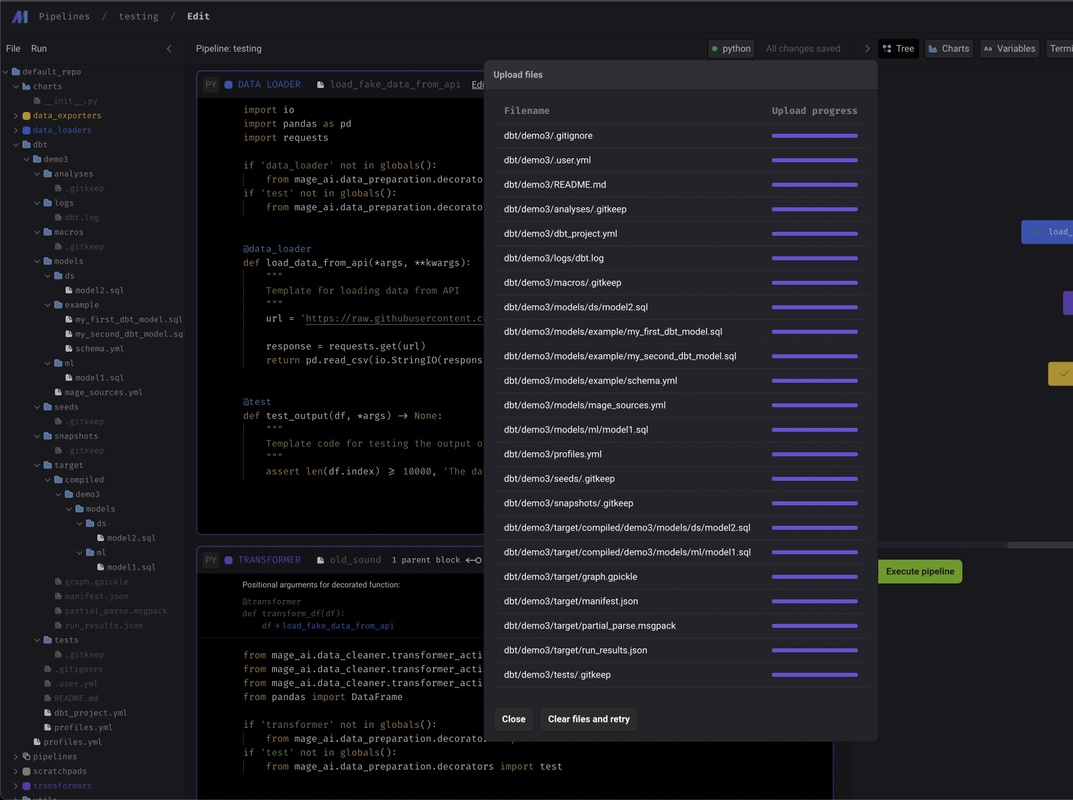

- Support uploading files through the UI.
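Once global variables are defined, block code reads them at run time. Mage makes runtime variables, which include a pipeline's global variables, available to Python block functions through `**kwargs`. The sketch below mimics that without importing Mage: `load_data` stands in for a data loader block (the `@data_loader` decorator that Mage block templates use is omitted so the code runs on its own), and the variable name `table_name` and its default value are hypothetical.

```python
from typing import Any, Dict


def load_data(*args: Any, **kwargs: Any) -> Dict[str, Any]:
    """Stand-in for a Mage data loader block.

    Global variables arrive as keyword arguments; `table_name` is a
    hypothetical variable used only for illustration.
    """
    # Fall back to a default when the variable has not been defined.
    table_name = kwargs.get('table_name', 'default_table')
    return {'table_name': table_name}


# Simulate a run with the global variable set, then one without it.
print(load_data(table_name='users'))
print(load_data())
```

Using `kwargs.get` with a default keeps the block runnable when a variable has not been defined yet; `kwargs['table_name']` would instead fail fast with a `KeyError`.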

Store logs in GCP Cloud Storage bucket

Besides storing logs on the local disk or in AWS S3, you can now store logs in GCP Cloud Storage by adding a logging config to the project’s `metadata.yaml`, as shown below:

```yaml
logging_config:
  type: gcs
  level: INFO
  destination_config:
    path_to_credentials: <path to gcp credentials json file>
    bucket: <bucket name>
    prefix: <prefix path>
```

Check out the doc for details: https://docs.mage.ai/production/observability/logging#google-cloud-storage

Other bug fixes & improvements

- SQL block improvements

  - Support writing raw SQL to customize the create table and insert commands.

  - Allow editing SQL block output table names.

- Support loading files from a directory when using `mage_ai.io.file.FileIO`. Example:

  ```python
  from mage_ai.io.file import FileIO

  # Directories whose files should be loaded
  file_directories = ['default_repo/csvs']

  FileIO().load(file_directories=file_directories)
  ```
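Conceptually, loading from a directory means finding the files under each listed directory and combining their contents into one result. The stdlib-only sketch below illustrates that idea for CSV files with a hypothetical `load_csv_directories` helper. It is not Mage's implementation; the formats `FileIO` supports and how it combines files may differ.

```python
import csv
import os
from typing import Dict, List


def load_csv_directories(file_directories: List[str]) -> List[Dict[str, str]]:
    """Hypothetical helper: read every .csv file directly inside each
    directory and return all rows as one list of dicts (one per row)."""
    rows: List[Dict[str, str]] = []
    for directory in file_directories:
        # Sort file names so the combined row order is deterministic.
        for name in sorted(os.listdir(directory)):
            path = os.path.join(directory, name)
            if not (os.path.isfile(path) and name.endswith('.csv')):
                continue  # skip subdirectories and non-CSV files
            with open(path, newline='') as f:
                rows.extend(csv.DictReader(f))
    return rows


if __name__ == '__main__':
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        for name, body in [('a.csv', 'id,name\n1,x\n'), ('b.csv', 'id,name\n2,y\n')]:
            with open(os.path.join(tmp, name), 'w', newline='') as f:
                f.write(body)
        print(load_csv_directories([tmp]))
```

This sketch assumes every file shares the same header; a real loader would also need a policy for mismatched columns.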