New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Ensure uploaded secrets are stable #24325

Conversation

Fixes: #23034 Background: Uploaded secrets are stored as bytes in our application db since cloud doesn't have a filesystem. To make db connections we stuff them into temporary files and use those files. We also are constantly watching for db detail changes so we can recompose the connection pool. Each time you call `db->pooled-connection-spec` we check if the hash of the connection spec has changed and recompose the pool if it has. Problem: These uploaded files have temporary files and we make new temp files each time we call `db->pooled-connection-spec`. So the hashes always appear different: ```clojure connection=> (= x y) true connection=> (take 2 (clojure.data/diff (connection-details->spec :postgres (:details x)) (connection-details->spec :postgres (:details y)))) ({:sslkey #object[java.io.File 0x141b0f09 "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_1388256635324085910.tmp"], :sslrootcert #object[java.io.File 0x6f443fac "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_9248342447139746747.tmp"], :sslcert #object[java.io.File 0xbb13300 "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_17076432929457451876.tmp"]} {:sslkey #object[java.io.File 0x6fbb3b7b "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_18336254363340056265.tmp"], :sslrootcert #object[java.io.File 0x6ba4c390 "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_11775804023700307206.tmp"], :sslcert #object[java.io.File 0x320184a0 "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_10098480793225259237.tmp"]}) ``` And this is quite a problem: each time we get a db connection we are making a new file, putting the contents of the secret in it, and then considering the pool stale, recomposing it, starting our query. And if you are on a dashboard, each card will kill the pool of the previously running cards. This behavior does not happen with the local-file path because the secret is actually the filepath and we load that. So the file returned is always the same. It's only for the uploaded bits that we dump into a temp file (each time). Solution: Let's memoize the temp file created by the secret. We cannot use the secret as the key though because the secret can (always?) includes a byte array: ```clojure connection-test=> (hash {:x (.getBytes "hi")}) 1771366777 connection-test=> (hash {:x (.getBytes "hi")}) -709002180 ``` So we need to come up with a stable key. I'm using `value->string` here, falling back to `(gensym)` because `value->string` doesn't always return a value due to its cond. ```clojure (defn value->string "Returns the value of the given `secret` as a String. `secret` can be a Secret model object, or a secret-map (i.e. return value from `db-details-prop->secret-map`)." {:added "0.42.0"} ^String [{:keys [value] :as _secret}] (cond (string? value) value (bytes? value) (String. ^bytes value StandardCharsets/UTF_8))) ``` Why did this bug come up recently? [pull/21604](#21604) gives some light. That changed `(hash details)` -> `(hash (connection-details->spec driver details))` with the message > also made some tweaks so the SQL JDBC driver connection pool cache is > invalidated when the (unpooled) JDBC spec returned by > connection-details->spec changes, rather than when the details map > itself changes. This means that changes to other things outside of > connection details that affect the JDBC connection parameters, for > example the report-timezone or start-of-week Settings will now properly > result in the connection pool cache being flushed So we want to continue to hash the db spec but ensure that the spec is stable.

src/metabase/models/secret.clj

Outdated

| {::memoize/args-fn (fn [[secret _driver?]] | ||

| ;; not clear if value->string could return nil due to the cond so we'll just cache on a key | ||

| ;; that is unique | ||

| [(or (value->string secret) (gensym))])}))) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Wouldn't value->string throw an exception if the byte array is not a valid UTF-8 string?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

good thought. I'll catch and use the gensym there as well

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

(although I'm assuming this code path doesn't throw a lot)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I don't think it's very important how it prints, but the javadoc says this about invalid encodings:

The behavior of this constructor when the given bytes are not valid in the given charset is unspecified. The CharsetDecoder class should be used when more control over the decoding process is required.

So for maximum safety you could use that or just hash the byte array.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

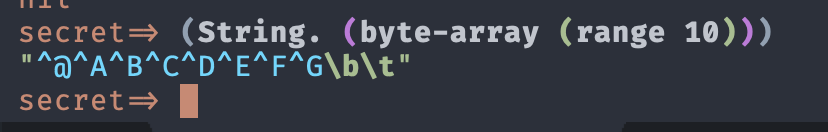

can't hash the byte array:

secret=> (hash (.getBytes "hi"))

917686548

secret=> (hash (.getBytes "hi"))

718722076unless we have a hash that works on the contents:

secret=> (java.util.Arrays/hashCode ^bytes (.getBytes "hi"))

4290

secret=> (java.util.Arrays/hashCode ^bytes (.getBytes "hi"))

4290But this leaves us pretty susceptible to hash collisions so i'm nervous about that.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

One thing I could do is just turn the byte array into a clojure vector and use that. That has the semantics we want I think: value based rather than identity, collisions only occur if and only if the sequence of bytes are equal

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I don't understand what makes you nervous about Arrays/hashCode? Do you expect that to cause more collisions than hashing the equivalent String?

I don't understand the advantage of converting the array to a vector. Is the Java hash much worse than Clojure's?

Sorry, the above is rubbish.

Yes, I think converting to a vector is the easiest solution.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think I made this confusing. When you upload a sequence of bytes as a secret, we want to put them in a file once and only once and always reuse that temporary file. We will eventually hash the whole connection spec but I don't care about collisions there. It's only given the same sequence of bytes, you should always get back the exact same temporary file that has been created.

So i'm making a function f: Secret -> file that given the same Secret always returns the exact same file. This was not the case before this. Each uploaded secret would return a new temporary file with the contents of the secret. So you would end up with 35 temporary files each with the same key in it.

An easy way to get this guarantee is to memoize the function. But the secret itself isn't a good key to memoize against because it contains a byte array.

If the memoization key is the byte-array itself, this will fail because arrays have reference identity:

user=> (= (.getBytes "hi") (.getBytes "hi"))

false(I was using the fact that they had different hashes as a shorthand for this: their notion of identity is tied to where in memory those byte arrays live rather than their contents). So each time we load the same secret from the database we get a new byte array, ask for its temp file and get a different temp file each time.

This means that memoization cannot be driven off of the byte array. But one way to gain this back quickly is just stuff those bytes into a string, because strings compare on value not identity. This is what is currently in the PR. I was banking on the assumption that Strings are just opaque sequences of bytes that will compare byte by byte, regardless of whether those bytes make sense.

But you've pointed out a good point that maybe that is flirting with undefined behavior. If we use the hash of the contents of the byte array as the memoization key with (j.u.Arrays/hashCode array), then we open ourselves up the (albeit rare) case that two distinct secret values hash to the same value. This sounds really bad. Two distinct secrets (think two ssh keys) but both would map to only one file containing a single ssh key.

An easy way to have the value semantics we want for the memoization is just to call (vec array) on the byte array and use this sequence of bytes as the memoization key. Clojure vectors compare by value not reference. So two secrets would return the same file if and only if the sequence of bytes are identical, in which case we would expect the files to be identical. This gives me the same guarantee that I was wanting from the String behavior I used initially without entwining this with charsets, utf8, etc.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, when I managed to defocus my brain from hashing, I understood this. 😄

Codecov Report

@@ Coverage Diff @@

## master #24325 +/- ##

=======================================

Coverage 65.21% 65.22%

=======================================

Files 2768 2768

Lines 84836 84846 +10

Branches 10462 10461 -1

=======================================

+ Hits 55329 55337 +8

- Misses 25191 25194 +3

+ Partials 4316 4315 -1

Flags with carried forward coverage won't be shown. Click here to find out more.

Continue to review full report at Codecov.

|

Copying comment from Github: When you upload a sequence of bytes as a secret, we want to put them in a file once and only once and always reuse that temporary file. We will eventually hash the whole connection spec but I don't care about collisions there. It's only given the same sequence of bytes, you should always get back the exact same temporary file that has been created. So i'm making a function `f: Secret -> file` that given the same Secret always returns the exact same file. This was not the case before this. Each uploaded secret would return a new temporary file with the contents of the secret each time you got its value. So you would end up with 35 temporary files each with the same key in it. An easy way to get this guarantee is to memoize the function. But the secret itself isn't a good key to memoize against because it contains a byte array. If the memoization key is the byte-array itself, this will fail because arrays have reference identity: ```clojure user=> (= (.getBytes "hi") (.getBytes "hi")) false ``` So each time we load the same secret from the database we get a new byte array, ask for its temp file and get a different temp file each time. This means that memoization cannot be driven off of the byte array. But one way to gain this back quickly is just stuff those bytes into a string, because strings compare on value not identity. This is what is currently in the PR (before this change). I was banking on the assumption that Strings are just opaque sequences of bytes that will compare byte by byte, regardless of whether those bytes make sense. But you've pointed out a good point that maybe that is flirting with undefined behavior. If we use the hash of the contents of the byte array as the memoization key with (j.u.Arrays/hashCode array), then we open ourselves up the (albeit rare) case that two distinct secret values hash to the same value. This sounds really bad. Two distinct secrets (think two ssh keys) but both would map to only one file containing a single ssh key. An easy way to have the value semantics we want for the memoization is just to call (vec array) on the byte array and use this sequence of bytes as the memoization key. Clojure vectors compare by value not reference. So two secrets would return the same file if and only if the sequence of bytes are identical, in which case we would expect the files to be identical. This gives me the same guarantee that I was wanting from the String behavior I used initially without entwining this with charsets, utf8, etc.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

LGTM

* Ensure uploaded secrets are stable Fixes: #23034 Background: Uploaded secrets are stored as bytes in our application db since cloud doesn't have a filesystem. To make db connections we stuff them into temporary files and use those files. We also are constantly watching for db detail changes so we can recompose the connection pool. Each time you call `db->pooled-connection-spec` we check if the hash of the connection spec has changed and recompose the pool if it has. Problem: These uploaded files have temporary files and we make new temp files each time we call `db->pooled-connection-spec`. So the hashes always appear different: ```clojure connection=> (= x y) true connection=> (take 2 (clojure.data/diff (connection-details->spec :postgres (:details x)) (connection-details->spec :postgres (:details y)))) ({:sslkey #object[java.io.File 0x141b0f09 "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_1388256635324085910.tmp"], :sslrootcert #object[java.io.File 0x6f443fac "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_9248342447139746747.tmp"], :sslcert #object[java.io.File 0xbb13300 "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_17076432929457451876.tmp"]} {:sslkey #object[java.io.File 0x6fbb3b7b "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_18336254363340056265.tmp"], :sslrootcert #object[java.io.File 0x6ba4c390 "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_11775804023700307206.tmp"], :sslcert #object[java.io.File 0x320184a0 "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_10098480793225259237.tmp"]}) ``` And this is quite a problem: each time we get a db connection we are making a new file, putting the contents of the secret in it, and then considering the pool stale, recomposing it, starting our query. And if you are on a dashboard, each card will kill the pool of the previously running cards. This behavior does not happen with the local-file path because the secret is actually the filepath and we load that. So the file returned is always the same. It's only for the uploaded bits that we dump into a temp file (each time). Solution: Let's memoize the temp file created by the secret. We cannot use the secret as the key though because the secret can (always?) includes a byte array: ```clojure connection-test=> (hash {:x (.getBytes "hi")}) 1771366777 connection-test=> (hash {:x (.getBytes "hi")}) -709002180 ``` So we need to come up with a stable key. I'm using `value->string` here, falling back to `(gensym)` because `value->string` doesn't always return a value due to its cond. ```clojure (defn value->string "Returns the value of the given `secret` as a String. `secret` can be a Secret model object, or a secret-map (i.e. return value from `db-details-prop->secret-map`)." {:added "0.42.0"} ^String [{:keys [value] :as _secret}] (cond (string? value) value (bytes? value) (String. ^bytes value StandardCharsets/UTF_8))) ``` Why did this bug come up recently? [pull/21604](#21604) gives some light. That changed `(hash details)` -> `(hash (connection-details->spec driver details))` with the message > also made some tweaks so the SQL JDBC driver connection pool cache is > invalidated when the (unpooled) JDBC spec returned by > connection-details->spec changes, rather than when the details map > itself changes. This means that changes to other things outside of > connection details that affect the JDBC connection parameters, for > example the report-timezone or start-of-week Settings will now properly > result in the connection pool cache being flushed So we want to continue to hash the db spec but ensure that the spec is stable. * typehint the memoized var with ^java.io.File * Switch memoization key from String to vector of bytes Copying comment from Github: When you upload a sequence of bytes as a secret, we want to put them in a file once and only once and always reuse that temporary file. We will eventually hash the whole connection spec but I don't care about collisions there. It's only given the same sequence of bytes, you should always get back the exact same temporary file that has been created. So i'm making a function `f: Secret -> file` that given the same Secret always returns the exact same file. This was not the case before this. Each uploaded secret would return a new temporary file with the contents of the secret each time you got its value. So you would end up with 35 temporary files each with the same key in it. An easy way to get this guarantee is to memoize the function. But the secret itself isn't a good key to memoize against because it contains a byte array. If the memoization key is the byte-array itself, this will fail because arrays have reference identity: ```clojure user=> (= (.getBytes "hi") (.getBytes "hi")) false ``` So each time we load the same secret from the database we get a new byte array, ask for its temp file and get a different temp file each time. This means that memoization cannot be driven off of the byte array. But one way to gain this back quickly is just stuff those bytes into a string, because strings compare on value not identity. This is what is currently in the PR (before this change). I was banking on the assumption that Strings are just opaque sequences of bytes that will compare byte by byte, regardless of whether those bytes make sense. But you've pointed out a good point that maybe that is flirting with undefined behavior. If we use the hash of the contents of the byte array as the memoization key with (j.u.Arrays/hashCode array), then we open ourselves up the (albeit rare) case that two distinct secret values hash to the same value. This sounds really bad. Two distinct secrets (think two ssh keys) but both would map to only one file containing a single ssh key. An easy way to have the value semantics we want for the memoization is just to call (vec array) on the byte array and use this sequence of bytes as the memoization key. Clojure vectors compare by value not reference. So two secrets would return the same file if and only if the sequence of bytes are identical, in which case we would expect the files to be identical. This gives me the same guarantee that I was wanting from the String behavior I used initially without entwining this with charsets, utf8, etc.

* Ensure uploaded secrets are stable Fixes: #23034 Background: Uploaded secrets are stored as bytes in our application db since cloud doesn't have a filesystem. To make db connections we stuff them into temporary files and use those files. We also are constantly watching for db detail changes so we can recompose the connection pool. Each time you call `db->pooled-connection-spec` we check if the hash of the connection spec has changed and recompose the pool if it has. Problem: These uploaded files have temporary files and we make new temp files each time we call `db->pooled-connection-spec`. So the hashes always appear different: ```clojure connection=> (= x y) true connection=> (take 2 (clojure.data/diff (connection-details->spec :postgres (:details x)) (connection-details->spec :postgres (:details y)))) ({:sslkey #object[java.io.File 0x141b0f09 "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_1388256635324085910.tmp"], :sslrootcert #object[java.io.File 0x6f443fac "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_9248342447139746747.tmp"], :sslcert #object[java.io.File 0xbb13300 "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_17076432929457451876.tmp"]} {:sslkey #object[java.io.File 0x6fbb3b7b "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_18336254363340056265.tmp"], :sslrootcert #object[java.io.File 0x6ba4c390 "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_11775804023700307206.tmp"], :sslcert #object[java.io.File 0x320184a0 "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_10098480793225259237.tmp"]}) ``` And this is quite a problem: each time we get a db connection we are making a new file, putting the contents of the secret in it, and then considering the pool stale, recomposing it, starting our query. And if you are on a dashboard, each card will kill the pool of the previously running cards. This behavior does not happen with the local-file path because the secret is actually the filepath and we load that. So the file returned is always the same. It's only for the uploaded bits that we dump into a temp file (each time). Solution: Let's memoize the temp file created by the secret. We cannot use the secret as the key though because the secret can (always?) includes a byte array: ```clojure connection-test=> (hash {:x (.getBytes "hi")}) 1771366777 connection-test=> (hash {:x (.getBytes "hi")}) -709002180 ``` So we need to come up with a stable key. I'm using `value->string` here, falling back to `(gensym)` because `value->string` doesn't always return a value due to its cond. ```clojure (defn value->string "Returns the value of the given `secret` as a String. `secret` can be a Secret model object, or a secret-map (i.e. return value from `db-details-prop->secret-map`)." {:added "0.42.0"} ^String [{:keys [value] :as _secret}] (cond (string? value) value (bytes? value) (String. ^bytes value StandardCharsets/UTF_8))) ``` Why did this bug come up recently? [pull/21604](#21604) gives some light. That changed `(hash details)` -> `(hash (connection-details->spec driver details))` with the message > also made some tweaks so the SQL JDBC driver connection pool cache is > invalidated when the (unpooled) JDBC spec returned by > connection-details->spec changes, rather than when the details map > itself changes. This means that changes to other things outside of > connection details that affect the JDBC connection parameters, for > example the report-timezone or start-of-week Settings will now properly > result in the connection pool cache being flushed So we want to continue to hash the db spec but ensure that the spec is stable. * typehint the memoized var with ^java.io.File * Switch memoization key from String to vector of bytes Copying comment from Github: When you upload a sequence of bytes as a secret, we want to put them in a file once and only once and always reuse that temporary file. We will eventually hash the whole connection spec but I don't care about collisions there. It's only given the same sequence of bytes, you should always get back the exact same temporary file that has been created. So i'm making a function `f: Secret -> file` that given the same Secret always returns the exact same file. This was not the case before this. Each uploaded secret would return a new temporary file with the contents of the secret each time you got its value. So you would end up with 35 temporary files each with the same key in it. An easy way to get this guarantee is to memoize the function. But the secret itself isn't a good key to memoize against because it contains a byte array. If the memoization key is the byte-array itself, this will fail because arrays have reference identity: ```clojure user=> (= (.getBytes "hi") (.getBytes "hi")) false ``` So each time we load the same secret from the database we get a new byte array, ask for its temp file and get a different temp file each time. This means that memoization cannot be driven off of the byte array. But one way to gain this back quickly is just stuff those bytes into a string, because strings compare on value not identity. This is what is currently in the PR (before this change). I was banking on the assumption that Strings are just opaque sequences of bytes that will compare byte by byte, regardless of whether those bytes make sense. But you've pointed out a good point that maybe that is flirting with undefined behavior. If we use the hash of the contents of the byte array as the memoization key with (j.u.Arrays/hashCode array), then we open ourselves up the (albeit rare) case that two distinct secret values hash to the same value. This sounds really bad. Two distinct secrets (think two ssh keys) but both would map to only one file containing a single ssh key. An easy way to have the value semantics we want for the memoization is just to call (vec array) on the byte array and use this sequence of bytes as the memoization key. Clojure vectors compare by value not reference. So two secrets would return the same file if and only if the sequence of bytes are identical, in which case we would expect the files to be identical. This gives me the same guarantee that I was wanting from the String behavior I used initially without entwining this with charsets, utf8, etc. Co-authored-by: dpsutton <dan@dpsutton.com>

* Ensure uploaded secrets are stable Fixes: #23034 Background: Uploaded secrets are stored as bytes in our application db since cloud doesn't have a filesystem. To make db connections we stuff them into temporary files and use those files. We also are constantly watching for db detail changes so we can recompose the connection pool. Each time you call `db->pooled-connection-spec` we check if the hash of the connection spec has changed and recompose the pool if it has. Problem: These uploaded files have temporary files and we make new temp files each time we call `db->pooled-connection-spec`. So the hashes always appear different: ```clojure connection=> (= x y) true connection=> (take 2 (clojure.data/diff (connection-details->spec :postgres (:details x)) (connection-details->spec :postgres (:details y)))) ({:sslkey #object[java.io.File 0x141b0f09 "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_1388256635324085910.tmp"], :sslrootcert #object[java.io.File 0x6f443fac "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_9248342447139746747.tmp"], :sslcert #object[java.io.File 0xbb13300 "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_17076432929457451876.tmp"]} {:sslkey #object[java.io.File 0x6fbb3b7b "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_18336254363340056265.tmp"], :sslrootcert #object[java.io.File 0x6ba4c390 "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_11775804023700307206.tmp"], :sslcert #object[java.io.File 0x320184a0 "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_10098480793225259237.tmp"]}) ``` And this is quite a problem: each time we get a db connection we are making a new file, putting the contents of the secret in it, and then considering the pool stale, recomposing it, starting our query. And if you are on a dashboard, each card will kill the pool of the previously running cards. This behavior does not happen with the local-file path because the secret is actually the filepath and we load that. So the file returned is always the same. It's only for the uploaded bits that we dump into a temp file (each time). Solution: Let's memoize the temp file created by the secret. We cannot use the secret as the key though because the secret can (always?) includes a byte array: ```clojure connection-test=> (hash {:x (.getBytes "hi")}) 1771366777 connection-test=> (hash {:x (.getBytes "hi")}) -709002180 ``` So we need to come up with a stable key. I'm using `value->string` here, falling back to `(gensym)` because `value->string` doesn't always return a value due to its cond. ```clojure (defn value->string "Returns the value of the given `secret` as a String. `secret` can be a Secret model object, or a secret-map (i.e. return value from `db-details-prop->secret-map`)." {:added "0.42.0"} ^String [{:keys [value] :as _secret}] (cond (string? value) value (bytes? value) (String. ^bytes value StandardCharsets/UTF_8))) ``` Why did this bug come up recently? [pull/21604](#21604) gives some light. That changed `(hash details)` -> `(hash (connection-details->spec driver details))` with the message > also made some tweaks so the SQL JDBC driver connection pool cache is > invalidated when the (unpooled) JDBC spec returned by > connection-details->spec changes, rather than when the details map > itself changes. This means that changes to other things outside of > connection details that affect the JDBC connection parameters, for > example the report-timezone or start-of-week Settings will now properly > result in the connection pool cache being flushed So we want to continue to hash the db spec but ensure that the spec is stable. * typehint the memoized var with ^java.io.File * Switch memoization key from String to vector of bytes Copying comment from Github: When you upload a sequence of bytes as a secret, we want to put them in a file once and only once and always reuse that temporary file. We will eventually hash the whole connection spec but I don't care about collisions there. It's only given the same sequence of bytes, you should always get back the exact same temporary file that has been created. So i'm making a function `f: Secret -> file` that given the same Secret always returns the exact same file. This was not the case before this. Each uploaded secret would return a new temporary file with the contents of the secret each time you got its value. So you would end up with 35 temporary files each with the same key in it. An easy way to get this guarantee is to memoize the function. But the secret itself isn't a good key to memoize against because it contains a byte array. If the memoization key is the byte-array itself, this will fail because arrays have reference identity: ```clojure user=> (= (.getBytes "hi") (.getBytes "hi")) false ``` So each time we load the same secret from the database we get a new byte array, ask for its temp file and get a different temp file each time. This means that memoization cannot be driven off of the byte array. But one way to gain this back quickly is just stuff those bytes into a string, because strings compare on value not identity. This is what is currently in the PR (before this change). I was banking on the assumption that Strings are just opaque sequences of bytes that will compare byte by byte, regardless of whether those bytes make sense. But you've pointed out a good point that maybe that is flirting with undefined behavior. If we use the hash of the contents of the byte array as the memoization key with (j.u.Arrays/hashCode array), then we open ourselves up the (albeit rare) case that two distinct secret values hash to the same value. This sounds really bad. Two distinct secrets (think two ssh keys) but both would map to only one file containing a single ssh key. An easy way to have the value semantics we want for the memoization is just to call (vec array) on the byte array and use this sequence of bytes as the memoization key. Clojure vectors compare by value not reference. So two secrets would return the same file if and only if the sequence of bytes are identical, in which case we would expect the files to be identical. This gives me the same guarantee that I was wanting from the String behavior I used initially without entwining this with charsets, utf8, etc.

* Ensure uploaded secrets are stable (#24325) * Ensure uploaded secrets are stable Fixes: #23034 Background: Uploaded secrets are stored as bytes in our application db since cloud doesn't have a filesystem. To make db connections we stuff them into temporary files and use those files. We also are constantly watching for db detail changes so we can recompose the connection pool. Each time you call `db->pooled-connection-spec` we check if the hash of the connection spec has changed and recompose the pool if it has. Problem: These uploaded files have temporary files and we make new temp files each time we call `db->pooled-connection-spec`. So the hashes always appear different: ```clojure connection=> (= x y) true connection=> (take 2 (clojure.data/diff (connection-details->spec :postgres (:details x)) (connection-details->spec :postgres (:details y)))) ({:sslkey #object[java.io.File 0x141b0f09 "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_1388256635324085910.tmp"], :sslrootcert #object[java.io.File 0x6f443fac "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_9248342447139746747.tmp"], :sslcert #object[java.io.File 0xbb13300 "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_17076432929457451876.tmp"]} {:sslkey #object[java.io.File 0x6fbb3b7b "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_18336254363340056265.tmp"], :sslrootcert #object[java.io.File 0x6ba4c390 "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_11775804023700307206.tmp"], :sslcert #object[java.io.File 0x320184a0 "/var/folders/1d/3ns5s1gs7xjgb09bh1yb6wpc0000gn/T/metabase-secret_10098480793225259237.tmp"]}) ``` And this is quite a problem: each time we get a db connection we are making a new file, putting the contents of the secret in it, and then considering the pool stale, recomposing it, starting our query. And if you are on a dashboard, each card will kill the pool of the previously running cards. This behavior does not happen with the local-file path because the secret is actually the filepath and we load that. So the file returned is always the same. It's only for the uploaded bits that we dump into a temp file (each time). Solution: Let's memoize the temp file created by the secret. We cannot use the secret as the key though because the secret can (always?) includes a byte array: ```clojure connection-test=> (hash {:x (.getBytes "hi")}) 1771366777 connection-test=> (hash {:x (.getBytes "hi")}) -709002180 ``` So we need to come up with a stable key. I'm using `value->string` here, falling back to `(gensym)` because `value->string` doesn't always return a value due to its cond. ```clojure (defn value->string "Returns the value of the given `secret` as a String. `secret` can be a Secret model object, or a secret-map (i.e. return value from `db-details-prop->secret-map`)." {:added "0.42.0"} ^String [{:keys [value] :as _secret}] (cond (string? value) value (bytes? value) (String. ^bytes value StandardCharsets/UTF_8))) ``` Why did this bug come up recently? [pull/21604](#21604) gives some light. That changed `(hash details)` -> `(hash (connection-details->spec driver details))` with the message > also made some tweaks so the SQL JDBC driver connection pool cache is > invalidated when the (unpooled) JDBC spec returned by > connection-details->spec changes, rather than when the details map > itself changes. This means that changes to other things outside of > connection details that affect the JDBC connection parameters, for > example the report-timezone or start-of-week Settings will now properly > result in the connection pool cache being flushed So we want to continue to hash the db spec but ensure that the spec is stable. * typehint the memoized var with ^java.io.File * Switch memoization key from String to vector of bytes Copying comment from Github: When you upload a sequence of bytes as a secret, we want to put them in a file once and only once and always reuse that temporary file. We will eventually hash the whole connection spec but I don't care about collisions there. It's only given the same sequence of bytes, you should always get back the exact same temporary file that has been created. So i'm making a function `f: Secret -> file` that given the same Secret always returns the exact same file. This was not the case before this. Each uploaded secret would return a new temporary file with the contents of the secret each time you got its value. So you would end up with 35 temporary files each with the same key in it. An easy way to get this guarantee is to memoize the function. But the secret itself isn't a good key to memoize against because it contains a byte array. If the memoization key is the byte-array itself, this will fail because arrays have reference identity: ```clojure user=> (= (.getBytes "hi") (.getBytes "hi")) false ``` So each time we load the same secret from the database we get a new byte array, ask for its temp file and get a different temp file each time. This means that memoization cannot be driven off of the byte array. But one way to gain this back quickly is just stuff those bytes into a string, because strings compare on value not identity. This is what is currently in the PR (before this change). I was banking on the assumption that Strings are just opaque sequences of bytes that will compare byte by byte, regardless of whether those bytes make sense. But you've pointed out a good point that maybe that is flirting with undefined behavior. If we use the hash of the contents of the byte array as the memoization key with (j.u.Arrays/hashCode array), then we open ourselves up the (albeit rare) case that two distinct secret values hash to the same value. This sounds really bad. Two distinct secrets (think two ssh keys) but both would map to only one file containing a single ssh key. An easy way to have the value semantics we want for the memoization is just to call (vec array) on the byte array and use this sequence of bytes as the memoization key. Clojure vectors compare by value not reference. So two secrets would return the same file if and only if the sequence of bytes are identical, in which case we would expect the files to be identical. This gives me the same guarantee that I was wanting from the String behavior I used initially without entwining this with charsets, utf8, etc. * empty commit to trigger CI

Fixes: #23034

Background:

Uploaded secrets are stored as bytes in our application db since cloud

doesn't have a filesystem. To make db connections we stuff them into

temporary files and use those files.

We also are constantly watching for db detail changes so we can

recompose the connection pool. Each time you call

db->pooled-connection-specwe check if the hash of the connection spechas changed and recompose the pool if it has.

Problem:

These uploaded files have temporary files and we make new temp files

each time we call

db->pooled-connection-spec. So the hashes alwaysappear different:

And this is quite a problem: each time we get a db connection we are

making a new file, putting the contents of the secret in it, and then

considering the pool stale, recomposing it, starting our query. And if

you are on a dashboard, each card will kill the pool of the previously

running cards.

This behavior does not happen with the local-file path because the

secret is actually the filepath and we load that. So the file returned

is always the same. It's only for the uploaded bits that we dump into a

temp file (each time).

Solution:

Let's memoize the temp file created by the secret. We cannot use the

secret as the key though because the secret can (always?) includes a

byte array:

So we need to come up with a stable key. I'm using

value->stringhere,falling back to

(gensym)becausevalue->stringdoesn't always returna value due to its cond.

Why did this bug come up recently?

pull/21604 gives some

light. That changed

(hash details)->(hash (connection-details->spec driver details))with the message

So we want to continue to hash the db spec but ensure that the spec is

stable.

How to test

our dev-scripts repo has a great postgres ssl setup.

Just run

This will generate everything you need so you can add a db connection (note the port is 5433 so it doesn't step on any other locally running postgres). You'll need to create a db and a table inside of it so you have something to connect to and then use the following

verify-cadev-scripts/run_postgres_ssl/server.crtdev-scripts/run_postgres_ssl/privkey.pemdev-scripts/run_postgres_ssl/server.keyabcd(this is in the script :openssl req -new -text -passout pass:abcd -subj /CN=localhost -out server.req -keyout privkey.pemBefore this change you would see logs like the following:

Those warnings of details changing should be absent after this change.