New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

JUnit report sent to wrong build #40

Comments

|

Hi @jawnsy This is sadly a problem of GitHub, which you can find more about here: Hoping them providing a fix for that soon |

|

Hi there- unfortunately, this issue makes this Action pretty unusable for us since the check run output is the only place our test suite failures are visible and when they get added to some other "random" Workflow, they're impossible to find. I waded through some of the related issues and there seems to be a potential workaround:

If I understand correctly, this would add all of your annotations to the actual Job where I'm using the step (our tests), instead of as a new check that is getting misplaced in some other Workflow. Besides being a workaround for this issue, this is actually exactly where I was trying to find these annotations to begin with. I think it's a much more useful place to put them. Even if the check was showing up under the correct Workflow, I think users would still be looking for failures in the existing, failed "Test Job" check and not some separate "JUnit Reporter" check (I know we could configure the name to be more obvious, but still). Am I missing anything? Would you consider implementing such functionality? |

|

@pbrisbin thank you very much for the added notes. It sure sounds like an opportunity to use those APIs and make it so it will only update the current run. Would need to look into it. |

|

Hmm, I can't say that I do. A lot of Actions add annotations to the Job's check where they're run, but they do it by outputting messages with a problem-matcher attached, not by updating the Check. I suppose that's another alternative you could consider. Looking at the docs a bit more closely, I think it would look something like, const ref = head_sha

const check_name = github.context.job // assumes check names are job.<job-id>

const filter = 'latest'

const checks = await octokit.rest.checks.listForRef({

...github.context.repo

ref,

check_name,

filter

});

// assumes a check for our job exists if we're running, probably want some error-handling

const check_run_id = checks[0].run_id

// as per docs: "Each time you update the check run, annotations are

// appended to the list of annotations that already exist for the check run."

oktokit.rest.checks.update({ owner, repo, check_run_id, ... })Oh, and I noticed you're currently only sending your first 50 annotations. I suppose it's reasonable to expect a test suite in a to never have more than 50 test failures in a given run, but you could send everything if you call this

|

|

@pbrisbin there's a version coming up using the outlined APIs. Sadly those lead to a different result than anticipated, and due to the check still being running at the time of updating the result, it won't keep the failure state for example. Just for reference, there is still no API to create a check in a specific suite / workflow sadly: https://github.community/t/specify-check-suite-when-creating-a-checkrun/118380/9 |

|

Awesome!

Let me make sure I understand... So, if I use this in a Am I missing something? |

|

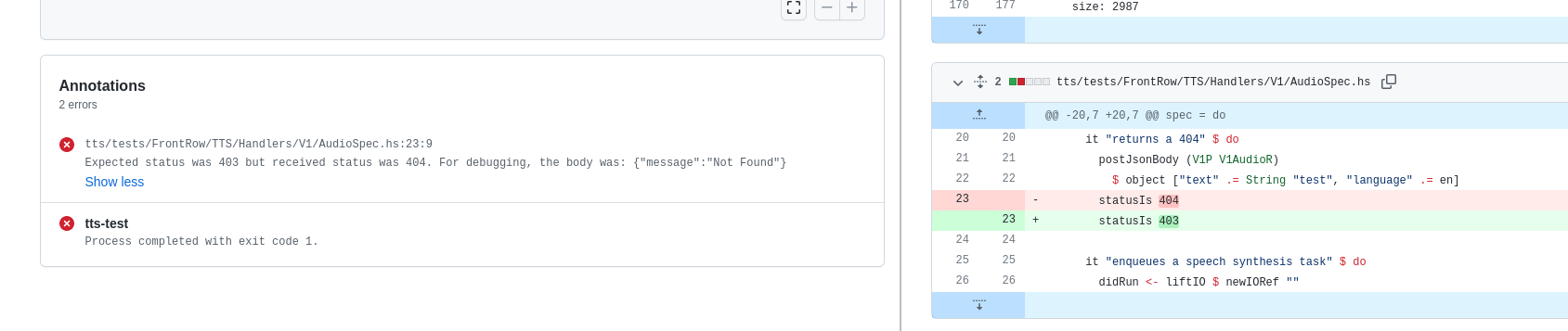

@pbrisbin you are actually right, that particular thing may not be an issue in this case. One thing seems to be a problem though. The way the annotations are rendered if we use the vs. https://github.com/mikepenz/action-junit-report/runs/4279043601 |

|

Wow, well that's super annoying. I wonder why that is 🤔 |

|

@pbrisbin sadly this wasn't it, but this makes the build to fail, while it's still ongoing, breaking a few things :D |

|

Ha, not unexpected in hindsight. I would say that when doing the update path you don't want |

|

I wonder if the test's conclusion/output is somehow taking over as the "important one". So your annotations remain, but they get subdued in the UI once the test completes? Either way, totally annoying. And sorry if this ends up a dead-end. |

|

@pbrisbin I feel somehow that this is potentially an issue with the github API. the documentation itself would not indicate any behavior difference as far as I found briefly: https://docs.github.com/en/rest/reference/checks#update-a-check-run Thank you for also looking into it. Really appreciated! |

|

Yeah I agree. Looking at the API docs between Create and Update the POST bodies are identical, you would expect the result to be the same too. |

|

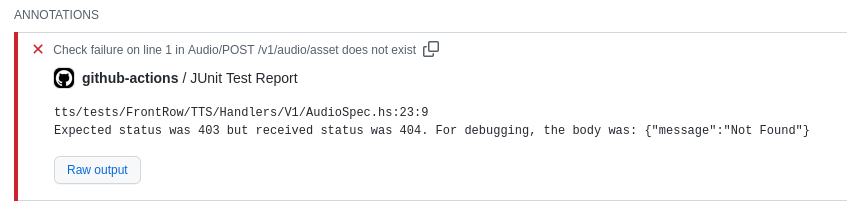

There seems to be more functionality that doesn't work for the update vs create: I don't see the annotations appear in the diff. Unfortunately, I think not seeing the annotations in-line is a bridge too far for us to use this style. I also notice here that the Process completed with exit code 1. annotation actually includes the Job/Check name (tts-test), but the others do not -- as if they're attached to the Workflow but not any one Job/Check. I wonder if that's somehow related. |

|

@pbrisbin that's unfortunate :/ I haven't had the chance to check if there have been other reports on the |

|

Oh, that may actually be our fault. I need to investigate where file/line information is meant to live in a JUnit XML. Our formatter seems to just have it as part of the failure message, but that's probably not right. Notice the "Line 1 in {spec description}", even though |

|

@pbrisbin sadly it seems line numbers are not consistent between different output formats. There surely is some way to expand support for handling it. It should already handle retrieving file numbers from file paths as you show in your screenshot: maybe if you have a sample output we. can use it to expand test cases and see why it does not work for your case |

|

Oh, that's convenient! Here is an example of what it is right now: golden.xml But we author this formatter, so I can pretty easily add |

|

I didn't realize. In this case I'd propose to add the And thanks for providing the |

|

So you are saying that if we added

And there isn't any one way to do that is more common or standardized than any other? |

|

I believe using the In cases neither is available (line via the file, or via the line attribute) the action tries to do some more searching to find the the line number in the stacktrace: https://github.com/mikepenz/action-junit-report/blob/main/src/testParser.ts#L53-L55 I'll check again and see why it wouldn't match the description in your case as it should be the stacktrace. Did some search but the xsd for junit output for example does not specify |

|

@mikepenz I am looking for a way to solve this issue. |

|

@koyama-yoshihito as of this discussion here, the issue is still not fixed by GitHub: community/community#14891 |

|

@mikepenz |

|

Could this feature help with the issue: https://github.blog/2022-05-09-supercharging-github-actions-with-job-summaries? |

|

@laughedelic thank you for the link. The newest version of this action does publish a job summary. While it seems to be a partial solution I think it's not fully covering the whole element. Sample display: Sadly this would loose the great feature of seeing the test results within the reported checks: We could add a flag to offer disabling the report being sent and only having a summary - for people who'd prefer that. |

|

Glad to know that it's already in use! 👏 I think the summary in the checks list is a very nice feature, but unfortunately, when there are a lot of tests ran in parallel, the number of check summaries is doubled, so it would be good to either be able to disable it, or consolidate all summaries by some tag (as suggested in #195). |

|

@laughedelic without offering a configuration where multiple imports can be done at once, I feel that the current summary APi exposed by the But given a sample summary of this: https://github.com/mikepenz/action-junit-report/actions/runs/2717236074#summary-7464569272 it seems like it's not too bad. What also is possible already today is to enable the |

|

Btw. regarding multiple imports as one. Please see my comment here: #195 (comment) with the current (in-progress) PR |

Builds were failing because `junit` was not installed. Use a dedicated action plugin to ensure it is available and also publish the test report on PR's for convenient viewing. For now we don't create an actual PR check, only annotations, because the check is currently sent to the wrong build. See mikepenz/action-junit-report#40.

|

As a workaround you could use the same approach as other actions (i.e. https://github.com/EnricoMi/publish-unit-test-result-action) and add in the summary a link to the check run. |

|

Hi @mikepenz |

|

hi @AkhremDmitry as far as I am aware no new API was introduced to fix the root problem of this ticket. However one thing I use in different projects these days is to only have annotations and the job summary: And having the main build running the tests fail, so it would not cause such wrong association. |

…heck run A workaround for mikepenz/action-junit-report#40

…heck run (#3112) A workaround for mikepenz/action-junit-report#40

workflowiin develop-branchin automaattisten testien yhteydessä * VHAR-8429 * mikepenz/action-junit-report#40 (comment)

Dear maintainer,

Thanks so much for creating this excellent plugin and sharing it for others to use!

I have multiple workflows defined, and it seems that the check is added to the first workflow, rather than the one that actually ran the test suite. Is there some way to associate the report with the correct workflow definition? Ideally I'd like the report to be below the test suite (e.g. job that runs tests, publishes report, should have the report appended as a check below it or nearby)

The text was updated successfully, but these errors were encountered: