File descriptors and internal connection leak #15022

Comments

@lihaohua66 this doesn't make sense. You should provide us with some profiling data from the server. There should be no leak at all unless you are making some calls. Please run

@harshavardhana btw, do you know what this kind of open file means?

I have had the same cluster running for the last 6 days with nothing going on. Are you running replication etc. on this setup, or is it a single setup? Are you using the operator? @lihaohua66

There is only one node in the profile. Is this a single-disk setup? @lihaohua66

It looks like you have client API calls hitting the server.

We have 6 nodes in the cluster, and each node has 24 disks. Let me send you the full profile.

You need to send everything, not just one node.

Yes, we could have some clients accessing this MinIO, but the workload should be very low, and it should not have so many open file descriptors.

It has, because there are 300+ listing requests @lihaohua66

Okay, from what it looks like, you are facing this. You need to upgrade your setup @lihaohua66

Use the latest release.

@harshavardhana thank you for your help. Let me try upgrading.

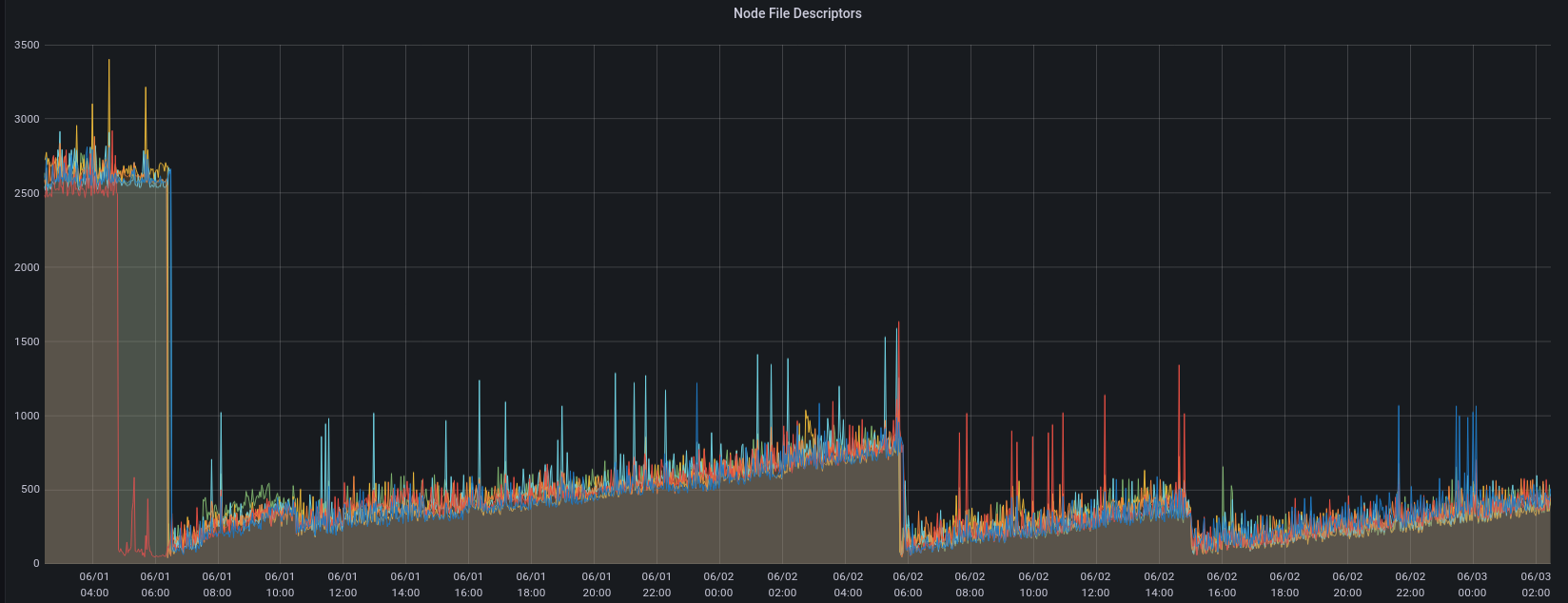

@harshavardhana we have upgraded to 20220602021104.0.0, but we are still observing an increasing number of file descriptors. Could you help take another look? We restarted the whole cluster several times, so you can see a few cliffs here.

NOTE

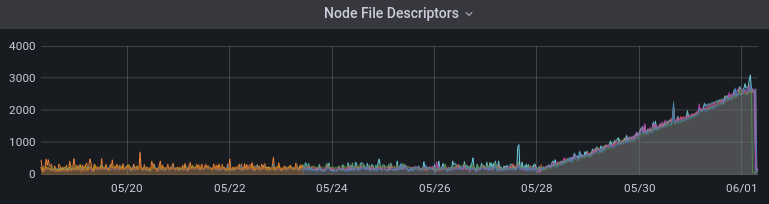

After upgrading to RELEASE.2022-05-19T18-20-59Z, we encountered the following issues in both the UI and clients after running the cluster for a few days (the cluster has a very low workload):

We could observe the number of open file descriptors steadily increasing over the last few days.
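A steady increase like this can be quantified from periodic samples of the open descriptor count. The following is a minimal sketch, not something taken from MinIO: the function name `growth_per_hour` and the sample format are hypothetical, and it assumes that any drop in the count marks a process restart, so each stretch between restarts is measured separately.

```python
def growth_per_hour(samples):
    """Estimate descriptor growth (fds/hour) for each run between restarts.

    samples: list of (unix_seconds, fd_count) tuples, sorted by time.
    Assumption: a decrease in fd_count means the process was restarted.
    """
    rates = []
    start = 0
    for i in range(1, len(samples) + 1):
        at_end = i == len(samples)
        # Close the current segment at the end of the data or at a drop.
        if at_end or samples[i][1] < samples[i - 1][1]:
            segment = samples[start:i]
            if len(segment) >= 2:
                elapsed = segment[-1][0] - segment[0][0]
                if elapsed > 0:
                    delta = segment[-1][1] - segment[0][1]
                    rates.append(delta / (elapsed / 3600))
            start = i
    return rates
```

A consistently positive rate in every segment, even under a near-idle workload, would point to descriptors not being released rather than to a one-off burst of requests.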

We could also see thousands of open file descriptors, as below:

Expected Behavior

The cluster should recycle file descriptors/connections and should not stop working.
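To check whether descriptors are actually being recycled, it can help to break the open descriptors down by kind (sockets versus regular files). The sketch below is illustrative only: `classify_fds` is a hypothetical helper, and it assumes a Linux host where `/proc/<pid>/fd` contains one symlink per open descriptor.

```python
import os
from collections import Counter


def classify_fds(fd_dir):
    """Count open descriptors by kind from a /proc/<pid>/fd style directory."""
    counts = Counter()
    for name in os.listdir(fd_dir):
        try:
            target = os.readlink(os.path.join(fd_dir, name))
        except OSError:
            # The descriptor was closed between listdir() and readlink().
            continue
        if target.startswith("socket:"):
            counts["socket"] += 1
        elif target.startswith("pipe:"):
            counts["pipe"] += 1
        elif target.startswith("anon_inode:"):
            counts["anon_inode"] += 1
        else:
            # Anything else is treated as a path on disk (assumption).
            counts["file"] += 1
    return counts


if __name__ == "__main__":
    # Inspect the current process; substitute the server's PID as needed.
    print(classify_fds(f"/proc/{os.getpid()}/fd"))
```

If the `socket` count keeps growing while `file` stays flat, the growth is in connections rather than in files held open on the drives.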

Current Behavior

The cluster could not handle client requests. Performance is degraded.

Possible Solution

Steps to Reproduce (for bugs)

Context

Regression

Your Environment

- `minio --version`: RELEASE.2022-05-19T18-20-59Z
- `uname -a`: Linux 5.4.0-54-generic #60~18.04.1-Ubuntu SMP Fri Nov 6 17:25:16 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux