File descriptors and internal connection leak(reopen) #15033

Comments

We need to see profiling output here, @lihaohua66.

The profiles for 10 minutes are here:

I have no idea what this version is; we do not publish such versions, @lihaohua66.

@harshavardhana it is RELEASE.2021-12-09T06-19-41Z.

@lihaohua66 you have 300+ listing calls going on all servers. This is why there is a connection build-up, and this is normal. Are you sure you have the right application workload here? You can't really be doing 300 × 6 = 1800 concurrent List operations on a tiny cluster like this.

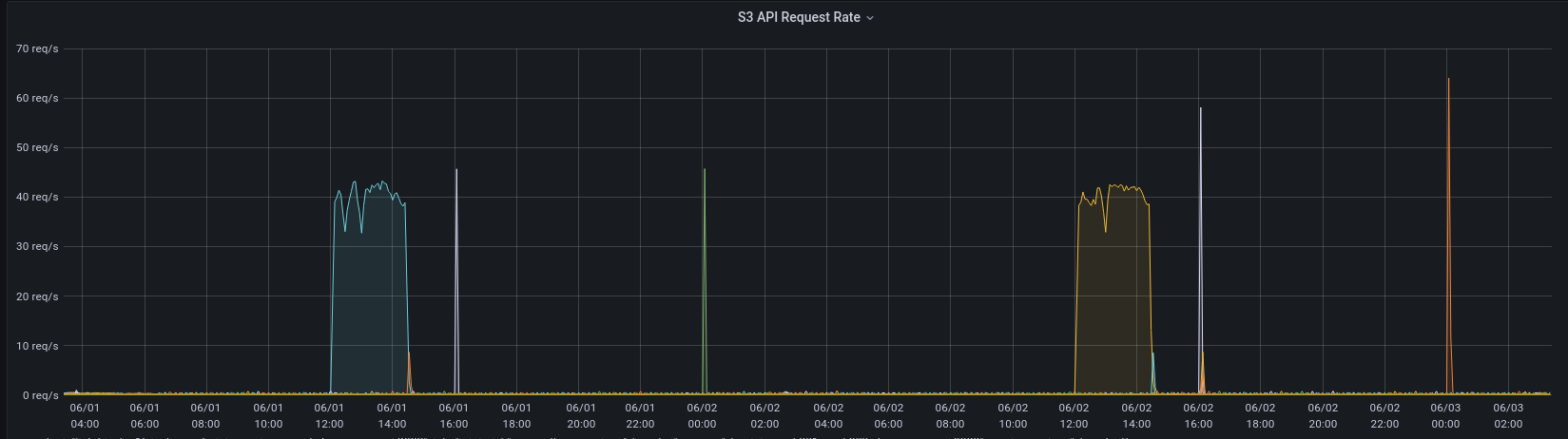

@harshavardhana from the metrics, we did not see many list operations in the cluster.

Yes, but why? @lihaohua66 what is the purpose of doing 1800 list calls concurrently?

I don't think we really perform 1800 list calls concurrently. Is it possible that the list operation leads to a leak?

For the open file descriptors, most of the entries are

git diff

diff --git a/cmd/metacache-entries.go b/cmd/metacache-entries.go
index 41c3da3b1..3bad979cf 100644
--- a/cmd/metacache-entries.go
+++ b/cmd/metacache-entries.go
@@ -695,8 +695,12 @@ func mergeEntryChannels(ctx context.Context, in []chan metaCacheEntry, out chan<
}
}
if best.name > last {
- out <- *best
- last = best.name
+ select {
+ case <-ctxDone:
+ return ctx.Err()
+ case out <- *best:
+ last = best.name
+ }
} else if serverDebugLog {
console.Debugln("mergeEntryChannels: discarding duplicate", best.name, "<=", last)
}
diff --git a/cmd/metacache-server-pool.go b/cmd/metacache-server-pool.go
index 82998f86f..96c213073 100644
--- a/cmd/metacache-server-pool.go
+++ b/cmd/metacache-server-pool.go
@@ -554,8 +554,8 @@ func (z *erasureServerPools) listMerged(ctx context.Context, o listPathOptions,
for _, pool := range z.serverPools {
for _, set := range pool.sets {
wg.Add(1)
- results := make(chan metaCacheEntry, 100)
- inputs = append(inputs, results)
+ innerResults := make(chan metaCacheEntry, 100)
+ inputs = append(inputs, innerResults)
go func(i int, set *erasureObjects) {
defer wg.Done()
err := set.listPath(listCtx, o, results)

Can you apply this fix and test again? @lihaohua66 how long does it take to reproduce the problem?

Actually, this is the bug: the context is canceled, but the send of the result hangs at this point, and this is what leaks connections.

@harshavardhana thank you for the quick action. We use the released deb package to deploy our MinIO. Do you know of any easy way to apply this fix?

The file descriptor count increases gradually, so it usually takes 5~10 hours to see an obvious increase in the dashboard.

You will have to compile it. I can compile one for you to deploy so you can test it out.

That would be great, thank you.

You can download the binary from

Sure, let me test it.

Please do not deploy this yet; there seems to be some problem. Let me make a more thorough fix, @lihaohua66.

Okay, updated the binary once again, same location.

Closing this as fixed in #15034.

Cool, let me test with the latest again.

NOTE

After upgrading to RELEASE.2022-05-19T18-20-59Z, we encountered the following issues in both the UI and clients after running the cluster for a few days (the cluster has a very low workload):

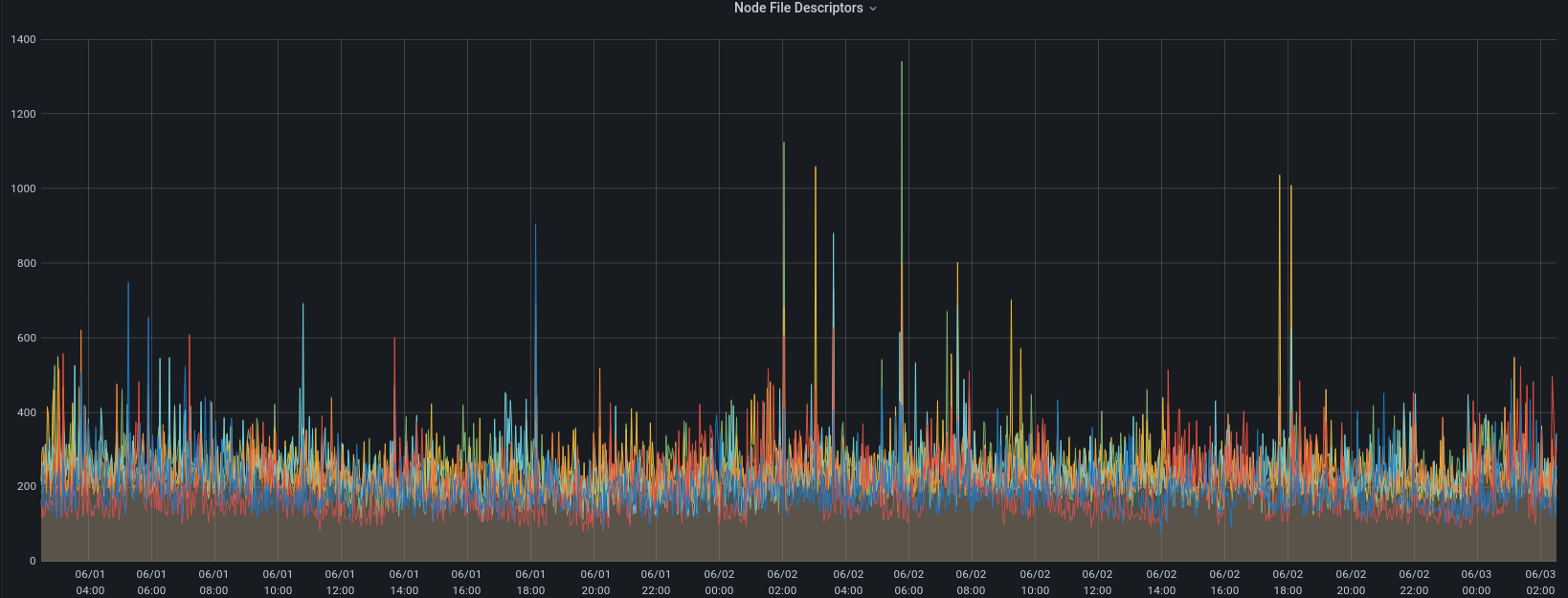

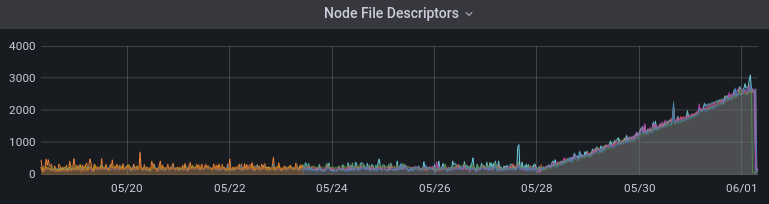

We could observe the file descriptor count increasing steadily over the last few days.

We could also see thousands of file descriptors, as below:

Following @harshavardhana's suggestion in #15022, we upgraded to RELEASE.2022-06-02T02-11-04Z, but the file descriptor count still keeps increasing. (There are some cliffs here because we restarted the cluster at that time.)

Expected Behavior

The cluster should recycle the file descriptor/connections and should not stop working.

Current Behavior

The cluster could not handle client requests, and performance is degraded.

Possible Solution

Steps to Reproduce (for bugs)

Context

Regression

Your Environment

minio --version: RELEASE.2022-06-02T02-11-04Z

uname -a: Linux 5.4.0-54-generic #60~18.04.1-Ubuntu SMP Fri Nov 6 17:25:16 UTC 2020 x86_64 x86_64 x86_64 GNU/Linux