
vo_opengl: generate 3DLUT against source and use full BT.1886 #3002


```c
const double gamma = 2.40;                   // BT.1886 exponent
double binv = pow(disp_black.Y, 1.0/gamma);  // display black luminance ^ (1/gamma)
double gain = 1.0 - binv;
double lift = binv / gain;
```


I think this should be `lift = binv`; otherwise, looks good.


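Just double-checking:

The LittleCMS formula is $Y = (a \cdot X + b)^g + c$. The BT.1886 formula is $L = \mathrm{gain} \cdot (V + \mathrm{lift})^g$ with $\mathrm{gain} = (L_{w,\mathrm{inv}} - L_{b,\mathrm{inv}})^g$ and $\mathrm{lift} = L_{b,\mathrm{inv}} / (L_{w,\mathrm{inv}} - L_{b,\mathrm{inv}})$, where $L_{w,\mathrm{inv}} = L_w^{1/g}$ and $L_{b,\mathrm{inv}} = L_b^{1/g}$.

Fitting this into the LittleCMS formula (with $X = V$, $c = 0$, white normalized so that $L_{w,\mathrm{inv}} = 1$, and $L_{b,\mathrm{inv}} = \mathrm{binv}$) gives us:

$$
\begin{aligned}
(a \cdot X + b)^g &= \mathrm{gain} \cdot (V + \mathrm{lift})^g \\
&= (L_{w,\mathrm{inv}} - L_{b,\mathrm{inv}})^g \cdot (V + \mathrm{lift})^g \\
&= \big((L_{w,\mathrm{inv}} - L_{b,\mathrm{inv}}) \cdot V + (L_{w,\mathrm{inv}} - L_{b,\mathrm{inv}}) \cdot \mathrm{lift}\big)^g
\end{aligned}
$$

$$
\Rightarrow \quad a = L_{w,\mathrm{inv}} - L_{b,\mathrm{inv}} = 1.0 - \mathrm{binv}
$$

$$
b = (L_{w,\mathrm{inv}} - L_{b,\mathrm{inv}}) \cdot \frac{L_{b,\mathrm{inv}}}{L_{w,\mathrm{inv}} - L_{b,\mathrm{inv}}} = L_{b,\mathrm{inv}}
$$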

Looks like you were right. I missed the distribution over the addition inside the right-hand side when doing this in my head.

Thanks. I incorporated the suggestion, which changed the output only very slightly. Now, apart from a difference in color temperature, I get 100% identical output (brightness-wise) on the black test ramp. NOTE to testers: If you tried the previous version of the diff, make sure to delete your ICC cache if you are using one. (I did not bother incrementing the 3DLUT version for this trivial change.)

```c
// type 4: gamma 1.8 with a linear segment below 0.03125
tonecurve = cmsBuildParametricToneCurve(cms, 4,
                (double[5]){1.8, 1.0, 0.0, 1/16.0, 0.03125});
break;
```


Now that MP_CSP_TRC_BT_1886 is used for the actual BT.1886 case, an additional

```c
case MP_CSP_TRC_BT_709: tonecurve = cmsBuildGamma(cms, 1.961); break;
```

(alternatively called MP_CSP_TRC_BT_709_simple_gamma) is obviously required for the QuickTime-compatible configuration.


That should be a separate patch. I'd consider calling it MP_CSP_TRC_APPLE, if you agree?

I'm not really sure how you'd expect to use it, though - you'd need to inject e.g. `--vf=format:gamma=apple` to force the video into using this pseudo-colorspace.


I suggest taking this to the conversation to make it more readable.


@UliZappe You can have your Apple compat mode with something like this: I'm not sure how to fit this into the actual code, though, since it relies on cmsFLAGS_SLOPE_LIMIT_16 existing. We would need to add a separate check/#ifdef for the presence of that flag and, if it is not present (e.g. older or unpatched LittleCMS), disable the mode. Either way, I would not merge that particular code until it's part of the official LittleCMS.


… continuing from the source comments.

I don’t think that would be a good idea:

That’s a good question I’m not sure about myself. I was just about to ask you how all these variants should be presented to the user. Certainly, one single flag that encompasses everything required for QuickTime compatibility would be nice for those who want that, but I’m not sure how it would fit into the whole range of color management options that you see. What is the user interface you envision for your variant? Which options will the user have? This I find confusing. How is

Yep, that’s a problem, and your suggestion probably the only clean solution.

Problem is, so far I do not know if this will ever happen. On the other hand, to the best of my knowledge, it seems to be the only way to 100% emulate the QuickTime approach without actually using ColorSync, which @wm4 understandably does not want (nor do I). But without this Little CMS patch the black crush issue will remain in the QuickTime approach. 😠

I don't think the value makes sense for anything else, really. What other source profile do you think a value of 1.961 should be applied to, if not BT.1886?

Do all video formats that mpv can now distinguish use the BT.709 TRC? I thought some differed, but I can’t find the code right now that tries to guess the various formats (where is it?). Of course, if all video formats mpv can handle use the BT.709 TRC, then you are right, at least pragmatically (I still don’t understand how BT.1886 can be looked upon as a video source format, and find it a bit odd to have to select BT.1886 TRC to actually select BT.709 TRC …). (If everything is (Forget my DCI-P3 example from above, this was nonsense, since it’s a destination profile.)


To elaborate on my not understanding: As I wrote, pragmatically your code should work if indeed all video sources mpv handles have the same TRC. My confusion is about the semantic conceptualization of the code. The code fragment we discuss is within the In all the other cases of the But in the case you call You (implicitly) assume the BT.709 TRC as the TRC of the HD video (etc.) input source but want to emulate the color appearance adjustment which is implicit in using a BT.1886 (an output spec) video monitor to output HD video (etc.) in a video studio. So the semantically correct (pseudo) code would be the following from my POV: That would be much clearer semantically from my POV, but of course, that’s just me. (It basically means that in ICC color management, no appearance adjustment is the default case.) Again, pragmatically it makes no difference.


@fhoech I've been thinking some more about this code, in particular this bit:

We're essentially just using the black point's CIE Y value as the reference brightness for all three RGB channels. But this makes little sense to me - shouldn't we convert the display's black point to linear RGB (in the video's color space) and use those linear RGB values as black points on a per-channel basis?


@UliZappe There is no such thing as MP_CSP_TRC_BT_709 in mpv's code base. MP_CSP_TRC_BT_1886 is the EOTF associated with all of the ITU-R style profiles (i.e. ITU-R BT.601, BT.709 and BT.2020). As for the other cases, they exist for when the user e.g. wants to view a .jpg stored in ProPhotoRGB format, or AppleRGB, or sRGB, or whatever. While it's true that essentially all digital video uses BT.1886, mpv has at least some semblance of support for image formats. (Very limited, though, since it relies on FFmpeg's autodetection and doesn't support e.g. embedded ICC profiles.)

Ideally yes, and ideally the resulting curves should gradually cross over from PCS neutral to actual device black, relatively close to black (e.g. Argyll CMS and DisplayCAL do this blending in |

This commit refactors the 3DLUT loading mechanism to build the 3DLUT against the original source characteristics of the file. This allows us, among other things, to use a real BT.1886 profile for the source. This also allows us to actually use perceptual mappings. Finally, this reduces errors on standard gamut displays (where the previous 3DLUT target of BT.2020 was unreasonably wide). This also improves the overall accuracy of the 3DLUT by eliminating rounding errors where possible, and allows for more accurate use of LUT-based ICC profiles. The current code is somewhat uglier than necessary, because the idea was to implement this commit in a working state first, and then maybe refactor the profile loading mechanism in a later commit. Fixes mpv-player#2815.

I know. 😉 That’s why I’m suggesting renaming All the other profiles in this list (ProPhoto RGB etc.) are source/working spaces, as is BT.709. BT.1886 is the corresponding destination/display space. But as I said, this doesn’t affect functionality, it only affects my brain. 😉

OK, if this is the case then all is fine. I wasn’t sure whether mpv treats SMPTE 240M (and possibly even other, exotic formats) separately.

It is named after the document that defines it (ITU-R BT.1886). If you name it BT.709, that would be more confusing IMO - what curve does BT.2020 use? What curve does BT.601 etc. use?

This makes the black point closer (chromatically) to the white point, by ensuring channels keep their consistent brightness ratios as they go down to zero. I also raised the 3DLUT version as this changes semantics and is a separate commit from the previous one.


@fhoech I went for the per-channel approach, and I'm pleased to report that the result is even better now: my black point matches the exact black point I calibrated it to (via ArgyllCMS, using In the resulting video, apart from the differences in gamut, I can no longer see any difference between CMS on and CMS off: no change in brightness, no change in color temperature. (When viewing on what should theoretically be a perfectly calibrated BT.1886 display.) The code is slightly heavier, unfortunately. I wish there was a cleaner way to handle passing and freeing the cmsToneCurves. (Is a double-free on cmsToneCurve a bad thing? The documentation doesn't clarify.)

That's true, and IMO we should fix it by fixing the other curves to correspond to the actual EOTF as well - but given a general lack of material I've found on this I'm not really sure what to do about it. What kind of device should, e.g., an sRGB-tagged or ProPhotoRGB-tagged image be displayed on? Should we also be using a BT.1886-style curve? I know that sRGB was always intended to be displayed on a vaguely defined CRT, but how do we simulate that in mpv? BT.1886 as well? I mean, BT.1886 is after all literally a document defining what we should consider as a reference CRT response.

But BT.1886 only defines the case with the implicit appearance adjustment, not the gamma 1.961 case …

Arguably, the same as BT.709. You can confirm that by looking at the definition of the TRC in the specs of those other video norms and finding that it’s always the same as the one in BT.709 – while there is no connection to any data defined in the BT.1886 spec. I concede that saying “BT.601 and BT.2020 use the BT.709 TRC” comes from BT.709 being the prevalent video norm today. In 10 years, maybe everybody will say “BT.601 and BT.709 use the BT.2020 TRC”. But if we wanted to take account of that, we’d need a generic term for these TRCs, and BT.1886 isn’t that term, because it does not define any of these TRCs.

Outside of color management, yes, where using a display with some predefined characteristics was the only chance to get colors at least roughly correct. But that’s not important anymore as soon as you use display profiles. And for still images (assuming this is what sRGB is used for), editing and viewing conditions are most certainly the same nowadays (within a huge statistical dispersion, of course). Or do you know anyone who uses one computer for editing images, but another one in a different room to watch them? And this means no appearance adjustment (contrast expansion) is typically required, anyway.

Therefore, I would clearly say no to this question as far as still images are concerned. (An exception might be viewing images on a video projector, but this is certainly not the prevalent viewing case.)

BT.709 is an "input", i.e. camera, colorspace. There's some indication that its colorimetry should be treated as focal-plane-referred estimates, i.e. the colorimetry is not fully deterministic (somewhat underpinned by what Adobe is doing with their Rec709 ICCv4 "HDTV" profile, which includes a corresponding 'ciis' colorimetric intent image state tag).

sRGB should probably use the sRGB TRC (or simplified gamma 2.22), although its original intention was to be viewed on a gamma 2.4 display (without ICC color management in that case). In practice, the way sRGB is actually used argues more for the former, though (and that is definitely justified by its use as one of the de facto "default" working spaces in many ICC color-managed applications). ProPhoto should be gamma 1.8 (although a "linear" ProPhoto with gamma 1.0 also exists). Many other actual working spaces (like AdobeRGB, ECI-RGB, etc.) have well-defined tone curves.

In a way, yes, but it’s also already standardized RGB and therefore also used as a working space. You won’t profile your video camera and then convert your video from the individual camera profile to a standardized working space, as you would typically do with a scanner and sometimes at least with a still camera. So de facto BT.709 is also a video color working space.

Let's consider a thought experiment. You have two identical and perfect displays, both of which have the exact sRGB gamut with no error, and both of which have their reference white at 120 cd/m² and their reference black at true 0. Suppose both displays have full 16-bit precision from software to output, so that we can ignore rounding errors and other such things. One of the displays you calibrate using your favorite calibration software to a curve of your choosing, then measure it and generate an .icc profile. You then open an image tagged ‘sRGB’ in your favorite color-managed image viewer and display the result on the screen. (In theory, disregarding errors and imperfections, the choice of the curve used during this calibration should not impact the final result, right?) On the other display, you open the same image in an image viewer that ignores color management and just sends the pixel values to the display as-is. What curve do you calibrate your second display to in order for the first display and the second display to end up looking identical? I cannot find a clear answer to this question. It seems to me that it depends on what the color management software on display ‘A’ is doing under the hood.

sRGB (I don't know of any CMMs that silently substitute a gamma 2.2 TRC, but certainly the other way around).

True, but if it's working correctly then the results should match.


Alright, then our MP_CSP_TRC_SRGB is implemented as correctly as it can be. (As for the rest, i.e. curves other than BT.1886 and sRGB, I don't think they're important enough to warrant caring much about them.)

Just to make sure, since this sentence is ambiguous – are you saying “I don't know of any CMMs that silently substitute a gamma 2.2 TRC with an sRGB TRC” or “I don't know of any CMMs that silently substitute a gamma 2.2 TRC for an sRGB TRC”?


The latter


Well … yes … 😇


The overall response of the two images is clearly very similar now, as expected. More importantly, the difference you are seeing in brightness is now caused not by the fact that one has a higher or lower gamma (per se) but by the fact that one is a cut-out of a gamma curve rather than a full gamma curve, so the overall slope is lower. (It's slightly “flatter”.) As for which one is correct, I still think we need more sources than just Melancholia. For all we know, Melancholia is an outlier that was mastered to look correct in QuickTime Player by people using Apple products, rather than a Blu-ray that was mastered by traditional means in an encoding studio that uses devices calibrated to BT.1886. We've discussed before whether the former should be a consideration in our process or not, but I don't think we reached a conclusion without further studio insight, and I stand by my belief that BT.1886 is the dominant standard overall. Either way, even in the case of Apple-mastered content, it still makes some sense to provide a QuickTime emulation mode for this reason alone, regardless of what may or may not be correct. (It wouldn't be the first compatibility mode implemented in mpv for the sake of consistency with real-world deviations from the standard; see also e.g. the Aegisub compatibility hacks.)

It's significantly easier to see the difference if you flip back and forth between the two images, rather than looking at them side-by-side. Also turn off color management in your image program.

If you want colors identical to a real BD player, just turn off color management. :D

With my linked patch it should work for matrix profiles too, although of course in the case of missing contrast information (e.g. no tag) mpv would now print a warning asking the user to manually specify contrast. (And default to 1000:1.)

OK. Test will have to wait until I return home again.

Isn’t 1000:1 far too high? I would have thought the average computer display has something like 250–300:1. Just saw this (edited?) remark of yours now:

No, that’s not what I base my argument on. I base it on the very simple idea that different applications on a computer have to behave consistently with each other (the very thing that you say does not exist). If I have a (properly tagged) still image and open it in different image viewing applications, it must always look exactly the same. Just as a sound file must always sound exactly the same, no matter which player application I use. Just as a PDF document must always look the same, no matter which PDF viewer I use. I think this is a very obvious idea; maybe in the case of color, it’s especially obvious for Mac users as OS X is completely color managed – there is no such thing as a non-color-managed application on OS X (unless, of course, the programmers willfully avoid this). OS X uses ICC color management. So this is the conceptual framework all content has to comply with on OS X to guarantee this consistency. BT.1886 is completely orthogonal to ICC color management. Nowhere in the BT.1886 spec will you find the word “ICC”, and nowhere in the ICC spec will you find the word “BT.1886”. Acknowledged standards in the ICC world such as Marti Maria’s BPC implementation contradict BT.1886; you wrote that yourself. And since you implemented BT.1886 in mpv, you are certainly aware that the required transforms are very different from the ICC approach of Space1 → PCS → Space2. Semantically, BT.1886 assumes a dim viewing environment, whereas ICC-compliant applications do not. And BT.1886 implements the corresponding appearance adjustment in the source, whereas ICC would place it into the display profile. It just does not fit together. I really have no idea how you’ve come to the conclusion that BT.1886 should play any role in computer-based video (not even the word “computer” appears in the BT.1886 spec!). I’m aware this is a widespread misconception (Charles Poynton, anyone?), but it’s a misconception nonetheless. 
In a computer context, I must be able to take a (tagged) still image, convert it to a short mp4 movie, open this movie file in a player application, and the image I see in the movie player must be exactly like the still image I see in my image viewer/editor. This idea seems completely obvious to me; if color management on computers is worth anything, it must achieve that. But image viewers/editors use ICC color management to display the image on the screen. Therefore, it cannot be consistent if a very different mechanism, BT.1886, is used to display the movie images on the screen. If they look similar, it is by coincidence rather than by design; therefore, it is not reliable, but reliable consistency in color reproduction is the hallmark and raison d’être of ICC color management. I’m well aware that you will disagree. I just wanted to point out that it’s not that I don’t have any arguments on my side besides one Melancholia image. So let’s just agree to disagree. PS: I’m not saying QuickTime Player is the best possible video player. Quite the contrary, if you consider that it uses the ColorSync CMM without turning on BPC (for whatever reason). If we manage to emulate QuickTime behavior in mpv (Slope Limiting and all) and use BPC, mpv will (from my POV) probably be superior to anything else as far as color reproduction is concerned.

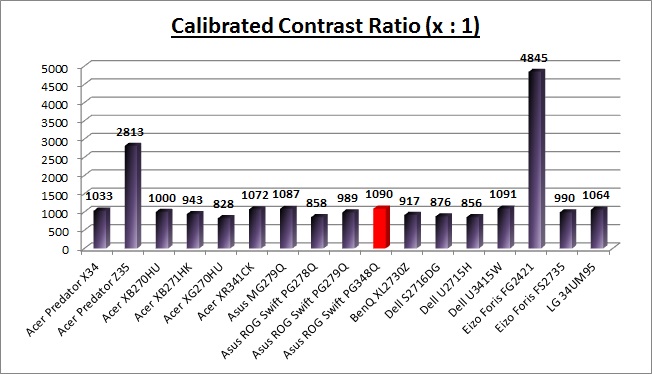

No way! We are talking about the absolute output luminance at white divided by the absolute output luminance at black, right? Most displays I have come across have had something close to 1000:1 contrast. Even my years-old U2410 has a contrast of 730:1 or so, which seems to be at the absolute lower range. (My other display has about 900:1.) tftcentral offers this comparison from their recent articles, including both IPS and TN panels: (The outliers are VA-type.)

Well, give me an NEC 2690WUXi2 and I would probably confirm this observation.

Nvidia Shield?

I don't know, but I don't believe that the lower right corner (?) is really that relevant, compared to the face of the actress right in the center of the frame. I don't doubt your measurements, though.

Considering our Real World™® Applicability again, the most common display (panel) type is still TN, almost to the point of ubiquity. There is this saying: If in doubt, assume it's TN. They have their benefits, but there is one dramatic disadvantage: color and contrast instability by even slight changes in viewing angles. I somehow doubt that a difference in these sample images can be perceived reliably, because the effect of viewing angle change overshadows that completely.

So I have to cut them manually? I think I'll pass 😄

This is a flawed argument - you can't just “convert” a still image to a short mp4 movie trivially. For example, say the image was RGB: we first need to convert it to some YUV space, encode it as such, subsample it, etc. If you want to convert an image to a movie file while preserving the colors 1:1, then your encoder needs to use a conversion that exactly matches (inverts) the conversion used by the decoder (obviously). If the decoder uses BT.1886, then your encoder would also need to use BT.1886. (That is, when converting the still image to a movie clip, you would need to perform the color space transformation from sRGB to BT.1886.) Alternatively, you could produce a movie clip that is tagged as sRGB (instead of tagged as BT.709/BT.1886) and skip the conversion (but of course, not all color spaces have appropriate tagging available). To put it into image terms, you are now arguing the equivalent of “I should be able to just convert a JPG to PNG and get the same colors as before!” by constructing a scenario where the PNG encoder fails to preserve the ICC profile embedded in the former. Of course, in this conversion it's obvious to both of us that there's no mistake in an image viewer producing a different result between the two (because they were mis-tagged to begin with, and the default assumption of sRGB does not match the ICC profile of the former). But this is not that different from the video file situation as presented. If you convert an sRGB image file to a short movie clip and then tag this movie clip as BT.1886 instead of sRGB, you should expect to see a different result! Now of course, this is not quite as trivial as I made it sound, since BT.1886 is a contrast-indexed family of color spaces rather than a single color space, which means an encoder would have a hard time trying to encode to BT.1886 in the general sense. (And normally, an encoder should target the BT.709 TRC.) But of course, BT.1886 is not designed with such goals in mind (unlike ICC).
We're only supporting BT.1886 in the interest of being compatible with existing files that were produced with ITU-R methodology and tagged as such.

I think the root of our misunderstanding might be that I'm operating under the implicit assumption that mpv will be primarily used to play “legacy” DVD / Blu-ray video content. Of course, this assumption is not entirely easy to make, and you could also argue that the default behavior should target, say, YouTube videos of gameplay footage. (Which would/should be treated as sRGB) But ideally, what you want to do in such cases is properly tag the media files so that there's no ambiguity. Remember that mpv only uses BT.1886 when the source is detected (or guessed) as BT.1886. It uses sRGB when the source is detected or guessed as sRGB, instead. (For example RGB content) At some point we're drifting from an argument about how to implement BT.1886 to an argument about “should BT.1886 be the default assumption for untagged files?”, which is not an argument that we should conflate. Right now, let's focus on implementing BT.1886 correctly - and if you think that Apple-produced content must be handled differently from “legacy” content (Blu-ray movies, DVDs, etc.), then I propose you:


These are not the users targeted by color management in mpv. The users targeted by color management in mpv are the users who will write thousands of comments discussing color management across 10+ issues/PRs. ;)

If it's a really old TFT, who knows. But even my aging NEC CCFL wide-gamut IPS desktop monitor can achieve around double that (and that is with some black point hue correction, which raises the black level).

Everything may be color managed, but the bugs certainly undermine the idea of consistency. E.g., Safari's color management is flawed or downright broken (CSS colors are handled as if in the display color space, for example; there are rendering bugs with gradients which make them flicker and shift in color when scrolling, etc.), Preview's color is broken in El Capitan [1], and I could probably easily list a few more if I were to look. Apple needs to get their act together (although I still think they are to be commended for going the fully color managed desktop route). But that discussion is OT for this thread.
[1] https://discussions.apple.com/thread/7291462?start=0&tstart=0

BPC was originally concocted by Adobe, it's not part of the ICC standard, and could be described as a (very simple) form of perceptual gamut mapping (that only maps the lightness axis). BPC is useful, and Marti did a great job matching Adobe's implementation (and the existence of littleCMS alone is an important hallmark for ICC color management).

That sounds like a strawman. Also, "computer-based video" seems like an exceedingly broad (non-)definition that really doesn't seem to be very useful. What is "computer-based video"? Video that is created (or edited) on a computer? All digital video probably falls into that category. Video that is played back, or at least can be played back on a (desktop) computer? Sans the copy protection of some media, this also encompasses the majority of digital video material. Anyways, not really interested in that discussion.

For some inexplicable reason I think the mentioned entity knows a thing or two about digital video and imaging, and reckon misconceptions (if any) elsewhere...

It uses perceptual intent rendering, so it doesn't need BPC (unless you happen to have a matrix display profile that encodes the black point in its TRC tags, in which case I would expect to get black crush. LUT display profiles, on the other hand, usually have an appropriate perceptual B2A0 table).

I wonder if we should use perceptual rendering intent by default in mpv as well. For things like LUT profiles that have a black-scaled perceptual table (but an absolute-scaled colorimetric table) it would have improved our results to begin with. I'm just always sort of wary of perceptual rendering intent due to the underdefined nature of it, such as the implication that it could be doing some form of gamut stretching or other perceptual “adjustments”; so if we can get a result that's also perceptually well-behaved by using relative mapping + black point compensation I would naively prefer the latter. Edit: Specifically, it's a profile with a matrix + curves (r/g/bTRC), said curves mapping to absolute luminance (not black-scaled).

No! Safari is the only browser that correctly handles CSS colors. Firefox used to be able to do it, if you enabled an advanced option (non-default), but even that doesn't work anymore if you also enable hardware acceleration (the default). Without hardware acceleration, Firefox is not usable on my computer. Chrome doesn't even pretend to try. There's a 7-year-old unresolved bug, filed a few days after Chrome's public release. All of this is very off-topic, though.

I'd agree with that notion, for the same reasons. Although I can certainly envision cases where perceptual rendering could be preferable (e.g. display gamut smaller than source, and the perceptual table incorporating gamut compression).

[We should probably note for others that `-Zr` is a command-line parameter for Argyll's colprof to set the profile's default rendering intent.]

Then it should be handling them as sRGB (like untagged images), or honor the CSS


Yes, that's what it does. I haven't done anything, it just works. I guess it was enabled in a relatively recent version, but I can't tell you which. FWIW it works in Safari 9.1 (11601.5.17.1) on OS X 10.11.4 (15E65).

CSS colors should be treated as sRGB as per the spec. Firefox seems to do this on my machine, with or without hardware acceleration enabled.

This might be worth reconsidering in the future as BT.2020 content becomes relevant and computer monitors remain BT.709. Right now LittleCMS does clipping in L*Ch, which might be undesirable for some content types. But we'd have to see this content first, I think.

Of course I can. I open it in Final Cut Pro X (or any other ICC color managed NLE, if one existed) – et voilà, it is an mp4 movie with 10 s length by default. 😛

Exactly! This is what I’ve been saying all along.

Yes. But BT.1886 is not only against the spec (which says HD movies are to be encoded in BT.709), it is also impossible, because BT.1886 takes display specifics into account (something that is completely misplaced architecturally from an ICC POV). Therefore the argument resolves exactly the other way around: If the encoder uses BT.709, then the decoder has to use this, too (and not BT.1886). This is what I’ve been saying for how many hundred postings?

No, I don’t think that’s the core of the disagreement. Watching Blu-ray content is important to me, too. I thought the difference might be that I’m not “just watching” movies for entertainment; I’m a scientist who actively deals with them – watches a few minutes (in daylight conditions), makes a screenshot and copies it into a word processing application, then goes back to mpv etc. This is why color consistency between applications is paramount for me, but it’s not a complete explanation, because even if I’m “just watching” Blu-ray movies, they look clearly inferior and distorted, “wrong”, from my POV with the BT.1886 setting. If I had to make free associations, I would say they look like TV instead of cinema, and I certainly want the latter.

😅 But in earnest, that’s only partly true. As far as I’m concerned, I certainly have the “educational” goal of enabling Joe User to watch his movies in correct colors without much ado. Now if only we could agree on what these correct colors are. 😁

I’m the first to admit that. There’s far too many bugs in OS X. But that doesn’t mean the goal is wrong. It means more work is required to achieve it.

IIRC, Marti’s version is officially endorsed now.

I can’t check that since I’m not at home, but whatever QuickTime Player does, judging by the latest screenshot of the QuickTime Test Pattern HD movie that I posted above (created with a LUT display profile), it is not sufficient.

Using the BT.709-specified curve for decoding is one of the very first things we did, way back before any of these discussions happened. Look how far that got us. :p I cannot find a universe in which “use gamma 1.961” and “use BT.709” agree. BT.709 in no unclear terms defines the exact curve used to encode, and encoding (decoding) with that curve gives you a result that is visibly different from encoding (decoding) with gamma 1.961. So far, all your arguments seem to be based only on “do exactly what ColorSync is doing”, the most compelling argument for this I have seen being “so I can copy/paste screenshots into other ColorSync applications without introducing round-trip errors”.

Far, IMHO. There were only two steps missing: Realising that (because of _real world_® concerns?) the complex BT.709 TRC was replaced by the closest simple gamma approximation (which is 1.961), and that Slope Limiting was assumed.

I assume this universe is called “mobility”. Just like in the early days of color managed image editing the sRGB TRC was often silently replaced by gamma 2.2 for performance reasons, the same seems to have been done with movies to ensure mobile devices will be able to play them. And who knows, maybe there’s some truth to the mental gymnastics with which Apple rationalizes its choice of gamma 1.961. (Something along the lines of: In a classic setup, BT.709 encoded video was edited and reproduced with a display with a simple gamma TRC; now we need to reduce contrast, because we don’t want an appearance adjustment for a dim viewing environment (to ensure consistency with other computer applications), but we stick to the simple gamma curve, only with the lower value of 1.961. – Maybe BT.709 encoded videos generally do look more truthful with a simple gamma curve because of that. I cannot say for sure, as I never researched this systematically; all I know is that anecdotally, Blu-rays look as intended with the simple gamma curve.)

This is correct, and it is unfortunate. I’m ambivalent about this. On one hand, it would certainly have been better to stick to the standard specs literally (or formulate a new standard). On the other hand, I’ve never understood why this standard used such a convoluted TRC instead of a simple gamma in the first place. (Yes, I know all the arguments, but they aren’t convincing in an ICC color management context.) But in any case, Final Cut Pro X is 5 years old now, and it’s still the only ICC color managed NLE out there. So Apple simply set a de facto standard for ICC color managed video. If ICC video color management wins out at all (as I think it will), it will win out with BT.709 gamma 1.961.

It sounds a bit trite the way you phrase it, but yes, that’s the crucial point. ICC video color management will allow for that, or it will fail.

I doubt any mobile device used software color management during a time where this change would have made even a quantum of difference.

But this is where I don't really understand the derivation. If the intent is to encode values in a way that they themselves can decode to get the same result, you could have picked some monitor-referred technical gamma like 2.2 (which actually seems to match the BT.1886 brightness fairly accurately overall) in both their encoders and their decoders. (And obviously, if Apple had done this instead - it would look the same in your Melancholia sample as it currently does with the 1.961 curve in QuickTime; except that for the rest of the non-QuickTime world it would look right as well) To me, 1.961 just looks significantly brighter than other media, regardless of the room I'm in. I felt this way so strongly that I had to introduce and set Given the fact that movies are (supposedly? as per the ITU-R) mastered on displays which are close to a 2.2 effective gamma overall, this does not surprise me in the slightest.

All these standards are old enough to be pretty much based on doing whatever worked best when displayed directly on a CRT without color management (same with sRGB). Since real CRTs' responses are fairly complicated even compared to these curves and don't really match a pure power curve, approximations like sRGB or the original BT.709 encoding transfer worked “better” (e.g. less black crush) than the simpler alternatives. You also have to remember that CRTs have a real infinite contrast, so their curves go down to the real blacks in ways which we can't even properly comprehend with modern displays. As a CRT approaches zero, and a pure power curve approaches zero, their reproduction almost surely deviates significantly more than the deviation you get with a modern display (which is itself just a pure power curve stretched onto the display, calculated by a chip on the display that can easily invert mathematically whatever you give it and is not really bound by the constraints of cathode ray mathematics). Presumably, somewhere as digital displays became standard and the studios switched over from CRTs to LCD displays calibrated to CRT approximations (e.g. pure powers or BT.1886), we stopped mastering things for direct playback on uncalibrated infinite-contrast CRTs and started mastering them for direct playback on simplified-curve displays instead. So at some level, the original BT.709 curves do not even matter that much, as the colors are adjusted during postproduction between what the BT.709-encoding camera sent and what actually gets delivered to the display. It's worth keeping in mind that BT.1886 and gamma 2.2 are both pretty much the same thing: gamma approximations of the complicated CRT response that are stretched to meet the black point of the device.
The only difference is that BT.1886 is stretched along the gamma curve (in the “input space”, if you will), whereas a normal “gamma 2.2” display is just stretched in the output space (linear brightness stretching, as is done in ICC since it enables device-invariant black point compensation).


Btw, from what I remember, you often seemed to argue that the environment-based gamma drop was “bogus” or not backed up with rigor. If so, shouldn't the same apply in reverse? If real-world mastering displays used a gamma of around 2.2–2.4 (which we have “confirmed” from more documents than just ITU-R's, for example the EBU Tech 3320 document) in a dark viewing environment, and you are now taking these films into a bright viewing environment, then by the very same logic shouldn't we still use the 2.4 curves in these bright environments? Obviously, if we were writing a new standard in a clean room, this would be irrelevant, and we could have picked 2.2 or 2.4 in either case, but we're trying to establish mpv's behavior based on what's being done in practice. P.S. I think the fact that you find 2.2 too dark and I find 1.961 too bright is probably because I'm in a very dark room and, from what I can tell, you're in a very bright room.


EBU Tech 3320 actually specifies another reason why the OETF has always been segmented: analog filter design. Pure powers might be fine with nice discrete digital values, because a value of 1/255 is simply not close enough to 0 to run into numerical problems, but in a purely analog filter you would technically need an infinite gain to implement them properly, and the noise level of such signals was probably higher to begin with. Either way, after re-reading Annex A of this document, it seems very clear to me that in a truly black-scaled device you would definitely want to use a pure power curve of gamma 2.4 to decode content (ignoring for the time being the precise behavior near 0 as compared to a real CRT). The inconsistencies only show up once you consider what to do on an LCD display that doesn't have an infinite contrast. BT.1886 suggests contrast scaling in the gamma space (x axis), ICC suggests contrast scaling in the absolute space (y axis), and this is where our differences come from. So really, the difference comes down to how we want to do black point compensation in mpv! Maybe the right resolution to this whole ordeal would be a tunable setting for the black point compensation algorithm, be it source (use BT.1886 with the detected contrast), linear (use the simplified curve and turn on LittleCMS' BPC) or none (just black clip)? To put some numbers on the table, I decided to figure out how the curves actually compare when comparing these two approaches on a sample device with a 1000:1 contrast, assuming a reference (black-scaled) curve of gamma 2.4. The blue line is calculated according to BT.1886, i.e. with the contrast scaling in the gamma space. The red line is calculated with the pure gamma 2.4 curve, scaled linearly in the output space as ICC black point compensation would do. I then tweaked the exponent of the red line such that it very closely matched the blue line at around the midway point (input signal 0.5), where the deviation was the greatest. This results in the yellow line. 
The yellow line corresponds exactly to a digital display calibrated to a naive gamma of 2.2 (“naive” in the sense that the gamma function is calculated normalized to the monitor's brightness range, rather than absolute luminance), which is what we would get in mpv if we decoded with a pure power curve and then turned on black point compensation. (Or used a black-scaled matrix profile) Clearly, the yellow line with a value of 2.2 almost exactly matches the BT.1886 approach as far as the overall brightness is concerned. Some cut-outs for clarity: Obviously, as we can see, the biggest difference is that the blue curve has a much “nicer” near-black behavior than the gamma curves, which is evident in the screenshots made above. Probably this difference is what slope limiting ultimately paves over? Edit:

To investigate this, I tried looking at the end-to-end distortion assuming a 2.4 reference display and a 1000:1 target display. (Blue is still with BT.1886's BPC, red is still with naive 2.2 + ICC BPC. Both x and y axes are linear light.) Et voilà, yellow being with slope limiting in the relevant direction: I sure do wonder why Apple decided that slope limiting looks better than going without. :p Edit 2:


While I had WolframAlpha open, I decided to explore the legitimacy of the “pure powers are bad for inputs” suggestion: To simulate very naively an input image containing noise, I decided to model a noisy input To show the effects of the noisy input signal, I have taken zoomed cut-outs of various regions. (I normalized their sizes by input area in gamma space, so we get a fair representation of the value range from 0 to 255 in a typical 8-bit video file.) For comparison, here are the “piecewise” transforms actually specified in BT.709 (at the relevant levels for the linear slope). Note: The x axis is now dimensioned according to the slope of the linear segment, not the pure power curve. This also helps solidify the point. tl;dr: even mathematically, there seem to be very good noise-canceling properties when encoding a camera input to a “complex” TRC; in particular, we avoid exponential blow-up in the coding value ranges. (In a pure power curve and assuming zero-scaled input, the difference between 8-bit 0 and 1 is the difference between 0 cd/m² and 0.000001676 cd/m² for a white of 1 cd/m², which is just absurd for camera inputs that have a much higher noise floor and displays that have a much smaller dynamic range.) This is what we mean by “infinite gain” at 0. Now, I know you've done testing based on taking camera inputs and saving them in pure power spaces to “prove” that pure power curves are fine. But this is the problem: Mathematically, your images round-trip well because by going from already piecewise-processed inputs to pure power inputs you're just slightly compressing in the “right” direction (away from zero; notice how the pure power curve is distinctly and obviously above the linear segment in the coding range (y axis) at the same PCS value (x axis)).
Your signal round-trips well modulo some rounding errors, because the noise is already gone, and you are just taking what's effectively close to 0, ballooning it into the range 0-10, and then squashing it back together when going back to your non-infinite-gain display. Of course it round-trips perfectly. :p


One further update, since I just realized something: We've been talking about treating 1.961 as the equivalent of the film “without” the implied gamma drop from source to target (≈ 1.2). So I decided to see what I would get if I plotted the 1000:1 BT.1886 curve with an extra It now ends up significantly closer to the curve of pure power gamma 1.961 (with BPC)! I wonder what your Melancholia sample would look like if you played it with

Well, it’s a bit different. When Apple conceived the iPhone, OS X was already completely color managed, and they did not want the iPhone to fall behind. However, the iPhone processor wasn’t powerful enough for ICC color management, so what they did was to calibrate the iPhone screen to sRGB (probably with a simple gamma 2.2, I’m not sure) and convert each and every image that is copied to the iPhone to sRGB if it isn’t in this space already. This is possible since users cannot simply copy a file to the iPhone, they have to use apps like iPhoto or iTunes for that, so Apple could perform these automatic color space conversions behind the scenes on OS X. Apple PR called this “targeted color management”. But this was not possible for movies; it would take far too long to convert a movie to another color space before copying it to the iPhone. But since sRGB and BT.709 share the primaries, the only thing to be adjusted in real time within the iPhone was the TRC. Making this adjustment as efficient as possible might well have been part of the reason Apple used gamma 1.961 instead of the complex BT.709 TRC. (The funny thing is that from your POV, they could/should have simply left the sRGB TRC as is …)

OK, gamma 2.2 would work as well for an “immanent” round trip, i.e. a round trip of image data that is already encoded on the computer. But ICC color management isn’t just about immanent color consistency. It’s also about color consistency with the physical world, otherwise the ICC spec would not have to care about things like viewing environments etc. Now consider the following thought experiment: You take a picture and a (very short) movie of one specific scene, with an (idealized) DSLR that can also shoot HD movies and that has a truly “neutral” or “faithful” picture style for both still images and HD movies (unfortunately, real world cameras seemingly never do). On the computer, the still image is displayed with some standard still image viewer/editor, the movie with mpv with BT.1886. Will they look the same? No, they won’t, because the still image will be displayed with “pure” ICC color management without any contrast enhancement, whereas the movie will be displayed with the contrast enhancement implied in BT.709 reproduced with BT.1886. So to provide consistency for this kind of “physical round trip”, Apple could not use just any TRC, they had to use the source TRC, which is BT.709 or (simplified) gamma 1.961. If you do that, the still image and the movie will (in principle, implementation details aside) look the same.

Yep, as far as the output/display side is concerned, I’m afraid this is indeed the case – i.e. it’s purely historical ballast. Now, as far as the input/encoding side is concerned …

These are interesting musings. One important point they might ignore is that software never handles really raw image data. Everything that arrives at a software interface is already pre-processed to one degree or another by the camera or the scanner. Anyway, let’s compare your musings with actual implementations. If you take a professional still image camera, you can always choose (at least) between sRGB and Adobe RGB (1998) for fully processed images. If you use the RAW format and do the final processing in software, you might also choose ProPhoto RGB. Clearly, Adobe RGB (1998) and ProPhoto RGB are the color spaces that provide the better images, but in contrast to sRGB they both sport a simple gamma curve. If you look at the historical development, you’ll find that sRGB was introduced in 1996 by Microsoft and HP, Adobe RGB (1998) by, well, Adobe in 1998, and ProPhoto RGB by Kodak in 1999. Clearly, the image experts among these vendors are Adobe and Kodak. Both introduced their color spaces after sRGB, and both used a simple gamma curve instead of following the preceding sRGB example. It’s interesting to read Kodak’s explanation for that in their ProPhoto RGB white paper, page 5. In contrast to sRGB, ProPhoto RGB was conceived from the beginning with basically a simple 1.8 gamma curve. However, it did originally have a small linear segment at the dark end. But since Adobe Photoshop could not handle such color profiles at that time, Kodak decided to let go of this linear segment in their actual implementation of the ICC profile and use a Slope Limit in their CMM instead (Adobe was also known to use a slope limit in their CMM). In fact, if we encounter any patent issues wrt/ Slope Limiting, it will most probably be with patents from Kodak. Kodak wrote in their ProPhoto RGB white paper that maybe later on, a new ProPhoto RGB profile with a linear segment could be introduced, but as we know now, this has never happened. 
Instead, the ICC color management world seems to have settled on simple gamma curves plus Slope Limiting for the most important color spaces in the professional still image world, Adobe RGB (1998) and ProPhoto RGB (although Apple didn’t join the Slope Limiting club until 2009). It’s worth noting that ProPhoto RGB is an output-referred color space in Kodak’s terminology, as are all the other color spaces we’re talking about here. As far as encoding is concerned, Kodak sticks with the linear part, in compliance with your musings. So the idea seems to be something along the following lines: Let’s encode with a complex TRC (for the reasons you discussed), but once we have the data encoded and are in the output-referred “working color space”, let’s switch to a simple gamma + Slope Limit, as this simplifies things. Since we do the final color grading in the output-referred space anyway, that’s fine wrt/ consistency, as long as we stick with this TRC from then on. 10 years later, when implementing ICC video color management, Apple seems to have followed Kodak’s approach exactly.

True. I’ve read from cognitive psychologists who dispute these effects, at least to the asserted extent. Then add in the commercial interest in a justification to make images look pleasing to the potential consumer, and industry standards can become “complicated”. I read (but unfortunately didn’t save) a study by a cognitive psychologist who more or less confirmed the contrast drop in dim environments with a relatively small screen space (TV), but argued his data showed that this reverses for completely dark rooms with a relatively large screen space (cinema).

No, not really. Basically, there’s two cases:

This surely sounds plausible. I have indeed calibrated the lighting of my room to provide for exactly the same (il)luminance as my display, so that the display looks as if it is lit, just like a piece of paper. (My display has a luminance of 200 cd/m² which roughly equals 700 lx on my desk, so even a bit more than the 500 lx of the assumed viewing condition of the ICC spec.) And when I want to simply watch a movie, I do this with a projector in a completely dark room, cinema-like, which is why I found the above mentioned study so interesting which says that no perceptual gamma drop occurs in this situation. But right now, I’m sitting in a dimly lit hotel room, look at the two Melancholia images on my tiny laptop screen, and still feel the reference still image is more correct than the mpv image. Although admittedly, the mpv version doesn’t look as “wrong” to me now as it did before. But all kinds of psychological effects might influence this impression, so there’s hardly anything objective about it. But all in all, I think this whole subject is unclear enough to make a decision for “the one correct solution” impossible and rather warrant options, the contrast enhancement you desire being one of them. What remains is my conviction that your solution is architecturally misplaced. If we assume that a contrast enhancement is indeed required for dim viewing conditions, this is an appearance effect that takes place in our cognition. To take that into account, the EOTF would have to become an “EOCTF” (electrical → optical → cognitive), and clearly the only place where this can be dealt with in the ICC architecture is the monitor profile, which handles the final steps of the data flow up to and including our perception of the image in specific viewing conditions. 
I wonder if it would be possible to build a monitor profile that would result in exactly the RGB screen values you’re getting now, but by a (from the CMM’s POV) “simple” BT.709 gamma 1.961 → PCS → special monitor profile transform. If so, this would be the architecturally correct solution, as it would affect all ICC color managed applications on your computer in a consistent manner.
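The luminance and illuminance figures mentioned above follow from the relation for a matte (Lambertian) surface, L = ρE/π. A minimal C sketch; the reflectance of 0.9 for white paper is an assumption.

```c
#include <assert.h>

/* Illuminance (lx) under which a matte (Lambertian) surface with the given
 * reflectance shows the given luminance (cd/m^2): E = pi * L / rho. */
static double illuminance_for_luminance(double luminance, double reflectance)
{
    const double pi = 3.14159265358979323846;
    assert(luminance >= 0.0 && reflectance > 0.0 && reflectance <= 1.0);
    return pi * luminance / reflectance;
}
```

With a perfect diffuser (ρ = 1), 200 cd/m² corresponds to about 628 lx; with ρ = 0.9 it is about 698 lx, in line with the roughly 700 lx mentioned.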

Of course. You should be able to make this even closer by using a slightly different value than Going into the opposite direction of thought, I wonder if we could do something like replace Slope Limiting for simple gamma curves in monitor profiles by the corresponding segment of a BT.1886 curve. This might get us out of patent troubles, and the dark segment in the BT.1886 curve is clearly less coarse than the “official” Slope Limit approach. When no actual contrast ratio is known, a default value could be chosen that makes the curve approach the Slope Limit 16 curve.

You mean as an additional

Applying the complex Rec. 709 TRC (with the linear segment near black) when decoding would eliminate the effect it should have on the camera side, which is making sure that noise is less visible! (the linear segment makes sure that relatively large steps in luminance levels near black at the camera side lead to relatively small encoded RGB steps, effectively "compressing" the noise, which when decoded with a power function helps making it less visible. If you apply the exact inverse of Rec. 709 when decoding, then it would reverse some of that effect, lifting remaining noise back up - same goes for compression artifacts).

It should be noted that the basic "promise" that ICC color management makes is one of colorimetry (reproducing the same CIE values within the limitations of the involved devices). Incidentally, this promise is agnostic of viewing conditions and appearance.

Coincidentally, if you assume a (quite common) 1000:1 contrast display and shooting (or rather, outputting) sRGB with the camera, then well, yes, they will look exactly the same (sans some minuscule differences that you probably won't be able to make out by eye)...

Staying in the "outputting sRGB directly from the camera" example, the image will already be processed by the camera, which means a bright (e.g. outdoor) to dimmer (indoor) viewing adjustment suitable for viewing on a (e.g.) computer monitor would have already been performed by the camera (if it does its job right!). And yes, even a 200 cd/m² display can be considered "dimmer" in comparison to (e.g.) a bright outdoor environment.

CRTs' non-linear tone reproduction being a good match to the non-linearity of the human visual system, while pretty much a coincidence, is the reason why this "historical ballast" is still relevant today (by the way, I find such statements somewhat cringe-worthy; it's like saying electricity is "historical ballast" just because we have moved on from early power generators).

It seems to me you are misunderstanding the nature of the contrast enhancement (S-Curve) of TV and monitor "torch" (presentation/store) modes versus the higher gamma necessary for correct visual appearance in a dim environment.

.oO( Maybe one day I should just “misplace” the de-sigmoidization curve and start marketing mpv? )


+1 for image adjustment with properly saturated, fresh, vivid colors. Let's make those colors pop!

This commit refactors the 3DLUT loading mechanism to build the 3DLUT
against the original source characteristics of the file. This allows us,
among other things, to use a real BT.1886 profile for the source. This
also allows us to actually use perceptual mappings. Finally, this
reduces errors on standard gamut displays (where the previous 3DLUT
target of BT.2020 was unreasonably wide).

This also improves the overall accuracy of the 3DLUT due to eliminating
rounding errors where possible, and allows for more accurate use of
LUT-based ICC profiles.

The current code is somewhat more ugly than necessary, because the idea
was to implement this commit in a working state first, and then maybe
refactor the profile loading mechanism in a later commit.

Fixes #2815.