This repository contains the complete code from the blog post Transfer Learning with Tensornets and Dataset API. The goal of this project is to tackle the 2013 Facial Expression Recognition Challenge using transfer learning implemented with TensorFlow's high-level APIs.

You can download the data from the above link, copy it to the data/ folder, and then run ./datagen.sh in your terminal. This script divides the fer2013.csv file into train and validation sets.
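To illustrate what such a split can look like, here is a minimal Python sketch. It is not the logic of datagen.sh, which may work differently. It assumes the standard Kaggle layout of fer2013.csv (columns `emotion`, `pixels`, `Usage`) and assumes that rows marked `Training` go to the train set while all other rows go to validation. The function name `split_fer2013` is hypothetical.

```python
import csv
import io


def split_fer2013(csv_text):
    """Split FER2013 rows into (train, valid) lists using the Usage column."""
    reader = csv.DictReader(io.StringIO(csv_text))
    train, valid = [], []
    for row in reader:
        # Assumption: "Training" rows form the train set; everything else
        # ("PublicTest", "PrivateTest") is treated as validation data.
        target = train if row["Usage"] == "Training" else valid
        target.append((int(row["emotion"]), row["pixels"]))
    return train, valid


# Tiny in-memory sample with shortened pixel strings, for illustration only.
sample = (
    "emotion,pixels,Usage\n"
    "0,70 80 82,Training\n"
    "3,151 150 147,PublicTest\n"
    "6,231 212 156,Training\n"
)
train_rows, valid_rows = split_fer2013(sample)
print(len(train_rows), len(valid_rows))
```

In the real file each `pixels` field holds 48x48 space-separated grayscale values, so the same function would apply to the full CSV text read from data/fer2013.csv.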

While it is possible to train this model locally, I did so on Google's ML Engine with the gcloud command-line tool. Make sure you upload both .csv files to a Google Cloud Storage bucket first. The trainer program and its utility functions are located in the ./trainer/ folder. An example command (to be run from the root of this repository) would look something like the following:

```shell
gcloud ml-engine jobs submit training 'jobname' --region europe-west1 \
    --scale-tier 'basic-gpu' \
    --package-path trainer/ \
    --staging-bucket 'gs://bucketname' \
    --runtime-version 1.9 \
    --module-name trainer.task \
    --python-version 3.5 \
    --packages deps/Cython-0.29.2.tar.gz,deps/tensornets-0.3.6.tar.gz \
    -- \
    --train-file 'gs://path/to/train.csv' \
    --eval-file 'gs://path/to/valid.csv' \
    --num-epochs 8 \
    --train-batch-size 32 \
    --eval-batch-size 32 \
    --ckpt-out 'gs://path/to/save/model'
```

When the job finishes, the checkpoint files are saved to the location you specified ('gs://path/to/save/model' above). After downloading the files, we can freeze the weights and optimize the graph for inference using trainer/freeze.py. From the command line:

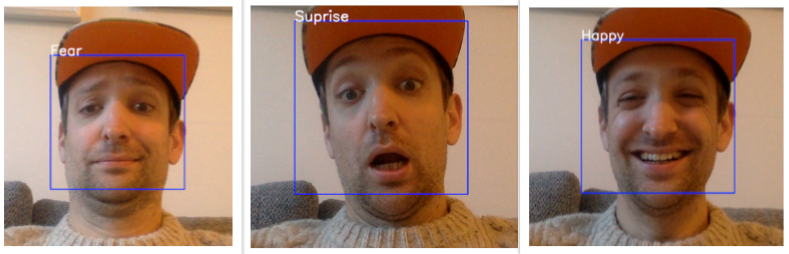

```shell
./freeze.py --ckpt ~/path/to/model.ckpt --out-file frozen_model.pb
```

The .pb file can then be used directly with OpenCV, for instance to run the model on a webcam feed:

```shell
./videocam.py --cascade-file face_default.xml --pb-file path/to/frozen_model.pb
```
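For readers curious how a frozen graph and a face cascade can fit together in OpenCV, the following is a rough sketch and not the contents of videocam.py. The function names `decode_prediction` and `classify_frame` are hypothetical. The 48x48 single-channel input and the scaling to [0, 1] are assumptions that depend on how the model was trained. The label order follows the FER2013 dataset definition.

```python
# FER2013 label order as defined by the dataset (class indices 0 to 6).
EMOTIONS = ["Angry", "Disgust", "Fear", "Happy", "Sad", "Surprise", "Neutral"]


def decode_prediction(scores):
    """Return the emotion name with the highest score."""
    best = max(range(len(scores)), key=lambda i: scores[i])
    return EMOTIONS[best]


def classify_frame(frame, net, cascade, input_size=(48, 48)):
    """Detect faces in a BGR frame and label each with a predicted emotion."""
    import cv2  # imported lazily so decode_prediction works without OpenCV

    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    results = []
    for (x, y, w, h) in cascade.detectMultiScale(gray, scaleFactor=1.3, minNeighbors=5):
        face = gray[y:y + h, x:x + w]
        # Assumption: the network takes a single-channel input scaled to [0, 1];
        # adjust size, channels, and scaling to match the trained model.
        blob = cv2.dnn.blobFromImage(face, scalefactor=1 / 255.0, size=input_size)
        net.setInput(blob)
        scores = net.forward().flatten().tolist()
        results.append(((x, y, w, h), decode_prediction(scores)))
    return results


# The decoding step can be checked on its own with made-up scores.
print(decode_prediction([0.05, 0.0, 0.1, 0.7, 0.05, 0.05, 0.05]))
```

With OpenCV installed, `net` could be created with `cv2.dnn.readNetFromTensorflow("path/to/frozen_model.pb")` and `cascade` with `cv2.CascadeClassifier("face_default.xml")`, with frames read from `cv2.VideoCapture(0)`.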