GitHub Actions will soon become a major player in the CI SaaS market. As far as I can tell, GitHub Actions can easily replace most CI tools out there, especially if you ship code as container images. With GitHub Actions you can do more than CI/CD: most tasks performed today by bots (code signing validation, issue management, notifications, etc.) can be turned into workflows and run solely by GitHub.

Why would you give up your current CI SaaS and self-hosted bots for GitHub Actions? For one, GitHub Actions simplifies automation by offering a serverless platform capable of handling most development tasks. As a developer, you don't have to jump from one SaaS to another to diagnose a build error. The fewer environments you have to use on a regular basis the better, and as a developer you probably spend most of your time on GitHub anyway.

In many ways GitHub Actions is similar to FaaS: like a function, a GitHub action is triggered by an event, and you can chain multiple actions to create a workflow that defines how you want to react to that particular event.

Most FaaS solutions made for Kubernetes let you package a function as a container image, and a GitHub action is no more than that: a piece of code packaged as a container image that GitHub runs for you.

To make a GitHub action, all you need to do is create a Dockerfile. Here is an example of a GitHub action that runs unit tests for a golang project:

```dockerfile
FROM golang:1.10

LABEL "name"="go test action"
LABEL "maintainer"="Stefan Prodan <support@weave.works>"
LABEL "version"="1.0.0"

LABEL "com.github.actions.icon"="code"
LABEL "com.github.actions.color"="green-dark"
LABEL "com.github.actions.name"="gotest"
LABEL "com.github.actions.description"="This is an action to run go test."

COPY entrypoint.sh /entrypoint.sh
# Make sure the script is executable even if the file mode was lost in git.
RUN chmod +x /entrypoint.sh

ENTRYPOINT ["/entrypoint.sh"]
```

And here is the container entrypoint script that runs the tests:

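```bash
#!/usr/bin/env bash
# Stop on the first failing command and report failures inside pipelines.
set -eo pipefail

# Go 1.10 has no module support, so the code must live inside GOPATH.
APP_DIR="/go/src/github.com/${GITHUB_REPOSITORY}/"
mkdir -p "${APP_DIR}" && cp -r ./ "${APP_DIR}" && cd "${APP_DIR}"

# Run the tests with the race detector, skipping vendored packages.
go test $(go list ./... | grep -v /vendor/) -race -coverprofile=coverage.txt -covermode=atomic

# Hand the coverage report to the next actions through the shared workspace.
mv coverage.txt "${GITHUB_WORKSPACE}/coverage.txt"
```

If the tests pass, the coverage report is moved to the GitHub workspace. The workspace is shared between all the actions that are part of the same workflow, which means you can use the coverage report generated by the go test action in another action, for example one that publishes the report to codecov.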
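To make the shared-workspace idea concrete, here is a hypothetical entrypoint for a downstream action. It is not the codecov action used below; it only reads the `coverage.txt` left in the workspace (passed with the same `-f` argument style) and prints the total statement coverage computed from the Go coverage profile format. The function names `coverage_percent` and `main` are made up for this sketch.

```bash
#!/usr/bin/env bash
# Hypothetical downstream action: reads the coverage profile left in the
# shared workspace by the gotest action and prints total statement coverage.
set -eo pipefail

# coverage_percent <profile>
# Every line after the "mode:" header of a Go coverage profile looks like
#   github.com/owner/repo/file.go:10.13,12.2 <statements> <hit count>
coverage_percent() {
  awk '$1 != "mode:" && NF == 3 {
         total += $2
         if ($3 > 0) covered += $2
       }
       END {
         if (total == 0) { print "0.0"; exit }
         printf "%.1f\n", covered * 100 / total
       }' "$1"
}

# main -f <profile>: same "-f" argument style as the workflow's args.
main() {
  local OPTIND=1 opt file=""
  while getopts "f:" opt; do
    case "$opt" in
      f) file="$OPTARG" ;;
      *) echo "usage: main -f <coverage file>" >&2; return 1 ;;
    esac
  done
  if [[ ! -f "$file" ]]; then
    echo "coverage file not found: ${file}" >&2
    return 1
  fi
  echo "total coverage: $(coverage_percent "$file")%"
}

# Run only when the action is invoked with arguments.
if [[ $# -gt 0 ]]; then
  main "$@"
fi
```

Weighting by statement count should give roughly the total that `go tool cover -func` reports for a profile without duplicated blocks.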

This is how you can define a workflow that will publish the test coverage after a git push:

```hcl
workflow "Publish test coverage" {
  on = "push"
  resolves = ["Publish coverage"]
}

action "Run tests" {
  uses = "./.github/actions/gotest"
}

action "Publish coverage" {
  needs = ["Run tests"]
  uses = "./.github/actions/codecov"
  args = "-f ${GITHUB_WORKSPACE}/coverage.txt"
}
```

The above workflow uses actions defined in the same repository as the app code, but you can also define GitHub actions in a dedicated repo: for example, instead of ./.github/actions/gotest it could be stefanprodan/gh-actions/gotest@master, or even a container image hosted on Docker Hub, docker://stefanprodan/gotest:latest. Running each workflow step in a container is not a novelty; Jenkins Docker pipelines, Drone, GCP builders and other tools do the same thing. What I like about GitHub Actions is that you don't need to build and publish the action container image to a registry: GitHub lets you reference a git repo containing your action's Dockerfile and builds the container image before running the workflow.

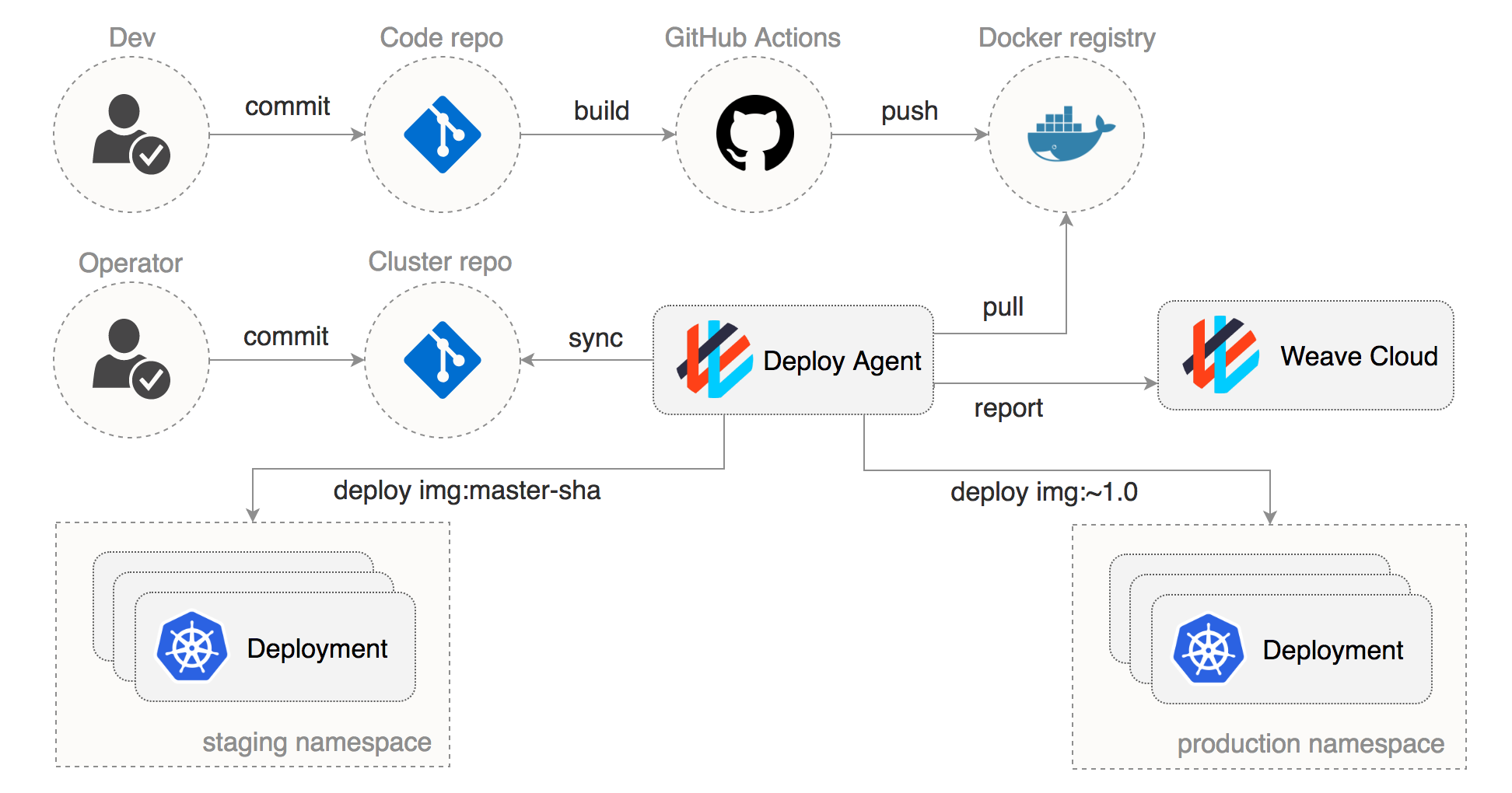
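The `uses` field therefore accepts three forms: a local path, an `owner/repo/path@ref` reference, or a `docker://` image. As an illustration only, the hypothetical `describe_uses` function below classifies a value into those forms; it parses the string and does not reproduce how GitHub actually fetches or builds the action.

```bash
# describe_uses <value of a workflow "uses" field>
# Hypothetical helper: prints which of the three reference forms is used.
describe_uses() {
  local uses="$1"
  case "$uses" in
    docker://*)
      # Prebuilt image pulled from a registry such as Docker Hub.
      echo "image ${uses#docker://}"
      ;;
    ./*)
      # Dockerfile stored in the same repository as the workflow.
      echo "local ${uses#./}"
      ;;
    */*@*)
      # owner/repo[/path]@ref: Dockerfile in another repository at a git ref.
      echo "repo ${uses%@*} ref ${uses##*@}"
      ;;
    *)
      echo "unknown ${uses}"
      return 1
      ;;
  esac
}
```

The `docker://` case is checked first because an image reference also contains slashes.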

GitOps takes a different approach to how you define and deploy your workloads.

In the GitOps model, the state of your Kubernetes cluster(s) is kept in a dedicated git repo (I will refer to this as the config repository). That means app deployments, Helm releases, network policies and any other Kubernetes resources, custom resources included, are managed from a single repo that defines your cluster's desired state.

If you look at the GKE CI/CD example made by GitHub, the deployment file lives in the same repo as the app code, and the Docker image tag is injected from an environment variable. This works fine until you run into a cluster meltdown or you need to roll back a deployment to a previous version. In case of a major incident you have to rerun the last deployment workflow in every app repo, and even then you won't necessarily end up with the same state as before, since you'll be building and deploying new images.

GitOps solves this problem by reapplying the config repo every time the cluster diverges from the state defined in git. For this to work, you'll need a GitOps operator running in your cluster and a container registry to which GitHub Actions publishes immutable images (no latest tags; use semantic versioning or the git SHA).
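The "immutable images only" rule can also be checked before anything is pushed. Below is a minimal sketch of such a guard, assuming the two tag shapes used in this post: a plain semantic version (1.3.2) or a branch name followed by a short git SHA (master-a9a1252). The function `is_immutable_tag` is hypothetical, not part of any of the tools mentioned here.

```bash
# is_immutable_tag <tag>
# Hypothetical pre-push guard: succeeds only for tags that identify a single
# build. Moving tags such as "latest" or a bare branch name are rejected.
is_immutable_tag() {
  local tag="$1"
  local semver='^[0-9]+\.[0-9]+\.[0-9]+$'
  # Branch name, a dash, then a 7 to 40 character hex git SHA.
  local branch_sha='^[A-Za-z0-9._-]+-[0-9a-f]{7,40}$'
  [[ "$tag" =~ $semver || "$tag" =~ $branch_sha ]]
}
```

For example, `is_immutable_tag latest || { echo "refusing to push a mutable tag" >&2; exit 1; }` would stop a build step that is about to publish `latest`.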

I will be using gh-actions-demo to demonstrate a GitOps pipeline including promoting releases between environments.

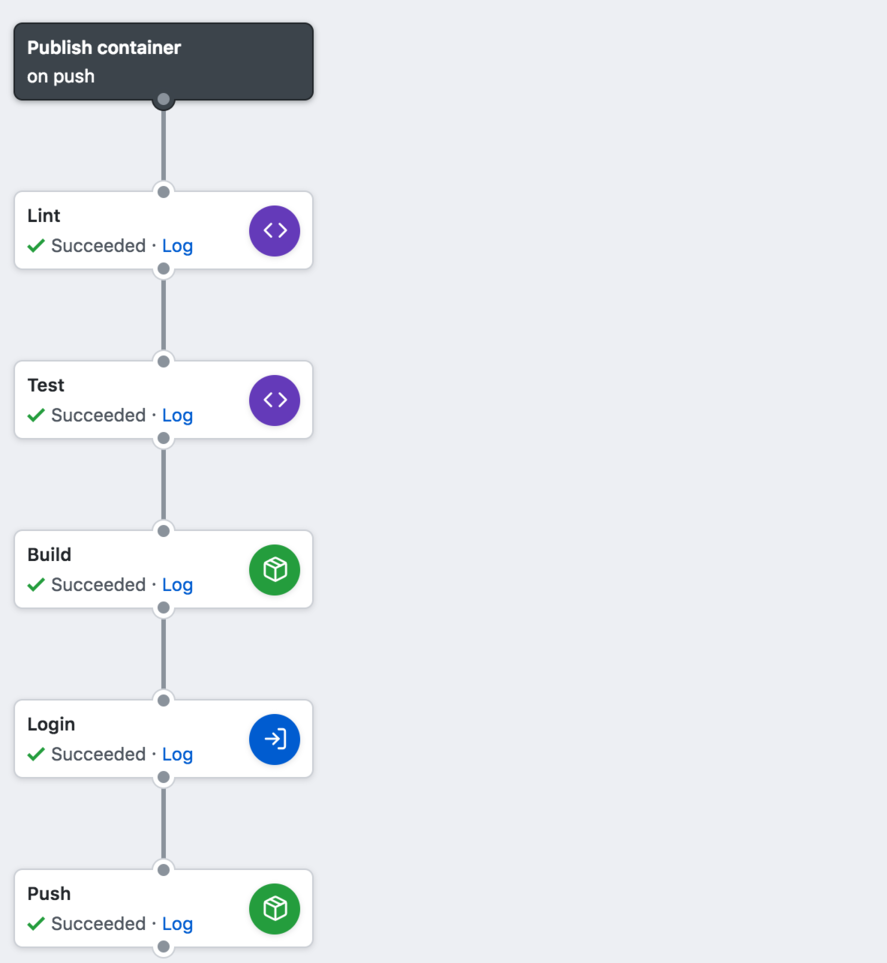

This is how the GitHub workflow looks for a golang app:

When commits are pushed to the master branch, the GitHub workflow produces a container image tagged as repo/app:branch-commitsha.

When you create a GitHub release, the build action tags the container image as repo/app:git-tag.

The GitHub actions used in this workflow can be found here.

```hcl
workflow "Publish container" {
  on = "push"
  resolves = ["Push"]
}

action "Lint" {
  uses = "./.github/actions/golang"
  args = "fmt"
}

action "Test" {
  needs = ["Lint"]
  uses = "./.github/actions/golang"
  args = "test"
}

action "Build" {
  needs = ["Test"]
  uses = "./.github/actions/docker"
  secrets = ["DOCKER_IMAGE"]
  args = ["build", "Dockerfile"]
}

action "Login" {
  needs = ["Build"]
  uses = "actions/docker/login@master"
  secrets = ["DOCKER_USERNAME", "DOCKER_PASSWORD"]
}

action "Push" {
  needs = ["Login"]
  uses = "./.github/actions/docker"
  secrets = ["DOCKER_IMAGE"]
  args = "push"
}
```

If you have access to GitHub Actions, here is how you can bootstrap a private repository with the above workflow.
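The docker action linked above implements the tagging scheme (branch-commitsha for pushes to master, the git tag for releases); its code is not reproduced here. As a sketch, assuming the tag is derived from the `GITHUB_REF` and `GITHUB_SHA` environment variables that GitHub sets for each action, and that `DOCKER_IMAGE` (the secret in the workflow) holds the image name, the logic could look like this. The function names are hypothetical.

```bash
# image_tag <git ref> <commit sha>
#   refs/tags/1.3.2   -> 1.3.2
#   refs/heads/master -> master-<first 7 characters of the sha>
image_tag() {
  local ref="$1" sha="$2"
  case "$ref" in
    refs/tags/*)
      echo "${ref#refs/tags/}"
      ;;
    refs/heads/*)
      local branch="${ref#refs/heads/}"
      # Docker tags cannot contain "/", so feature/x becomes feature-x.
      echo "${branch//\//-}-${sha:0:7}"
      ;;
    *)
      echo "unsupported ref: ${ref}" >&2
      return 1
      ;;
  esac
}

# image_ref <git ref> <commit sha>: full reference such as
# stefanprodan/podinfo:master-a9a1252, built from the DOCKER_IMAGE secret.
image_ref() {
  if [[ -z "${DOCKER_IMAGE:-}" ]]; then
    echo "DOCKER_IMAGE is not set" >&2
    return 1
  fi
  local tag
  tag="$(image_tag "$1" "$2")" || return 1
  echo "${DOCKER_IMAGE}:${tag}"
}
```

Inside an action this would be called as `image_ref "$GITHUB_REF" "$GITHUB_SHA"` and the result passed to `docker build -t` and `docker push`.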

Now that the code repository workflow produces immutable container images, let's create a config repository with the following structure:

```text
├── namespaces
│   ├── production.yaml
│   └── staging.yaml
└── workloads
    ├── production
    │   ├── deployment.yaml
    │   └── service.yaml
    └── staging
        ├── deployment.yaml
        └── service.yaml
```

You can find the repository at gh-actions-demo-cluster.

The cluster repo contains two namespaces and the deployment and service YAMLs for my demo app. The staging deployment is for when I push to the master branch, and the production one is for when I do a GitHub release.

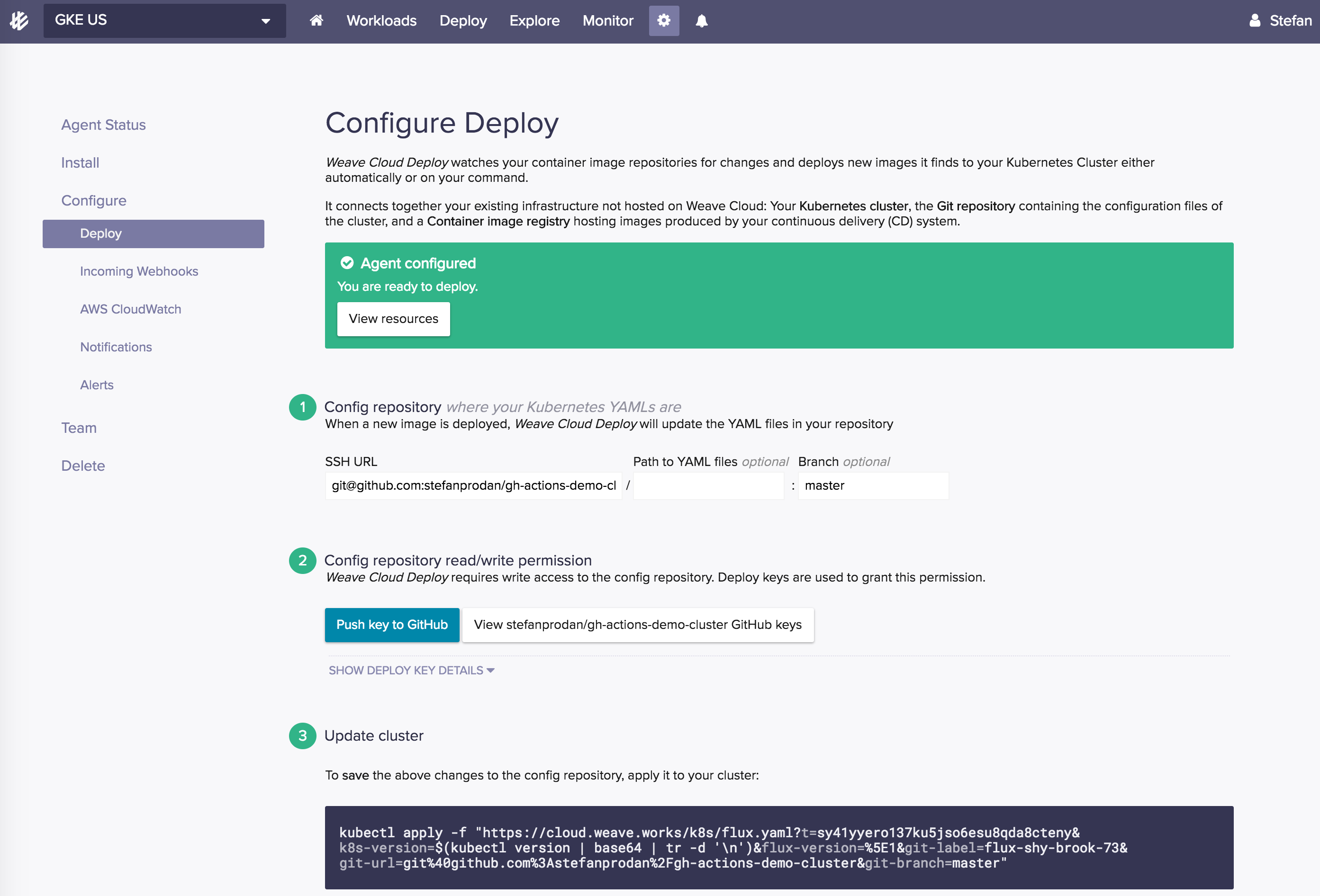
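If you want to start a similar config repo from scratch, a small script can lay out the same directories. This is a sketch: `scaffold_config_repo` is a hypothetical helper that writes a minimal Namespace manifest per environment and leaves the deployment and service files empty, to be filled in by hand.

```bash
# scaffold_config_repo <dir>
# Hypothetical helper: creates the namespaces/ and workloads/ layout shown
# above with a minimal Namespace manifest for each environment.
scaffold_config_repo() {
  local root="$1" env
  for env in production staging; do
    mkdir -p "$root/namespaces" "$root/workloads/$env"
    printf 'apiVersion: v1\nkind: Namespace\nmetadata:\n  name: %s\n' "$env" \
      > "$root/namespaces/$env.yaml"
    # Workload manifests start empty.
    touch "$root/workloads/$env/deployment.yaml" "$root/workloads/$env/service.yaml"
  done
}
```

Running `git init` in the resulting directory and pushing it to GitHub gives the operator something to point at.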

In order to create these objects on my cluster, I'll install the Weave Cloud agents and connect the GitOps operator to my cluster repo.

When Weave Cloud Deploy runs for the first time, it will apply all the YAMLs inside the repo: namespaces first, then the services and finally the deployments.

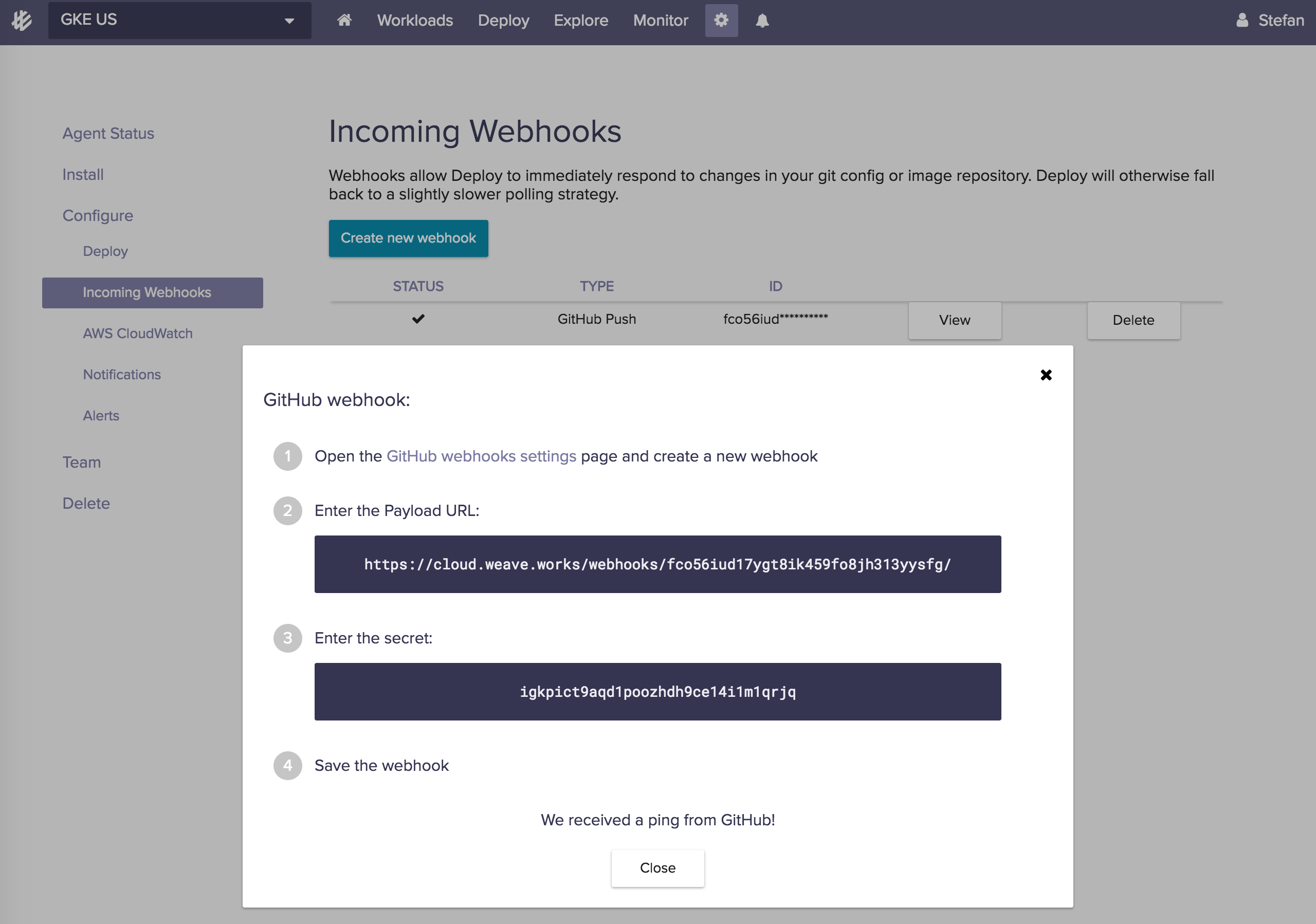
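The first-run ordering described above (namespaces, then services, then deployments) matters because namespaced objects can only be created once their namespace exists. The sketch below only prints the manifests of this repo layout in that order; it illustrates the ordering and is not how Weave Cloud Deploy is implemented. `ordered_manifests` is a hypothetical name.

```bash
# ordered_manifests <config repo dir>
# Prints namespace manifests first, then all services, then all deployments.
ordered_manifests() {
  local root="$1" f
  for f in "$root"/namespaces/*.yaml \
           "$root"/workloads/*/service.yaml \
           "$root"/workloads/*/deployment.yaml; do
    # Skip patterns that matched nothing (bash leaves them unexpanded).
    if [[ -e "$f" ]]; then
      echo "$f"
    fi
  done
}
```

Applied by hand, that would be something like `ordered_manifests . | xargs -n 1 kubectl apply -f`.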

Weave Cloud Deploy pulls and applies any changes made to the repository every five minutes. To trigger it on every commit, I can set up a GitHub webhook from the UI:

Now that my workloads are running in both namespaces, I want to automate the deployment so that every time I push to the master branch the resulting image runs on staging, and every time I do a GitHub release the production workload is updated.

Weave Cloud Deploy allows you to define automated deployment policies based on container image tags. These policies are specified using annotations on the deployment YAML.

To automate the master branch deployment, I can edit workloads/staging/deployment.yaml and create a glob filter:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: podinfo
  namespace: staging
  annotations:
    flux.weave.works/tag.podinfod: glob:master-*
    flux.weave.works/automated: 'true'
spec:
  # selector, labels and other fields omitted for brevity
  template:
    spec:
      containers:
        - name: podinfod
          image: stefanprodan/podinfo:master-a9a1252
```

For GitHub releases, I can create a semantic version filter and instruct Weave Cloud Deploy to update my production deployment on every patch release:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: podinfo
  namespace: production
  annotations:
    flux.weave.works/tag.podinfod: semver:~1.3
    flux.weave.works/automated: 'true'
spec:
  # selector, labels and other fields omitted for brevity
  template:
    spec:
      containers:
        - name: podinfod
          image: stefanprodan/podinfo:1.3.0
```

If I push commits to the demo app's master branch, GitHub Actions will publish an image such as stefanprodan/podinfo:master-48761af, and Weave Cloud will update the cluster repo and deploy that image to the staging namespace.

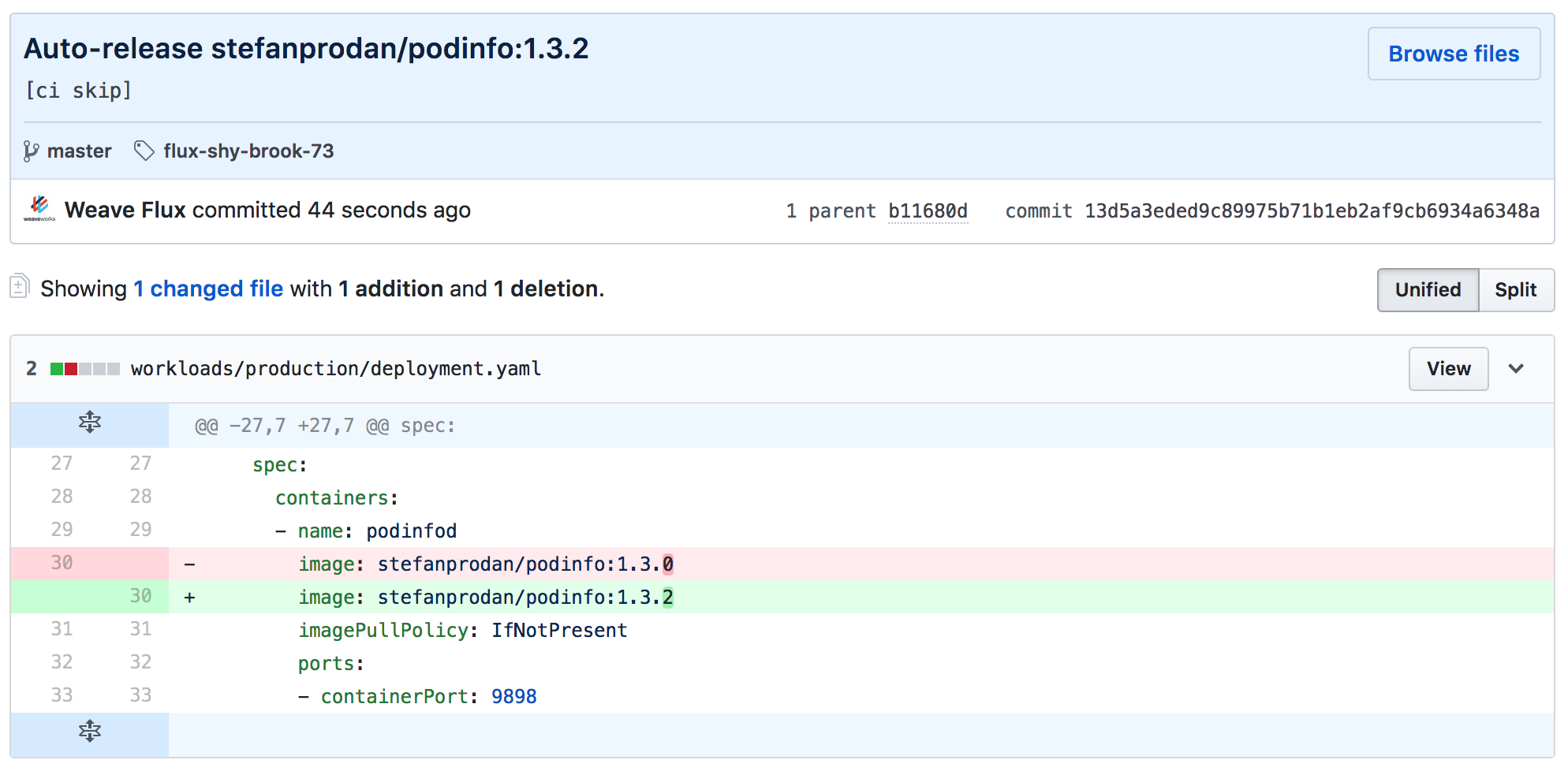
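The `glob:master-*` policy keeps every tag that matches the pattern. The sketch below shows only that matching step with a hypothetical `match_glob` function; choosing which of the matching images is the newest is left to the GitOps operator and is not modelled here. For a simple pattern like `master-*` shell globbing behaves as expected, but the operator's own matcher may treat other metacharacters differently.

```bash
# match_glob <pattern> <tag>...
# Hypothetical helper: prints the tags that match a shell-style glob pattern.
match_glob() {
  local pattern="$1" tag
  shift
  for tag in "$@"; do
    # An unquoted right-hand side of == inside [[ ]] is matched as a glob.
    if [[ "$tag" == $pattern ]]; then
      echo "$tag"
    fi
  done
}
```

With the tags from this post, `match_glob 'master-*' master-a9a1252 1.3.0 master-48761af` keeps both master builds and drops the release tag.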

If I make a new release by pushing a tag to my demo app repo with git tag 1.3.2 && git push origin 1.3.2, GitHub Actions will test, build and push a container image tagged stefanprodan/podinfo:1.3.2 to the registry.

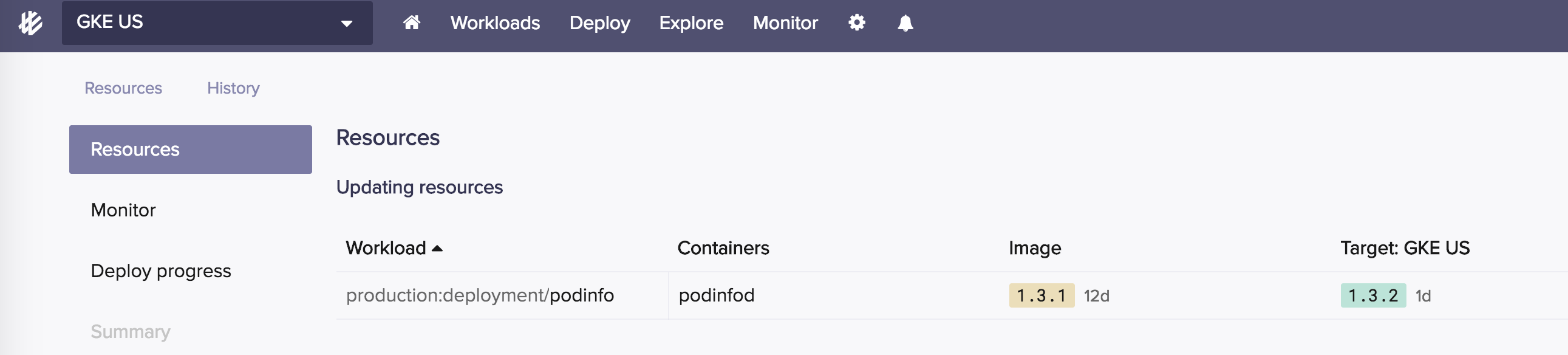

Weave Cloud Deploy will fetch the new image tag from Docker Hub, update the production deployment in the cluster repo and apply the new deployment spec.
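As I understand the `~` operator, `semver:~1.3` accepts any version from 1.3.0 up to, but not including, 1.4.0, and the highest one wins. The hypothetical `latest_tilde` function below sketches that selection for plain MAJOR.MINOR.PATCH tags, ignoring pre-releases and non-semver tags such as master-48761af. It relies on `sort -V` (version sort), which GNU coreutils provides.

```bash
# latest_tilde <major.minor> <tag>...
# Hypothetical helper: prints the highest MAJOR.MINOR.PATCH tag within the
# given major.minor line, i.e. what a ~major.minor range would select.
latest_tilde() {
  local major="${1%%.*}" minor="${1#*.}" tag
  shift
  local re='^([0-9]+)\.([0-9]+)\.([0-9]+)$'
  for tag in "$@"; do
    if [[ "$tag" =~ $re ]] &&
       [[ "${BASH_REMATCH[1]}" == "$major" && "${BASH_REMATCH[2]}" == "$minor" ]]; then
      echo "$tag"
    fi
  done | sort -V | tail -n 1
}
```

Version sort matters here: a plain lexical sort would rank 1.3.2 above 1.3.10.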

Now, if I want to roll back this deployment, I can revert the git commit or use Weave Cloud to do a manual release of a previous version:

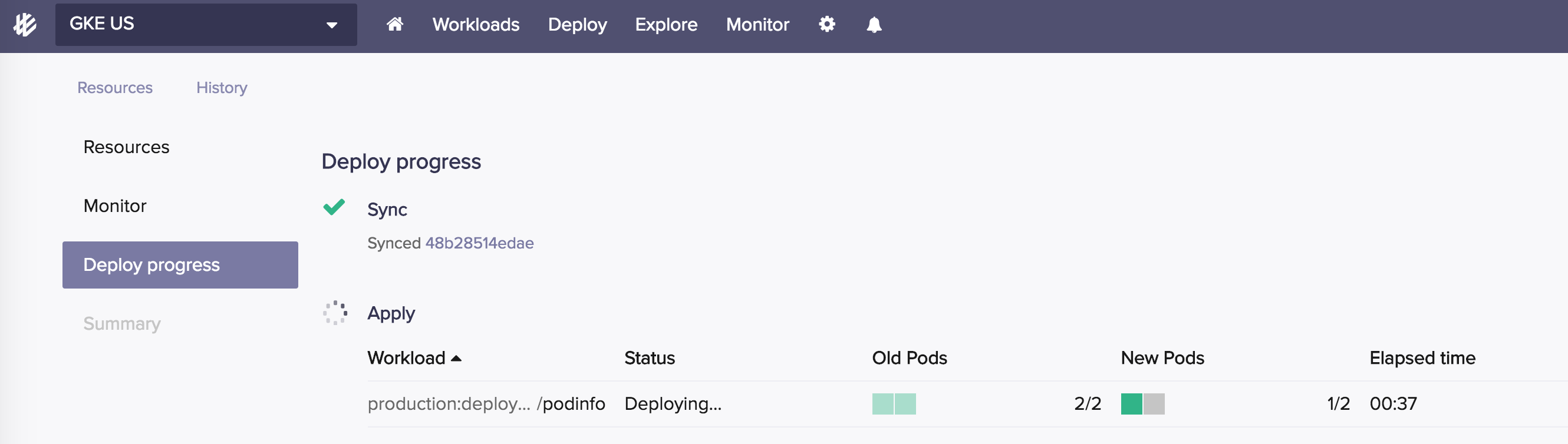
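Whichever route you take, the change recorded in the config repo amounts to pointing the container image at another tag. The hypothetical `set_image_tag` helper below makes that edit with `sed`, assuming one `image: <repository>:<tag>` line per container as in the manifests above; a real operator edits the YAML structurally rather than with a regular expression.

```bash
# set_image_tag <deployment.yaml> <image repository> <tag>
# Hypothetical helper: rewrites "image: <repository>:<old tag>" lines to use
# the given tag. The repository name is used inside a regular expression.
set_image_tag() {
  local file="$1" repo="$2" tag="$3" tmp
  tmp="$(mktemp)"
  sed -E "s|^([[:space:]]*(- )?image: ${repo}):[^[:space:]]+\$|\1:${tag}|" "$file" > "$tmp"
  # Copy back instead of mv so the manifest keeps its file permissions.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}
```

For example, `set_image_tag workloads/production/deployment.yaml stefanprodan/podinfo 1.3.0` followed by `git commit -am "Roll back podinfo to 1.3.0" && git push` would leave the operator to apply the older version on its next sync.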

Weave Cloud will commit the new image tag to git and will report the deployment progress: