-

Notifications

You must be signed in to change notification settings - Fork 38

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Use pytest to populate parameterized test instances #36

Conversation

|

One outstanding issue: the parent test in the summary window will have its result based on the most recent run: if only a passing set of instances were run, it will show green, even if there are failing instances from previous runs. |

I think a better non-hacky solution here would be for neotest core to support tests without a location: if |

Here's a rough go at a more generic version of that (there are a bunch of other places that would also need to use this, this is just illustrative!): --- a/lua/neotest/client/init.lua

+++ b/lua/neotest/client/init.lua

@@ -122,6 +122,10 @@ function NeotestClient:get_nearest(file_path, row, args)

local nearest

for _, pos in positions:iter_nodes() do

local data = pos:data()

+ if not data.range then

+ pos = pos:closest_parent_with("range")

+ data = pos:data()

+ end

if data.range and data.range[1] <= row then

nearest = pos

else

diff --git a/lua/neotest/types/tree.lua b/lua/neotest/types/tree.lua

index b0ae7af..d2dd470 100644

--- a/lua/neotest/types/tree.lua

+++ b/lua/neotest/types/tree.lua

@@ -144,6 +144,14 @@ function Tree:iter_parents()

end

end

+function Tree:closest_parent_with(data_attr)

+ for parent in self:iter_parents() do

+ if parent:data()[data_attr] then

+ return parent

+ end

+ end

+end

+

---@return neotest.Tree

function Tree:root()

local node = self |

|

@rcarriga What do you think to this approach, of shelling out to pytest to get the test instances? If you're happy with it at a high-level, I can work through this code to make it a bit more robust. (No rush on a response, I totally understand that you have other things going on in your life!) |

|

I think this approach makes total sense, I hadn't thought of the complexities of parameters in pytest. I also like making the ranges optional, it's an elegant way of saying that a test doesn't exist statically 👍 When I initially started writing this plugin, I was actually going to use pytest for discovery as that's what VSCode does, but I found it to be crazy slow for some projects and unreliable in that if there was error during discovery, the whole thing would fall over and nothing would be found. Your solution here solves the latter, since only parameters would be missing if it failed, which is great! The performance is still a concern for me. I think there are some things we can do to address it though.

This is just me spitballing right now though! I'd be interested to see performance as is. I'll have a test myself when I get a chance but if you're interested, you can run the benchmark consumer (hopefully, I don't think anyone else has ever used it) which will tell you how long it took to parse a project. This is really only useful on large projects, I like to use the cpython repo. nvim --headless -c 'lua require("neotest").benchmark.start()' |

Excellent!

I've actually had second thoughts on this: I've opened nvim-neotest/neotest#147 and will comment more over there in a minute.

Agreed, the performance is definitely a problem at the moment!

Yep, though I think I'd like to make this configurable: pytest fixtures can be parameterised, and do not have to be defined in the same test file (or even Python module) as the tests themselves, so we can't reliably determine even the existence of instances via TS. I think three states (names invented right now and subject to change) make sense:

I definitely like the idea of shifting more of this over into Python (and not only because I know Python much better than Lua 😅). However, instead of integrating those positions into the tree earlier, I was thinking that a good way of addressing performance issues might be to introduce two-phase position discovery: neotest core would call The code in this PR would be modified so that Does that make any sense with how the core/rendering is currently structured?

Yeah, this would be very cool! I reached out to #pytest on IRC, and they don't know of any existing project which does something like that: an interesting area for research!

Well..... running in the CPython repo kinda forkbombs my machine as currently implemented 😅. I've added code to only invoke Currently, we invoke pytest per-test-file: there are 742 |

|

I've just pushed up a change which will only run With this change, I'm seeing CPython benchmark times with pytest drop to ~5s, as we would expect. pytest (the codebase) benchmark times are ~5s: a grep suggests that there are 41 (of 108) |

Instead of adding a new adapter method for this, neotest core could accept an iterator as the return value of

Fantastic 😆

Brilliant, not impacting people that don't need this is my top concern, once we can allow users to not be affected by performance problems, it becomes much easier to do this iteratively. |

I'm experimenting with this now: --- a/lua/neotest/client/init.lua

+++ b/lua/neotest/client/init.lua

@@ -261,13 +261,19 @@ function NeotestClient:_update_positions(path, args)

self:_parse_files(adapter_id, path, files)

else

logger.info("Parsing", path)

- local positions = adapter.discover_positions(path)

- if not positions then

+ local found = false

+ for positions in adapter.discover_positions(path) do

+ if positions then

+ found = true

+ logger.debug("Found", positions)

+ logger.info("FOUND", path)

+ self._state:update_positions(adapter_id, positions)

+ logger.info("POST-UPDATE", path)

+ end

+ end

+ if not found then

logger.info("No positions found in", path)

- return

end

- logger.debug("Found", positions)

- self._state:update_positions(adapter_id, positions)

end

end)

if not success thenWith corresponding local neotest-python changes to (a) correctly return an iterator with two steps, and (b) sleep 5s in the pytest execution to make it easier to see if we're getting two-phase updates. I see multiple calls to After a bit more experimentation, adding |

I'm not 100% sure why, but output after the first load of a file appears to go to stderr.

pytest parameterized tests don't have a distinct location: don't focus

them based on cursor movement.

Requires this neotest patch:

--- a/lua/neotest/client/init.lua

+++ b/lua/neotest/client/init.lua

@@ -123,7 +123,9 @@ function NeotestClient:get_nearest(file_path, row, args)

for _, pos in positions:iter_nodes() do

local data = pos:data()

if data.range and data.range[1] <= row then

- nearest = pos

+ if not data.should_skip then

+ nearest = pos

+ end

else

return nearest, adapter_id

end

Paths can include pattern-matching characters (such as "-"), so we switch to `string.find` which allows us to disable pattern-matching.

|

I was thinking of instead of waiting for all of the parsing to complete before returning from local positions_iter = adapter.discover_positions(path)

local positions = positions_iter()

if not positions then

logger.info("No positions found in", path)

return

end

logger.debug("Found", positions)

self._state:update_positions(adapter_id, positions)

async.run(function()

local positions = positions_iter()

while positions do

self._state:update_positions(adapter_id, positions)

positions = positions_iter()

end

end)We might need some sort of way of stopping the async function so that it doesn't overwrite newer results |

Non-pytest projects won't have it available.

Just pushed this change up! This is using module-level state (in the new module), so if you'd prefer to handle that differently, let me know what you'd like to see. |

|

That's fine, it's relatively simple so it'll be easy to remove 👍 I'll give a review when I get a chance 😄 |

Some fixes: - Parsing of parameters doesn't rely on regex so it's less brittle - Removes `goto` statements which aren't guaranteed to be available Some refactoring: - Switches to using a lib function to run the process and collect output. - Doesn't launch process and then collect results after parsing is complete. The parsing for a single file is so fast that there's not much advantage of doing this. This change allows us to remove module state and simplify the code - Renamed some variables/functions. This was just some very subjective changes that helped me understand a bit better but not enforcing these changes.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

So I've tested and reviewed. Got a bit stuck in with it when testing and ending up making some changes so I've created a PR to address the comments so feel free to take what you like from that (OddBloke#1) 😄

My PR requires some changes in latest neotest master so be sure to rebase if using it

lua/neotest-python/pytest.lua

Outdated

| pty = true, | ||

| on_stdout = function(_, data) | ||

| for _, line in pairs(data) do | ||

| local test_id, param_id = string.match(line, "(.+::.+)(%[.+%])\r?") |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This breaks if the parameters contains square brackets. Tested with https://github.com/pydantic/pydantic/blob/1ac1199f08c42803dc7c5e6fae754afd53e3f1b4/tests/test_utils.py#L105.

lua/neotest-python/pytest.lua

Outdated

| for _, value in positions:iter_nodes() do | ||

| local data = value:data() | ||

| if data.type ~= "test" then | ||

| goto continue |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Can't use goto neovim/neovim#19849

lua/neotest-python/pytest.lua

Outdated

| local pytest_job = pytest_jobs[path] | ||

| if pytest_job then | ||

| -- Wait for pytest to complete, and merge its results into the TS tree | ||

| async.fn.jobwait({ pytest_job }) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Pretty sure this blocks the entire editor

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yeah, it does, I have a fix for that locally.

lua/neotest-python/pytest.lua

Outdated

| test_instances[path] = {} | ||

| local test_instances_for_path = test_instances[path] | ||

| _, pytest_jobs[path] = pcall(async.fn.jobstart, cmd, { | ||

| pty = true, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Is there a reason to set pty = true?

|

Hey @OddBloke just wanted to check if you're still interested in continuing this PR 😄 It'd be great to get this functionality in so if you're unable, I'd be happy to take it on. |

|

Hey, this ping was timed well: this is a hack week at work, so let me see if I can get my head back into this and push it over the line! |

|

I've merged @rcarriga's suggested changes PR and done some manual testing and, with nvim-neotest/neotest#304, this seems to be working well! There is still something funky going on with status markers: only the latest defined of the child tests which have run updates the marker. So if you run the entire set of children, the last result will be the only one reflected: if you then run any child but the last one, the status marker does not even update to reflect that the test is running. If you run individual children (from the summary window), then the status marker is updated iff you haven't run any later-listed children. |

|

(That doesn't feel like it necessarily need to block landing this, so I'm marking this ready for review.) |

|

I've filed nvim-neotest/neotest#305 for the aforementioned funkiness. |

|

Sorry I haven't had much time to work on personal projects so haven't had a chance to look at this. Thank you for picking this back up and the additional fixes! This all looks great and works perfectly AFAICT so time to merge! |

|

@rcarriga Hm, this change seems to be showing all Python parametrize as failed now :( |

Can confirm. Parametrized tests run perfectly, but are shown as failed. |

This fixed it for me:

There's a snippet on this at the bottom of the readme on this repo. The downside is testing is not as snappy. |

Thanks for the hint! Right in plain sight, sorry for not seeing this obvious fix :) |

|

There was a bug that has now been fixed so you can set |

(This is very rough, so I'm opening it as draft: this is the first Lua I've written that wasn't neovim configuration, so it will need substantial rework before it can land!)

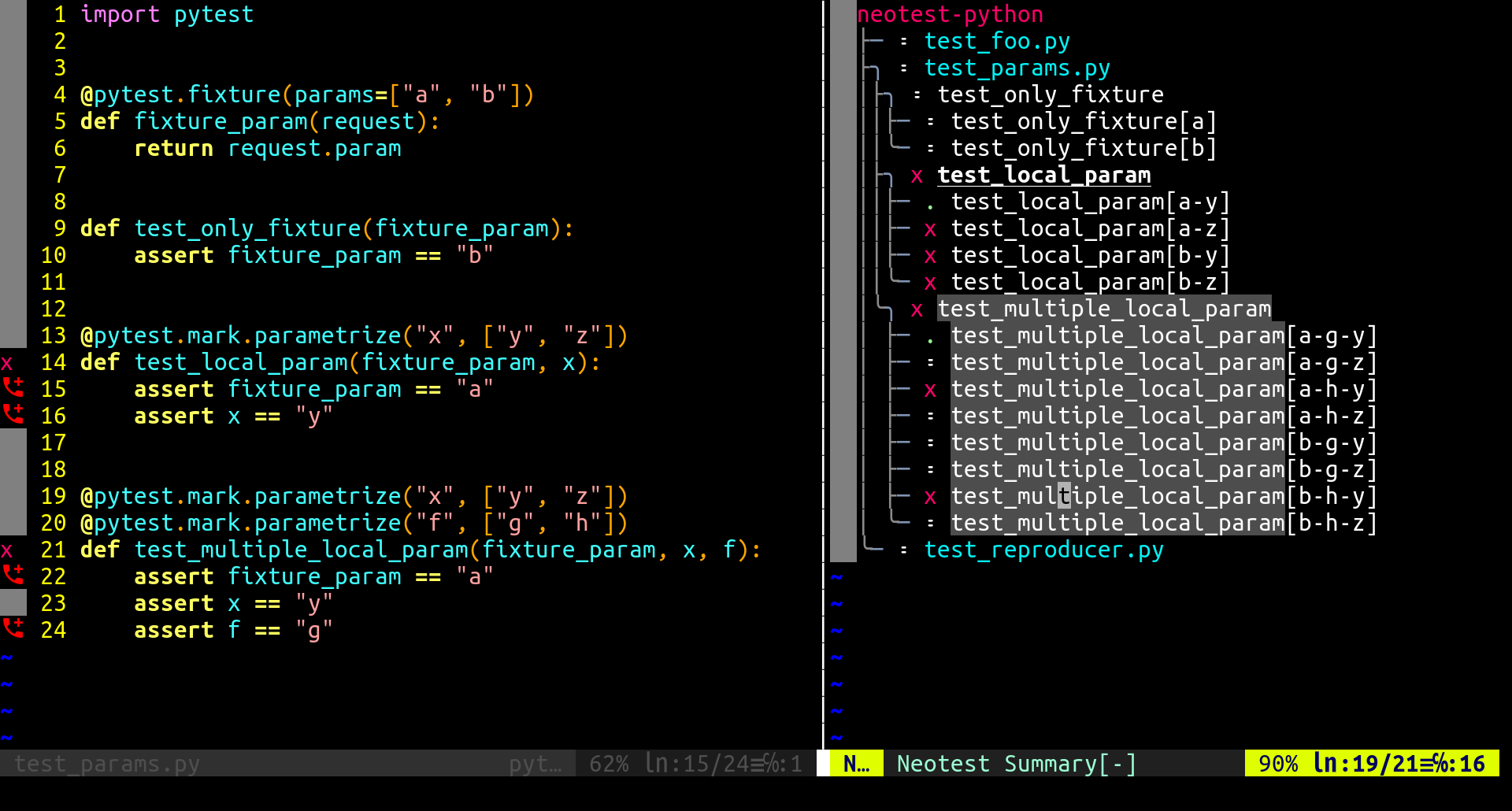

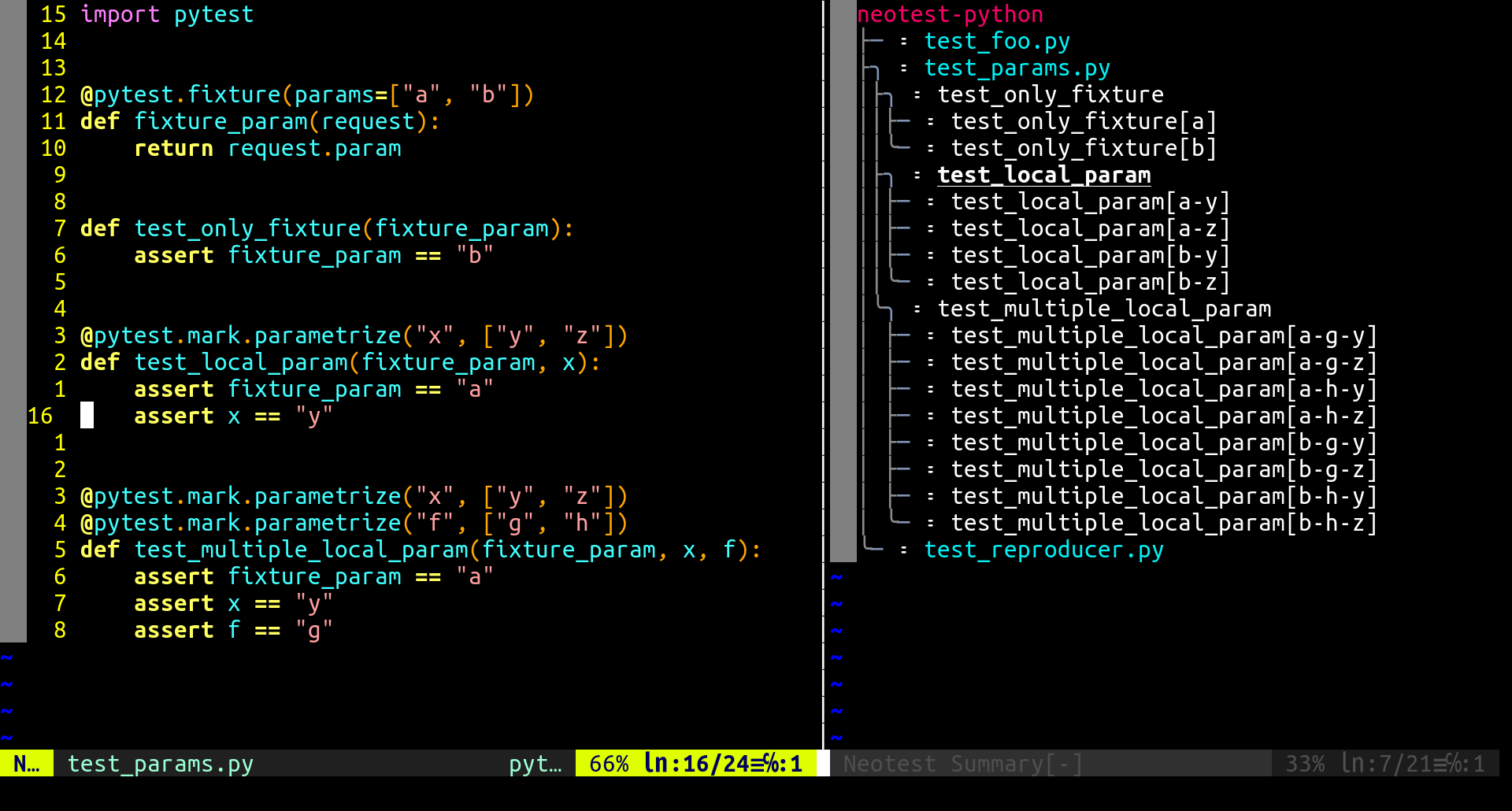

These commits modify

PythonNeotestAdapter.discover_positions(path)to:pytest --collect-only <path>(vianeotest_python.py)[a-b-c])This would fix #35

It also includes one modification to get the UX to work correctly: individual test instances aren't tied to locations in

pytest, as they can be generated by the cross-product of several decorators and/or fixtures. It follows that the cursor position should still select the parent test, and not the test instances. As far as I can tell, this isn't currently achievable in neotest: settingrange = nilon the position results in all sorts of errors, and leaving it the same as the parent test results in the last test instance being focused.With this hack to neotest core, and 602a0ac, positions can declare that they should be excluded from focus (a better variable name would not go amiss, but it's getting late!):

This results in the parameters being displayed in the summary, while the parent test is selected:

neotest.run.runin a test definition will run all test instances:And you can selectively run individual test instances from the summary window (the highlighting of the test name is from another plugin, oopsie):