Feature/support helm in release manager #916


|

Alright ... I have silently watched this for a while ... first off, kudos for the nice work! I thought about the Helm integration on a more general note, and I'm going to throw a bit of a curve ball to you ;) My basic question is: do you even want to make the Helm integration work like the Tailor integration? The Tailor integration is very "Deployment(Config) centric", because:

However, I think long-term you want to be in a place where you can basically deploy any Helm chart. This means that you do not know which resources are your deployment units (think CRDs from operators, like, for example, a "Cluster" resource for a Postgres cluster). So I am wondering if it wouldn't be nice to have the Helm "branch" somewhat separated from the Tailor part. In the Helm "branch", I would question which checks are really needed (e.g. the checks that Deployments are controlled by the release manager are probably not needed at all because the Helm chart cannot be exported from the DEV namespace, so by definition all things are version controlled). Or, is it really needed to collect pod data like IP addresses to put them into the GxP templates when IP addresses may change anyway? Maybe we can use other evidence instead which is better suited and easier to get? So - just some food for thought. I am aware this would probably blow up the scope of this PR - but it may also make a few things easier that were difficult because of the current constraints? |

|

I'll add another convenience method to be able to import external images. Since we still require images to be owned by the OpenShift registry that should be quite helpful anytime people use a chart from the interwebs. |

|

And, finally!, the tests pass again. Yay, I guess! |


@serverhorror - see the comments

docs/modules/jenkins-shared-library/partials/odsComponentStageCopyImage.adoc



Martin - one big thing is the tests... we should write a bunch of tests all around this?

|

From our conversation yesterday @clemensutschig:

I hope this is helpful, let me know if there are other questions that I may help with! |

There is (well: already was) a test to deploy helm via the component pipeline. You're right, adding a few more tests is probably useful @michaelsauter |

|

@michaelsauter - thanks for the hard challenge. This helps a lot.

|

@@ -137,8 +137,11 @@ class OpenShiftService {
         valuesFiles.collect { upgradeFlags << "-f ${it}".toString() }
         values.collect { k, v -> upgradeFlags << "--set ${k}=${v}".toString() }
         if (withDiff) {
-            def diffFlags = upgradeFlags.findAll { it != '--atomic' }
+            def diffFlags = upgradeFlags.findAll { it != '--atomic' || it != '--three-way-merge'}


I do not understand this line. You wouldn't need to check since you handle the case that --three-way-merge is already given down below, no? Apart from that, --three-way-merge isn't a known flag for helm upgrade.

--atomic is filtered out because it is a known flag for helm upgrade, but it is not handled by helm diff. But as I said, I think it's better to set the env var to ignore unknown flags in diff; otherwise you prevent most upgrade flags from being used, as they would break helm diffing.
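
A side note on the changed line, as a minimal Groovy sketch: the `||` predicate in the diff is true for every string, so it filters nothing. The flag list below is hypothetical (only `--atomic` and `--three-way-merge` come from the diff), and the `excluded` name is an assumption for illustration, not the library's code.

```groovy
// Hypothetical flag list; only '--atomic' and '--three-way-merge' appear in the diff.
def upgradeFlags = ['--install', '--atomic', '--three-way-merge', '-f values.yaml']

// As written in the diff: every string differs from at least one of the two
// literals, so the closure is always true and no flag is removed.
def unfiltered = upgradeFlags.findAll { it != '--atomic' || it != '--three-way-merge' }
assert unfiltered == upgradeFlags

// Excluding several flags needs a conjunction, or more readably a membership test.
def excluded = ['--atomic', '--three-way-merge']
def diffFlags = upgradeFlags.findAll { !(it in excluded) }
assert diffFlags == ['--install', '-f values.yaml']
```

If helm diff is instead told to ignore unknown flags, as suggested in the comment above, this kind of filtering would not be needed at all.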

@michaelsauter I'm not sure that this is something the library should do -- I will mention it in the docs. I think that makes sense. The case I know about is:

OPEN

DONE

Added documentation in 88702bc

we picked the same model that was implemented for tailor :) |

|

I think this is ready to be merged .. @metmajer @michaelsauter - for a very big first cut - feature-wise it's on par with what tailor does / did

Thanks for all the help I received here. Should I open a new issue to craft a quickstarter that uses helm so people see how it would work? |

This warrants a bigger discussion - how we want to handle this ... :/ (e.g. do it for all quickstarters in one shot, .. or a flag, .. or) |

This fixes calls such as:

```
+ tailor --verbose --non-interactive \
  -n play-test apply --selector app=example-component-name \
  --exclude bc,is \
  --param ODS_OPENSHIFT_APP_DOMAIN=apps.example.invalid \
  --param ODS_OPENSHIFT_APP_DOMAIN=apps.example.invalid \
  --verify --ignore-unknown-parameters
```
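
The call in this commit message passes the same `--param` twice. The commit message does not show how the duplicate was removed. As a minimal sketch only, assuming the parameters are collected in a Groovy list (the `params` and `deduped` names are hypothetical), de-duplication could look like this:

```groovy
// Hypothetical input mirroring the duplicated flag from the commit message.
def params = [
    '--param ODS_OPENSHIFT_APP_DOMAIN=apps.example.invalid',
    '--param ODS_OPENSHIFT_APP_DOMAIN=apps.example.invalid',
]

// unique(false) returns a new list and leaves the input list untouched.
def deduped = params.unique(false)
assert deduped == ['--param ODS_OPENSHIFT_APP_DOMAIN=apps.example.invalid']
assert params.size() == 2
```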

…elm-in-release-manager

Use same paths as ScanWithSonarStage see: * opendevstack#916 (comment)

|

IMO this is ready to merge. I see there have been build errors that should be solved first. |

I have fixed this with your requests for the SonarQube changes. All checks are passing again, see: |


Well done, @serverhorror, kudos for the hard work and contribution :) Please wait with the merge until our test cases indicate success. We are working on it and will let you know soon. Then, let's please ensure we squash-merge this thing :)

I can't even merge, I don't have the technical permissions :) |

|

@metmajer -- I went through the cases with your tester today. I think it looks good. Can we please merge this before the year ends? I would really, really appreciate it if the open and merge happened in the same year, and I pinky swear not to open another PR this close to the year's end :) |

|

@serverhorror appreciate it or not, but this contribution is critical enough to deserve a validation through a test. The test case might be partly defined already, but has not been executed yet. Once we have a green light, your contribution is ready to be merged. Otherwise, we taint |

|

To add more context to this conversation and hopefully clarify: the PR by @serverhorror is most welcome and will be released early next year. Therefore, we are not under pressure of merging this code into |

|

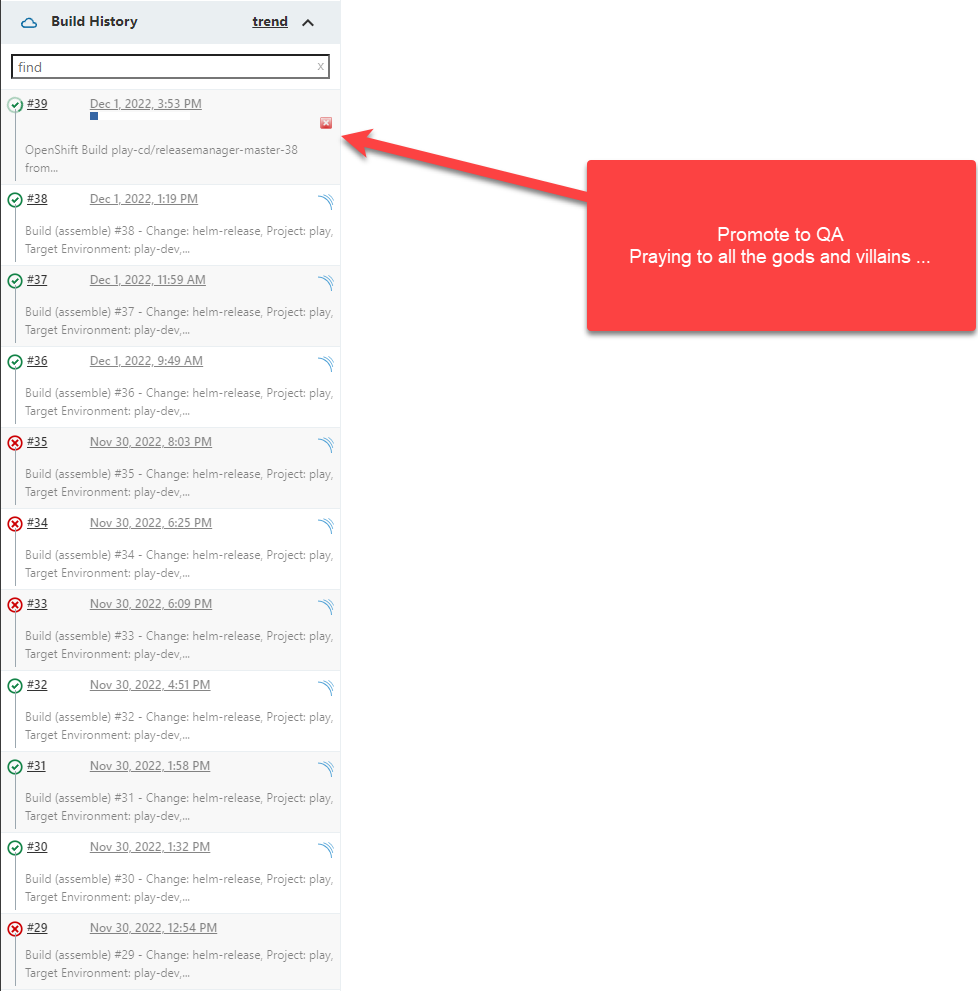

Over the last weeks, @serverhorror @jafarre-bi @albertpuente and others worked on triaging random errors during pipeline executions using Helm. We didn't see any clear evidence that this was related to Helm directly but needed a better understanding of the overall stability to decide on the next steps. When the team was confident about the final result, we decided to consider the implementation Done and to give the merge a go. Thanks for everyone's patience. Some contributions need a close look to ensure the quality of the resulting feature. This was certainly the case here. Well done, everyone! |

first cut of HELM support, which also once and for all alleviates the DeploymentConfig limitations

This is still dirty! - helm variables from component pipeline are NOT in the RM