-

Notifications

You must be signed in to change notification settings - Fork 21.3k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

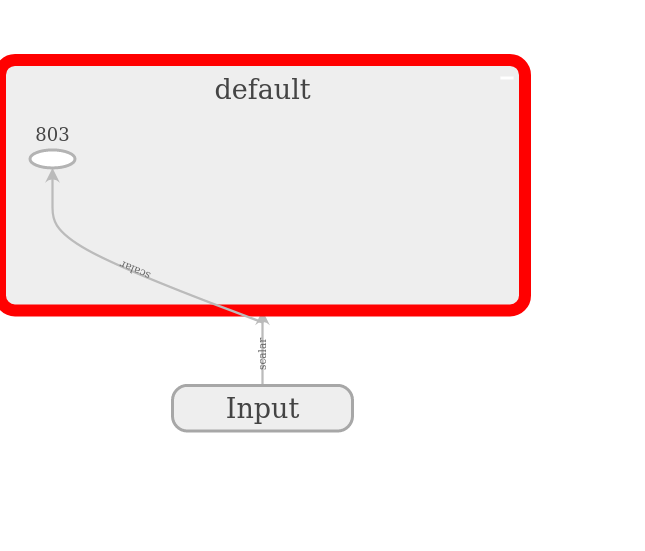

[TensorBoard] Graph with objects other than torch.nn.Module can not be visualized. #30459

Comments

|

@yangsenius How as a mock and there is no error. |

|

I use this model https://github.com/HRNet/Higher-HRNet-Human-Pose-Estimation/blob/master/lib/models/pose_higher_hrnet.py @lanpa |

|

@yangsenius I noted that you are using torch==1.2.0. Also, you have mixed installation of pip and conda. Would you try again with a cleaner and newer environment? Thanks. |

|

Thanks |

|

@yangsenius How did you resolve this issue? I'm facing the same issue even with a newly created conda env. |

|

No, I didn't resolve this bug even in a cleaner and newer environment. @tshrjn @lanpa

Environment

Collecting environment information...

OS: Ubuntu 16.04.6 LTS

Python version: 3.7

Nvidia driver version: 418.43

Versions of relevant libraries:

I guess that the current version of PyTorch or the TensorBoard library does not support some specific computation graphs. I cannot figure out what causes this issue. When I used a simple model to

    import torch
    from torchvision.models.resnet import resnet50
    from torch.utils.tensorboard import SummaryWriter
    #from tensorboardX import SummaryWriter

    net = resnet50()
    inputs = torch.randn(1,3,256,256)
    o = net(inputs)

    graph = SummaryWriter()
    graph.add_graph(net, (inputs,))

I think this problem is not a special case; many people using newer versions of PyTorch (>1.0) have been confused by this. |

|

@yangsenius I think the problem is the |

|

@yangsenius Could you solve the problem ? If so can you please share |

|

I think just the |

|

@yangsenius How do you fix the None in https://github.com/HRNet/Higher-HRNet-Human-Pose-Estimation/blob/e4b52e078b0e5a7470e023ca79c429686ba40cf8/lib/models/pose_higher_hrnet.py#L410 transition_layers.append(None) |

|

Because the

So I tried defining a class:

    class none_module(nn.Module):
        def __init__(self):
            super(none_module, self).__init__()
            self.none_module_property = True

Then use |

|

@Kevin0624 Does it work? |


|

I don't think the

|

    class NoOpModule(nn.Module):
        """
        https://github.com/pytorch/pytorch/issues/30459#issuecomment-597679482
        """

        def __init__(self, *args, **kwargs):
            super().__init__()

        def forward(self, *args, **kwargs):
            return args

Then in

And then in forward, for each respective stage (e.g. stage 3 here):

    if not isinstance(self.transition3[i], NoOpModule):
        x_list.append(self.transition3[i](y_list[-1]))
    else:
        x_list.append(y_list[i])
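To make the reasoning behind this workaround concrete, here is a toy sketch that does not use torch. It assumes, as the issue title suggests, that the graph tracer visits every registered child and rejects anything that is not a module. `Module`, `NoOp`, `trace`, and `can_trace` are hypothetical stand-ins written for illustration only; none of them is a PyTorch API.

```python
# Toy, torch-free model of the failure mode. All names below are
# hypothetical stand-ins for illustration, not PyTorch APIs.


class Module:
    """Stand-in for torch.nn.Module: holds an ordered list of children."""

    def __init__(self, children=()):
        self.children = list(children)


class NoOp(Module):
    """Stand-in for the NoOpModule workaround: a real Module that does nothing."""


def trace(module):
    """Assumed tracer behaviour: require a Module, then visit each child.

    Returns the number of modules visited.
    """
    if not isinstance(module, Module):
        raise TypeError(f"not a Module: {module!r}")
    return 1 + sum(trace(child) for child in module.children)


def can_trace(module):
    """Return True if the whole tree passes the type check."""
    try:
        trace(module)
        return True
    except TypeError:
        return False


with_none = Module([Module(), None])    # mirrors transition_layers.append(None)
with_noop = Module([Module(), NoOp()])  # mirrors transition_layers.append(NoOpModule())

print(can_trace(with_none))  # False
print(can_trace(with_noop))  # True
```

The placeholder still has to be skipped in `forward`, which is why the thread pairs the replacement with an `isinstance` check for each stage.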

I'm still getting assert(isinstance(orig, torch.nn.Module)). BTW, I put transition_layers.append(NoOpModule()) in place of transition_layers.append(None). |

|

You may need to modify several places in which transition_layers is appended |

There seems to be only one line where None is appended to transition_layers: https://github.com/HRNet/HigherHRNet-Human-Pose-Estimation/blob/master/lib/models/pose_higher_hrnet.py#L410 @ |

Also the |

|

@yangsenius Thank you very much. It is working now. |

@yangsenius May I ask which version of tensorboardx and pytorch you use? |

PyTorch == 1.2.0 and TensorboardX==2.0 (likely) |

Hi @yangsenius, thanks for your quick reply! I tried your method with PyTorch==1.2 and TensorboardX==2.0, but I got the following error. Any idea? |

|

@newwhitecheng Can you try with tensorboardX==1.4? I may have updated the version. |

sure, thanks a lot, I think it's just a version mismatch problem. Thanks for your valuable solution! |

|

These days, you can also use See cpbotha/deep-high-resolution-net.pytorch@b1a7fdb for how to apply this fix on the pose HRNet code. |

@cpbotha Thanks very much for your proposal. The |

|

@newwhitecheng Did it work for you with tensorboardX==1.4? |

🐛 Bug

I use tensorboardX to add_graph(model, (dump,)), but

To Reproduce

Steps to reproduce the behavior:

Expected behavior

Environment

Collecting environment information...

PyTorch version: 1.2.0

Is debug build: No

CUDA used to build PyTorch: 10.0.130

OS: Ubuntu 16.04.6 LTS

GCC version: (Ubuntu 5.4.0-6ubuntu1~16.04.11) 5.4.0 20160609

CMake version: Could not collect

Python version: 3.7

Is CUDA available: Yes

CUDA runtime version: 10.1.105

GPU models and configuration:

GPU 0: GeForce RTX 2080 Ti

GPU 1: GeForce RTX 2080 Ti

Nvidia driver version: 418.43

cuDNN version: Probably one of the following:

/usr/lib/x86_64-linux-gnu/libcudnn.so.7.5.0

/usr/local/cuda-10.1/targets/x86_64-linux/lib/libcudnn.so.7

Versions of relevant libraries:

[pip3] numpy==1.16.2

[pip3] numpydoc==0.8.0

[pip3] torch==1.2.0

[pip3] torchvision==0.4.0

[conda] blas 1.0 mkl

[conda] mkl 2019.1 144

[conda] mkl-service 1.1.2 py37he904b0f_5

[conda] mkl_fft 1.0.10 py37ha843d7b_0

[conda] mkl_random 1.0.2 py37hd81dba3_0

[conda] torch 1.2.0 pypi_0 pypi

[conda] torchvision 0.3.0 pypi_0 pypi

Additional context

The same problem occurred when using torch.utils.tensorboard