-

Notifications

You must be signed in to change notification settings - Fork 21.3k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

randperm: add torch check to ensure generator device = tensor device #47022

Conversation

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

lgtm 👍 side note, it looks like test_randperm is fairly long... can/should it be split up into multiple smaller tests, or is it a series of steps that must be done sequentially?

Yea, it is marked as a slow test--I'm not sure what the best thing to do is, since that whole test is wholistic in that it only deals with randperm, but it could def be split up. |

gotcha; yeah I'm less concerned about the speed since as you mentioned, it's already marked as slow, but if it can be split up, I think it should, since that would make it clear which parts of it are independent from each other |

|

Just want to note that this is BC breaking. But I don't think it matters too much. I'll let others decide. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I rescind my approval 😛 these are the results from running the tests on my end, after commenting out the @slowTest on test_randperm:

$ python test/test_tensor_creation_ops.py -k test_randperm

/home/sestep/github/pytorch/pytorch/torch/random.py:95: UserWarning: CUDA reports that you have 8 available devices, and you have used fork_rng without explicitly specifying which devices are being used. For safety, we initialize *every* CUDA device by default, which can be quite slow if you have a lot of GPUs. If you know that you are only making use of a few CUDA devices, set the environment variable CUDA_VISIBLE_DEVICES or the 'devices' keyword argument of fork_rng with the set of devices you are actually using. For example, if you are using CPU only, set CUDA_VISIBLE_DEVICES= or devices=[]; if you are using GPU 0 only, set CUDA_VISIBLE_DEVICES=0 or devices=[0]. To initialize all devices and suppress this warning, set the 'devices' keyword argument to `range(torch.cuda.device_count())`.

warnings.warn(

FF

======================================================================

FAIL: test_randperm_cpu (__main__.TestRandomTensorCreationCPU)

----------------------------------------------------------------------

RuntimeError: Expected a 'cuda' generator device but found 'cpu'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/sestep/github/pytorch/pytorch/torch/testing/_internal/common_device_type.py", line 274, in instantiated_test

result = test_fn(self, *args)

File "test/test_tensor_creation_ops.py", line 1263, in test_randperm

self.assertRaisesRegex(RuntimeError, regex, lambda: torch.randperm(n, device='cuda', generator=cpu_gen))

AssertionError: "Expected a * generator device but found *" does not match "Expected a 'cuda' generator device but found 'cpu'"

======================================================================

FAIL: test_randperm_cuda (__main__.TestRandomTensorCreationCUDA)

----------------------------------------------------------------------

RuntimeError: Expected a 'cuda' generator device but found 'cpu'

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/sestep/github/pytorch/pytorch/torch/testing/_internal/common_utils.py", line 833, in wrapper

method(*args, **kwargs)

File "/home/sestep/github/pytorch/pytorch/torch/testing/_internal/common_utils.py", line 833, in wrapper

method(*args, **kwargs)

File "/home/sestep/github/pytorch/pytorch/torch/testing/_internal/common_device_type.py", line 274, in instantiated_test

result = test_fn(self, *args)

File "test/test_tensor_creation_ops.py", line 1263, in test_randperm

self.assertRaisesRegex(RuntimeError, regex, lambda: torch.randperm(n, device='cuda', generator=cpu_gen))

AssertionError: "Expected a * generator device but found *" does not match "Expected a 'cuda' generator device but found 'cpu'"

----------------------------------------------------------------------

Ran 2 tests in 31.307s

FAILED (failures=2)

LOL ya I just spotted my regex mistake/didn't realize the tests were skipped last time 😅 |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@janeyx99 has imported this pull request. If you are a Facebook employee, you can view this diff on Phabricator.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

approving again since the tests pass now :) it would be good to address Rong's comments though

💊 CI failures summary and remediationsAs of commit 3339eac (more details on the Dr. CI page): 💚 💚 Looks good so far! There are no failures yet. 💚 💚 1 failure confirmed as flaky and can be ignored:

This comment was automatically generated by Dr. CI (expand for details).Follow this link to opt-out of these comments for your Pull Requests.Please report bugs/suggestions on the GitHub issue tracker or post in the (internal) Dr. CI Users group. This comment has been revised 27 times. |

ec5e54b

to

c2d1141

Compare

|

Add a "BC-breaking note" at the top of the PR summary describing what's changing and why it's BC-breaking. This section is used when the release notes are compiled. The current PR summary can appear in a separate section below it: BC-breaking Note: explanation for release notes PR Summary: current PR Summary The PR's name should be updated to clarify it's changing the behavior of the randperm operation. Follow-up question, what's with the documentation of torch.randperm? I don't even see a generator argument. torch.randperm also appears twice in search results when queries for. Does it have have 2 rst entries? |

|

@mruberry Just updated the summary of the PR with why I think it's considered BC-breaking, but I'm not 100% sure that's the reason. Can @ssnl confirm? Also, the randperm docs seem to be hardcoded here: |

|

Yeah, to me this is BC breaking mainly because CPU genenerators can't be used for CUDA generation when size is small anymore, as @janeyx99 has indicated in main description now. |

|

A separate thing: it might be good to consider moving these checks to |

Oh what would be the difference? |

|

|

Right. Seems like we should update the docs (and remove redundant entries while we're at it). |

c52d1bd

to

3339eac

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@janeyx99 has imported this pull request. If you are a Facebook employee, you can view this diff on Phabricator.

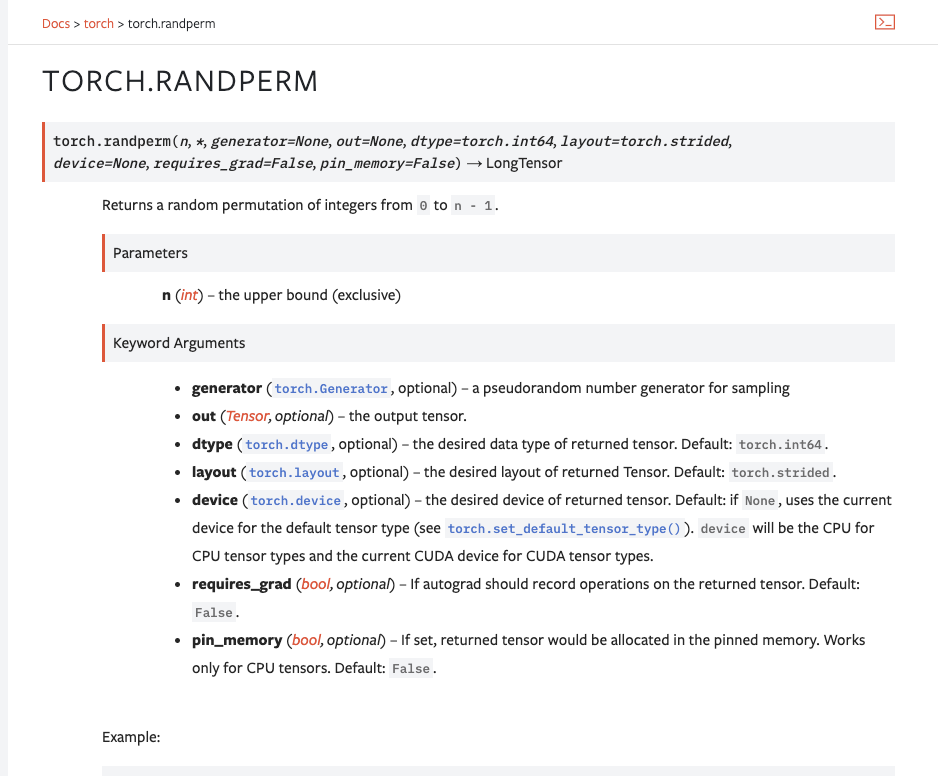

Summary: The `randperm` documentation is outdated and did not use to include the optional `generator` parameter. This PR just adds that along with the `pin_memory` parameter. This PR was brought up in [PR 47022](#47022), but is now rebased onto master. New docs look like:  Pull Request resolved: #47231 Reviewed By: mruberry Differential Revision: D24711960 Pulled By: janeyx99 fbshipit-source-id: 3ff8be62ec33e34ef87d017ea97bb950621a3064

BC-breaking Note:

This PR disallows passing in a generator of a different device than the tensor being created during

randpermexecution. For example, the following code which used to work no longer works.It now errors:

PR Summary:

Fixes #44714

Also added + ran tests to ensure this functionality.

Disclaimer: More work needs to be done with regards to small cuda tensors when a generator is specified, look at the issue thread for more details.