Commit


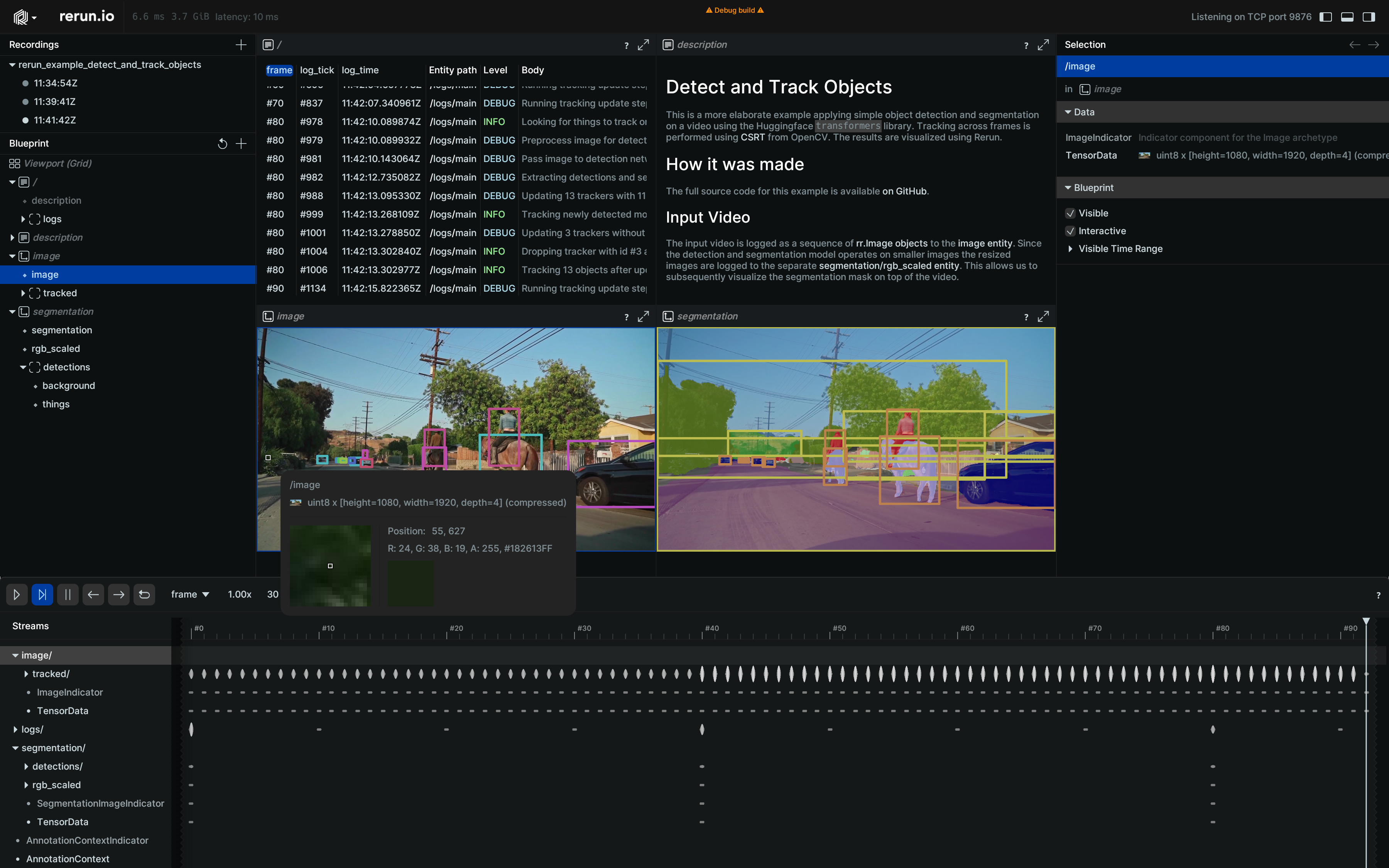

### What

* Partially solves #4926
* Fixes new issues where our 2D code examples for lines produce two space views

Removes the "child of root" heuristics for 2D space views and slightly improves image grouping behavior.

Had to make slight adjustments to the object tracking demo so that it still looks good. That said, IMHO the adjusted hierarchy also makes a lot of sense.

Soon users will be able to configure this from the blueprint. Once they can, we can think about removing non-explicit bucketing. See:

* #3985

### Checklist

* [x] I have read and agree to the [Contributor Guide](https://github.com/rerun-io/rerun/blob/main/CONTRIBUTING.md) and the [Code of Conduct](https://github.com/rerun-io/rerun/blob/main/CODE_OF_CONDUCT.md)
* [x] I've included a screenshot or gif (if applicable)
* [x] I have tested the web demo (if applicable):
  * Using newly built examples: [app.rerun.io](https://app.rerun.io/pr/5087/index.html)
  * Using examples from latest `main` build: [app.rerun.io](https://app.rerun.io/pr/5087/index.html?manifest_url=https://app.rerun.io/version/main/examples_manifest.json)
  * Using full set of examples from `nightly` build: [app.rerun.io](https://app.rerun.io/pr/5087/index.html?manifest_url=https://app.rerun.io/version/nightly/examples_manifest.json)
* [x] The PR title and labels are set such as to maximize their usefulness for the next release's CHANGELOG
* [x] If applicable, add a new check to the [release checklist](tests/python/release_checklist)!

- [PR Build Summary](https://build.rerun.io/pr/5087)
- [Docs preview](https://rerun.io/preview/4040710e64651d60a9b1f1afacfa899be19e11cc/docs) <!--DOCS-PREVIEW-->
- [Examples preview](https://rerun.io/preview/4040710e64651d60a9b1f1afacfa899be19e11cc/examples) <!--EXAMPLES-PREVIEW-->
- [Recent benchmark results](https://build.rerun.io/graphs/crates.html)
- [Wasm size tracking](https://build.rerun.io/graphs/sizes.html)


Showing 3 changed files with 68 additions and 31 deletions.
