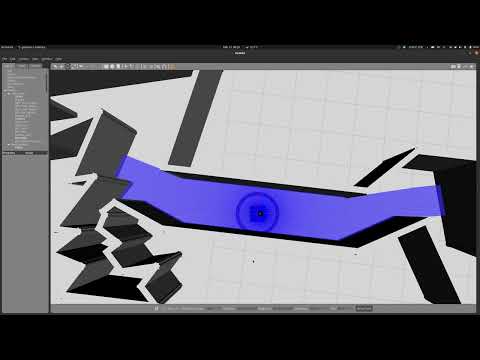

Generating velocity commands by feeding laser data to a neural network? It sounds like a simple idea, but it is not that easy. I created this repository to try different approaches to this problem, but I couldn't find time to focus on the model itself. Maybe you can create a better model?
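To make the idea concrete, here is a minimal sketch of the mapping such a network has to learn: one laser scan in, one (linear, angular) velocity pair out. It is not the model from this repository. The layer size, the random untrained weights, the velocity limits, and the names `init_params` and `scan_to_cmd` are all assumptions chosen for illustration; only the beam count and maximum range are taken from `model.sdf`.

```python
import math
import random

N_BEAMS = 360       # <samples> in model.sdf
MAX_RANGE = 20.0    # <range><max> in model.sdf, in metres
MAX_LINEAR = 0.5    # m/s, assumed velocity limit
MAX_ANGULAR = 1.0   # rad/s, assumed velocity limit


def init_params(hidden=16, seed=0):
    """Random, untrained weights for a one-hidden-layer network (no biases)."""
    rng = random.Random(seed)
    w1 = [[rng.gauss(0.0, 0.1) for _ in range(N_BEAMS)] for _ in range(hidden)]
    w2 = [[rng.gauss(0.0, 0.1) for _ in range(hidden)] for _ in range(2)]
    return w1, w2


def scan_to_cmd(ranges, params):
    """Map one scan (list of ranges in metres) to (linear, angular) velocity."""
    w1, w2 = params
    # Scale ranges to [0, 1]; clipping to MAX_RANGE also maps inf to 1.0.
    x = [min(max(r, 0.0), MAX_RANGE) / MAX_RANGE for r in ranges]
    hidden = [math.tanh(sum(w * v for w, v in zip(row, x))) for row in w1]
    out = [math.tanh(sum(w * h for w, h in zip(row, hidden))) for row in w2]
    # tanh bounds each output to [-1, 1], so the commands respect the limits.
    return out[0] * MAX_LINEAR, out[1] * MAX_ANGULAR
```

With untrained weights the output is meaningless; the hard part, and the open problem of this repository, is finding a model and training setup that make these two numbers drive the robot well.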

- Install ROS Noetic.

- Clone the repository into the `~/catkin_ws/src` folder and build the workspace:

      $ cd ~/catkin_ws/src
      $ git clone https://github.com/salihmarangoz/deep_navigation
      $ cd ..
      $ rosdep install --from-paths src --ignore-src -r -y
      $ catkin build --cmake-args -DCMAKE_BUILD_TYPE=RelWithDebInfo

- Install Python dependencies (ToDo)

Source the ROS and workspace setup files, then start the ROS master:

    $ source /opt/ros/noetic/setup.bash; source ~/catkin_ws/devel/setup.bash
    $ roscore

- For creating a dataset:

      # Each in a different terminal. Do not forget to source the ROS setup.bash files
      $ roslaunch deep_navigation simulation.launch
      $ roslaunch deep_navigation create_dataset.launch
      $ roslaunch deep_navigation ros_navigation.launch

- For training the network: open `notebooks/example.ipynb` (with Jupyter Notebook).

- For running the network:

      # Each in a different terminal. Do not forget to source the ROS setup.bash files
      $ roslaunch deep_navigation simulation.launch
      $ roslaunch deep_navigation deep_navigation.launch
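Whatever model you train, raw scans usually need cleaning before they are useful as network input. The sketch below shows one possible preprocessing step. It is an assumption, not what `create_dataset.launch` or the notebook actually does. The hypothetical `preprocess_scan` clamps invalid readings to the `<range>` limits from `model.sdf` (0.08 m to 20.0 m), keeps the closest reading in each angular sector, and scales the result to (0, 1]. The sector count of 36 is arbitrary.

```python
import math

RANGE_MIN = 0.08   # <range><min> in model.sdf, in metres
RANGE_MAX = 20.0   # <range><max> in model.sdf, in metres


def preprocess_scan(ranges, n_sectors=36):
    """Clean one scan and reduce it to n_sectors normalized values."""
    if len(ranges) < n_sectors:
        raise ValueError("scan has fewer beams than sectors")
    cleaned = []
    for r in ranges:
        if math.isnan(r) or r > RANGE_MAX:
            r = RANGE_MAX      # NaN, inf, too far: treat as "nothing seen"
        elif r < RANGE_MIN:
            r = RANGE_MIN      # closer than the sensor can measure
        cleaned.append(r)
    # Keep the closest obstacle in each sector. Beams left over when the
    # scan length is not divisible by n_sectors are dropped.
    size = len(cleaned) // n_sectors
    sectors = [min(cleaned[i * size:(i + 1) * size]) for i in range(n_sectors)]
    return [s / RANGE_MAX for s in sectors]
```

Taking the minimum per sector keeps the nearest obstacle visible after downsampling, which matters more for collision avoidance than the average distance would.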

- Run gazebo:

      $ gazebo

- In the gazebo GUI: `Edit -> Building Editor`
- After building your world, save the design into `models` in this repository.
- In the `world` folder, copy `deep_parkour.world` and modify line 14 (change `deep_parkour` to your world's model name).
- In `simulation.launch`, set `start_fake_mapping` to false to enable mapping. Also set `world_file` to its new value.
- Run `simulation.launch` and `ros_navigation.launch` to explore the whole world.
- After mapping the world, save the map with the following command:

      $ rosrun map_server map_saver  # Files may be saved into home folder

- Copy the map files into the `world` folder, then in `simulation.launch` modify the `map_file` parameter and set `start_fake_mapping` to true again.
- Rename the files and then modify the first line of the `.yaml` file.
- Done.
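The rename step can be scripted. The sketch below assumes that the `.yaml` file written by `map_saver` starts with an `image:` entry naming the map image, which is why its first line has to change after the files are renamed. The helper name `retarget_map_yaml` is hypothetical, and so are any file names you pass to it.

```python
def retarget_map_yaml(yaml_text, new_image_name):
    """Return yaml_text with its `image:` entry pointing at new_image_name."""
    lines = yaml_text.splitlines()
    for i, line in enumerate(lines):
        if line.strip().startswith("image:"):
            # Only the image entry changes; all other map metadata is kept.
            lines[i] = "image: " + new_image_name
            return "\n".join(lines) + "\n"
    raise ValueError("no 'image:' entry found in map yaml")
```

To use it, read the saved `.yaml` file as text, pass the new image file name, and write the returned string back to the renamed `.yaml` file.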

Modify file `deep_navigation/models/custom_p3at/model.sdf`

    <ray>
      <scan>
        <horizontal>
          <samples>360</samples> <!-- 1040!!! -->
          <resolution>2</resolution>
          <min_angle>-3.14</min_angle> <!-- 90deg: -1.570796 -->
          <max_angle>3.14</max_angle> <!-- 90deg: 1.570796 -->
        </horizontal>
      </scan>
      <range>
        <min>0.08</min>
        <max>20.0</max>
        <resolution>0.01</resolution>
      </range>
      <noise>
        <type>gaussian</type>
        <mean>0.01</mean>
        <stddev>0.005</stddev>
      </noise>
    </ray>

Modify file `deep_navigation/worlds/custom.world`

For parameter real_time_update_rate:

- 1000 is real world time.

- 2000 is 2x faster.

- 5000 is 5x faster... and so on.

    <!-- Simulator -->
    <physics name="ode_100iters" type="ode">
      <real_time_update_rate>1000</real_time_update_rate>
      <ode>
        <solver>
          <type>quick</type>
          <iters>100</iters>
        </solver>
      </ode>
    </physics>
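The relation between `real_time_update_rate` and simulation speed can be written down directly. Gazebo's target real-time factor is the update rate multiplied by the physics step size, so the values listed above hold when `max_step_size` is 0.001 s. That step size is an assumption here, because it is not set in the snippet. Both function names are hypothetical.

```python
def target_real_time_factor(real_time_update_rate, max_step_size=0.001):
    """Target simulation speed relative to wall-clock time."""
    return real_time_update_rate * max_step_size


def update_rate_for_speedup(speedup, max_step_size=0.001):
    """Update rate (Hz) to request for the given target speedup."""
    return speedup / max_step_size
```

This is only a target: the simulation reaches it only if the machine can compute the physics steps fast enough.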