
Feed exports: add batch deliveries #4434


Codecov Report

```diff
@@            Coverage Diff             @@
##           master    #4434      +/-   ##
==========================================
+ Coverage   85.17%   86.29%   +1.12%
==========================================
  Files         162      160       -2
  Lines        9749     9671      -78
  Branches     1437     1420      -17
==========================================
+ Hits         8304     8346      +42
+ Misses       1186     1063     -123
- Partials      259      262       +3
```


Good starting point, @BroodingKangaroo.

Other stuff to keep in mind:

- Documentation is missing

- Tests are missing

scrapy/extensions/feedexport.py (outdated)

```python
# Every configured feed needs a supported storage backend and exporter format
for uri, feed in self.feeds.items():
    if not self._storage_supported(uri):
        raise NotConfigured
    if not self._exporter_supported(feed['format']):
        raise NotConfigured

def open_spider(self, spider):
    # With batching enabled, map each feed URI to the URI of its partial (per-batch) file
    if self.storage_batch:
        self.feeds = {self._get_uri_of_partial(uri, feed, spider): feed for uri, feed in self.feeds.items()}
```


One approach: whenever we reach the expected item count, close and deliver the current file, then create a new one.
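A minimal sketch of that approach, assuming in-memory lists stand in for feed files and a `deliver` callback stands in for closing and uploading a file. `BatchWriter` and `deliver` are hypothetical names used only for illustration, not Scrapy's API:

```python
from typing import Callable, List


class BatchWriter:
    """Hypothetical sketch: deliver a batch as soon as it holds
    batch_item_count items, then start a new, empty batch."""

    def __init__(self, batch_item_count: int,
                 deliver: Callable[[int, List[dict]], None]) -> None:
        self.batch_item_count = batch_item_count
        self.deliver = deliver      # stand-in for closing/uploading a file
        self.batch_id = 1           # 1-based batch counter
        self.current: List[dict] = []

    def add(self, item: dict) -> None:
        self.current.append(item)
        if len(self.current) == self.batch_item_count:
            # Expected count reached: deliver this batch and open a new one.
            self._flush()

    def close(self) -> None:
        # On spider close, deliver the remaining items; skip an empty batch.
        if self.current:
            self._flush()

    def _flush(self) -> None:
        self.deliver(self.batch_id, self.current)
        self.batch_id += 1
        self.current = []


if __name__ == "__main__":
    delivered = []
    writer = BatchWriter(3, lambda batch_id, items: delivered.append((batch_id, len(items))))
    for n in range(7):
        writer.add({"n": n})
    writer.close()
    print(delivered)  # [(1, 3), (2, 3), (3, 1)]
```

With a batch size of 3 and 7 items, this delivers batches of 3, 3 and 1 items. Skipping an empty final batch in `close()` is a design choice of this sketch.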


Hey @BroodingKangaroo, good job, thanks for the PR. In addition to the tests and docs requested by @ejulio, please make sure that this works well after #3858.

It seems the failing tests are related to #4434; please rebase or merge the latest master to fix them.


scrapy/extensions/feedexport.py (outdated)

```diff
@@ -335,7 +400,7 @@ def _get_uri_params(self, spider, uri_params):
     params = {}
     for k in dir(spider):
         params[k] = getattr(spider, k)
-    ts = datetime.utcnow().replace(microsecond=0).isoformat().replace(':', '-')
+    ts = datetime.utcnow().isoformat().replace(':', '-')
```


Any reason for this change?


replace(microsecond=0) removes the microseconds, so if several files are sent within the same second, they end up with the same name.
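A small illustration of the collision, using two fixed, arbitrary datetimes for batches finished within the same second. `batch_timestamp` is a hypothetical helper that mirrors the two timestamp expressions from the diff:

```python
from datetime import datetime


def batch_timestamp(now: datetime, keep_microseconds: bool) -> str:
    """Hypothetical helper mirroring the two expressions in the diff."""
    if not keep_microseconds:
        now = now.replace(microsecond=0)  # old behavior: drop microseconds
    return now.isoformat().replace(':', '-')  # replace ':' to keep it filename-friendly


# Two batches finished within the same second (arbitrary example times).
first = datetime(2020, 3, 30, 12, 0, 0, 120000)
second = datetime(2020, 3, 30, 12, 0, 0, 870000)

print(batch_timestamp(first, False), batch_timestamp(second, False))
# 2020-03-30T12-00-00 2020-03-30T12-00-00  (identical names)
print(batch_timestamp(first, True), batch_timestamp(second, True))
# 2020-03-30T12-00-00.120000 2020-03-30T12-00-00.870000
```

Note that `isoformat()` omits the fractional part entirely when the microsecond value is 0, so names produced with microseconds kept do not all have the same length.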

scrapy/extensions/feedexport.py (outdated)

```python
slot.start_exporting()
slot.exporter.export_item(item)
slot.itemcount += 1
# Start a new batch every storage_batch_size items (a falsy value disables batching)
if self.storage_batch_size and slot.itemcount % self.storage_batch_size == 0:
```


I guess you should be calling a routine similar to close_spider here, right?

Otherwise we're just creating the local files but never sending them to S3 or any other non-local storage.

Also, I wrote a piece of this functionality and had issues when exporting to S3.

I had to upload file.read() instead of just file, as passing the file object was raising errors.


The current implementation of the _start_new_batch() function duplicates some of the logic in close_spider().

I tried running it with S3, and it seems to work correctly.


My advice here is to extract the common behavior to a function.

For example, there is no logging here about the file that was created.

Also, if you look at how close_spider behaves, you'll notice that file storage is called in a thread, so it does not block the current code.

Otherwise, we'll be blocking the main thread while storing the files, possibly causing performance issues.
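A sketch of the suggested refactoring, assuming a hypothetical `close_and_store` helper shared by close_spider() and the batch rollover. Scrapy's blocking storages run the upload in a thread through Twisted; a `concurrent.futures.ThreadPoolExecutor` is swapped in here only to keep the example standalone, and the `store` callable is likewise an assumption:

```python
import io
import logging
from concurrent.futures import Future, ThreadPoolExecutor
from typing import BinaryIO, Callable

logger = logging.getLogger(__name__)

# Stand-in for Scrapy's Twisted-based threading; used only to keep this sketch standalone.
_executor = ThreadPoolExecutor(max_workers=2)


def close_and_store(file: BinaryIO, uri: str, itemcount: int,
                    store: Callable[[BinaryIO], None]) -> Future:
    """Hypothetical helper shared by close_spider() and the batch rollover.

    Runs the (possibly slow) upload in a worker thread so the caller is not
    blocked, and logs the outcome once the upload finishes.
    """
    future = _executor.submit(store, file)

    def _log_result(done: Future) -> None:
        error = done.exception()
        if error is None:
            logger.info("Stored feed (%d items) in: %s", itemcount, uri)
        else:
            logger.error("Error storing feed (%d items) in: %s", itemcount, uri,
                         exc_info=error)

    future.add_done_callback(_log_result)
    return future


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO)
    uploaded = []
    pending = close_and_store(io.BytesIO(b"[]"), "s3://bucket/items-1.json", 0,
                              lambda f: uploaded.append(f.read()))
    pending.result()  # waiting here only for the demo; the exporter would not block
    print(uploaded)
```

Keeping the logging and the threaded upload in one place means the per-batch path and the final close_spider() path cannot drift apart.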


@BroodingKangaroo, should I review something here, or are you still working on some of the changes?

@ejulio I slowed down a little. There are no big changes since your last review, just cosmetic ones.


@BroodingKangaroo sure, no problem.


I think “size” should be avoided, as it can be misleading (item count? bytes?). On a different but related topic, I think it makes sense for new

That makes a lot of sense for keeping the codebase simple; however, having general settings also allows keeping project settings simple and avoids duplication, so I don't have a strong opinion at the moment. On the other hand, you convinced me about the "size" name. That said, I still think that if we introduce a new general setting, it should be


Almost ready to go IMHO, I just left one question about testing packages.

```diff
@@ -12,6 +12,7 @@ deps =
     -ctests/constraints.txt
     -rtests/requirements-py3.txt
     # Extras
+    boto3>=1.13.0
```


Is this really needed to test the changes in this pull request?


It is needed to run BatchDeliveriesTest::test_s3_export. If we delete this line, that test will be skipped on every run.
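A hypothetical sketch of the skip behavior described above, using only the standard library. The real test's skip mechanism may differ; `HAS_BOTO3` and `BatchDeliveriesS3Sketch` are illustrative names:

```python
import importlib.util
import unittest

# True only when boto3 can be imported in the current environment.
HAS_BOTO3 = importlib.util.find_spec("boto3") is not None


class BatchDeliveriesS3Sketch(unittest.TestCase):
    """Without boto3 in the test dependencies, this test never runs."""

    @unittest.skipUnless(HAS_BOTO3, "boto3 is not installed")
    def test_s3_export(self):
        import boto3  # only imported when the package is available
        self.assertTrue(hasattr(boto3, "client"))


if __name__ == "__main__":
    unittest.main()
```

In an environment without boto3 the test is reported as skipped rather than failed, which is why it would silently never run in CI unless the package is listed in the tox dependencies.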


I think what @elacuesta means is whether or not test_s3_export could be reimplemented without adding a new dependency to the tests, which is a valid point. So, could it be reimplemented without boto3? And, if so, what would be the pros and cons of each test implementation?


Using botocore (which is already a dependency) here means we would basically have to re-implement boto3 anyway, including authentication, the security protocols and the exceptions. We would also have to maintain it whenever the S3 API changes.

docs/topics/feed-exports.rst (outdated)

```rst
And your :command:`crawl` command line is::

    scrapy crawl spidername -o dirname/%(batch_id)d-filename%(batch_time)s.json
```
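The placeholders in this command are Python %-style named fields. A sketch of how such a template expands, assuming a hypothetical `batch_uri` helper that formats `batch_time` like the timestamp in the _get_uri_params diff (ISO format with colons replaced by hyphens); Scrapy's own parameter handling may supply more fields:

```python
from datetime import datetime


def batch_uri(template: str, batch_id: int, batch_time: datetime) -> str:
    """Hypothetical helper: expand batch placeholders with %-style formatting."""
    params = {
        "batch_id": batch_id,
        # Filename-friendly timestamp: ISO format with ':' replaced by '-'.
        "batch_time": batch_time.isoformat().replace(":", "-"),
    }
    return template % params  # keys not used by the template are ignored


template = "dirname/%(batch_id)d-filename%(batch_time)s.json"
print(batch_uri(template, 1, datetime(2020, 6, 1, 10, 30, 0, 500000)))
# dirname/1-filename2020-06-01T10-30-00.500000.json
```

Standard %-formatting flags also apply, e.g. `%(batch_id)05d` zero-pads the batch number.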


@BroodingKangaroo Hi, I'm trying to run scrapy installed from your branch and I'm getting an error with the parentheses, following your docs example highlighted above:

```console
$ scrapy crawl books -o books-%(batch_id)d-ending.json
bash: syntax error near unexpected token `('
```

It accepts the expression when it is wrapped in quotes (as below), so I'd suggest updating the docs to wrap it in quotes:

```console
$ scrapy crawl books -o 'books-%(batch_id)d-ending.json'
```


You are right, I will update the docs.

I should note that this also happens on the master branch with placeholders like %(time)s and %(name)s, and I didn't find any mention of quoting for such cases in the docs.
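The parentheses in `%(...)` placeholders are shell metacharacters in bash, which is why the unquoted form fails. A sketch of building a correctly quoted command line with Python's standard `shlex` module (`shlex.join` needs Python 3.8+):

```python
import shlex

# Build the crawl command as an argument list; shlex.join() single-quotes any
# argument containing shell metacharacters such as "(" and ")".
args = ["scrapy", "crawl", "books", "-o", "books-%(batch_id)d-ending.json"]
command_line = shlex.join(args)
print(command_line)
# scrapy crawl books -o 'books-%(batch_id)d-ending.json'
```

When launching the crawl from Python, passing the argument list to `subprocess.run()` without `shell=True` avoids shell quoting altogether.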

|

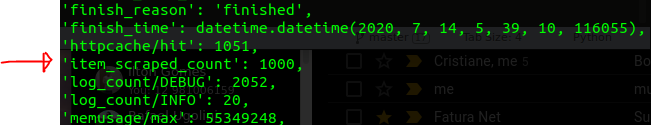

@BroodingKangaroo Hi, I have I was expecting to see 10 files with 100 items per file, but I ended up with 11 files: and the last one is empty: |

Hi, @rafaelcapucho. It is similar to creating an empty file even if nothing is scraped (the current behavior of the master branch). On the other hand, in this case it probably matches user expectations less well.
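A hypothetical simulation of why the extra file can appear, assuming 1000 scraped items (the total is not stated in the comment) and a batch size of 100. Starting a new file immediately after a batch fills leaves one empty file to be delivered at close; opening files lazily avoids it. This is an illustration, not Scrapy's code:

```python
from typing import List, Optional


def delivered_files(total_items: int, batch_item_count: int,
                    open_lazily: bool) -> List[int]:
    """Hypothetical simulation: return the item count of each delivered file."""
    files: List[int] = []
    # None means "no file open"; 0 means "an empty file is already open".
    current: Optional[int] = None if open_lazily else 0
    for _ in range(total_items):
        if current is None:
            current = 0  # lazy mode: open a file only when an item arrives
        current += 1
        if current == batch_item_count:
            files.append(current)  # batch full: deliver it
            current = None if open_lazily else 0  # eager mode opens the next file now
    if current is not None:
        files.append(current)  # whatever is open gets delivered on close
    return files


eager = delivered_files(1000, 100, open_lazily=False)
lazy = delivered_files(1000, 100, open_lazily=True)
print(len(eager), eager[-1])  # 11 0
print(len(lazy), lazy[-1])    # 10 100
```

The eager variant also yields one empty file when nothing is scraped, which matches the master-branch behavior mentioned above; the lazy variant yields no file in that case.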


Thanks @BroodingKangaroo for all the work you put into this feature, and also for swiftly addressing all the review comments. That was impressive! And of course, thanks to everyone who reviewed and tested it: @ejulio, @elacuesta, @Gallaecio, @victor-torres, @rafaelcapucho. I'm merging it; if there are any further minor issues, we can address them separately.

Fixes #4250