
Slack-side disconnects #208

Comments

|

I am seeing the same thing on larger production bots. My guess is that this is Slack-side, but it also looks like we're not seeing or handling the disconnect properly. First, let's understand what we expect on a disconnect like this. An exception? |

|

For celluloid we want to emit a |

|

Thanks for the reply. One strange thing is that the bot goes down in the evening, when no one is at work. As for what we expect, I guess an exception with a reason would be a good start. I will also reach out to Slack and ask them. |

|

I stuck a restart into my bots (e.g. dblock/slack-strava@bc29324) and I am seeing about a dozen of these every hour on a bot system with hundreds of connections. Did you hear back from Slack? Either way, we definitely don't have correct handling code here and the client thinks the connection is still open. Maybe we can reproduce this, or at least attempt to handle it based on the data being received? What that data is, is a good question. |

|

hey, there! i'm from slack and i'd like to help you folks get this issue resolved. while i do have some expertise in our RTM API and Websockets (i helped build our node implementation), my Ruby is a little rusty. as a first step, i'd like to reproduce this with minimal steps, in my own app/workspace. if anyone has any shortcuts for me, please share. otherwise i'll update here as i find things. |

|

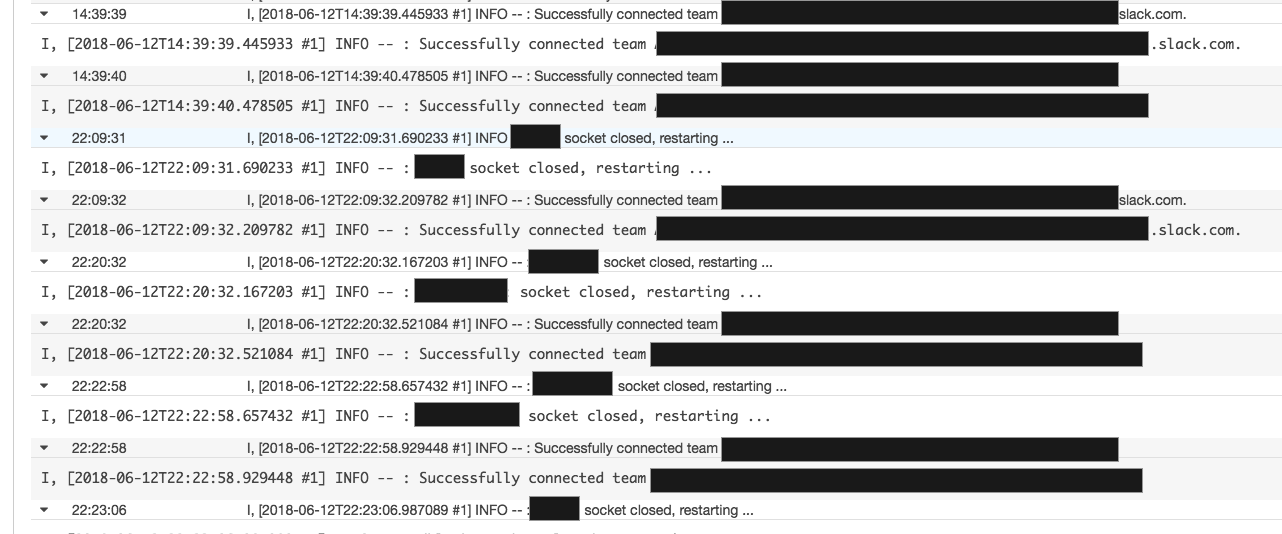

@dblock @Kyrremann I can also confirm the strange disconnects but not seeing any issues restarting via latest We're not running close to hundreds of teams yet and getting to the Attaching a log from Prod with names redacted to protect the innocent. The disconnects start happening around 10PM UTC. |

|

Oh whoops, just realized we're in |

|

Hi @aoberoi and thanks for jumping in! I think the easiest is to run a full blown bot. Alternatively we can write some code to open hundreds of RTM connections and see what happens. Here's a full bot: Clone https://github.com/slack-ruby/slack-shellbot. Run Registration creates a

team = Team.first

100.times do

  SlackShellbot::Service.instance.start!(team)

end

You should get 100 instances of the bot running. |

|

Here's a recent down log from a bot with 297 teams. I left team IDs in there for you @aoberoi in case you have logs to look at on your side. These all went offline in the 5 minutes preceding this log. Note that we also see successful disconnects/restarts. |

|

If you want to add more logging to the code that handles disconnects, put it here. |

|

Any luck with this? Our bot disconnects daily. It's an important piece of our infrastructure that we use to coordinate deployments. |

|

My bot disconnects (silently) about once every three days, on a team with only a handful of users. Not sure if that helps, but at least it's a data point. |

|

I sent a support-ticket to the Slack team this morning. I'll let you know when I hear something. I'm afraid we won't get much help, as it's kinda outside their scope to support us (maybe?). |

|

If someone understands what's being sent on the wire, or wants to try to, that would be helpful. In the end the websocket library simply doesn't see the disconnect. |

|

I'm not really able to help with this issue directly, but there have been some rumblings of using a https://gist.github.com/ioquatix/c24f107e2cc7f48e571a37e8e93b0cda?ts=2 Just FYI. Yes, I know it's a different gem, but it can't be all that different. |

See the issue on GitHub for more info: slack-ruby/slack-ruby-client#208

|

I'm sorry I've been absent from this issue for so long. I was traveling over the last 2 weeks for Slack and hadn't had any time to focus on what was going on here. Notes:

Action Items:

Questions:

What can you do?

|

|

I can't answer yes to any of the questions, but I was curious whether other people are also running on Google Cloud Engine? |

|

My bots are all on DigitalOcean. |

|

I also can't answer yes, unfortunately; my bot runs locally here on-site (Ubuntu). |

|

Just wanted to chime in and say I'm having the same problem, and am on Heroku. My logs show that the bot is still logging conversations in the slack channel, but the Slack desktop client does not show its presence and the bot does not respond to commands. Restarting the bot does fix the issue. |

|

Not sure if this is a step forward, but I added a ping function that would try to stop and restart my client when it was offline from Slack. Sadly that didn't work, as I ended up with a The code I used is this:

get '/bot_presence' do

  client = ::Slack::Web::Client.new(token: ENV['SLACK_API_TOKEN'])

  status = client.users_getPresence(user: ENV['SLACK_BOT_ID'])

  if status['presence'] == 'away'

    instance = Standbot::Bot.instance

    instance.stop!

    instance.start_async

    json(message: 'Restarting bot successfully')

    return

  end

  json(message: status)

end

And the stack trace is this: |

|

I failed to find how to "see" this in celluloid-io and it's apparently no longer maintained. Maybe someone can add support for the most-bestest async io library du jour? #210 |

|

My bot is also hosted on DigitalOcean. I had a restart once a day, which seemed to fix it for quite a while, but recently the problem has been getting worse. I just restarted the bot in the afternoon, only to find it offline in the evening. Is there no way that we can take an active approach in determining the connection is still alive by sending our own |
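To make the "active approach" asked about here concrete, the following is a minimal sketch of a liveness check that treats a connection as dead once nothing has been heard from the server for a while. It is not code from slack-ruby-client: `LivenessMonitor`, `touch`, `alive?` and the `timeout` and `clock` parameters are all hypothetical names chosen for illustration, and wiring `touch` into the real websocket callbacks is left open.

```ruby
# Hypothetical helper for illustration only; not part of slack-ruby-client.
class LivenessMonitor
  attr_reader :last_seen_at

  # timeout: seconds of silence after which the connection is presumed dead.
  # clock:   callable returning the current time in seconds (injectable so
  #          the logic can be exercised without sleeping).
  def initialize(timeout:, clock: -> { Process.clock_gettime(Process::CLOCK_MONOTONIC) })
    @timeout = timeout
    @clock = clock
    @last_seen_at = @clock.call
  end

  # Call this whenever any frame (message, pong, ...) arrives from the server.
  def touch
    @last_seen_at = @clock.call
  end

  # True while the last frame is no older than the timeout.
  def alive?
    (@clock.call - @last_seen_at) <= @timeout
  end
end
```

A bot could call `touch` from its message handler and poll `alive?` from a timer, restarting the connection when it returns false. A monotonic clock is used by default so that wall-clock adjustments do not trigger false restarts.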

|

Thanks for that information. I believe you've highlighted a new issue; I'm looking into this now. |

|

FWIW I am also using slack-ruby-bot. Could be a thing.

I’ll work on getting some debug logs together.

-- Eric

On Oct 29, 2018, at 15:05, Rodney Urquhart ***@***.***> wrote:

Thanks for that information. I believe you've highlighted a new issue; our reconnect logic is conflicting with the reconnect logic that exists within slack-ruby-bot.

I'm looking into this now.


|

|

Also using |

|

Seems reasonable, thanks everyone. I am excited about the possibility of killing any kind of retry stuff in slack-ruby-bot and ping inside slack-ruby-bot-server. LMK how I can help. |

|

I've been monitoring this issue since it was reported. Over the past few days our connections are failing repeatedly, multiple times per day across the majority of teams. I thought it might have been a network problem or temporary issue, but it hasn't gone away. I implemented a ping and restart method borrowed from slack-ruby-bot, which has helped. Has anyone else seen this problem ramp up recently? |

|

I have just encountered this problem. |

|

For everyone above trying @RodneyU215's branch and using slack-ruby-bot, upgrade to slack-ruby/slack-ruby-bot#203, which is Let us know if it's better! |

|

My bot still dies even with both gems pulled from @RodneyU215's branches. It eventually crashed and systemd restarted it. Here are some logs: https://gist.github.com/stormsilver/b940b0df2fde5825e46087fc80f14039

|

|

Thanks for everyone's help and continued efforts on triaging this. I've been on bereavement leave the last few weeks, but I'm back and will be diving back into this issue soon. |

|

@RodneyU215 Ahhh man. That stinks. Sorry to hear this :( Thanks for all your hard work on the disconnecting issues. |

|

@stormsilver I'd like to try and duplicate your issues. Where's your bot being hosted? And which concurrency library are you using? Thus far I've been using the hi.rb example to triage this issue and it appears to be resolved. I'd like to find an example or some code that reproduces any current errors. |

|

@RodneyU215 Our bot is hosted on premise on an Ubuntu 18.04 machine. We are using I would say it's definitely much more reliable than a few months ago; however, we do still see problems where the bot goes AWOL. I have debug logging turned on permanently now so when and if it happens again, I will update this thread. |

|

I ended up fixing the symptoms by wrapping all of the activerecord calls in my bot with |

|

Just an FYI, all pending changes related to this issue have been merged into the Anyone affected by this issue should use

gem 'slack-ruby-client', github: 'slack-ruby/slack-ruby-client', branch: 'master'

gem 'slack-ruby-bot', github: 'slack-ruby/slack-ruby-bot', branch: 'master'

gem 'async-websocket' |

|

Do you mind giving a brief summary of what the root problem was and how it was fixed? |

|

This issue was opened to triage a problem where apps using this client would silently lose their connection. @dblock investigated the issue and found that the client did not gracefully handle disconnects. @aoberoi also investigated Slack’s infrastructure and did not find any changes or issues that would cause these disconnects. We’ve not seen the same impact on any other libraries. Over the last few months I worked with @dblock to implement some features that should drastically reduce the impact of unexpected disconnections. When using the client with a supported asynchronous library (i.e. This ping is sent to Slack’s message server, which validates from our end that the connection is truly alive. In the event that a response is not received we now:

All this being said, investigations are still underway to both reproduce the issue and identify an underlying root cause. I truly appreciate everyone’s help, support and contributions to this issue. |
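As a rough illustration of the ping-based check described in this summary, here is a minimal sketch that counts consecutive unanswered pings and hands off to a restart callback once a limit is reached. It is an assumption about the general technique only, not the code that was merged: `PingKeeper`, `tick`, `send_ping`, `on_dead` and `max_missed` are hypothetical names, and the steps the client actually takes when a response is missed are not reproduced here.

```ruby
# Hypothetical sketch; names are illustrative, not the slack-ruby-client API.
class PingKeeper
  attr_reader :missed

  # send_ping:  callable that sends a ping and returns true if a reply arrived
  #             in time, false otherwise (how it waits is up to the caller).
  # on_dead:    callable invoked once the miss limit is reached, e.g. to
  #             tear down and restart the connection.
  # max_missed: consecutive unanswered pings tolerated before giving up.
  def initialize(send_ping:, on_dead:, max_missed: 3)
    @send_ping = send_ping
    @on_dead = on_dead
    @max_missed = max_missed
    @missed = 0
  end

  # Runs one ping cycle and returns :ok, :missed, or :dead.
  def tick
    if @send_ping.call
      @missed = 0
      return :ok
    end

    @missed += 1
    return :missed if @missed < @max_missed

    # Limit reached: reset the counter and let the caller recover.
    @missed = 0
    @on_dead.call
    :dead
  end
end
```

In a bot this would typically be driven by a timer, calling `tick` every so many seconds. Tolerating a few misses before restarting is a design choice that trades slower detection for fewer spurious reconnects.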

|

Since updating to |

|

That's good news @stormsilver. Which concurrency library do you use? And by offline/online you mean just a brief blip as it reconnects, I assume? |

|

@dblock Correct, just a brief blip as the bot reconnects. We are using |

|

Btw, I want to release this. Just didn't have time to roll it in my production bots, which is always a good test. Hopefully will get to it soon. |

|

TL;DR: Does master have any breaking changes, or can we add it in and assume most everything works the same, with just the fix for disconnecting from the socket? |

|

BTW, Thanks to everyone for all this work!!! Sorry I didn't say it in the last post!!! You all rock! |

No other breaking changes, see https://github.com/slack-ruby/slack-ruby-client/blob/master/CHANGELOG.md for details. If you use slack-ruby-bot you need slack-ruby/slack-ruby-bot#203, and if you use slack-ruby-bot-server we still have to remove the ping worker from that. If someone wants to PR this I'd be grateful. I also think we need to bump the major version here and make the next versions of those gems depend on a minimum version of slack-ruby-client, to make sure we don't run into incompatibilities. |

|

is there a way to disable keepalive messages from debug logs? [DEBUG] @slack/client:RTMClient2 send() in state: connected,ready |

|

I've released:

These should all address the problems above. Open a new issue if they don't. Closing this one. |

In the last week my Slack bot has started to disconnect from our Slack team. There are no logs of the disconnect, and no stack traces. I've started running the bot in debug mode, and the last thing it logged before it disconnected was the following.

I'm not sure how to debug this myself, and I have no idea what is wrong.

My setup is running on Google App Engine, with one process for a Sinatra web app and a different process running the Slack bot. Neither process stops, but the bot is offline in Slack.

The code for the project is available here: https://github.com/navikt/standbot

Any help would be welcome!
