
os.environ["CUDA_DEVICE_ORDER"] does not work #25152

Comments

Can anyone help me, please?

You want to specify the visible devices, not the device order. Use …
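A minimal sketch of the difference between the two variables, assuming the reply refers to `CUDA_VISIBLE_DEVICES` (the text after "Use" is missing). `CUDA_DEVICE_ORDER` only controls how CUDA numbers the GPUs; `CUDA_VISIBLE_DEVICES` controls which GPUs the process can see at all.

```python
import os

# CUDA_DEVICE_ORDER only decides how GPUs are numbered: "FASTEST_FIRST"
# (the default) or "PCI_BUS_ID" (the same numbering nvidia-smi uses).
# It does not select a GPU.
os.environ["CUDA_DEVICE_ORDER"] = "PCI_BUS_ID"

# CUDA_VISIBLE_DEVICES selects which physical GPUs this process can see.
# Visible GPUs are renumbered from 0, so physical GPU 1 appears as "/GPU:0".
os.environ["CUDA_VISIBLE_DEVICES"] = "1"

# import tensorflow as tf  # import only after both variables are set
```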

|

I will try, thank you |

|

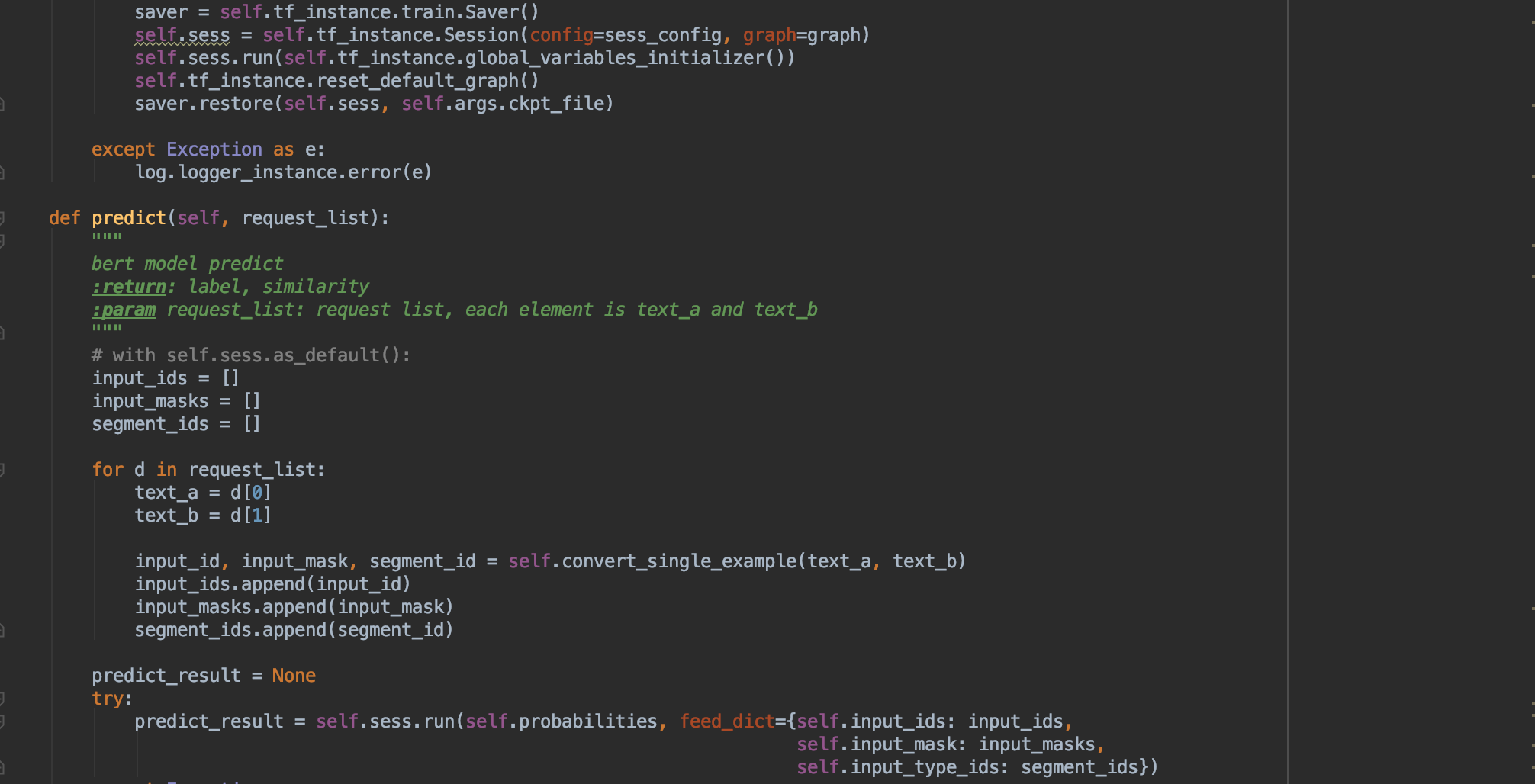

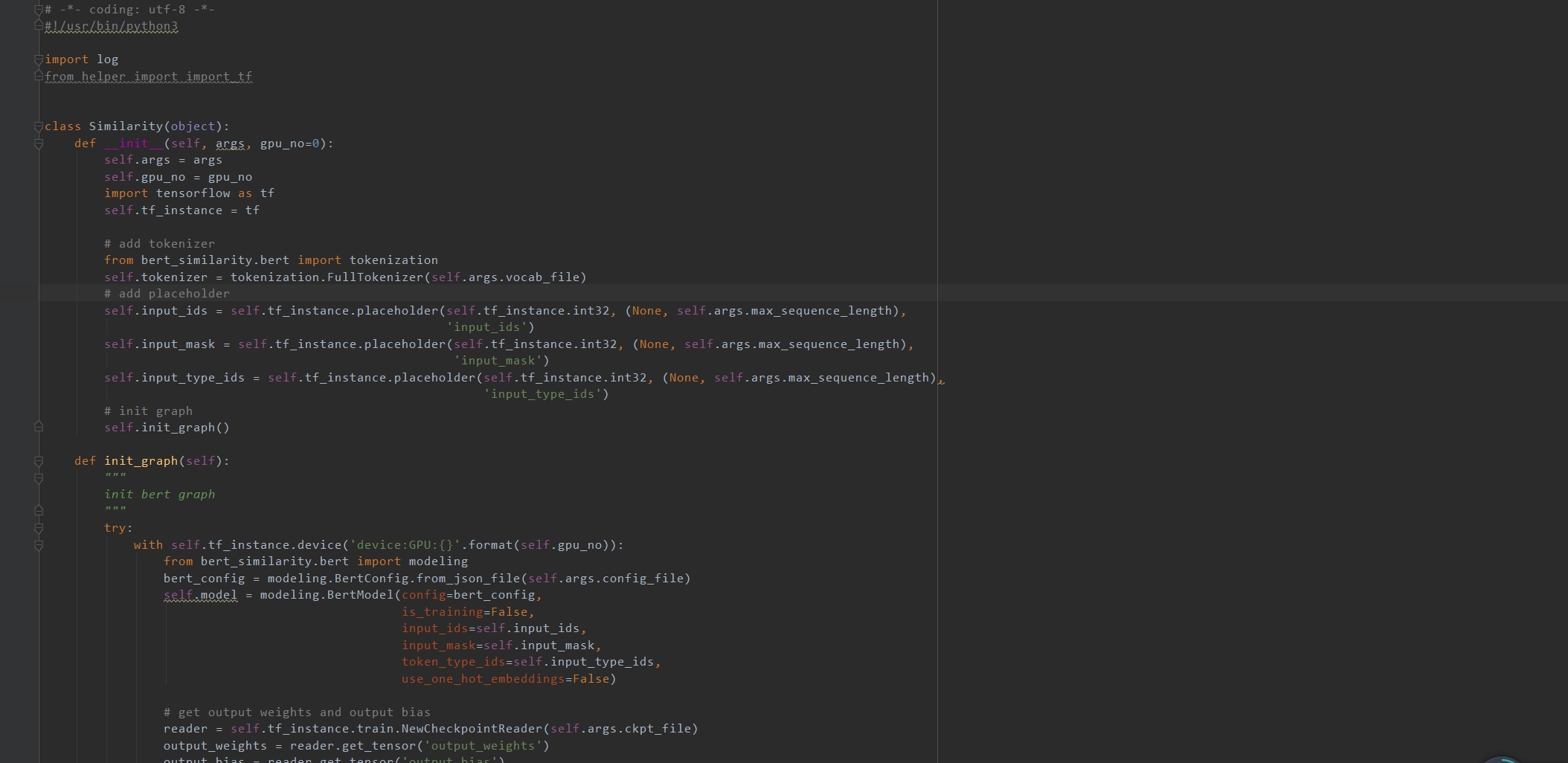

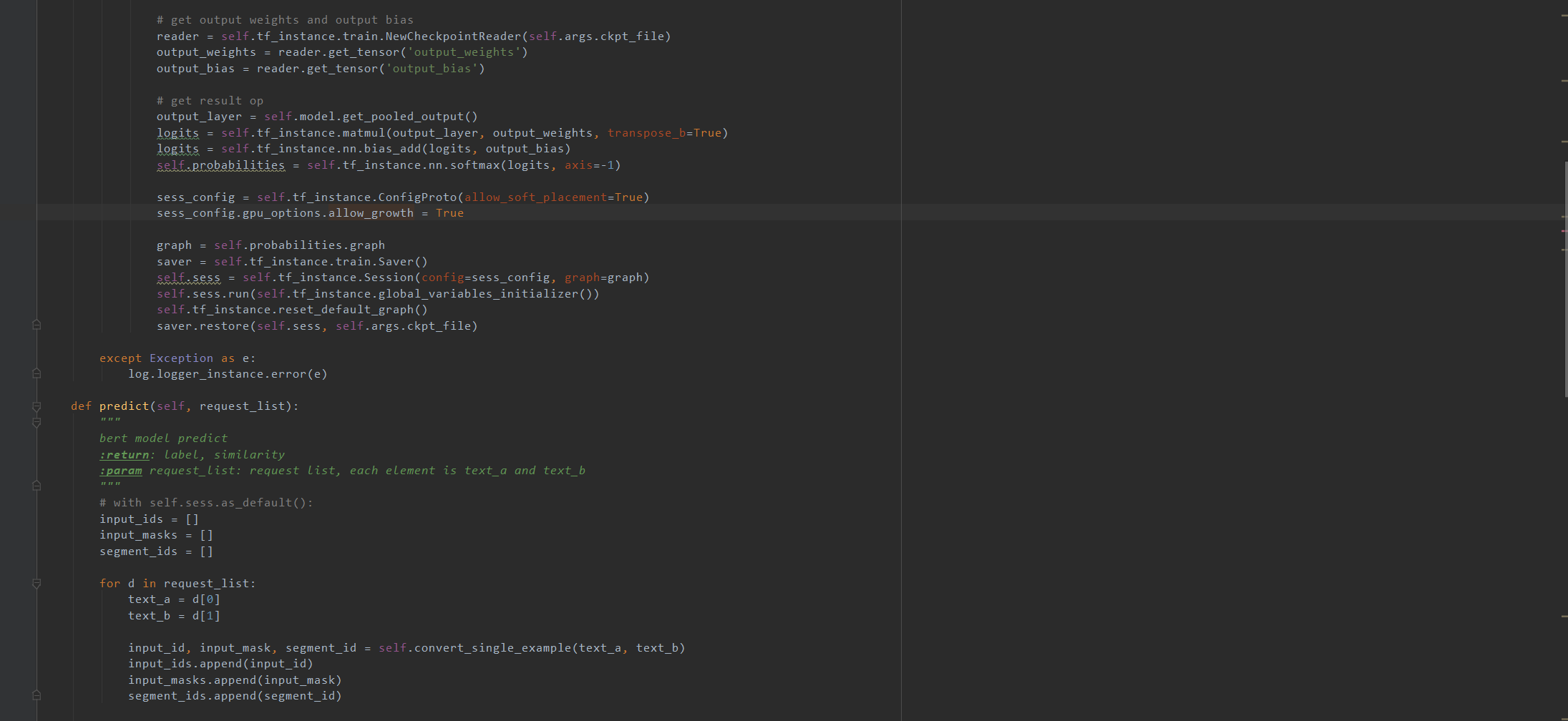

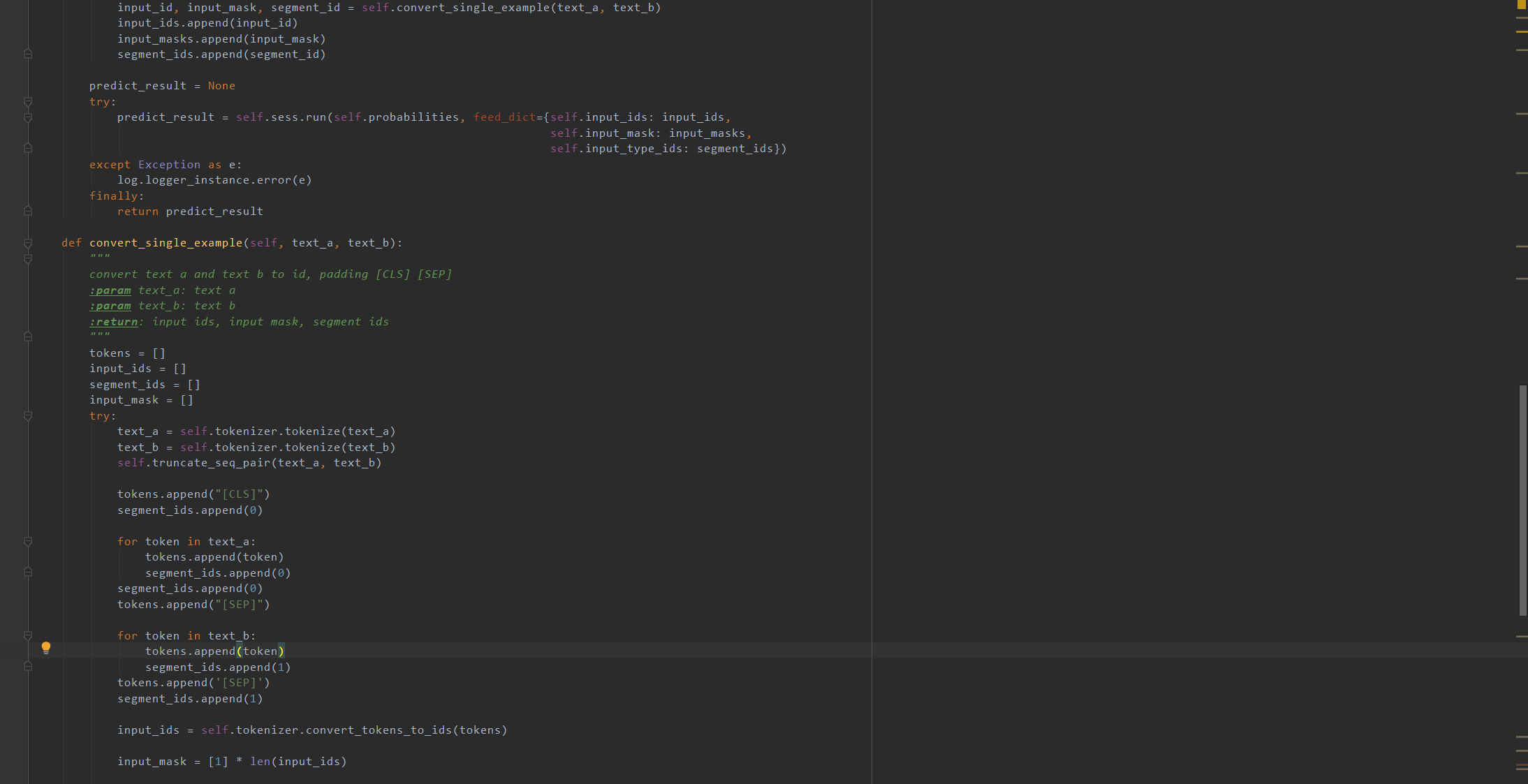

@DavidWiesner this is my code, but it still not work, it occur same condition, can you help me? |

|

Bert is also importing tensorflow so put your environment variables before import bert |
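The ordering rule can be made explicit with a small guard. This is a sketch: `ensure_not_yet_imported` is a hypothetical helper, and checking `sys.modules` is deliberately conservative, since importing TensorFlow does not necessarily initialize CUDA right away.

```python
import os
import sys


def ensure_not_yet_imported(modules=("tensorflow", "torch")):
    """Raise if a CUDA framework was imported before the GPU variables were set."""
    loaded = [name for name in modules if name in sys.modules]
    if loaded:
        raise RuntimeError(f"Set CUDA_VISIBLE_DEVICES before importing: {loaded}")


# These lines must run before anything that imports TensorFlow, including bert.
ensure_not_yet_imported()
os.environ["CUDA_VISIBLE_DEVICES"] = "1"
# import bert  # bert imports tensorflow itself, so it must come after the line above
```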

|

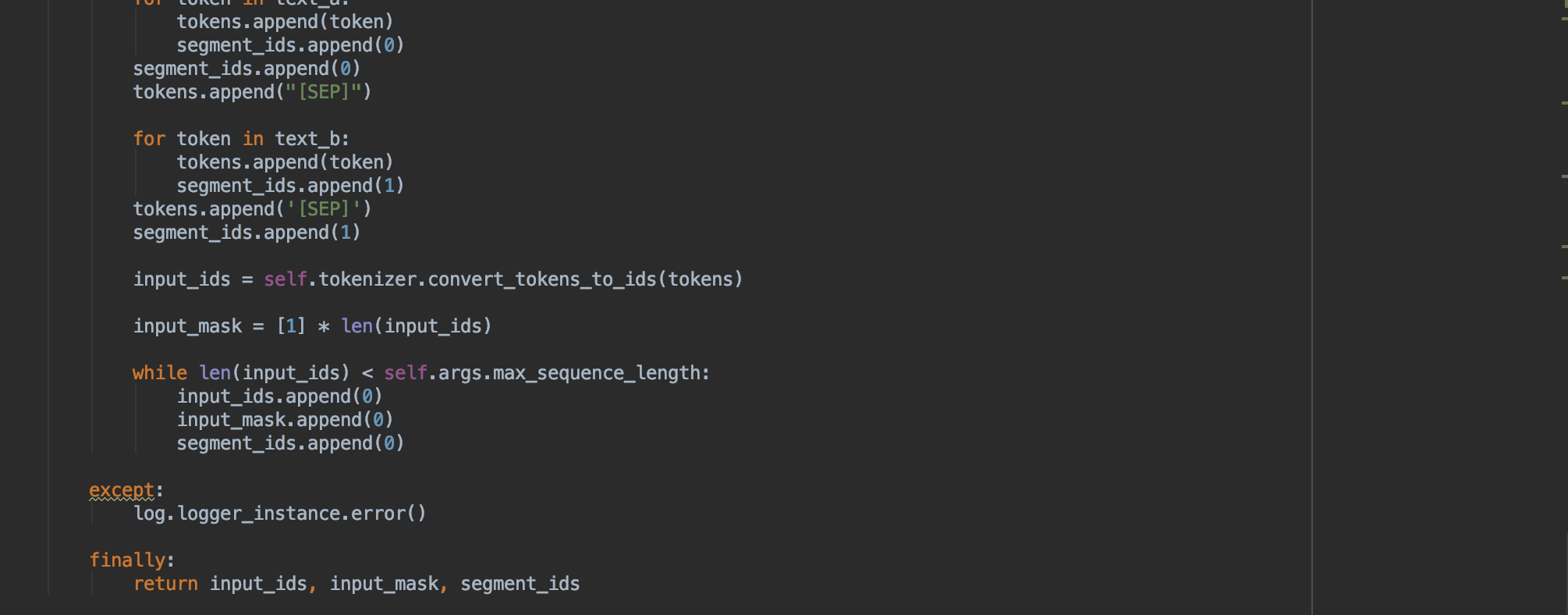

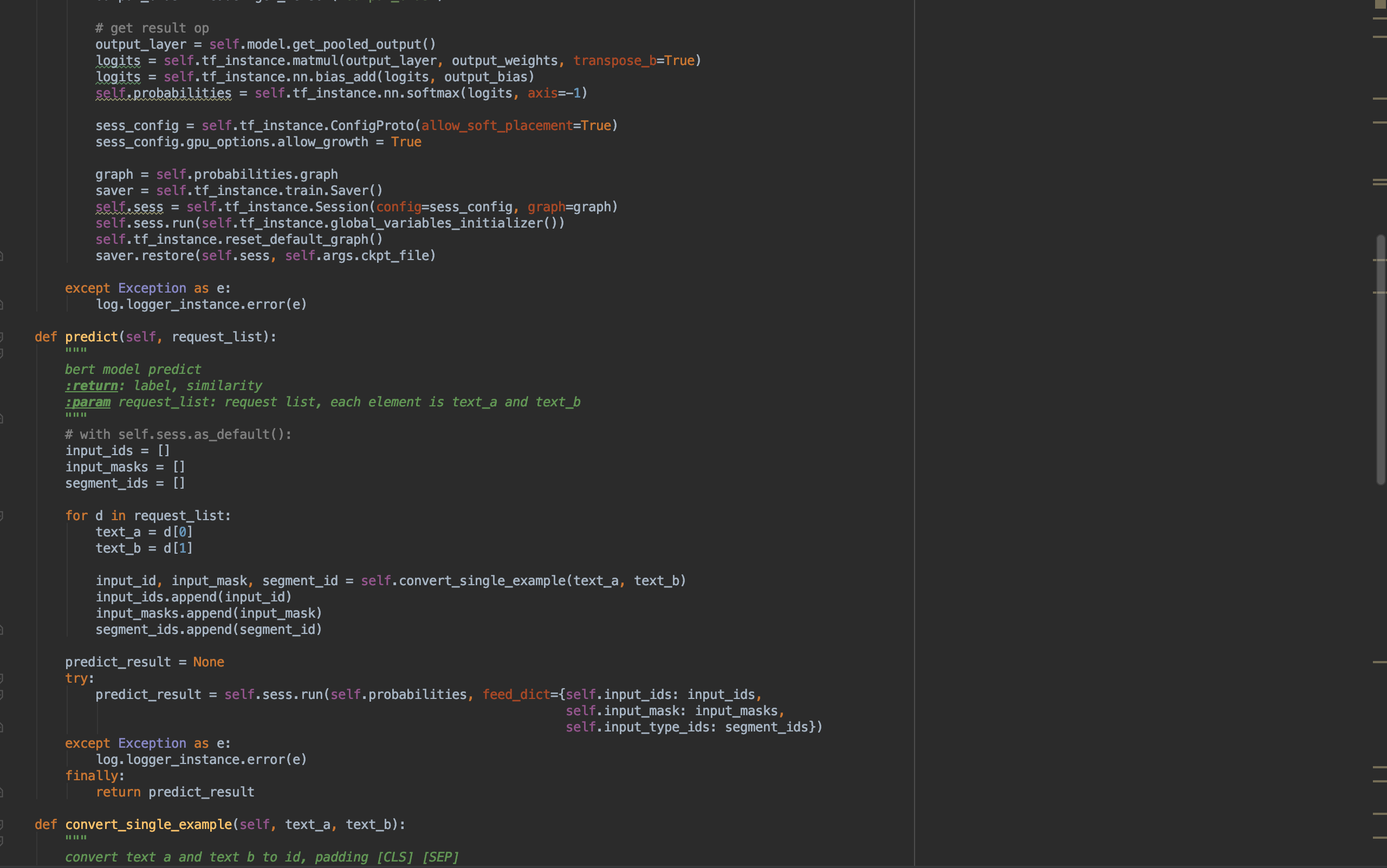

@DavidWiesner but I change my code, it still occur same condition, this is my code, after change, |

|

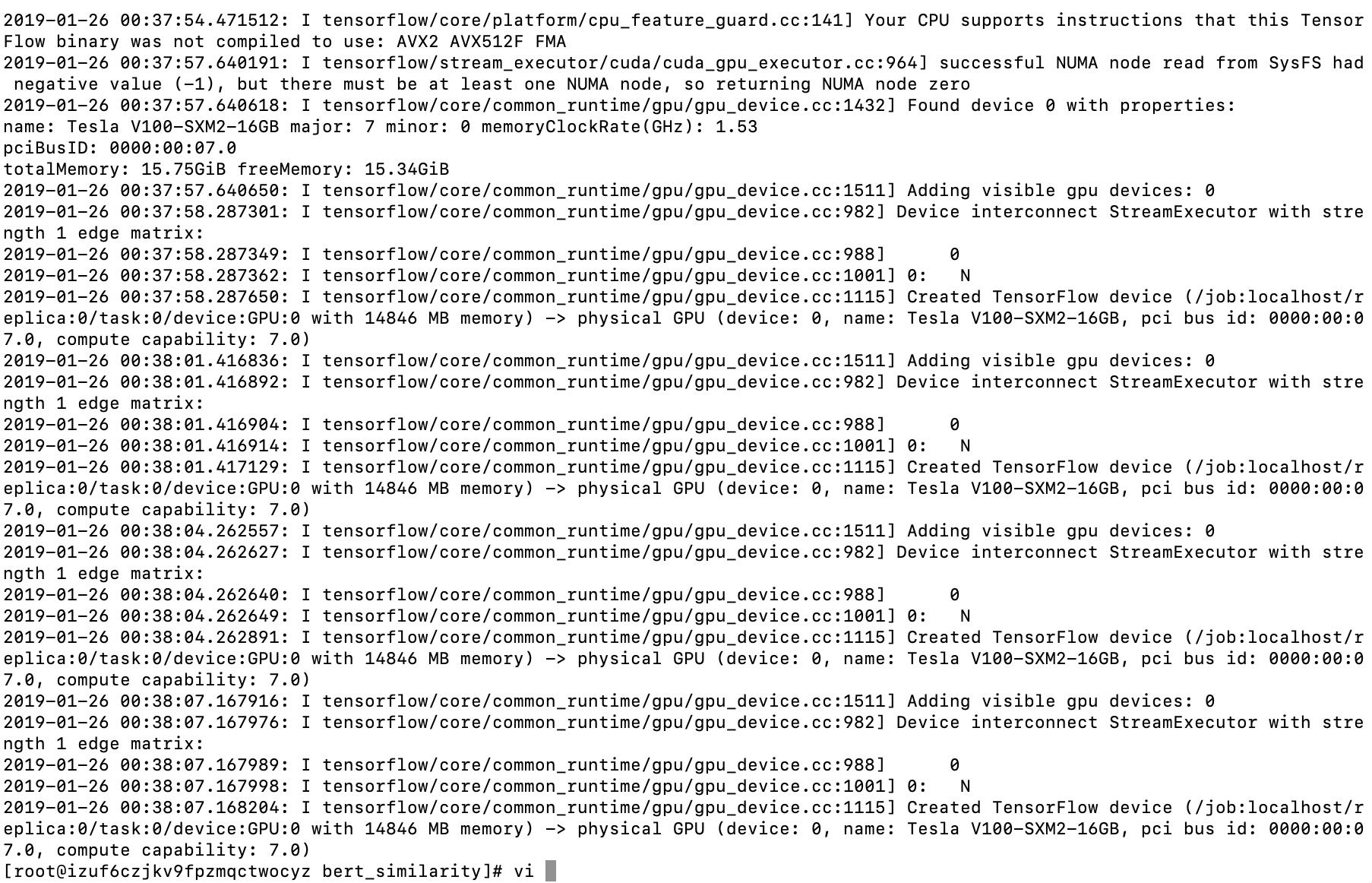

@DavidWiesner this is first page, it lossed |

|

@DavidWiesner please help me, this is very important to me, I was perplex by this problem 2 days. |

|

It is working. In the log you see only one gpu will be used |

|

@DavidWiesner sorry, It took me so long to get back to you, But in my code, I set different gpu number, when I create class instance, I create 4 class instance, I want first instance work in fist gpu, second instance work in second gpu, third instance work in third gpu, fourth instance work in fourth gpu, but in my log, four instance still work in first gpu, I try many time, but it it same. |
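One likely explanation, offered as an assumption since the code is only in screenshots: CUDA reads `CUDA_VISIBLE_DEVICES` once per process, when it is first initialized, so changing the variable for the second, third, and fourth instance inside the same process has no effect. A common workaround is one process per GPU. The sketch below launches workers with `subprocess`; `WORKER_CODE` and `launch_one_process_per_gpu` are hypothetical stand-ins for the real per-instance script.

```python
import os
import subprocess
import sys

# Hypothetical stand-in for the real per-instance script. In practice this
# would build one model instance and run it on "/GPU:0" (its only visible GPU).
WORKER_CODE = (
    "import os\n"
    "# import tensorflow as tf  # safe here: the variables are already set\n"
    "print(os.environ['CUDA_VISIBLE_DEVICES'])\n"
)


def launch_one_process_per_gpu(gpu_ids):
    """Start one worker per GPU, each seeing only its own GPU."""
    procs = []
    for gpu_id in gpu_ids:
        # Copy the parent environment and override the GPU variables, so the
        # child has them set before CUDA is ever initialized in that process.
        env = dict(os.environ,
                   CUDA_DEVICE_ORDER="PCI_BUS_ID",
                   CUDA_VISIBLE_DEVICES=str(gpu_id))
        procs.append(subprocess.Popen(
            [sys.executable, "-c", WORKER_CODE],
            env=env, stdout=subprocess.PIPE, text=True))
    # The workers run in parallel; collect each one's output in launch order.
    return [p.communicate()[0].strip() for p in procs]


if __name__ == "__main__":
    print(launch_one_process_per_gpu([0, 1, 2, 3]))
```

Separate processes also keep each instance's GPU memory isolated, at the cost of not sharing Python objects between instances.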

@omalleyt12 Can you help me?

@guptapriya Can you help me?

Hi @policeme, have you tried creating your graph under a …? Hope this helps.
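The suggestion is cut off, but one standard way to pin a graph to a particular GPU in TensorFlow is a `tf.device` scope. This sketch assumes TensorFlow 2.x with the `tf.compat.v1` graph API, and that `CUDA_VISIBLE_DEVICES` is not restricted to a single GPU, because each graph must be able to see its own GPU. `build_graph_on` is a hypothetical helper; `allow_soft_placement=True` lets the example fall back to the CPU on a machine without GPUs.

```python
import tensorflow as tf

tf1 = tf.compat.v1


def build_graph_on(device_name):
    """Build a tiny graph whose ops are all placed on device_name."""
    graph = tf.Graph()
    with graph.as_default(), tf.device(device_name):
        a = tf1.placeholder(tf.float32, shape=[None, 2], name="a")
        b = tf.matmul(a, tf.ones([2, 2]), name="b")
    return graph, a, b


# One graph (and one session) per visible GPU, or a single CPU graph if none.
gpus = tf.config.list_physical_devices("GPU")
devices = [f"/GPU:{i}" for i in range(len(gpus))] or ["/CPU:0"]
config = tf1.ConfigProto(allow_soft_placement=True, log_device_placement=True)

outputs = []
for dev in devices:
    graph, a, b = build_graph_on(dev)
    with tf1.Session(graph=graph, config=config) as sess:
        outputs.append(sess.run(b, feed_dict={a: [[1.0, 2.0]]}))
```

With `log_device_placement=True`, the session log shows which device each op was assigned to, which is a quick way to confirm that each graph landed on a different GPU.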

Hi @guptapriya, it didn't work. Here are my code and the runtime log; please help me.

Can you try a simple example first, such as the one from the guide, and see whether you are able to get ops on different GPUs? I wonder if TF is not detecting the multiple GPUs at all for some reason. It would be easier to debug with a small, simple code snippet.
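A small diagnostic in the spirit of this suggestion, assuming TensorFlow 2.x: list the GPUs TensorFlow can see and log where an op actually runs. If fewer GPUs are listed than `nvidia-smi` shows, `CUDA_VISIBLE_DEVICES` or the CUDA installation is the first thing to check.

```python
import tensorflow as tf

# GPUs this process can see after CUDA_VISIBLE_DEVICES has been applied.
gpus = tf.config.list_physical_devices("GPU")
print("Visible GPUs:", [gpu.name for gpu in gpus])

# Print the device chosen for every op that runs from here on.
tf.debugging.set_log_device_placement(True)

x = tf.random.uniform((2, 2))
y = tf.matmul(x, x)
print("matmul ran on:", y.device)
```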

|

@guptapriya in this program, it work, I have 1 GPU, when I set GPU:1, it will occur exception, but I don't know why my first program doesn't work, can you tell me why. |

|

@guptapriya this is running time log. |

|

Do you only have 1 GPU? If yes, do you mean why your original program didn't throw exception when you tried to use other GPUs? |

|

yes |

|

if you want original code, I can put my code into github |

|

can you check what is the value of |

|

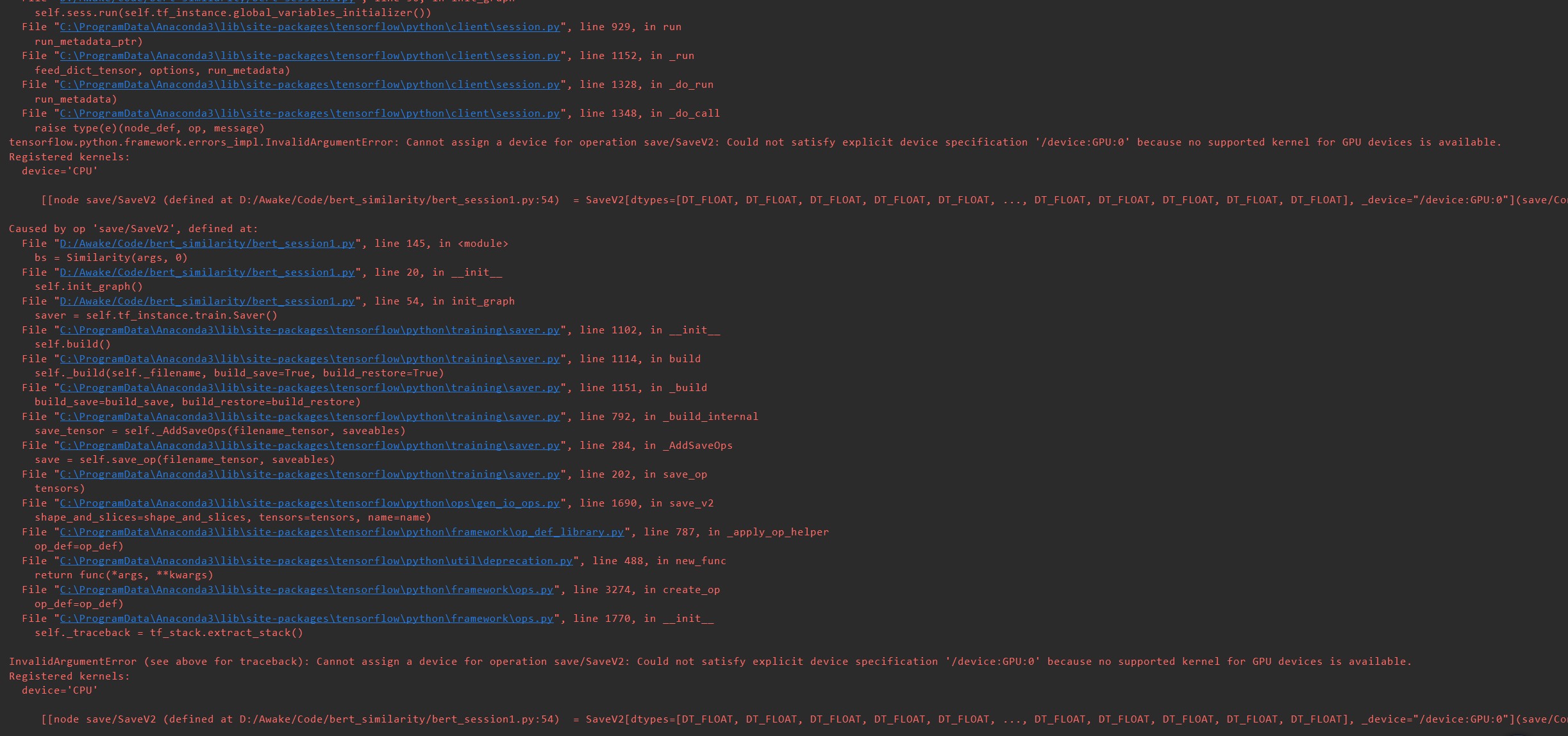

That's a different error because you're trying to place the saver ops on GPU which will not work. Also, it doesn't look like there is a bug here being reported, so I will close this ticket now. I believe stack overflow is a better venue for clarifications on usage, such as this. |
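A sketch of keeping checkpoint I/O off the GPU, assuming TensorFlow 2.x with the `tf.compat.v1` graph API (the original code is only in a screenshot): the model variables can live under a GPU scope, while the `Saver` is created under `/CPU:0` so that its save and restore ops are not pinned to the GPU.

```python
import os
import tempfile

import tensorflow as tf

tf1 = tf.compat.v1

graph = tf.Graph()
with graph.as_default():
    # Model variables (and compute) may be placed on a GPU.
    with tf.device("/GPU:0"):
        weights = tf1.get_variable(
            "weights", shape=[2, 2], initializer=tf1.zeros_initializer())
    # Build the Saver under a CPU scope: its ops do file I/O.
    with tf.device("/CPU:0"):
        saver = tf1.train.Saver()
    init = tf1.global_variables_initializer()

# allow_soft_placement lets "/GPU:0" fall back to the CPU on machines without a GPU.
config = tf1.ConfigProto(allow_soft_placement=True)
with tf1.Session(graph=graph, config=config) as sess:
    sess.run(init)
    checkpoint_path = saver.save(sess, os.path.join(tempfile.mkdtemp(), "model.ckpt"))

print("Saved checkpoint to", checkpoint_path)
```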

Please do not close this issue. How is this not a bug? With all these people having this issue, the least you can do is help or leave it open.

@jaingaurav Can you look into this, check whether there is a bug, and maybe point to the recommended APIs?

@policeme Hi, have you solved this problem? I have run into it too.

No, I haven't.


|

|

I am facing the similar issue using Pytorch. You may change your running command from However, I don't know why some codes could work some codes don't. |

|

@shamangary You need to set that before the first use of cuda rather than after that. The following works well for me. |

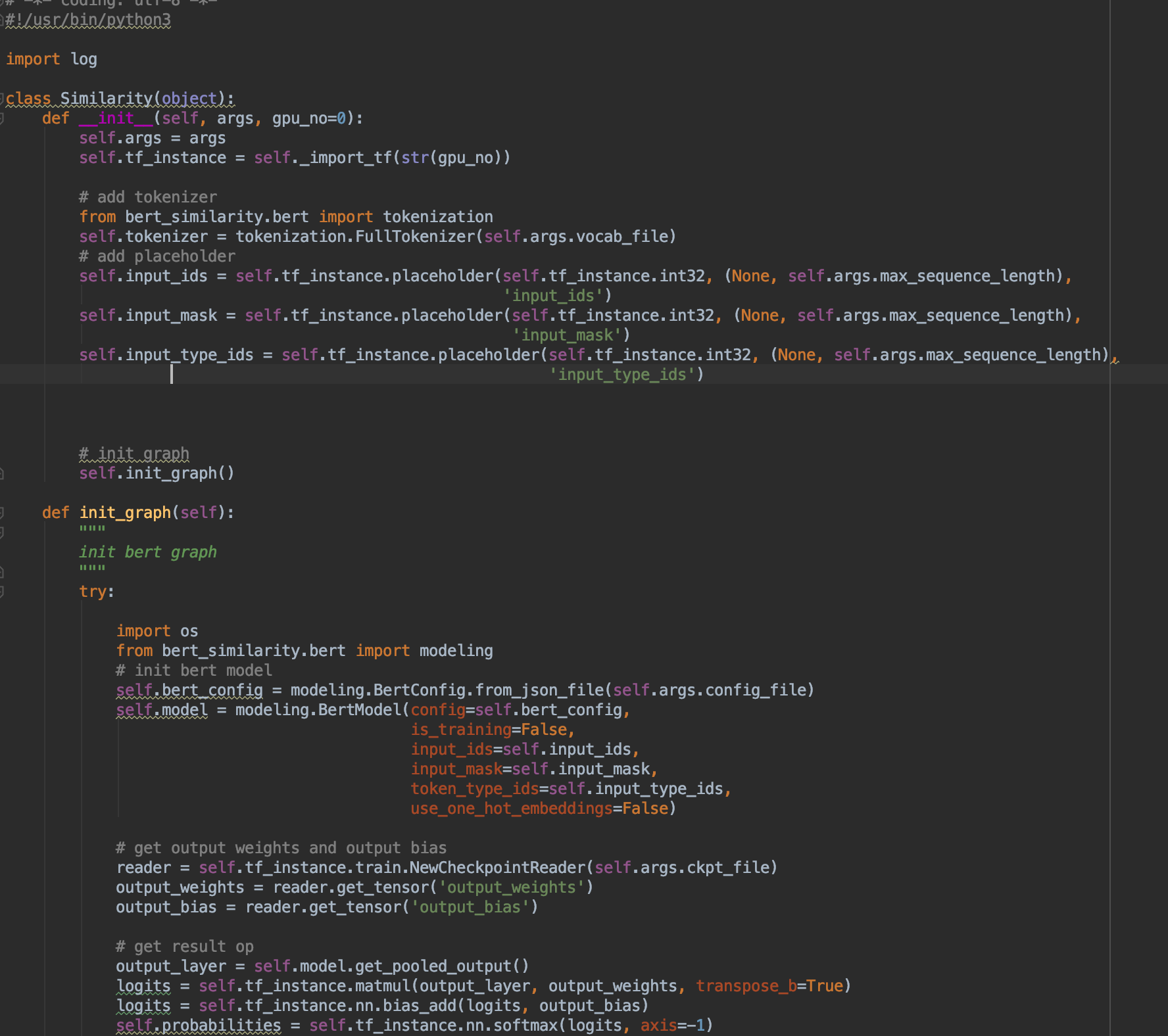

I have two GPU, I want to create 2 graph in 2 GPU, first graph in first GPU, second graph in second GPU.

2.when I create second graph in second GPU, I use os.environ["CUDA_DEVICE_ORDER"] = '1', but second graph still create on first GPU, I try many different ways, but it still not work.

It's a bug?
