Carpet Localisation

This project relates to the development of a carpet-based localisation system for an office robot.

Specifically, the system tracks the robot's position over time by comparing the color of the carpet beneath the robot to a map of the global color pattern. In this way the robot can keep track of where it is at all times, which is essential to successful navigation around the office.

Note this project is not expected to be of any use to anyone else, as the approach is quite particular to our specific carpet. It just happened that our carpet was so well suited to localisation, I had to give it a shot!

I'm sharing it mostly in the hope that there is some interest in the problems and approaches taken, or that someone finds some humor in the allocation of a decent amount of engineering time to a fairly insignificant and uncommon problem (localisation on our specific carpet).

This page gives an overview of how the problem was tackled, and what the final implementation looks like. See the 'links' section at the bottom for references to all source code.

Keywords: particle filter, Gaussian mixture model, ROS

Here's the robot in its natural environment:

Some characteristics of the carpet observable from this image:

- The carpet consists of a grid of 0.5m x 0.5m colored tiles

- There are four colors (dark blue, light blue, black and beige)

- The colors are distributed pseudo-randomly throughout the office

- The color pattern is unlikely to change over time (this avoids the map-dynamics issues which may be encountered, for instance, in lidar localisation)

Overall the carpet reminds me of a giant multi-color QR code, which is what led me to believe this could be a basis for reliable location tracking.

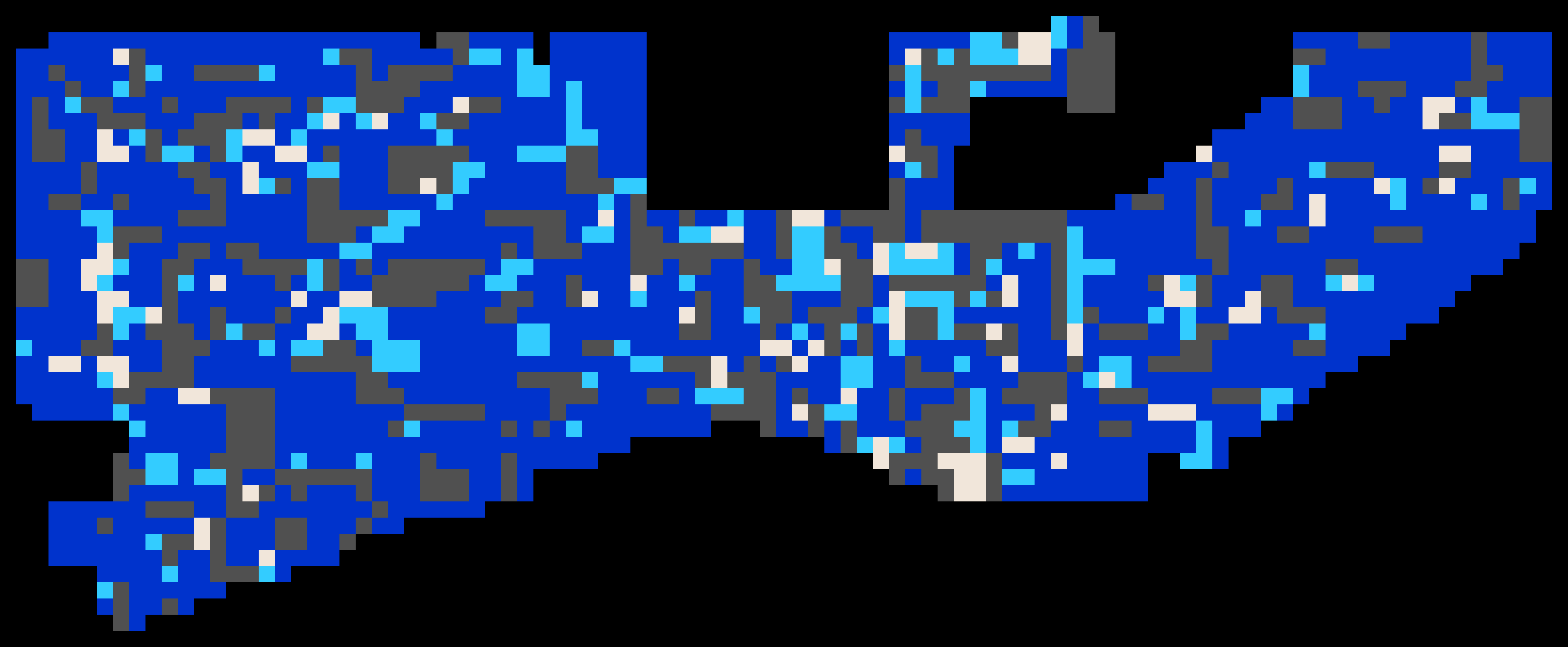

The following full office carpet map was produced by (laboriously!) recording carpet colors in an Excel spreadsheet, which was then converted to a .png image file:

Some observations of the map as a whole:

- The map covers a roughly 20m by 55m area.

- The color pattern shows little repetition, so global localisation should be achievable after observing only a small sub-patch of carpet.

- Whoever can tell me where the above photo was taken with reference to the above carpet map gets 50 points.
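The claim that a small sub-patch is enough to pin down position can be checked directly against a map. The following is a minimal sketch, not code from this project: `find_patch` and the toy `carpet` grid are hypothetical, with single letters standing in for the four tile colors, and rotation of the observed patch is ignored.

```python
def find_patch(carpet, patch):
    """Return every (row, col) at which `patch` matches `carpet` exactly."""
    rows, cols = len(carpet), len(carpet[0])
    p_rows, p_cols = len(patch), len(patch[0])
    matches = []
    for r in range(rows - p_rows + 1):
        for c in range(cols - p_cols + 1):
            # Compare the patch row by row against the map window at (r, c).
            if all(carpet[r + i][c:c + p_cols] == patch[i] for i in range(p_rows)):
                matches.append((r, c))
    return matches


# Toy 4 x 5 map (made up): D = dark blue, L = light blue, K = black, B = beige.
carpet = [
    "DLKBD",
    "BBDLK",
    "KDLBB",
    "LKBDL",
]

single_tile = find_patch(carpet, ["B"])         # one beige tile: ambiguous
two_by_two = find_patch(carpet, ["DL", "LB"])   # a 2 x 2 patch: far fewer candidates
```

On this toy map a single beige tile matches six locations, while the 2 x 2 patch matches only one. Running the same count over the real map would show how large a patch is needed before the match becomes unique.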

With the goal of localising based on carpet color, a means of sensing carpet color was required. I figured a camera should do for this, so I fixed a basic USB camera along with an LED for illumination to the underside of the robot, as shown:

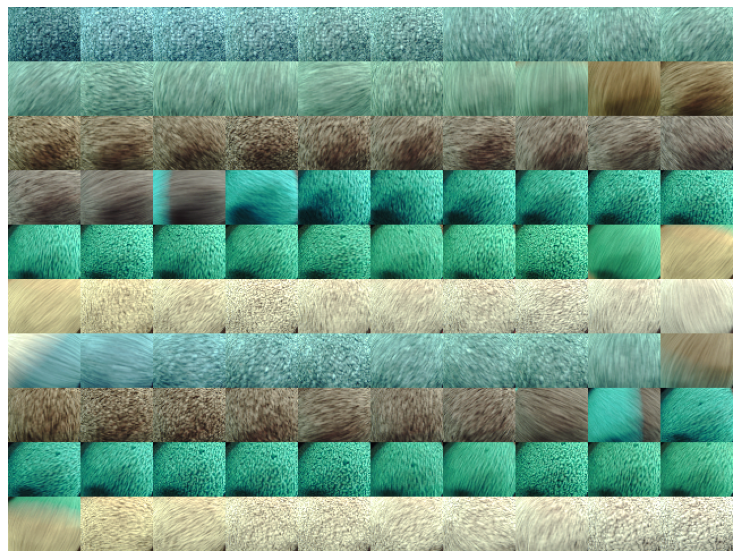

This camera produces images of the carpet under the robot that look like the following:

Figure: 100 sample images from the robot's carpet camera.

Note the colors here look much greener than in real life (for instance as shown in the 'target environment' photo above). This was deemed of little consequence as long as the color values are consistent and distinguishable, which turned out to be the case.

The next task was to determine which of the four colors (dark blue, light blue, black or beige) is dominant in a given image. To this end I created the training set of images shown above, containing multiple images of all four carpet colors. I took the average HSV value of each image, and then fit a Gaussian Mixture Model with four classes to those values, which captured the distinct clusters associated with the four carpet colors.
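To make the classification step more concrete, here is a rough standalone sketch of the idea rather than the notebook's actual code. It assumes a simplified mixture with diagonal covariances fitted by a hand-written EM loop, and it uses synthetic HSV features around made-up cluster centres instead of real carpet images. All function names (`mean_hsv`, `fit_gmm`, `classify`) are hypothetical.

```python
import colorsys
import math
import random


def mean_hsv(rgb_pixels):
    """Average HSV of an image given as (r, g, b) tuples in 0..255.

    Hue is averaged naively, ignoring its wrap-around at red.
    """
    hsv = [colorsys.rgb_to_hsv(r / 255, g / 255, b / 255) for r, g, b in rgb_pixels]
    return tuple(sum(channel) / len(hsv) for channel in zip(*hsv))


def log_gauss(x, mean, var):
    """Log density of a Gaussian with diagonal covariance."""
    return sum(-0.5 * (math.log(2 * math.pi * v) + (xi - m) ** 2 / v)
               for xi, m, v in zip(x, mean, var))


def responsibilities(x, model):
    """Posterior probability of each mixture component given point x."""
    weights, means, variances = model
    logs = [math.log(w) + log_gauss(x, m, v)
            for w, m, v in zip(weights, means, variances)]
    top = max(logs)
    exps = [math.exp(lp - top) for lp in logs]
    total = sum(exps)
    return [e / total for e in exps]


def fit_gmm(points, k, iterations=30, var_floor=1e-4):
    """Fit a k-component diagonal GMM with EM, using farthest-point initial means."""
    dim = len(points[0])
    means = [list(points[0])]
    while len(means) < k:
        means.append(list(max(points, key=lambda p: min(math.dist(p, m) for m in means))))
    variances = [[0.01] * dim for _ in range(k)]
    weights = [1.0 / k] * k
    for _ in range(iterations):
        # E step: soft-assign every point to the components.
        resp = [responsibilities(p, (weights, means, variances)) for p in points]
        # M step: re-estimate each component from its weighted points.
        for j in range(k):
            n_j = max(sum(r[j] for r in resp), 1e-9)
            weights[j] = n_j / len(points)
            means[j] = [sum(r[j] * p[d] for r, p in zip(resp, points)) / n_j
                        for d in range(dim)]
            variances[j] = [max(var_floor,
                                sum(r[j] * (p[d] - means[j][d]) ** 2
                                    for r, p in zip(resp, points)) / n_j)
                            for d in range(dim)]
    return weights, means, variances


def classify(x, model):
    """Index of the most probable component for feature x."""
    resp = responsibilities(x, model)
    return resp.index(max(resp))


# Synthetic stand-in for the training set: 25 noisy samples around each of
# four made-up HSV centres (not measured from the real carpet).
centres = [(0.60, 0.70, 0.25), (0.55, 0.40, 0.65), (0.30, 0.20, 0.10), (0.12, 0.30, 0.80)]
rng = random.Random(0)
features = [tuple(rng.gauss(c, 0.02) for c in centre)
            for centre in centres for _ in range(25)]
model = fit_gmm(features, k=4)
labels = [classify(f, model) for f in features]
```

The component indices are arbitrary, so a final step would still be needed to map each index to a color name by inspecting a few labelled images. In practice an off-the-shelf mixture model implementation would likely replace the hand-written EM loop.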

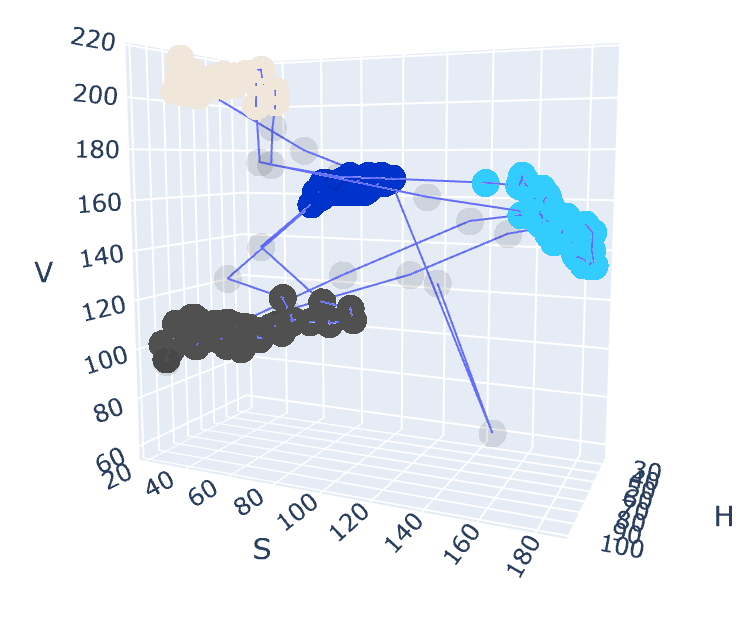

The following shows the four clusters associated with the four carpet colors in HSV space:

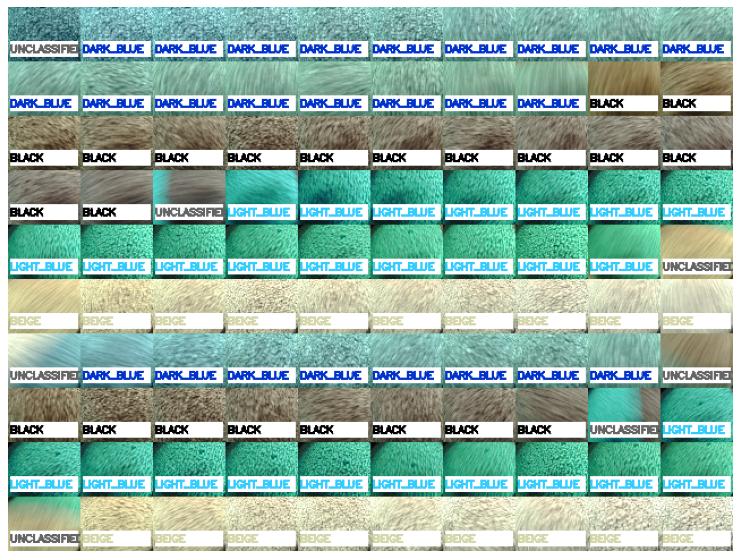

The following shows the results of applying the fitted model to determine carpet color over the training set images:

From visual inspection the assigned colors appeared to match ground truth well, so this is the model I went with. Color detection has turned out to be a well-performing, reliable component in the carpet localisation system, and hasn't required much tuning beyond initial training. I'm happy with it!

For more detail, the full Jupyter notebook used to train the classifier can be viewed via the links at the bottom of this page.

So, we know the color of the carpet beneath the robot - but how do we use that to find out where we are?!

I'm sure various approaches would work well, but I went for a particle filter, which I am familiar with. In contrast to a Kalman filter, the particle filter is well suited to the task as it can capture the highly non-Gaussian nature of the measurement updates (color observation) and process updates (odometry accumulation). For implementation, I was able to leverage the pfilter library, which made it easy to get running (thanks johnhw!).

The particle filter takes odometry and carpet color as inputs. Odometry updates move the particles (noisily) through the environment, while color observation updates kill off particles located on carpet squares of a different color. Hopefully this process results in the convergence of the particle cloud to the true robot pose.
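The two update types described above can be sketched in plain Python as follows. This is an illustrative standalone version and does not use the pfilter API; `motion_update`, `color_update`, the toy `carpet` and all noise values are hypothetical. The only number taken from this page is the 0.5 m tile size.

```python
import math
import random

TILE_SIZE = 0.5  # metres, from the carpet description


def tile_color(carpet, x, y):
    """Color of the tile under world position (x, y), or None if off the map."""
    col, row = math.floor(x / TILE_SIZE), math.floor(y / TILE_SIZE)
    if 0 <= row < len(carpet) and 0 <= col < len(carpet[0]):
        return carpet[row][col]
    return None


def motion_update(particles, forward, turn, pos_noise, heading_noise, rng):
    """Move every particle by the odometry increment plus Gaussian noise."""
    moved = []
    for x, y, heading in particles:
        heading += turn + rng.gauss(0.0, heading_noise)
        step = forward + rng.gauss(0.0, pos_noise)
        moved.append((x + step * math.cos(heading),
                      y + step * math.sin(heading),
                      heading))
    return moved


def color_update(particles, carpet, observed, mismatch_weight, rng):
    """Resample particles, favouring those on tiles of the observed color."""
    weights = [1.0 if tile_color(carpet, x, y) == observed else mismatch_weight
               for x, y, _ in particles]
    if sum(weights) == 0.0:
        return particles  # no particle explains the observation; keep the cloud
    return rng.choices(particles, weights=weights, k=len(particles))


# Toy 2 x 4 tile map (made up): D = dark blue, L = light blue, K = black, B = beige.
carpet = [
    "DLKB",
    "BKDL",
]
rng = random.Random(0)
particles = [(rng.uniform(0.0, 2.0), rng.uniform(0.0, 1.0), 0.0) for _ in range(200)]
particles = motion_update(particles, forward=0.1, turn=0.0,
                          pos_noise=0.02, heading_noise=0.01, rng=rng)
particles = color_update(particles, carpet, observed="K",
                         mismatch_weight=0.0, rng=rng)
```

With `mismatch_weight=0.0` every surviving particle sits on a black tile after the color update. A small positive value would instead let a few mismatched particles survive, which is one way to tolerate the occasional misclassified image.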

The particle filter was tested on simulated data as shown below, with promising results:

Figure: particle filter running on simulated input data (see simulator.py), driving in a loop on a small map. The particles (red) generally track the true position (green) fairly well.

TODO: add link to Sebastian Thrun particle filter tutorials.

At this point I had

- A robot with a camera

- A color classifier, and

- A localisation algorithm.

To pull this together into a working robotic system, I used a variety of ROS components. I also created a ROS node to integrate my color classification and particle filter into the ROS network. Some ROS components/packages of use:

- RViz, for visualisation. Its '2D Pose Estimate' tool is also used to seed the particle filter.

- Rosbag, for recording/playback of test runs

- create_robot, for controlling the robot and providing odometry

- cv_camera, for accessing the USB camera

- cartographer_ros - when I realised my encoder odometry was not great, I switched to using lidar odometry, using cartographer's scan matching.

- The navigation stack, providing global and local planners to move the robot to specified goal locations.
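As an illustration of the seeding step mentioned in the RViz item above: the '2D Pose Estimate' tool publishes a pose with covariance (by default on the `/initialpose` topic), and one plausible way to turn that into an initial particle cloud is to scatter particles around it. The sketch below assumes this approach; `seed_particles` and its spread parameters are hypothetical, and the ROS subscription itself is left out.

```python
import math
import random


def seed_particles(x, y, heading, count, pos_std, heading_std, rng):
    """Draw `count` particles (x, y, heading) scattered around a seed pose.

    Headings are wrapped into the range [-pi, pi).
    """
    return [
        (
            rng.gauss(x, pos_std),
            rng.gauss(y, pos_std),
            (heading + rng.gauss(0.0, heading_std) + math.pi) % (2 * math.pi) - math.pi,
        )
        for _ in range(count)
    ]


# Hypothetical seed pose, roughly where the operator clicked in RViz.
cloud = seed_particles(10.0, 5.0, 3.1, count=500,
                       pos_std=0.5, heading_std=0.2, rng=random.Random(1))
```

A wider `pos_std` makes the filter more forgiving of an imprecise click, at the cost of needing more color observations before the cloud tightens.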

TODO: would be nice to put an rqt_graph screenshot here.

With the system up and running in the target environment, I took some time to tune some of the available particle filter parameters. I recorded a log while driving five full loops of the office, manually noting the ground truth pose of the robot at the end of each loop. This log could then be replayed using different particle filter parameters, with quality assessed based on how well the outputs match the recorded ground truth.

In practice the key parameters for tuning were the particle filter noise parameters (odometry noise and color measurement weighting), and the number of particles to use. Tuning to good performance on this 5-loop log gave me some confidence that the system would run well in the longer term in real-world use.
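The replay-and-score loop can be sketched as a simple grid search. This is an assumption about how such tuning could be organised, not a description of the tuning notebook: `grid_search`, `mean_position_error`, the parameter names and the `fake_replay` stand-in are all hypothetical, and the ground truth values are invented.

```python
import itertools
import math


def mean_position_error(estimates, ground_truth):
    """Mean Euclidean distance between estimated and true (x, y) positions."""
    return sum(math.dist(e, g) for e, g in zip(estimates, ground_truth)) / len(ground_truth)


def grid_search(replay, ground_truth, grid):
    """Replay the log for every parameter combination and return the best one."""
    best_params, best_error = None, math.inf
    names = sorted(grid)
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        error = mean_position_error(replay(**params), ground_truth)
        if error < best_error:
            best_params, best_error = params, error
    return best_params, best_error


# Invented ground truth: the robot ends each of the five loops at the origin.
ground_truth = [(0.0, 0.0)] * 5


def fake_replay(odom_noise, n_particles):
    """Stand-in for replaying the recorded log through the particle filter."""
    drift = abs(odom_noise - 0.1) + 50.0 / n_particles
    return [(drift, 0.0)] * 5


grid = {"odom_noise": [0.01, 0.1, 1.0], "n_particles": [100, 500]}
best_params, best_error = grid_search(fake_replay, ground_truth, grid)
```

In a real setup `fake_replay` would be replaced by a function that feeds the recorded odometry and color observations through the filter and returns its estimate at the end of each loop. Since the filter is stochastic, averaging the error over several replays per parameter set would give a steadier score.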

The full notebook used for tuning is listed in the links at the bottom of this page.

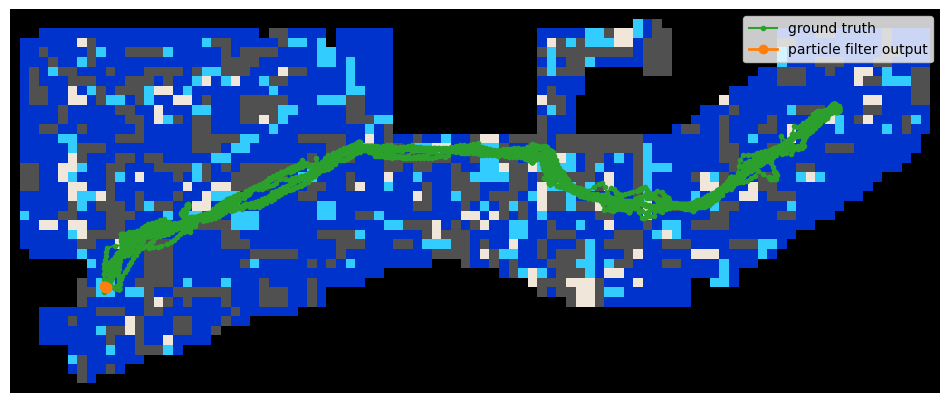

To give some idea of the importance of parameter tuning, here's an illustration of the particle filter output when well tuned (note an error in the legend - the particle filter output is shown in green):

The output trajectory repeatedly matches the true trajectory for this test run.

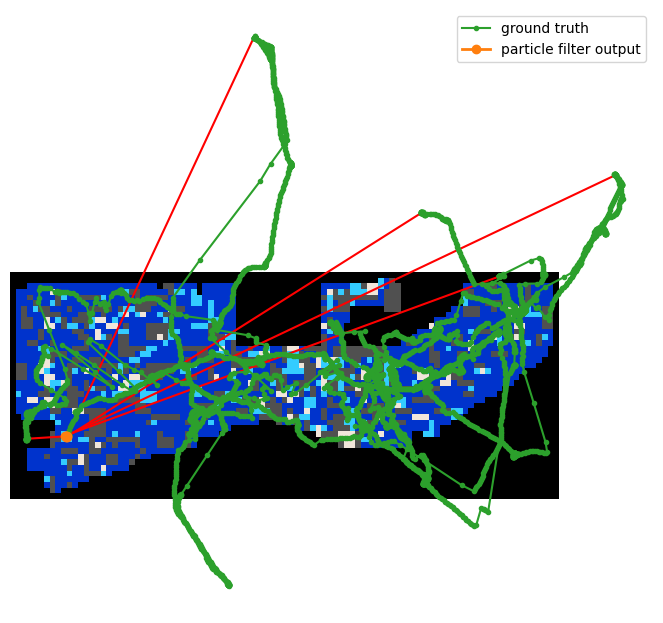

In contrast, this is the output when poorly tuned:

Obviously an appropriate parameter set is critical to achieving good results with the particle filter!

At the time of writing the localisation system is deemed more-or-less complete, and is being used in its first application - timesheet reminders.

Here's a .gif showing the RViz visualisation of the live system:

As can be seen, the raw images are being classified into the four carpet colors, which are then used to update the particle filter. The particles can be observed moving and dying off in response to various inputs from the camera, which results in the particle cloud following the robot's ground truth pose reliably. All working as expected!

Observed performance is good and reliable, with only an occasional divergence that requires re-seeding.

I feel that with enough work this system could be made quite bulletproof, and also be made self-seeding by matching local patches of carpet pattern to the global map. However, I've about reached the end of my attention span for this project, so I think I'll leave it there! On to other things!

Thanks for reading. If you have any thoughts or questions about the project, I'd love to hear from you. Feel free to use the discussion tab: https://github.com/tim-fan/carpet_localisation/discussions/1#discussion-3562911

My code:

- Python package containing the carpet-based particle filter: https://github.com/tim-fan/carpet_localisation

- Jupyter notebook showing the process of tuning the filter on real-world data: https://nbviewer.jupyter.org/github/tim-fan/carpet_localisation/blob/master/notebooks/PF%20Parameter%20Optimisation%20-%205%20Office%20Loops.ipynb

- Code related to classification of carpet color from images: https://github.com/tim-fan/carpet_color_classification

- Jupyter notebook used to train the color classifier: https://nbviewer.jupyter.org/github/tim-fan/carpet_color_classification/blob/main/notebooks/train_classifier.ipynb

- ROS package for using carpet localisation in a ROS system: https://github.com/tim-fan/carpet_localisation_ros

- Longer term project for the office bot, containing move base configuration and more: https://github.com/tim-fan/office_bot

External code:

- Python package implementing the particle filter: https://github.com/johnhw/pfilter