bail meme: out of loom #3645

Comments

|

Getting a very similar error for my fakezod: |

|

Yeah this has become a really serious problem recently, since the |

|

What's the main culprit in |

|

my ship seems to be getting a slightly different error now. It was bail meme, now it's bail oops. |

|

I have the same issue. I breached a couple of months ago, and was using my moon exclusively since. Switched to my planet yesterday (my planet was only relaying connections for my moon(s)), and encountered this issue after my planet half-joined a group (I think). The name came up as Used the build here: #3235 (comment) The planet does run for a while, and pulls in messages and data (like which ships have sunk) but then it eventually crashes with some variation of the above. My planet has ~14.7 million events. |

|

I've had a similar issue a few times this evening. I have no idea if it's related, but I'm also seeing quite a few messages like these in dojo. |

|

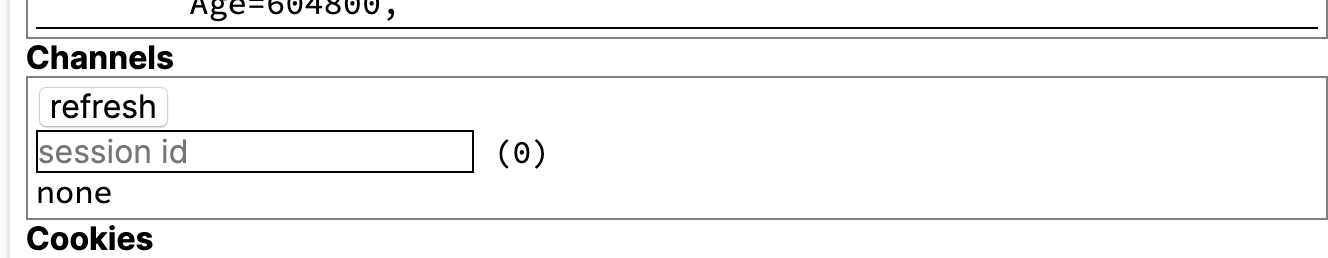

i am experiencing same issue after i half-joined and/or half-unsubscribed some of the channels/groups yesterday. my ship keeps crashing a few minutes after boot. +trouble: |

|

i also got this |

|

Update to my previous comment: #3645 (comment) It seems the issue has been resolved for me. I used the stable binary after running It's been running for over a day now with no issues. |

|

@t-e-r-m i am on |

|

Booted up my moon and I'm seeing the same behavior. Output of +trouble: |

|

@david-xlvrs see #3235 (comment) |

|

thanks @t-e-r-m. I tried running meld with that binary (#3235 (comment)) and it completed successfully. Unfortunately, I'm still crashing when running the urbit binary from that release and the latest stable binary release (v0.10.8). |

|

Hi All,

Sorry for the delayed response. I realize that the current state of our memory management tooling has not been fully explained in any one place, so I'll attempt to do so here.

First of all, the crash output of the current release is fraught, due to a) bad error messages and b) a crash-handling bug. Both problems will be fixed in the next release (#3471). "pier: serf error: end of file" means "the worker process unexpectedly shut down" (in a unique dialect combining urbit and unix idioms). Everything from "address X out of loom" to "Assertion failed" results from the crash-handling bug described above (when it follows the "end of file" error, that is). The relevant detail precedes both:

These errors are sometimes ephemeral, since the loom holds both our persistent state and the "workspace" for processing a given event. In that case, restarting urbit is all that's required. But they usually recur, and require further intervention to be stopped. The first thing to try upon restart is

If the above fails, or is not possible due to crash frequency, or does not reclaim enough space to move forward, there are two remaining options. Both involve as-of-yet unreleased features.

Note that The only way to work around this is to run it on a machine with more memory. (There are various ways this implementation could be made to use less memory, most of them involving more sophisticated hashtable implementations. Reach out if you're the kind of person who likes writing hashtables!)

Finally, there's From the dojo, run the following:

This will write a The following output will tell you that it worked:

at which point, you can run

Obviously, this recovery process is both unnecessarily complex and tediously manual. The next kernel and runtime releases will bring some affordances, but many more are still needed. Urbit should be able to avoid many of these memory-pressure scenarios proactively, and automatically recover from many more. This remains a top development priority. Most of this will involve more sophisticated memory management and error-handling in the runtime -- I've been putting down the foundations for this throughout 2020. But some will need to involve limits and their enforcement inside arvo (such as #3680, or better handling of |
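For readers trying to picture why an "out of loom" crash can be either ephemeral or recurring, here is a toy model of a fixed-size arena that holds both persistent state and per-event workspace, as the comment above describes. It is only a sketch and not Urbit's actual allocator: `ToyLoom`, `process`, and all the sizes are hypothetical names and numbers chosen for illustration.

```python
class ToyLoom:
    """Toy fixed-size arena shared by persistent state and per-event workspace.

    Hypothetical model for illustration only; not how the real runtime works.
    """

    def __init__(self, capacity):
        self.capacity = capacity  # total size of the arena (arbitrary units)
        self.persistent = 0       # space held by state that survives events

    def process(self, workspace, retained):
        """Process one event.

        `workspace` is the scratch space needed while the event runs;
        `retained` is the part that becomes persistent state afterwards.
        """
        if retained > workspace:
            raise ValueError("an event cannot retain more than it allocated")
        # Persistent state and the event's workspace must fit together.
        if self.persistent + workspace > self.capacity:
            raise MemoryError("out of loom")
        # The workspace is released when the event completes; only the
        # retained portion keeps occupying the arena.
        self.persistent += retained


def fits(loom, workspace):
    """Return True if an event needing `workspace` would currently fit."""
    return loom.persistent + workspace <= loom.capacity


loom = ToyLoom(capacity=100)
loom.process(workspace=30, retained=10)

# Ephemeral case: one unusually large event fails, but the failure leaves
# persistent state untouched, so later, smaller events still succeed.
try:
    loom.process(workspace=95, retained=0)
except MemoryError:
    pass
loom.process(workspace=20, retained=5)

# Recurring case: once persistent state fills most of the arena, even small
# events fail on every restart until space is reclaimed some other way.
full = ToyLoom(capacity=100)
full.persistent = 98
```

In this model, a restart helps only when the failure came from a single large workspace; when the persistent portion itself is close to capacity, every subsequent event hits the same limit, which matches the "they usually recur" observation above.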

|

Thank you very much @joemfb for the detailed response. The manual |

|

My ship is broken. I'm getting regular "out of loom" crashes. According to mass (see https://hatebin.com/gcjhvqkfqb ) I have 1.3GB of vane-e channels: I can't "cram" or "meld" the box using the pre-release binaries mentioned above - full outputs at https://hatebin.com/hmgnwsldft |

|

It's worth noting that |

|

Closing in favor of #4182. |

Describe the bug

Ship crashes with error

I used the Mac binary to run meld from: #3235 (comment)

Output of meld:

Started ship after meld, and it crashed again:

System (please supply the following information, if relevant):

Additional context

My pier is 15.68 GB, which seems kind of large.
