Update recommended min UA Resource Timing buffer size to 250 #155

@@ -784,15 +784,15 @@ <h3>Extensions to the <code>Performance</code> Interface</h3>
 interface to allow controls over the number of
 <a>PerformanceResourceTiming</a> objects stored.</p>
 <p>The recommended minimum number of
-<a>PerformanceResourceTiming</a> objects is 150, though this may be
+<a>PerformanceResourceTiming</a> objects is 250, though this may be

Slightly tangential nit: I think we need stronger language for the minimum. I suspect developers will ignore UAs that decide to go with lower buffer sizes, which will result in dropped entries and potential compat issues. That doesn't have to happen in this PR, though.

I support this change, but I think it needs commitment from all UAs, as partial adoption may lead to dropped entries (once developers start assuming that the new value is the minimum everywhere).
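To make the dropped-entries concern concrete, here is a toy TypeScript model of a bounded per-document buffer. It is a simplification, not the spec's buffering algorithm: the class and function names are hypothetical, entries are reduced to URLs, nothing ever drains the buffer, and the `onFull` callback only loosely stands in for the `resourcetimingbufferfull` event (this model fires it once).

```typescript
// Toy model of a Resource Timing buffer; NOT the spec algorithm.
// Hypothetical names throughout. Entries that arrive while full are dropped.
class RtBufferModel {
  private readonly entries: string[] = [];
  private readonly size: number;
  private readonly onFull: (() => void) | undefined;
  private droppedCount = 0;
  private fullNotified = false;

  constructor(size: number, onFull?: () => void) {
    this.size = size;
    this.onFull = onFull;
  }

  // Queue one entry (just its URL here); returns false if it was dropped.
  add(url: string): boolean {
    if (this.entries.length < this.size) {
      this.entries.push(url);
      return true;
    }
    // Loosely mirrors resourcetimingbufferfull; this model fires it only once.
    if (!this.fullNotified && this.onFull !== undefined) {
      this.fullNotified = true;
      this.onFull();
    }
    this.droppedCount++;
    return false;
  }

  get stored(): number {
    return this.entries.length;
  }

  get dropped(): number {
    return this.droppedCount;
  }
}

// Feed `pageResources` entries into a buffer of `uaBufferSize`, never draining it.
function simulatePage(pageResources: number, uaBufferSize: number): RtBufferModel {
  const buffer = new RtBufferModel(uaBufferSize);
  for (let i = 0; i < pageResources; i++) {
    buffer.add(`https://example.com/resource-${i}`);
  }
  return buffer;
}

// A 200-resource page: a 250-entry UA keeps all of it, a 150-entry UA drops 50.
const onNewDefault = simulatePage(200, 250);
const onOldDefault = simulatePage(200, 150);
console.log(onNewDefault.dropped, onOldDefault.dropped); // 0 50
```

The only difference between the two runs is the UA default, which is why a script that assumes the new minimum everywhere could silently lose entries on a UA that stays at 150.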

This seems sane to me. Just a quick question about when we buffer events: are we planning on having that apply to Resource Timing? If so, do we know what the data looks like if we only look at resources fetched before onload?

@tdresser I don't think we'll be able to stop queuing RT entries post-onload once we land the PerformanceObserver (PO) buffering logic, as that would break nearly every existing client. RT will have to be a special case where we do both: we will provide a way for PO to retrieve buffered entries queued prior to onload (e.g. by passing a buffered flag to PO registration), and we will continue logging post-onload entries into the existing buffer. For new specs we don't have to do this, but given the wide adoption of RT, I think we need to provide both to keep the migration path simple without breaking old clients. FWIW, I think the "what is the distribution of same-frame resources prior to onload" question is worth investigating a bit further. I believe we should have all the right bits in HTTP Archive to extract this: we have detailed data on initiator / frame, and we can filter out any requests initiated after onload fires. @nicjansma @rviscomi perhaps you can do some digging?
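A toy model of the "do both" approach may help. It is a sketch under stated assumptions, not the Performance Timeline spec: `TimelineModel`, `queue`, `fireOnload` and `observe` are hypothetical names, the `buffered` boolean stands in for the buffered flag mentioned in the comment, and the existing buffer is modeled as a simple capped array.

```typescript
// Toy model (hypothetical API) of RT doing both:
//  (a) a late observer registered with buffered=true gets pre-onload entries replayed;
//  (b) every entry, before or after onload, still goes into the legacy capped buffer.
interface TimingEntry {
  name: string;
  startTime: number;
}

class TimelineModel {
  private readonly preOnload: TimingEntry[] = [];
  private readonly legacy: TimingEntry[] = [];
  private readonly observers: Array<(e: TimingEntry) => void> = [];
  private readonly legacyLimit: number;
  private onloadFired = false;

  constructor(legacyLimit: number) {
    this.legacyLimit = legacyLimit;
  }

  queue(entry: TimingEntry): void {
    if (!this.onloadFired) this.preOnload.push(entry); // replayable to buffered observers
    if (this.legacy.length < this.legacyLimit) this.legacy.push(entry); // old-style buffer
    for (const cb of this.observers) cb(entry); // live delivery to registered observers
  }

  fireOnload(): void {
    this.onloadFired = true;
  }

  observe(cb: (e: TimingEntry) => void, buffered: boolean): void {
    if (buffered) for (const e of this.preOnload) cb(e);
    this.observers.push(cb);
  }

  legacyEntries(): TimingEntry[] {
    return this.legacy.slice();
  }
}

// Two entries before onload, a late buffered observer, two entries after onload.
const timeline = new TimelineModel(3);
timeline.queue({ name: "a.js", startTime: 10 });
timeline.queue({ name: "b.css", startTime: 20 });
timeline.fireOnload();
const seen: string[] = [];
timeline.observe((e) => seen.push(e.name), true);
timeline.queue({ name: "c.png", startTime: 900 });
timeline.queue({ name: "d.png", startTime: 950 });
console.log(seen.join(","), timeline.legacyEntries().length); // a.js,b.css,c.png,d.png 3
```

The late observer sees everything, while existing clients reading the capped buffer keep working exactly as before, including its limit.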

LGTM

@nicjansma can you open implementation bugs to modify that value and link them here?

This sounds good for us, too.

Happy to help, but not sure of the exact problem statement. It may be worth creating a new thread on discuss.httparchive.org and documenting the question/answer so the community can help as well.

Firefox 62 will contain this change per https://bugzilla.mozilla.org/show_bug.cgi?id=1462880

Chromium CL is at https://chromium-review.googlesource.com/c/chromium/src/+/1078107

Following a proposed spec change[1], this CL changes the number of entries buffered by default before the Resource Timing buffer is full. [1] w3c/resource-timing#155 Bug: 847689 Change-Id: Id93bfea902b0cf049abdac12a98cc4fba7ff1dd3

Following a proposed spec change[1], this CL changes the number of entries buffered by default before the Resource Timing buffer is full. [1] w3c/resource-timing#155 Bug: 847689 Change-Id: Id93bfea902b0cf049abdac12a98cc4fba7ff1dd3 Reviewed-on: https://chromium-review.googlesource.com/1078107 Reviewed-by: Nicolás Peña Moreno <npm@chromium.org> Commit-Queue: Yoav Weiss <yoav@yoav.ws> Cr-Commit-Position: refs/heads/master@{#563133}

@igrigorik - just noticed that the "what is the distribution of same-frame resources prior to onload" question went unanswered. Cross-origin iframes' data was not included in @nicjansma's research, as RUM has no visibility into their performance timeline. So the HTTP Archive analysis would let us see whether there's a large difference between the main frame and same-origin iframes in terms of resource counts, but I doubt that would significantly reduce the numbers in most cases. I agree that it seems worthwhile to dig further, but I'm not sure we should block on it. WDYT?

You're probably right, but I'd still love to sanity check this with data. :)

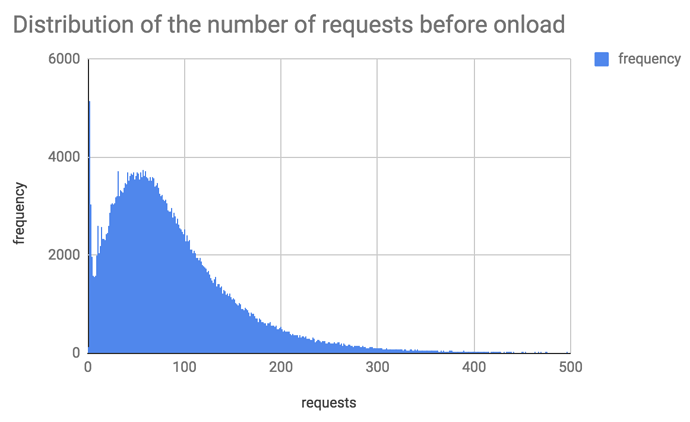

The specific question we're after is: what is the distribution of the count of resources fetched by pages, where the fetch is initiated before the end of the page's onload event (for example, excluding resources deferred until after onload, lazy-loaded content, etc.)?
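One way to state that filter precisely, as a sketch: given per-request records with the time each fetch was initiated and the time the page's onload event ended, count only requests initiated strictly before that point. The `RequestRecord` shape and its field names are hypothetical and do not reflect the HTTP Archive schema.

```typescript
// Hypothetical per-request record; not the HTTP Archive table schema.
interface RequestRecord {
  url: string;
  initiatedAt: number; // ms since navigation start, when the fetch was initiated
}

// Number of requests initiated before the end of the page's onload event.
// Requests initiated at or after onloadEnd (deferred, lazy-loaded, ...) are excluded.
function countBeforeOnloadEnd(requests: RequestRecord[], onloadEnd: number): number {
  return requests.filter((r) => r.initiatedAt < onloadEnd).length;
}

// One count per page: the values whose distribution the question asks about.
function perPageCounts(pages: Array<{ requests: RequestRecord[]; onloadEnd: number }>): number[] {
  return pages.map((p) => countBeforeOnloadEnd(p.requests, p.onloadEnd));
}
```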

Ran the HTTP Archive analysis on the distribution of requests before onload. See the full writeup here: https://discuss.httparchive.org/t/requests-per-page-before-onload/1376

In summary, the median is 74 requests, with a bimodal distribution peaking around 1 and 50 requests. IIUC, did you also want to split this by initiator?

@rviscomi awesome work, thank you!

I don't think it would directly affect what we're after here, but it might be an interesting data point. One question: what percentile is 250 requests? What are the 95th, 97th, 99th, etc.?

250 is the 95.86th percentile. The 95th is 234. For more detailed percentile info, see the raw data in this sheet.
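The two numbers in this exchange answer slightly different questions: the percentile rank of a threshold (what share of pages have at most 250 requests) and the value at a given percentile (the 95th). A small sketch of both follows, using the nearest-rank definition. The function names are hypothetical, and the HTTP Archive analysis may well have used a different quantile definition, so results on real data can differ slightly.

```typescript
// Percentile rank: percentage of pages with at most `threshold` requests.
function percentileRank(counts: number[], threshold: number): number {
  const atOrBelow = counts.filter((c) => c <= threshold).length;
  return (100 * atOrBelow) / counts.length;
}

// Nearest-rank percentile: smallest value with at least p% of pages at or below it.
function nearestRankPercentile(counts: number[], p: number): number {
  if (counts.length === 0) throw new Error("no data");
  const sorted = counts.slice().sort((a, b) => a - b); // numeric, not lexicographic
  const rank = Math.max(1, Math.ceil((p * sorted.length) / 100));
  return sorted[rank - 1];
}

// Share of pages that would overflow a buffer of the given size (complement of the rank).
function overflowShare(counts: number[], bufferSize: number): number {
  return 100 - percentileRank(counts, bufferSize);
}
```

Run over the real per-page counts, `percentileRank(counts, 250)` and `nearestRankPercentile(counts, 95)` correspond to the two questions asked here; `overflowShare` is the same calculation expressed as the share of pages that would fill a buffer of that size.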

@rviscomi awesome data, thanks! :) Since it all adds up, I haven't heard objections, and this change has already landed in 2 implementations, I'm merging it.

…ze to 250., a=testonly Automatic update from web-platform-tests Change Resource Timing default buffer size to 250. Following a proposed spec change[1], this CL changes the number of entries buffered by default before the Resource Timing buffer is full. [1] w3c/resource-timing#155 Bug: 847689 Change-Id: Id93bfea902b0cf049abdac12a98cc4fba7ff1dd3 Reviewed-on: https://chromium-review.googlesource.com/1078107 Reviewed-by: Nicolás Peña Moreno <npm@chromium.org> Commit-Queue: Yoav Weiss <yoav@yoav.ws> Cr-Commit-Position: refs/heads/master@{#563133} -- wpt-commits: f696c249c6d41c32bdc04d0bec688ee00228a0cf wpt-pr: 11257

Spec issue: w3c/resource-timing#155 UltraBlame original commit: 761d1da1d483d5573f91acd0d28082e90fbed28a

…ze to 250., a=testonly Automatic update from web-platform-tests Change Resource Timing default buffer size to 250. Following a proposed spec change[1], this CL changes the number of entries buffered by default before the Resource Timing buffer is full. [1] w3c/resource-timing#155 Bug: 847689 Change-Id: Id93bfea902b0cf049abdac12a98cc4fba7ff1dd3 Reviewed-on: https://chromium-review.googlesource.com/1078107 Reviewed-by: Nicolás Peña Moreno <npm@chromium.org> Commit-Queue: Yoav Weiss <yoav@yoav.ws> Cr-Commit-Position: refs/heads/master@{#563133} -- wpt-commits: f696c249c6d41c32bdc04d0bec688ee00228a0cf wpt-pr: 11257 UltraBlame original commit: 898838b493f9ff1a7a94f17c40998c08d50c16c9

I'd like to propose a modest change to the "recommended" initial UA Resource Timing (RT) buffer size of 150.

I've been doing some research into various aspects of ResourceTiming "visibility", and have found that over 14% of sites generate more than 150 resources in their main frame.

Here's a distribution of the Alexa Top 1000 sites by how many RT entries come from their main frame (from a crawl that increased the buffer size in the page head):

In this crawl, ~18% of sites would have hit the 150 limit (and only 15% of those sites set the buffer size higher).

The 150 figure is a recommended limit for UAs, not a spec requirement, though I believe all current UAs choose 150.

There's some previous discussion on whether 150 is appropriate, or if we should suggest UAs pick a higher default here: #89

The reason I think it should be increased is that:

- `setResourceTimingBufferSize`, third-party analytics scripts that may be loaded at lower priority or after onload might want to increase this limit to ensure they capture all of the page's resources, but by the time they load, the buffer may already be full.

It would still be up to UAs to decide whether to choose 250, stay at 150, or go for a lower initial limit (maybe even depending on the environment).
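The late-loading analytics point can be illustrated with a toy simulation: resources arrive one at a time, a script raises the limit (as a call to `setResourceTimingBufferSize` would) only after the first `resizeAfter` resources have already arrived, and nothing drains the buffer in between. The function and its parameters are hypothetical, and the model ignores clearing the buffer and the bufferfull event.

```typescript
// Toy simulation (hypothetical): how many of `total` resource entries end up
// stored if the buffer starts at `initialSize` and a script raises it to
// `newSize` after the first `resizeAfter` resources have arrived.
// Entries that arrived while the buffer was full are lost for good.
function capturedEntries(total: number, initialSize: number, resizeAfter: number, newSize: number): number {
  let size = initialSize;
  let stored = 0;
  for (let i = 0; i < total; i++) {
    if (i === resizeAfter) size = newSize; // the (possibly late) resize call
    if (stored < size) stored++; // otherwise this entry is dropped
  }
  return stored;
}

// A 300-resource page whose analytics script raises the limit to 500 after 200 resources:
console.log(capturedEntries(300, 150, 200, 500)); // 250 (50 entries lost)
console.log(capturedEntries(300, 250, 200, 500)); // 300 (nothing lost)
```

Resizing at `resizeAfter = 0`, i.e. before any resource arrives (for example from an inline script early in the head), captures everything in this model; the later the call, the more entries are lost unless the UA's default already covers the page.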

Changing to 250 will leave only 2.5% of the Alexa Top 1000 sites with a full buffer (more than 250 resources in the main frame).
