Model Convergence Problem #29

Comments


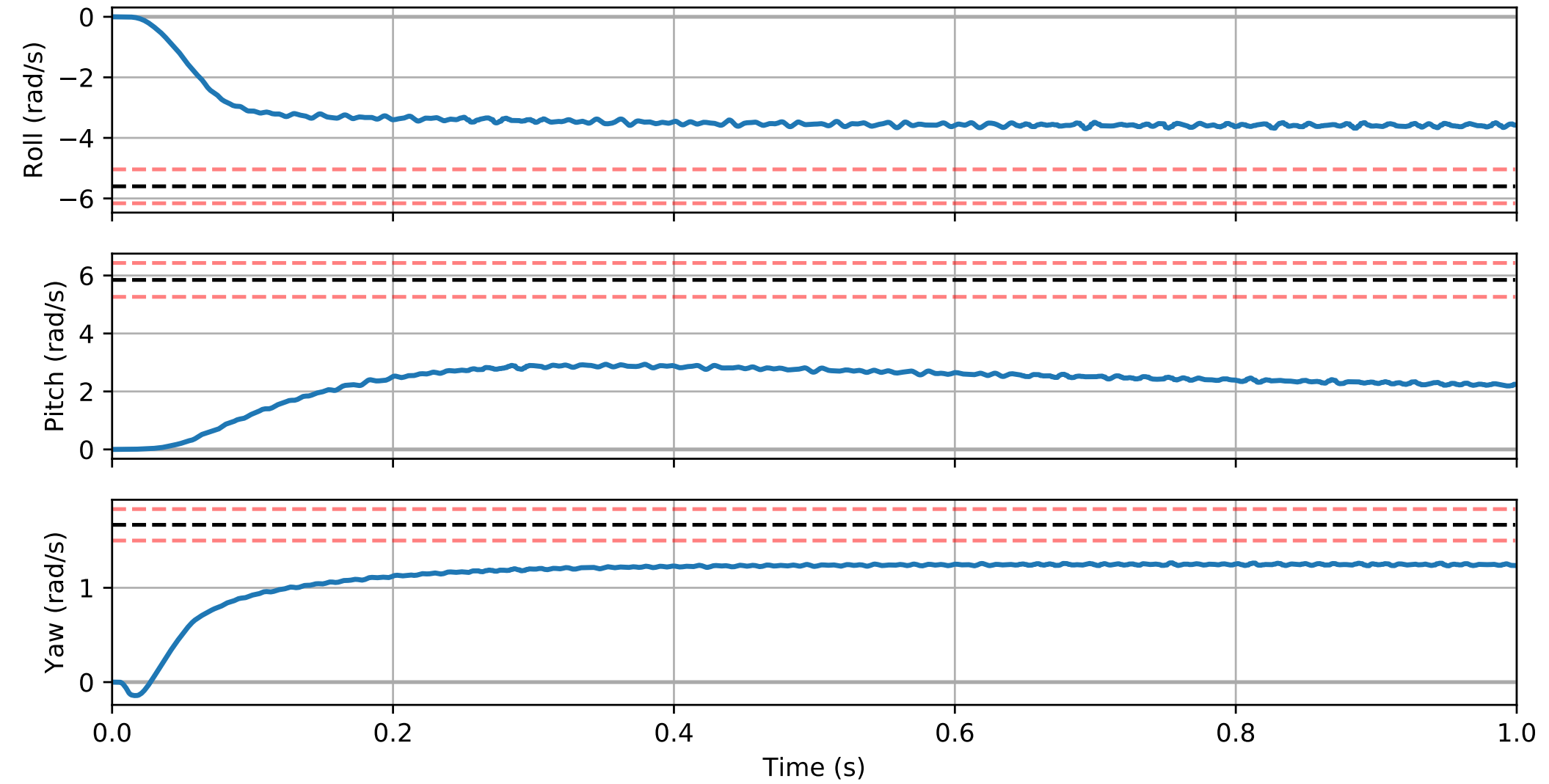

Hi @hnsyzjianghan, RL can be really sensitive and finicky. I haven't tried the older version of gymfc with gz9 and ROS, but if the PID example is working fine then that shouldn't cause any problems. The graph you are showing me isn't awful; it's learning the step, just not quite there. Some things to check/try:


Dear William,


So that script is just a demo script for interfacing with gymfc using OpenAI baselines; it isn't the script used for the manuscript. For your own work, you will need to implement your own script to interface with the gym. The gym source for the neuroflight paper was never published, just the neuroflight firmware code. gymfc2 is a huge implementation change; it's now a general flight control tuning framework. The gymfc2 core itself isn't an OpenAI gym any more, however creating one is extremely easy, as you just need to inherit the FlightControlEnv class and provide your own state and reward functions. I will be porting the OpenAI gym interfaces over to the new architecture when I have time. An example of creating a subclass can be found here. Further details will be in my thesis, which will be completed in the next couple of weeks, and when I have time I'll add more to the readme and additional examples. In the meantime, these are new motor models that I've ported over for gymfc2: https://github.com/wil3/gymfc-aircraft-plugins.
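
For anyone following along, here is a rough sketch of the subclassing idea described above: inherit a base environment and supply your own state and reward functions. Note that `FlightControlEnvStub`, `step_sim`, `reset_sim`, and the toy dynamics are all hypothetical stand-ins so the example runs without Gazebo; they are not gymfc's actual API, so check the gymfc source for the real method names.

```python
class FlightControlEnvStub:
    """Hypothetical stand-in for gymfc's FlightControlEnv (NOT the real API)."""

    def __init__(self, max_steps=1000):
        self.max_steps = max_steps
        self.step_count = 0
        self.angular_velocity = [0.0, 0.0, 0.0]

    def step_sim(self, action):
        # Toy first-order response so the sketch runs without a simulator.
        self.angular_velocity = [
            w + 0.1 * (a - w) for w, a in zip(self.angular_velocity, action)
        ]
        self.step_count += 1

    def reset_sim(self):
        self.step_count = 0
        self.angular_velocity = [0.0, 0.0, 0.0]


class RateControlEnv(FlightControlEnvStub):
    """Subclass that adds a user-defined state and reward function."""

    def __init__(self, target, max_steps=1000):
        super().__init__(max_steps)
        self.target = list(target)  # desired angular velocity per axis

    def state(self):
        # State is the per-axis angular velocity error.
        return [t - w for t, w in zip(self.target, self.angular_velocity)]

    def reward(self):
        # Negative sum of absolute errors: zero is the best possible reward.
        return -sum(abs(e) for e in self.state())

    def reset(self):
        self.reset_sim()
        return self.state()

    def step(self, action):
        # Gym-style step: returns (observation, reward, done, info).
        self.step_sim(action)
        done = self.step_count >= self.max_steps
        return self.state(), self.reward(), done, {}


if __name__ == "__main__":
    env = RateControlEnv(target=[1.0, 0.0, 0.0], max_steps=3)
    obs = env.reset()
    done = False
    while not done:
        obs, rew, done, _ = env.step([1.0, 0.0, 0.0])
        print(obs, rew)
```

The `step` and `reset` methods mirror the usual gym interface, so a wrapper along these lines could plausibly be handed to a training script once the stub is swapped for the real base class.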


Sorry, I went on a business trip the other day. Thank you for your reply. I will use your model to finish my project.


Hi William,


Hi @hnsyzjianghan,


Okay, I'll change it in future work, thank you.

Hi William,

I am trying to run this project (gymfc v0.1.0) on Ubuntu 18.04 with Gazebo 9. I use the PPO1 algorithm from baselines to train the controller, just like the example you gave me.

https://gist.github.com/wil3/4115a31c527afd4a7f8ecfab88fa4a24

However, after 15 million steps, the EpRewMean does not seem to converge, although it is much higher than its initial value. When I load the trained graph, the performance of the controller does not meet the criteria in your paper.

I use the Gazebo 9 that ships with ROS and the code you provided (run_gymfc.py). Please tell me where the problem is, thank you.
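
One rough way to judge whether EpRewMean has stopped improving is to compare the mean of the most recent logged values with the mean of the window before it. The sketch below assumes the EpRewMean values have already been copied out of the training log into a list; the function name `has_plateaued`, the window size, and the tolerance are illustrative choices, not part of baselines or gymfc.

```python
def has_plateaued(rewards, window=5, tolerance=0.05):
    """Return True if the last `window` values changed by less than
    `tolerance` (relative) compared with the preceding `window` values."""
    if len(rewards) < 2 * window:
        return False  # not enough data to compare two windows
    recent = sum(rewards[-window:]) / window
    previous = sum(rewards[-2 * window:-window]) / window
    scale = max(abs(previous), 1e-8)  # avoid dividing by zero
    return abs(recent - previous) / scale < tolerance


if __name__ == "__main__":
    # Hypothetical EpRewMean values, one per logged iteration.
    still_improving = [-100 + 5 * i for i in range(10)]
    flat = [-20.0] * 10
    print(has_plateaued(still_improving))
    print(has_plateaued(flat))
```

A plateau only says the reward has stopped changing; it does not say the controller meets any step-response criteria, so the trained policy still has to be evaluated separately.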
