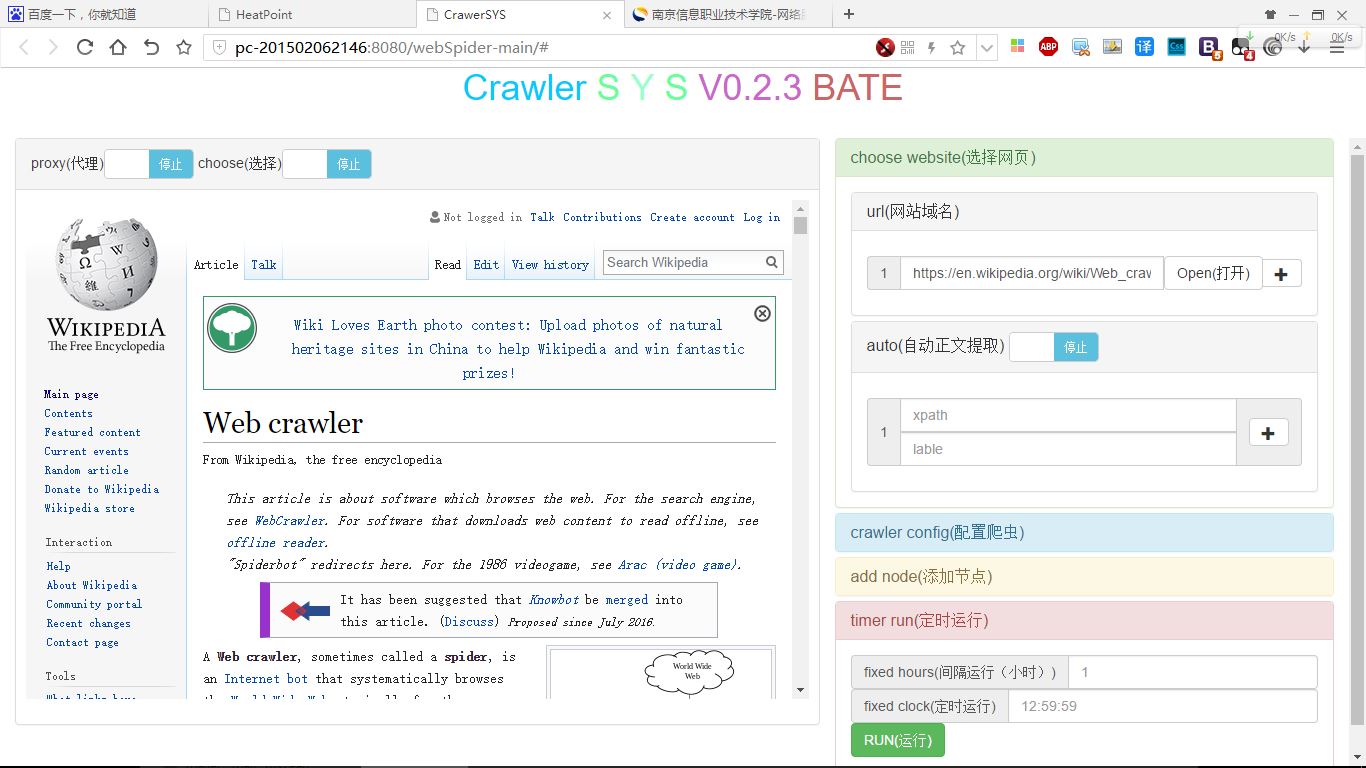

A lightweight, high-performance, reliable, smart distributed crawler system. preview | Chinese documentation

- It's very small. The workflow is streamlined to the essentials.

  - Uses Java sockets for communication.

  - Uses WebSocket for monitoring.

  - Uses jsoup to download pages.

  - Uses xsoup to extract data.

- It's very fast. HikariCP keeps database access fast.

  I crawled all of damai's data with 10 threads on a 4 Mbps network in less than 30 seconds.

- It's very accurate. It rarely goes wrong when dealing with big data.

  I have another project that builds on this: HeatPoint. You can see the results are very distinct.

- It can automatically get data (news or blog pages only) from the websites you enter, without training.

- You can get the war and deploy it to your Tomcat server.

- You can download the jar and use it in your project.

```java
import java.util.ArrayList;
import java.util.List;

// Get data by XPath.
List<String> xpath = new ArrayList<String>();
xpath.add("//a/@href");

List<String> url = new ArrayList<String>();
url.add("https://www.github.com");

new Crawler(url, xpath).run();
```

```java
// Automatically get the title and content.
List<String> url = new ArrayList<String>();
url.add("https://www.github.com");

new Crawler(url, "http.+", 1000).run();
```

This project depends on several open source projects:

jsoup, xsoup, boilerpipe, HikariCP

Create a new crawler from a list of URLs and a list of XPath expressions.

Create a new crawler from a list of URLs and a map of label (key) to XPath (value).

Create a new automatic crawler from a list of URLs. Use urlRegex to limit which URLs are crawled. It extracts the title and content automatically, without XPath.
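To illustrate how a urlRegex such as "http.+" might narrow the links that get crawled, here is a minimal sketch using only java.util.regex. `UrlFilterSketch` and `filter` are hypothetical names, not part of this project's API, and matching the whole URL (rather than a substring) is an assumption.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical helper for illustration; not part of the crawler's API.
final class UrlFilterSketch {
    // Keeps only the URLs that match urlRegex in full (assumption: full match).
    static List<String> filter(List<String> urls, String urlRegex) {
        Pattern pattern = Pattern.compile(urlRegex);
        List<String> kept = new ArrayList<>();
        for (String u : urls) {
            if (pattern.matcher(u).matches()) {
                kept.add(u);
            }
        }
        return kept;
    }
}
```

With "http.+", absolute http and https links pass, while values such as "mailto:..." or relative paths are dropped.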

Start running in the current thread. It waits until all nodes have finished.

Start running in another thread.

Run in several hours.

Run at a fixed time such as 12:00:00. Format: "HH:mm:ss".
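As a rough sketch of what scheduling at a fixed "HH:mm:ss" time involves, the code below computes how long to wait until the next occurrence of that time. `FixedTimeSketch` and `delayUntil` are hypothetical names; how the crawler actually schedules its run is not shown here, and rolling over to the next day once the time has passed is an assumption.

```java
import java.time.Duration;
import java.time.LocalDateTime;
import java.time.LocalTime;
import java.time.format.DateTimeFormatter;

// Hypothetical helper for illustration; not part of the crawler's API.
final class FixedTimeSketch {
    private static final DateTimeFormatter FORMAT = DateTimeFormatter.ofPattern("HH:mm:ss");

    // Returns the wait from `now` until the next occurrence of `time`.
    static Duration delayUntil(String time, LocalDateTime now) {
        LocalTime target = LocalTime.parse(time, FORMAT);
        LocalDateTime next = now.toLocalDate().atTime(target);
        if (!next.isAfter(now)) {
            next = next.plusDays(1); // already passed today, so wait for tomorrow
        }
        return Duration.between(now, next);
    }
}
```

The resulting Duration could be handed to a `ScheduledExecutorService` as the initial delay.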

Add a node. Default: "127.0.0.1:6543". Format: "ip:port".
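A node address in this format can be split into host and port as sketched below. `NodeAddressSketch` is a hypothetical class for illustration only; tolerating spaces around the colon is an assumption based on how the format is written.

```java
// Hypothetical value class for illustration; not part of the crawler's API.
final class NodeAddressSketch {
    final String host;
    final int port;

    private NodeAddressSketch(String host, int port) {
        this.host = host;
        this.port = port;
    }

    // Parses "ip:port", ignoring whitespace around either part.
    static NodeAddressSketch parse(String address) {
        int sep = address.lastIndexOf(':');
        if (sep < 0) {
            throw new IllegalArgumentException("expected ip:port but got " + address);
        }
        String host = address.substring(0, sep).trim();
        int port = Integer.parseInt(address.substring(sep + 1).trim());
        if (host.isEmpty() || port < 1 || port > 65535) {
            throw new IllegalArgumentException("invalid node address: " + address);
        }
        return new NodeAddressSketch(host, port);
    }
}
```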

Select special URLs and process them with a special XPath.

Data persistence. Saves to your database (MySQL). If asList is false, only the first data item per XPath is saved.

JDBC configuration. Default: "127.0.0.1:3306/crawler", "root", "root". Format: "ip:port/databaseName".
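For reference, a location in this format maps onto a standard MySQL JDBC URL of the form jdbc:mysql://host:port/database. The sketch below shows that conversion; `JdbcUrlSketch` and `toJdbcUrl` are hypothetical names, and whether the project builds its URL exactly this way is an assumption.

```java
// Hypothetical helper for illustration; not part of the crawler's API.
final class JdbcUrlSketch {
    // Turns "ip:port/databaseName" into a MySQL JDBC URL.
    static String toJdbcUrl(String location) {
        // Drop whitespace so "ip : port /db" and "ip:port/db" are treated alike.
        String compact = location.replaceAll("\\s+", "");
        if (!compact.matches("[^:/]+:\\d+/[^/]+")) {
            throw new IllegalArgumentException("expected ip:port/databaseName but got " + location);
        }
        return "jdbc:mysql://" + compact;
    }
}
```

A URL like this, together with the user name and password, is what a HikariCP `HikariConfig` takes through `setJdbcUrl`, `setUsername`, and `setPassword`.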

Use breadth-first crawling. Default: "//a/@href", 5000.
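Breadth-first crawling visits pages level by level: every link found on the seed page is visited before any link found on those pages. The sketch below shows the idea on an in-memory link map that stands in for the hrefs an XPath like "//a/@href" would extract. `BreadthFirstSketch` is a hypothetical name, and treating the second default (5000) as a cap on visited pages is an assumption.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Queue;
import java.util.Set;

// Hypothetical helper for illustration; not part of the crawler's API.
final class BreadthFirstSketch {
    // Returns pages in visit order, starting at `seed` and stopping after `limit` pages.
    static List<String> crawl(String seed, Map<String, List<String>> links, int limit) {
        List<String> visited = new ArrayList<>();
        Set<String> seen = new HashSet<>();
        Queue<String> queue = new ArrayDeque<>();
        queue.add(seed);
        seen.add(seed);
        while (!queue.isEmpty() && visited.size() < limit) {
            String url = queue.remove();
            visited.add(url);
            // Enqueue each outgoing link once; `seen` prevents revisiting on cycles.
            for (String next : links.getOrDefault(url, Collections.emptyList())) {
                if (seen.add(next)) {
                    queue.add(next);
                }
            }
        }
        return visited;
    }
}
```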

The amount of data to send each time. A lower limit gives more accurate monitoring but is slower. Default: 30.
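The trade-off comes from batching: a smaller limit means more, smaller messages, so progress is reported more often at the cost of extra round trips. A minimal sketch of splitting results into batches follows; `BatchSketch` and `split` are hypothetical names, and the way the crawler actually transmits data between nodes is not shown.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper for illustration; not part of the crawler's API.
final class BatchSketch {
    // Splits `items` into consecutive batches of at most `limit` elements.
    static <T> List<List<T>> split(List<T> items, int limit) {
        if (limit <= 0) {
            throw new IllegalArgumentException("limit must be positive");
        }
        List<List<T>> batches = new ArrayList<>();
        for (int i = 0; i < items.size(); i += limit) {
            int end = Math.min(i + limit, items.size());
            batches.add(new ArrayList<>(items.subList(i, end)));
        }
        return batches;
    }
}
```

With the default of 30, a list of 70 results would go out as three batches of 30, 30, and 10.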

Change the crawler speed. The right value depends on your network bandwidth. Recommended: 2~3 per Mbps. Default: 10.
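Assuming the speed value is a thread count (the text does not name the unit, so this is a guess), the recommendation works out to a simple range. `ThreadCountSketch` is a hypothetical helper for illustration.

```java
// Hypothetical helper for illustration; not part of the crawler's API.
final class ThreadCountSketch {
    // Returns {min, max} for the recommended 2~3 per Mbps of bandwidth.
    static int[] recommendedRange(int mbps) {
        if (mbps <= 0) {
            throw new IllegalArgumentException("bandwidth must be positive");
        }
        return new int[] {2 * mbps, 3 * mbps};
    }
}
```

For a 4 Mbps connection this gives 8 to 12, so the default of 10 sits inside the range.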

Slow down the crawler to avoid connection timeout errors. Default: 0.

If you want to process the acquired data or save it your own way, implement Dispose and pass it as a parameter.

Add several exceptions at one time.

Add a unified header for every URL. Default: {"User-Agent", "Mozilla/4.0 (compatible; MSIE 5.0; Windows NT; DigExt)"}

Add a unified cookie for every URL.

If you use it without a limit, it will never finish.

Start the crawler server on the default port.

Default: 6543

Returns an org.jsoup.Connection for the next step.

Send an HTTP request without a response.

Get JSON from an HTTP request.

Extract data from a Document by XPath.

Ignore SSL certificates to avoid validation failures.

Same as Integer.parseInt(String s), but smarter.
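What "smarter" covers is not spelled out here. One plausible reading, shown purely as an assumption, is a lenient parse that pulls the first integer out of surrounding text and falls back to a default instead of throwing. `LenientIntSketch` and its `fallback` parameter are hypothetical.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper for illustration; the project's real behavior may differ.
final class LenientIntSketch {
    private static final Pattern FIRST_INT = Pattern.compile("-?\\d+");

    // Returns the first integer found in `s`, or `fallback` if there is none.
    static int parse(String s, int fallback) {
        if (s == null) {
            return fallback;
        }
        Matcher m = FIRST_INT.matcher(s);
        if (!m.find()) {
            return fallback;
        }
        try {
            return Integer.parseInt(m.group());
        } catch (NumberFormatException e) {
            return fallback; // digits found, but the value does not fit in an int
        }
    }
}
```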

Returns the similarity between two strings.

Returns the most similar string in the list. If the similarity is less than filter, returns 0.
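The similarity measure itself is not specified here. As a sketch under that caveat, the code below swaps in a common choice, Levenshtein edit distance normalized to the range 0 to 1, and picks the best candidate at or above a threshold. `SimilaritySketch` is a hypothetical name, and returning null when nothing passes the filter is this sketch's own choice, not the project's documented behavior.

```java
import java.util.List;

// Hypothetical helper for illustration; the project's real metric may differ.
final class SimilaritySketch {
    // Classic two-row Levenshtein edit distance.
    static int distance(String a, String b) {
        int[] prev = new int[b.length() + 1];
        int[] curr = new int[b.length() + 1];
        for (int j = 0; j <= b.length(); j++) {
            prev[j] = j;
        }
        for (int i = 1; i <= a.length(); i++) {
            curr[0] = i;
            for (int j = 1; j <= b.length(); j++) {
                int cost = a.charAt(i - 1) == b.charAt(j - 1) ? 0 : 1;
                curr[j] = Math.min(Math.min(curr[j - 1] + 1, prev[j] + 1), prev[j - 1] + cost);
            }
            int[] tmp = prev;
            prev = curr;
            curr = tmp;
        }
        return prev[b.length()];
    }

    // 1.0 for identical strings, 0.0 when every character differs.
    static double similarity(String a, String b) {
        int max = Math.max(a.length(), b.length());
        return max == 0 ? 1.0 : 1.0 - (double) distance(a, b) / max;
    }

    // Returns the candidate most similar to `target`, or null if none reaches `filter`.
    static String mostSimilar(String target, List<String> candidates, double filter) {
        String best = null;
        double bestScore = 0.0;
        for (String c : candidates) {
            double score = similarity(target, c);
            if (score >= filter && (best == null || score > bestScore)) {
                best = c;
                bestScore = score;
            }
        }
        return best;
    }
}
```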

| name | type | description |
|---|---|---|
| url | String | page URL |
| res | List<List<String>> | data in XPath order |
| resMap | Map<String, List<String>> | available if you add labels |
| link | List<String> | add links if you want |