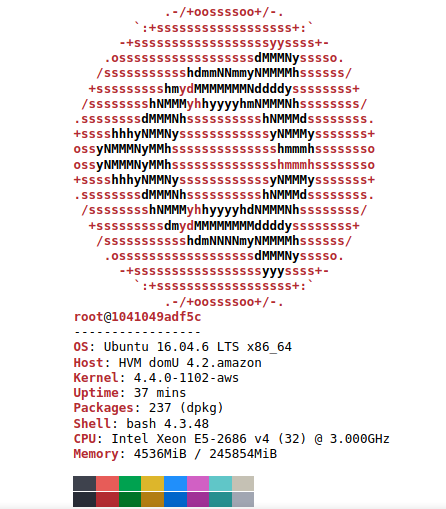

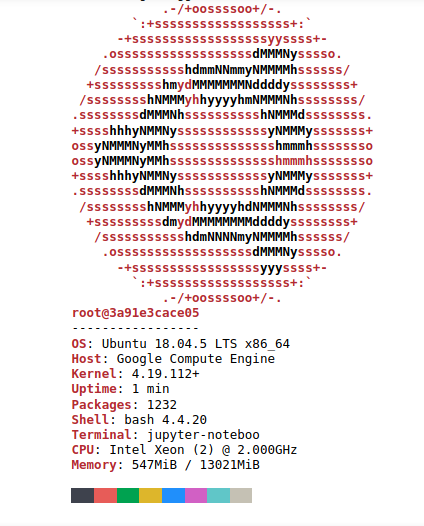

Hello, I have prepared two speed tests on the NVIDIA GPUs I have access to. The GPUs were accessed via Google Colab and AWS.

WARNING : Rather than judging these GPUs in isolation, I recommend evaluating them together with the rest of the hardware they run on. The same GPUs may give different results in different applications, in different tests, or at different times.

Graphics processing unit : The graphics processing unit, or GPU for short, is the device used to generate graphics in personal computers, workstations and game consoles. Modern GPUs are extremely efficient at rendering and displaying computer graphics, and their highly parallel structure makes them more efficient than CPUs for algorithms that process large blocks of data in parallel. A GPU can sit on a dedicated graphics card or be integrated into the motherboard.

1 . Speed test : I created four matrices with 10000 rows and 10000 columns on the GPU. In each iteration I multiply matrices a and b and assign the result to y, multiply matrices c and d and assign the result to z, and finally multiply y and z and assign the result to x. I repeated this operation 1000 times in total.

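```python
import time, torch

# Start time; note that the timer also covers the creation of the matrices
bas = time.time()

# Four 10000 x 10000 random matrices created directly on the GPU
a = torch.rand(10000, 10000, device=torch.device("cuda"))
b = torch.rand(10000, 10000, device=torch.device("cuda"))
c = torch.rand(10000, 10000, device=torch.device("cuda"))
d = torch.rand(10000, 10000, device=torch.device("cuda"))

for i in range(0,1000):
    y = a@b
    z = c@d
    x = y@z

# CUDA kernels are launched asynchronously; calling torch.cuda.synchronize()
# here would make sure all queued work is included in the measured time.
son = time.time()

print("1.test result (second) : " + str(son-bas))
```

2 . Speed test : With C++, I manually allocated space in GPU memory for two input matrices with 10000 rows and 10000 columns (plus one for the result), filled the inputs with values using loops on the host, and copied them to the GPU. Then I multiplied these matrices with each other.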

You can access the CUDA and header files written for Test 2 from these links: https://ahmetfurkandemir.s3.amazonaws.com/kernel.cu (kernel.cu), https://ahmetfurkandemir.s3.amazonaws.com/dev_array.h (dev_array.h), https://ahmetfurkandemir.s3.amazonaws.com/kernel.h (kernel.h), https://ahmetfurkandemir.s3.amazonaws.com/matrixmul.cu (matrixmul.cu).

```cpp
#include <iostream>
#include <vector>
#include <stdlib.h>
#include <time.h>
#include <cuda_runtime.h>
#include "kernel.h"
#include "kernel.cu"
#include "dev_array.h"
#include <math.h>
#include <stdio.h>

using namespace std;

int main()
{
    // Perform matrix multiplication C = A*B
    // where A, B and C are NxN matrices
    int N = 10000;
    int SIZE = N*N;

    // Allocate memory on the host
    vector<float> h_A(SIZE);
    vector<float> h_B(SIZE);
    vector<float> h_C(SIZE);

    // Initialize matrices on the host (flat, row-major storage)
    for (int i=0; i<N; i++){
        for (int j=0; j<N; j++){
            h_A[i*N+j] = sin(i);
            h_B[i*N+j] = cos(j);
        }
    }

    // Allocate memory on the device
    dev_array<float> d_A(SIZE);
    dev_array<float> d_B(SIZE);
    dev_array<float> d_C(SIZE);

    // Copy the input matrices from the host to the device
    d_A.set(&h_A[0], SIZE);
    d_B.set(&h_B[0], SIZE);

    // Run the multiplication on the GPU and wait for it to finish
    matrixMultiplication(d_A.getData(), d_B.getData(), d_C.getData(), N);
    cudaDeviceSynchronize();

    // Copy the result back to the host
    d_C.get(&h_C[0], SIZE);
    cudaDeviceSynchronize();

    printf("END");
    return 0;
}
```

There are four GPU setups from the Tesla series in total; let's examine them in order.

- As you can see, the first machine has 4 Tesla V100 GPUs (64 GB of video memory in total) and a 16-core Intel(R) Xeon(R) CPU.

- The second has a Tesla P4 GPU with 7.6 GB of video memory and a 1-core Intel(R) Xeon(R) CPU.

- The third has a Tesla P100 GPU with 16.2 GB of video memory and a 1-core Intel(R) Xeon(R) CPU.

- The fourth has a Tesla T4 GPU with 15 GB of video memory and a 1-core Intel(R) Xeon(R) CPU.
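To put the video memory figures above in context, the footprint of the matrices used in the two tests can be estimated by hand. The sketch below assumes 4-byte float32 elements (the default dtype of `torch.rand` and the `float` type in the C++ code); the helper name `matrix_bytes` is only illustrative, and the totals ignore any temporary buffers the libraries may allocate.

```python
# Rough memory footprint of the test matrices (float32 assumed: 4 bytes each).
N = 10_000
BYTES_PER_FLOAT32 = 4

def matrix_bytes(rows, cols, elem_bytes=BYTES_PER_FLOAT32):
    """Bytes needed to store one dense rows x cols matrix."""
    return rows * cols * elem_bytes

one_matrix = matrix_bytes(N, N)
test1_total = 7 * one_matrix  # a, b, c, d plus the results y, z, x
test2_total = 3 * one_matrix  # d_A, d_B, d_C on the device

print(f"one matrix : {one_matrix / 1e9:.1f} GB")
print(f"test 1     : {test1_total / 1e9:.1f} GB")
print(f"test 2     : {test2_total / 1e9:.1f} GB")
```

Under these assumptions one matrix takes about 0.4 GB, so the working set of either test should fit on every card listed, including the 7.6 GB Tesla P4.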

1 . Let's recall what our first test is. I created four matrices with 10000 rows and 10000 columns on the GPU. In each iteration I multiply matrices a and b and assign the result to y, multiply matrices c and d and assign the result to z, and finally multiply y and z and assign the result to x. I repeated this operation 1000 times in total.


1-) 4 X NVIDIA Tesla V100 GPU : 291.4778277873993 seconds, about 4.86 minutes.

2-) NVIDIA Tesla P4 GPU : 1071.427838563919 seconds, about 17.86 minutes.

3-) NVIDIA Tesla P100 GPU : 479.9311819076538 seconds, about 8.00 minutes.

4-) NVIDIA Tesla T4 GPU : 1293.739860534668 seconds, about 21.56 minutes.

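Our machine with 4 X NVIDIA Tesla V100 GPUs won this race. Note that the script places every matrix on the default CUDA device, so only one of the four V100s actually does the work.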
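The timings above can be turned into a rough throughput figure. A dense N x N matrix product takes about 2N^3 floating-point operations, and each loop iteration performs three of them, which gives about 6 x 10^15 operations for the whole test. The sketch below applies that to the reported times; the names are illustrative, and because the timer also covers matrix creation and does not synchronize with the GPU, the figures should be read as approximate.

```python
N = 10_000
ITERATIONS = 1_000
MATMULS_PER_ITERATION = 3  # y = a@b, z = c@d, x = y@z

# About 2*N^3 floating-point operations (multiply + add) per N x N product.
total_flop = 2 * N**3 * MATMULS_PER_ITERATION * ITERATIONS

# Wall-clock seconds reported for Test 1.
seconds = {
    "4 X Tesla V100": 291.4778277873993,
    "Tesla P4": 1071.427838563919,
    "Tesla P100": 479.9311819076538,
    "Tesla T4": 1293.739860534668,
}

def effective_tflops(elapsed):
    """Effective throughput in TFLOP/s for the whole test."""
    return total_flop / elapsed / 1e12

fastest = min(seconds.values())
for name, elapsed in seconds.items():
    print(f"{name:15s} {effective_tflops(elapsed):5.1f} TFLOP/s  "
          f"{elapsed / fastest:4.2f}x the fastest time")
```

Comparing machines by time relative to the fastest one, as in the last column, makes the gaps easier to read than raw seconds.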

2 . Let's recall what our second test is. With C++, I manually allocated space in GPU memory for two input matrices with 10000 rows and 10000 columns (plus one for the result), filled the inputs with values using loops on the host, and copied them to the GPU. Then I multiplied these matrices with each other.
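The host code for Test 2 stores each N x N matrix in a flat array and addresses element (i, j) as `i*N+j`, that is, in row-major order. The linked kernel.cu is not reproduced in this post, so the following is only a CPU reference for what C = A*B means under that layout, written in Python with a tiny N. The function name `matmul_flat` is hypothetical, and the actual kernel may organize the work differently.

```python
import math

def matmul_flat(a, b, n):
    """Multiply two n x n matrices stored as flat row-major lists."""
    c = [0.0] * (n * n)
    for i in range(n):          # row of A and C
        for j in range(n):      # column of B and C
            acc = 0.0
            for k in range(n):
                acc += a[i * n + k] * b[k * n + j]
            c[i * n + j] = acc
    return c

# Same initialization pattern as the host code, but with a tiny N.
n = 3
a = [math.sin(i) for i in range(n) for j in range(n)]  # a[i*n+j] = sin(i)
b = [math.cos(j) for i in range(n) for j in range(n)]  # b[i*n+j] = cos(j)
c = matmul_flat(a, b, n)
```

With this initialization every row of A is constant and every column of B is constant, so each entry of C should equal N * sin(i) * cos(j) up to floating-point rounding, which makes a handy sanity check for a GPU result.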

Test 2-a: let's first see which machine compiles the CUDA file named matrixmul.cu fastest. The files are compiled with nvcc (the CUDA compiler).

```python
# compilation test
import time

bas = time.time()

# "!" runs a shell command from the notebook cell
!nvcc matrixmul.cu

son = time.time()

print("2.test-a result (second) : " + str(son-bas))
```

1-) 4 X NVIDIA Tesla V100 GPU : 1.413379192352295 seconds.

2-) NVIDIA Tesla P4 GPU : 2.9613592624664307 seconds.

3-) NVIDIA Tesla P100 GPU : 1.4539947509765625 seconds.

4-) NVIDIA Tesla T4 GPU : 1.6754465103149414 seconds.

The machine with 4 X NVIDIA Tesla V100 GPUs won race 2-a by a small margin. Since nvcc runs on the host CPU, this result mainly reflects each machine's CPU rather than its GPU.

Test 2-b: which machine finishes running the compiled file first?

```python
# run the compiled file, test
import time

bas = time.time()

# a.out is the default output name produced by nvcc in Test 2-a
!./a.out

son = time.time()

print("2.test-b result (second) : " + str(son-bas))
```

1-) 4 X NVIDIA Tesla V100 GPU : 9.453376293182373 seconds.

2-) NVIDIA Tesla P4 GPU : 8.686630487442017 seconds.

3-) NVIDIA Tesla P100 GPU : 8.072553873062134 seconds.

4-) NVIDIA Tesla T4 GPU : 8.99604868888855 seconds.

The machine with the NVIDIA Tesla P100 GPU won race 2-b by a small margin.
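Because the margins in Tests 2-a and 2-b are small, a single run may not separate the machines reliably. One option is to repeat each measurement and compare medians. The sketch below is a generic helper and not part of the original tests; `time_command` is a hypothetical name, and the example command simply starts the Python interpreter so that the snippet runs anywhere.

```python
import statistics
import subprocess
import sys
import time

def time_command(cmd, repeats=5):
    """Run cmd several times and return the wall-clock durations in seconds."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        subprocess.run(cmd, check=True)  # raises if the command fails
        durations.append(time.perf_counter() - start)
    return durations

# Placeholder command; on the test machines this could be ["./a.out"]
# or ["nvcc", "matrixmul.cu"] instead.
runs = time_command([sys.executable, "-c", "pass"], repeats=3)
print(f"median: {statistics.median(runs):.3f} s, "
      f"spread: {max(runs) - min(runs):.3f} s")
```

If the spread between runs on one machine is as large as the gap between machines, the ranking for that test is probably not meaningful.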

According to my observations, for short and simple operations all of the GPUs finish in very short and similar times, regardless of video memory and CPU.

In long and demanding computations, however, more GPU memory and a good CPU let a machine stand out from its competitors.

Looking at the graphs and results, today's winner is the machine with 4 X NVIDIA Tesla V100 GPUs :).

WARNING : Rather than judging these GPUs in isolation, I recommend evaluating them together with the rest of the hardware they run on. The same GPUs may give different results in different applications, in different tests, or at different times.