
Future Features #2

Comments

|

Suggestion 1: Detect scene changes and don't interpolate across them (to avoid scene-change artifacts). Instead, copy the original frame into the slots where the interpolated frames would have been.

Suggestion 2: Detect and interpolate only duplicated frames. (Good for anime, where there are multiple duplicated frames: sometimes 1 dupe, sometimes 2 or 3, etc. It's super inconsistent throughout the video.) |
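To make the two suggestions concrete, here is a rough sketch of how consecutive frames could be sorted into "duplicate", "scene change", and "normal" transitions. It is only an illustration, not what this repo does: frames are assumed to be flattened grayscale pixel lists, and the names `mean_abs_diff`, `classify_transitions`, `dup_thresh`, and `scene_thresh` (and the threshold values) are made up for the example.

```python
from typing import List, Sequence

# Assumption: each frame is a flat sequence of grayscale pixel values (0-255).
Frame = Sequence[int]


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average per-pixel absolute difference between two same-sized frames."""
    if len(a) != len(b) or not a:
        raise ValueError("frames must be non-empty and the same size")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def classify_transitions(
    frames: Sequence[Frame],
    dup_thresh: float = 1.0,     # hypothetical: at or below this, treat as a duplicate
    scene_thresh: float = 60.0,  # hypothetical: at or above this, treat as a scene cut
) -> List[str]:
    """Label each pair of neighbouring frames.

    "duplicate"    -> the second frame repeats the first (Suggestion 2)
    "scene_change" -> do not interpolate; copy the original frame (Suggestion 1)
    "interpolate"  -> ordinary motion, safe to interpolate
    """
    labels = []
    for prev, cur in zip(frames, frames[1:]):
        diff = mean_abs_diff(prev, cur)
        if diff <= dup_thresh:
            labels.append("duplicate")
        elif diff >= scene_thresh:
            labels.append("scene_change")
        else:
            labels.append("interpolate")
    return labels


if __name__ == "__main__":
    demo = [[0, 0, 0, 0], [0, 0, 0, 0], [10, 10, 10, 10], [200, 200, 200, 200]]
    print(classify_transitions(demo))
```

Real footage would need thresholds tuned per source (compression noise alone can push a true duplicate above a tiny threshold), so treat the numbers as placeholders.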

|

@Brokensilence It is so COOOOOOL that you pointed out the multi-frame repetition that often occurs in anime; I think other users usually overlook it. However, I am thinking more about the whole pipeline we are talking about. For duplicate frames, And for the same reason, |


|

According to #3 , model-level DeOldify has been integrated. |

|

Hi, I'm really enjoying your work. |

|

@meguerreroa |

|

Actually, I'm a total newbie in this area, so I'm unsure which one is better or should be added. But from what I have seen, there are some articles and posts talking about ESRGAN. Sorry not to be more helpful. |

I do not know much about the subject, but with a little research on the models, I can highlight some points: ESRGAN:

MMSR:

TecoGAN:

SRGAN:

SRVAE:

I do not know whether you already knew what I said, but I hope I helped. Honestly, I have only tried TecoGAN on Colab, and the results did not seem good (more definition, but many errors). Maybe I did something wrong, but I wanted to share my experience. Sorry if there are mistakes in my English; I did it with a translator. Edit: Thanks for your work. |

|

@meguerreroa That is all right! "The greatest pleasure in life is doing what people say you cannot do", we can be better. 😃 @EtianAM In my opinion, the open-source field of super-resolution seems to have been very stagnant in the past two years. By looking at the information displayed on Image Super-Resolution |

Thanks for the answer. |

I know the question was not addressed to me, but I wanted to raise the problem of frame removal. As I said, I do not know much about this, but one solution I can think of is to run DAIN on a small part of the frames until it fills in the missing frames. I wonder whether that would cause a strange effect, on top of the problems that such changes to the names and numbering of the files imply. |
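One way to picture the numbering problem: if duplicates are dropped but the surviving frames keep their original indices, the holes in the numbering show exactly where frames are missing and how many are needed between each pair of neighbours. The sketch below is only an assumption about how that bookkeeping could look; `gaps_to_fill` is a made-up helper, not part of DAIN or this repo.

```python
from typing import List, Sequence, Tuple


def gaps_to_fill(kept: Sequence[int]) -> List[Tuple[int, int, int]]:
    """Find holes in the original frame numbering.

    `kept` holds the sorted original indices of frames that survived
    duplicate removal. Each result is (left_index, right_index, missing),
    meaning `missing` frames would have to be interpolated between the
    two kept frames. Keeping the original indices avoids renaming files.
    """
    gaps = []
    for left, right in zip(kept, kept[1:]):
        if right <= left:
            raise ValueError("kept indices must be strictly increasing")
        missing = right - left - 1
        if missing > 0:
            gaps.append((left, right, missing))
    return gaps


if __name__ == "__main__":
    # Frames 2, 3, 6 and 7 were dropped as duplicates in this made-up example.
    print(gaps_to_fill([0, 1, 4, 5, 8]))
```

Whether interpolating across those gaps looks natural is a separate question; this only covers the file-numbering side.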

|

@EtianAM @Brokensilence I moved #DAIN in Anime# into #4 . More discussions are welcome. |

|

Generating Digital Painting Lighting Effects via RGB-space Geometry: it really impressed me with what it can do!!! |

|

Suggestion: *I have tried doing this, but I'm not a Python expert, and I'm still struggling to change what's needed for this to work. This is good for large videos: we split them into parts first, so when using Colab, even if we hit the 12h session limit, we don't lose the entire progress. It is also good for when we split the video into different scenes using scene change detection beforehand. (I'm still trying to implement auto scene detection in the repo; for now I'm using "PySceneDetect" on my local computer to split the video into the different scenes first.) |
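The "don't lose everything at the session limit" idea can be sketched roughly like this: cut the job into frame ranges, drop a marker file when a range finishes, and on restart skip anything that already has a marker. All names here (`plan_chunks`, `marker_path`, `mark_done`, `pending_chunks`, the `.done` file naming) are hypothetical and only show the bookkeeping; the actual processing step is left out.

```python
from pathlib import Path
from typing import List, Tuple

Chunk = Tuple[int, int]  # (start_frame, end_frame), end exclusive


def plan_chunks(total_frames: int, chunk_size: int) -> List[Chunk]:
    """Split [0, total_frames) into consecutive ranges of at most chunk_size."""
    if total_frames < 0 or chunk_size <= 0:
        raise ValueError("total_frames must be >= 0 and chunk_size > 0")
    return [
        (start, min(start + chunk_size, total_frames))
        for start in range(0, total_frames, chunk_size)
    ]


def marker_path(out_dir: Path, chunk: Chunk) -> Path:
    """Hypothetical naming scheme for the 'this chunk is finished' marker."""
    return out_dir / f"chunk_{chunk[0]:08d}_{chunk[1]:08d}.done"


def mark_done(out_dir: Path, chunk: Chunk) -> None:
    """Record that a chunk finished, so a later session can skip it."""
    out_dir.mkdir(parents=True, exist_ok=True)
    marker_path(out_dir, chunk).touch()


def pending_chunks(chunks: List[Chunk], out_dir: Path) -> List[Chunk]:
    """Return the chunks that have no marker yet, in their original order."""
    return [c for c in chunks if not marker_path(out_dir, c).exists()]
```

On Colab the marker directory would have to live somewhere that survives the session (for example a mounted drive), otherwise the markers disappear together with the runtime. The same bookkeeping works if the chunks are scenes found beforehand instead of fixed-size ranges.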

|

@Brokensilence WIP. But note that if we add such a function, we will need stricter checks. |

|

I had been trying to use Video2x on Linux without success, but about 2 days ago the developer updated the Linux dependencies a bit and fixed a few problems with this OS. I should mention that to run it you need Python 3.8 and some other dependencies that need to be run as root, which could be a problem.

Are you still working on this? |

|

@LizandroVilDia Yep. You can find the |

|

AnimeGANv2 ? :) |

|

Amazing! Thanks for the info. WIP.

… |

@EtianAM Done, try it through :) |

|

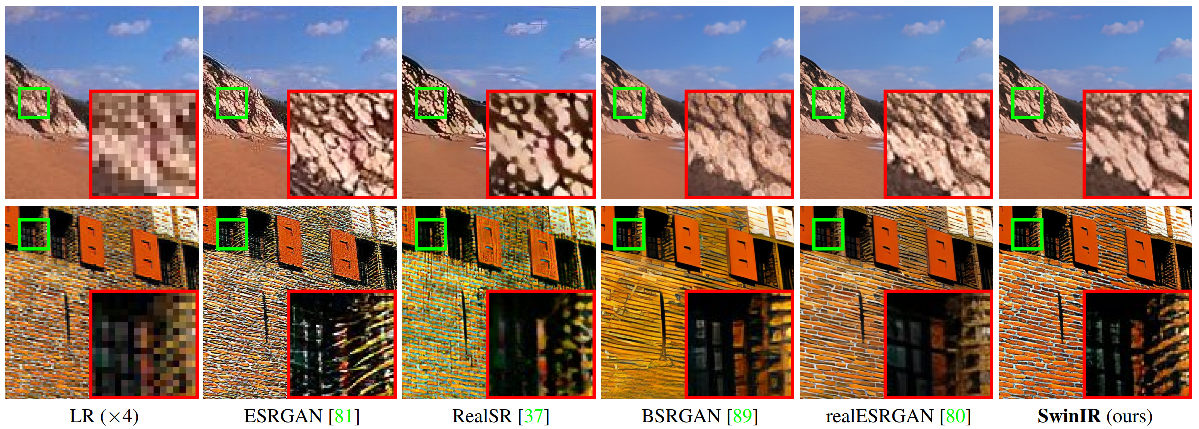

Dunno if it can be useful for you in any way, but check out SwinIR by @JingyunLiang too: |

|

@forart Thanks for sharing, I'll take a look. :) |

This issue will stay open to collect feature requests and to discuss the priority in which features get merged.

Feel free to share your thoughts; you are welcome to join in and contribute.
