Architecture similar to 'Playing Atari with Deep Reinforcement Learning' (2013) V Mnih, K Kavukcuoglu, D Silver et al.

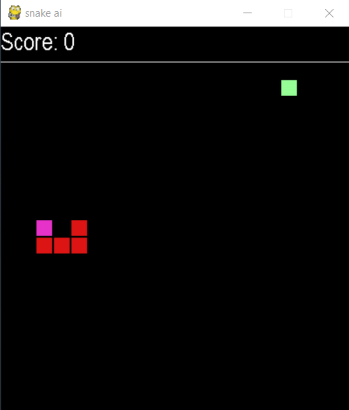

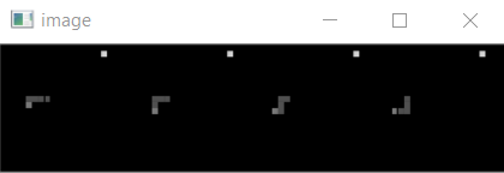

The game's pixels are used directly as network input. 400 x 400 RGB game screen greyscaled and downsampled to 84 x 84. 4 frames stacked and fed into a 3d convolution

Three convolutional layers, one fully connected, and batch norm/dropout to help against overfitting

- Conv [16 1x5x5 filters, stride 1x2x2, relu] - features in each layer

- Conv [32 2x3x3 filters, stride 2x2x2, relu] - features between layers

- Conv [64 1x3x3 filters, stride 1x2x2, relu] - conv pooling

- Batch Normalisation

- Dropout [0.2]

- Dense [128, relu]

- Dense [4, softmax]

- Long term memory training

- End of each game round, randomly select a mini batch of state transition memories (old_state, action, new_state, reward) to train the network

- Short term memory training

- Train the last state transition

See config.ini

Keras, CV2, Numpy, matplotlib, configparser