3. If we have a neural network with two layers, the first with 3 neurons and the second with 1 neuron, and we apply a linear function in the first layer and a sigmoid function in the second layer, we end up with a simple logistic regression algorithm. In other words, the neural network reduces to logistic regression.
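A minimal sketch of why this happens, assuming the "linear function" means the first layer outputs `W1 @ x + b1` with no nonlinearity. The number of input features (4), the random weights, and all variable names are hypothetical, chosen only for illustration. Two stacked linear maps are still one linear map, so the network gives the same output as a single sigmoid unit.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(0)

# Hypothetical sizes: 4 input features, 3 neurons in layer 1, 1 neuron in layer 2.
n_features = 4
W1 = rng.normal(size=(3, n_features))  # first layer weights (linear activation)
b1 = rng.normal(size=3)                # first layer biases
W2 = rng.normal(size=(1, 3))           # second layer weights (sigmoid activation)
b2 = rng.normal(size=1)                # second layer bias

def two_layer_network(x):
    hidden = W1 @ x + b1               # linear layer: no nonlinearity applied
    return sigmoid(W2 @ hidden + b2)   # sigmoid output layer

# Collapse both layers into one weight vector and one bias.
w = W2 @ W1        # shape (1, n_features)
b = W2 @ b1 + b2   # shape (1,)

def logistic_regression(x):
    return sigmoid(w @ x + b)

x = rng.normal(size=n_features)
network_output = two_layer_network(x)
regression_output = logistic_regression(x)
print(network_output, regression_output)  # the two values should match
```

The collapse only works because the first layer is linear. With a nonlinear activation in the first layer, `W2 @ W1` would no longer describe the network.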

The gradient of a function [src2] is the vector of its partial derivatives.

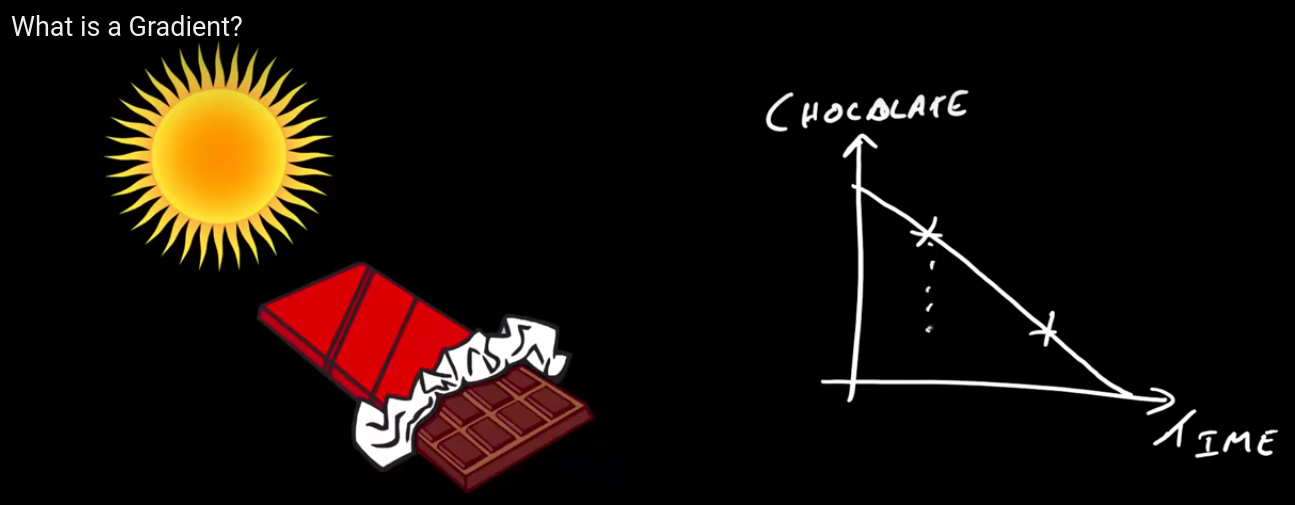
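To make the "vector of partial derivatives" idea concrete, here is a small sketch that approximates each partial derivative with a central difference. The function `f(x, y) = x**2 + 3*y`, the helper `numerical_gradient`, and the step size `h` are assumptions made for this example, not something taken from the sources.

```python
import numpy as np

def f(point):
    # Hypothetical example function: f(x, y) = x**2 + 3*y
    x, y = point
    return x**2 + 3*y

def numerical_gradient(func, point, h=1e-6):
    """Approximate the gradient of func at point, one partial derivative at a time."""
    point = np.asarray(point, dtype=float)
    grad = np.zeros_like(point)
    for i in range(point.size):
        step = np.zeros_like(point)
        step[i] = h  # nudge only the i-th variable
        grad[i] = (func(point + step) - func(point - step)) / (2 * h)
    return grad

grad_at_point = numerical_gradient(f, [2.0, 1.0])
print(grad_at_point)  # should be close to the analytic gradient (2x, 3) = (4, 3)
```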

Gradient [src3] is about change. The slope tells us how quickly something is changing, so by finding the gradient of the graph below we know how quickly it is changing.

Gradient [src4] is another word for "slope". The higher the gradient of a graph at a point, the steeper the line is at that point.

- A positive gradient means that the line slopes upwards.

- A negative gradient means that the line slopes downwards.
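The sign and steepness rules above can be checked with a short sketch. It assumes the parabola `f(x) = x**2` as an example curve and a hypothetical helper `slope` that estimates the gradient at a point with a central difference.

```python
def slope(func, x, h=1e-6):
    """Estimate the gradient (slope) of func at x with a central difference."""
    return (func(x + h) - func(x - h)) / (2 * h)

def parabola(x):
    # Example curve, chosen only for illustration.
    return x**2

slope_left = slope(parabola, -1.0)   # curve is going down here: negative gradient
slope_right = slope(parabola, 1.0)   # curve is going up here: positive gradient
slope_far = slope(parabola, 3.0)     # curve is steeper here: larger gradient

print(slope_left, slope_right, slope_far)
```

For this curve the exact derivative is `2 * x`, so the three estimates should land near -2, 2 and 6.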

Resources

- Gradient descent measures the local gradient of the error function with regard to the parameter vector θ, and moves in the direction of the descending gradient.

- When gradient descent reaches a point where the gradient is zero, you are at the bottom (a minimum). Keep in mind that there can be many minima, local and global.

- An important parameter in gradient descent is the size of the steps, determined by the learning rate hyperparameter.
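The points above can be sketched in a few lines. This is only an illustration under stated assumptions: the error function `(theta - 3)**2`, its hand-written gradient, the starting point, and the three learning rates are all hypothetical. This error function has a single minimum at `theta = 3`, so the sketch does not show the local versus global minimum problem, only the effect of the step size.

```python
def error(theta):
    # Hypothetical error function with its only minimum at theta = 3.
    return (theta - 3.0) ** 2

def error_gradient(theta):
    # Derivative of the error function above.
    return 2.0 * (theta - 3.0)

def gradient_descent(start, learning_rate, n_steps):
    theta = start
    for _ in range(n_steps):
        # Step against the gradient; the learning rate scales the step size.
        theta = theta - learning_rate * error_gradient(theta)
    return theta

theta_good = gradient_descent(start=0.0, learning_rate=0.1, n_steps=100)
theta_slow = gradient_descent(start=0.0, learning_rate=0.001, n_steps=100)
theta_diverged = gradient_descent(start=0.0, learning_rate=1.1, n_steps=100)

print(theta_good)      # ends very close to the minimum at 3
print(theta_slow)      # steps too small: still far from 3 after 100 steps
print(theta_diverged)  # steps too large: jumps over the minimum and moves away
```

As the gradient approaches zero the update `learning_rate * error_gradient(theta)` also approaches zero, which is why the search settles at the bottom when the learning rate is reasonable.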

Resources

- [IBM] https://www.ibm.com/cloud/learn/gradient-descent

- [src1] Hands-On Machine Learning with Scikit-Learn and TensorFlow

- [src2]
