-

Notifications

You must be signed in to change notification settings - Fork 19

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Provide mechanism for streaming logs from modules #92

Comments

|

an early attempt to do this https://github.com/bcoca/ansible/tree/update_json |

|

The logging socket/connection here sounds like a specific case of more generally supporting additional connection channels between controller/remote. ie, the current 2 channels (stdin/stdout) plus one or more additional channels for the streaming stdout and/or stderr and/or logging. Making bits that use connection plugins poll/select additional channels provided by the connection plugin seems doable, especially if the connection plugin can make those channels available as fd's. Probably not too difficult with something like paramiko, but I don't know of an obvious way to do it with the 'ssh' connection plugin. Perhaps using it's port or unix socket forwarding? |

|

Vaguely related pr: ansible/ansible#20930 - module logging ('log_records' in module return json) ansible/ansible#20930 is for supporting a way for modules to use stdlib logging and to return log records in the json results. It also includes an example callback that shows how to then send those log records to a regular python logging handler. But #20930 does not add any streaming support however, and streaming is the main point of this proposal. ie of the 3 'Problems' mention in #92 (comment) my #20930 pr only addresses 'Modules that use third-party libraries that have python stdlib logging based logging can't wire up those loggers in any way that causes output to return to ansible-playbook.' |

|

There is a relation with multiple stream support for Windows as Powershell has 5 output streams and preferably we may want to capture some of those streams on the master as well. (I couldn't find a reference to prior discussions about this) |

|

@emonty The links in the description are 404 now. Are these the correct current urls? https://github.com/openstack-infra/zuul/blob/master/zuul/ansible/library/command.py |

|

I refreshed my https://github.com/bcoca/ansible/tree/update_json branch, almost there, got basics working, just need to hook into callbacks and make sure its always unbuffered. |

|

@bcoca Is this going into Ansible 2.8? |

|

idk yet, i have other priorities right now, its was 'done' at the beginning of 2.7 but i hit a huge performance hit when forcing all python to be unbuffered, there have been other users that have sent patches to it, but i have had other priorities and no time to check/confirm that fixes the issue. |

|

In case somebody want to debug own modules with "online" logs, here's my workaround: https://stackoverflow.com/a/54448411/2795592 |

|

Is this likely to make it into 2.8? This would be awesome for checking |

|

Hey guys, Any news on that? |

|

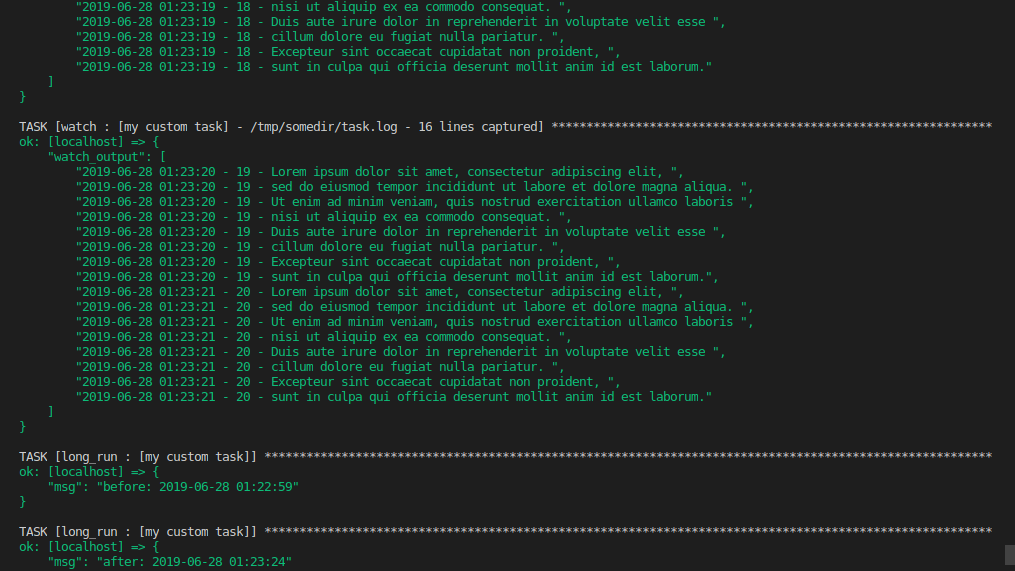

In the meantime, for anyone wanting a solution for this, I achieved a result that was satisfactory for me and created a repository with a minimal demonstration of what it does and the steps to reproduce it: https://github.com/lucasbasquerotto/ansible-live-output-demo Screenshot |

|

This obviously didn't make |

|

I'm quite interested to see this, as well, as I run a lot of long-running tasks, and I get complaints that my playbooks are hanging. |

|

Same, also I'd like this (for the same reasons + debugging long running commands). |

|

I fully second this too, I have been using Ansible to migrate existing shell-based infrastructure scripts and the first step is to run them via This can also be an asset when introducing Ansible to shell-based workflows and how easy it is to run the existing scripts with Ansible. Without streaming output, this is a far less appealing proposal... |

|

I have created a solution to start a simple socket server on controller node to receive realtime logs from managed node. It's not considering security issue and performance. I have used it for trigger and monitor long running scripts and it's working fine for the purpose. |

|

+1 |

|

+1 I'm using ansible to manage the backup of a database and a file system and ansible hides the progress that is logged to stdout. |

|

It's necessary. my custom module reports realtime status, but i can't see info at ansible stdout |

|

+1. I think it's very valuable for anyone trying to use ansible as a CI/CD. Sometimes one wants to see the logs during the build process. |

|

Hi, |

|

See my PR/branch linked above, I hit a problem with python buffering and have not had time to revisit it. |

|

I...guess I don't understand. And this may be a massive oversimplification but... When I "async"/poll 0 a command that prints output, there appears to already be a STDOUT captured with that command (I assume STDERR also). When I then use "async_status" the output from the async'd job prints upon job completion. That seems to me that there's a stdout/stderr for each of these executions and it's at some point accessible to whatever is running async/poll > 0 or async/poll = 0/async_status. In a real language, each "poll" iteration you'd simply flush stdout and debug/print the resulting buffer for status. The problem with ansible is that currently a command run async/poll > 0 or async_status sets up a loop that we can't interact with. While "async/poll > 0" might be an issue to deal with since it's not really it's own module, I don't see why "async_status" couldn't be setup with a "show_stdout: yes" and "show_stderr: yes" argument that simply turns on a debug or flush/debug of the buffers each time the loop iterates the check. I get the concept of the buffering problem, but ANY output, right now, would be better than what we have (which is absolutely nothing till a process completes). Even if the buffer display is behind the actual output it's still better than having absolutely nothing till the end. Users can control their own behavior by forcing their own commands (such as those run shell or command) to flush buffers periodically so that up to date status is acquired by Ansible. |

|

@rgadwagner stdout/stderr in the case of async is just a proxy for the normal module response, it is not 'stdout/stderr' from commands run by the module (if any, many modules do not run system commands) . Async in that case just proxies the 'final result' while having an intermediate poll asking 'are you done yet?' which is not even a 'progress' but a binary state (finshed/not finished). This could be part of a system to report 'current feedback' but modules would still need to be modified to actually provide that feedback. |

|

@bcoca what's the performance issue with the unbuffered python ? I was wondering if we couldn't keep it buffered but flush the buffer at regular interval instead if somehow that would help with performance (?). I believe that would be an acceptable tradeoff (get an update each N millisecond or seconds is much better than at the command completion) |

|

basically the issue was that starting python in an unbuffered state made everything take a lot longer, fact gathering was really painfully slow. Yes setting up a flush timer would be a workaround, but it needs to affect everything, other options I was exploring was limiting teh 'unbuffered' state to stdout instead of all python file handles. something like this: |

|

need it in 2023 |

|

i use screen -d -m for a detached screen, then i run the script, Ansible returns it is done, although it is still running, if i want to trace the progress, i will login to the server and attach to the screen and see the output, or tail -f the output log file. Here is my work arround. |

|

Tip: if you've forgotten to run something in tmux or screen, it's not too late. Check out: https://github.com/nelhage/reptyr |

|

@ehrenmann1977 cool but it's a bit of a shame to get to that point (6 tasks just for that)... Everyone proposes techniques here and there. Imagine if we need the final result of the (long) script before going to the next task (used other techniques again?). The best would be to have a default solution included in ansible and beneficial to all. A lot of hype around this feature🤞. +1 |

|

Just wanted to chime in with a use case for this: I have a fido2 device that I want to automate doing cryptenroll. However, it asks for user presence at some specific moments otherwise the command times out. Streaming these logs would be great for this automation. +1 |

|

This feature can greatly improve developer experience when ansible used as universal build tool (like make). |

Agree |

|

+1 |

1 similar comment

|

+1 |

|

Any updates on this? |

|

+1 |

2 similar comments

|

+1 |

|

+1 |

This comment was marked as off-topic.

This comment was marked as off-topic.

This comment was marked as off-topic.

This comment was marked as off-topic.

Rather than wasting everyone's time with +1, if someone really wants it implemented, they can create a PR with this feature or push RedHat via support if they're paid customers. It's open source. |

|

I no longer use Ansible. Rather, I use pyinfra which has this feature built-in and has nicer syntax. No need to mess with YAML DSL anymore |

This comment was marked as off-topic.

This comment was marked as off-topic.

|

Any updates here? Still open in Aug. 2024. |

Proposal: Provide mechanism for streaming logs from modules

Author: Monty Taylor <@emonty> IRC: mordred

Date: 2018/01/04

Motivation

Long running tasks can be a black hole of despair for a user, and waiting until one is complete to be able to see what happened can often be frustrating.

We have a hacked-in version of output streaming for command/shell modules in our ansible-playbook invocations in Zuul v3:

https://github.com/openstack-infra/zuul/blob/feature/zuulv3/zuul/ansible/library/command.py

https://github.com/openstack-infra/zuul/blob/feature/zuulv3/zuul/ansible/callback/zuul_stream.py#L118-L167

but it would be better if such a facility was available to all ansible users, and for a facility to exist for module authors to be able to do something similar.

Assuming we can agree on an overall approach, we can implement the majority of this as an update to the existing zuul streaming code and then forklift it over when it's working well. (most of the code is in standalone classes with minimal amounts of updates to the guts of existing Ansible objects)

Problems

What problems exist that this proposal will solve?

Solution proposal

tl;dr - Emit structured log messages over a socket from AnsibleModule back to a port on the calling machine that is optionally forwarded to the remote machine over the Connection. When the messages are received on the calling machine, fire a Callback method to handle them.

There is a 2x2 supportability matrix:

Detecting whether the local port is reachable from the remote machine without forwarding is not consistently and efficiently possible, so defaulting realtime_streaming to off is the safest thing. As a follow-on it could be possible to automatically enable streaming for Connection plugins that support it. Thus having the default for realtime_streaming be None rather than False so we can have None mean "on, defaulting to forwarding the port if the connection supports it, otherwise off" and False being explicit disable and True being explicit enable.

In the Task executor, pass _play_context._log_streaming_port and _play_context._log_streaming_host to the remote module invocation via _ansible private variables

In AnsibleModule, if _ansible_remote_stream_host and port are set, instantiate a StreamingLogHandler. If the are not set, instantiate a NullHandler.

In AnsibleModule, add methods to connect log stream to existing library logging

In AnsibleModule, add a ```stream_message`` method (or something with a better name) to allow for sending a single message back over the wire easily from module code.

in modules/command.py, watch stderr/stdout as it happens and call self.stream_message for each line. (Perhaps want to have C.REALTIME_STREAMING cause stdout and stderr to be combined - since it'll ultimately only be one stream - but leave the current split behavior for non-streaming cases so that people have the differentiation.)

Write StreamingLogHandler class either as a subclass of logging.handlers.SocketHandler or from scratch that sends each message and its data as JSON. subclassing would be easiest, but since we want to support richer structured data from twiggy as well, we need to assess whether the existing SockerHandler is robust enough and gets all of the data to the other side.

Add a Callback method that can be called for each log message received

Write LogStreamListener class to listen on the port for log messages, unpack them and call the Callback method with the expanded data.

Testing

Oh dear god yes. There will definitely need to be testing.

The text was updated successfully, but these errors were encountered: