
List of Project Ideas for GSoC 2017

If you haven't read it already, please start with our How to Apply guide.

Below is a list of high impact projects that we think are of appropriate scope and complexity for the program.

There should be something for anyone fluent in C++ and interested in writing clean, performant code in a modern and well-maintained codebase.

Projects are first sorted by application:

- appleseed: the core rendering library

- appleseed.studio: a graphical application to build, edit, render and debug scenes

- appleseed-max: a native plugin for Autodesk 3ds Max

- New standalone tools

Then, for each application, projects are sorted by ascending difficulty. Medium and hard projects are not necessarily harder in a scientific sense (though they may be); they may simply require more refactoring or more effort to integrate the feature into the software.

Similarly, easy difficulty doesn't necessarily mean the project will be a walk in the park. As in all software projects there may be unexpected difficulties that you will need to identify and overcome with your mentor.

Several projects are only starting points for bigger adventures. For those, we give an overview of possible avenues to expand on them after the summer.

appleseed projects:

- Project 1: Resumable renders

- Project 2: IES light profiles

- Project 3: Single-file, zip-based project archives

- Project 4: Volume rendering

- Project 5: Adaptive image plane sampling

- Project 6: Procedural assemblies

- Project 7: Improved many-light sampling

- Project 8: Switch to Embree

- Project 9: Implicit shapes

- Project 10: Unbiased Photon Gathering

- Project 11: Spectral rendering using tristimulus colours

appleseed.studio projects:

- Project 12: Material library and browser

- Project 13: Render history and render comparisons

- Project 14: Python scripting

- Project 15: OpenColorIO support

appleseed-max projects:

New standalone tools:

(Renders and photographs below used with permission of the authors.)

Project 1: Resumable renders

- Required skills: C++

- Challenges: None in particular

- Primary mentor: Franz

- Secondary mentor: Esteban

Rendering a single image can take a very long time, depending on image resolution, scene complexity and desired level of quality.

It would be convenient to allow stopping a render and restarting it later. This would, for instance, enable the following typical workflow:

- Render for a few seconds, or a few minutes, then interrupt the render.

- Work with the low-quality render as if it were high quality: apply tone mapping or other forms of post-processing, preview the entire animation, etc.

- When time allows, resume rendering where it left off to get a smoother result.

Tasks:

- Determine what needs to be persisted to disk to allow resuming an interrupted render.

- Define a portable file format for interrupted renders. Can we reuse an existing format? For instance, could we store resume information as metadata in an OpenEXR (*.exr) file?

- When rendering is stopped, write an "interrupted render file".

- Allow appleseed.cli (the command line client) to start rendering from a given "interrupted render file".
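As a starting point for the first task, here is a minimal sketch of the kind of state an "interrupted render file" might need besides the partially converged image. It assumes a hypothetical `ResumeInfo` record (all field and key names are illustrative, not appleseed's) flattened into string key/value pairs, which is the shape of data that could be stored as string attributes in an OpenEXR header. It also shows how a stored running mean could be merged with new samples, and how a project hash could detect scene changes.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>

// Hypothetical resume state; field names are illustrative only.
struct ResumeInfo
{
    std::string   project_path;      // project the render belongs to
    std::uint64_t project_hash;      // used to detect scene changes since the interruption
    std::uint32_t completed_passes;  // number of fully accumulated passes
    std::uint32_t samples_per_pass;  // samples per pixel added by each pass
};

// Flatten to string key/value pairs, e.g. for storage as image metadata.
std::map<std::string, std::string> to_metadata(const ResumeInfo& info)
{
    return {
        { "resume:project_path",     info.project_path },
        { "resume:project_hash",     std::to_string(info.project_hash) },
        { "resume:completed_passes", std::to_string(info.completed_passes) },
        { "resume:samples_per_pass", std::to_string(info.samples_per_pass) }
    };
}

// Rebuild the resume state; returns false if any key is missing.
bool from_metadata(const std::map<std::string, std::string>& md, ResumeInfo& info)
{
    const char* keys[] = {
        "resume:project_path", "resume:project_hash",
        "resume:completed_passes", "resume:samples_per_pass" };
    for (const char* k : keys)
        if (md.find(k) == md.end())
            return false;

    info.project_path = md.at("resume:project_path");
    info.project_hash = std::stoull(md.at("resume:project_hash"));
    info.completed_passes = static_cast<std::uint32_t>(std::stoul(md.at("resume:completed_passes")));
    info.samples_per_pass = static_cast<std::uint32_t>(std::stoul(md.at("resume:samples_per_pass")));
    return true;
}

// A render can only be resumed if the scene is unchanged.
bool can_resume(const ResumeInfo& info, std::uint64_t current_project_hash)
{
    return info.project_hash == current_project_hash;
}

// Merge the mean of a new batch of samples into the stored running mean.
float merge_pixel(float stored_mean, std::uint32_t stored_samples,
                  float new_mean, std::uint32_t new_samples)
{
    assert(stored_samples + new_samples > 0);
    const float total = static_cast<float>(stored_samples + new_samples);
    return (stored_mean * stored_samples + new_mean * new_samples) / total;
}
```

Storing the per-pixel mean together with the total sample count (here `completed_passes * samples_per_pass`) is what makes resuming a simple weighted average rather than a re-render.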

Possible follow-ups:

- Expose the feature in appleseed.studio.

- List and present all available interrupted renders when opening a project.

- Identify when a render cannot be resumed, e.g., because the scene has changed in the meantime.

Project 2: IES light profiles

- Required skills: C++

- Challenges: None in particular

- Primary mentor: Esteban

- Secondary mentor: Franz

IES light profiles describe light distribution in luminaires. This page has more information on the topic. IES light profile specifications can be found on this page. Many IES profiles can be downloaded from this page.

There are no particular difficulties with this project. The parsing code can be a bit hairy but there are many open source implementations that can be leveraged or peeked at in case of ambiguity. The sampling code is probably the most interesting part but it shouldn't be difficult.

Tasks:

- Learn about IES profiles and understand the concepts involved.

- Determine what subset of the IES-NA specification needs to be supported.

- Investigate whether libraries exist to parse IES files.

- If no suitable library can be found, implement our own parsing code (IES profiles are text files with a mostly parsable structure).

- Add a new light type that implements sampling of IES profiles.

- Create a few test scenes demonstrating the results.
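Once the candela table of an IES file has been parsed, the light needs the intensity in arbitrary directions. The sketch below covers only the simplest case, assuming a rotationally symmetric luminaire (a single horizontal plane) and linear interpolation between tabulated vertical angles; `IESPlane` and `intensity()` are hypothetical names. Full support would interpolate bilinearly across horizontal planes as well.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Candela values for one horizontal plane of an IES profile, as read from
// the file's data block (rotationally symmetric luminaire assumed).
struct IESPlane
{
    std::vector<double> vertical_angles;  // degrees, strictly increasing
    std::vector<double> candelas;         // one value per vertical angle
};

// Returns the luminous intensity (cd) at vertical angle `theta` (degrees),
// linearly interpolating between tabulated angles and returning zero
// outside the tabulated range.
double intensity(const IESPlane& plane, double theta)
{
    const auto& a = plane.vertical_angles;
    const auto& c = plane.candelas;
    assert(a.size() == c.size() && a.size() >= 2);

    if (theta < a.front() || theta > a.back())
        return 0.0;

    // First tabulated angle strictly greater than theta; since
    // theta >= a.front(), this is never the first element.
    const auto it = std::upper_bound(a.begin(), a.end(), theta);
    if (it == a.end())
        return c.back();  // theta == a.back()

    const std::size_t i = static_cast<std::size_t>(it - a.begin());
    const double t = (theta - a[i - 1]) / (a[i] - a[i - 1]);
    return c[i - 1] + t * (c[i] - c[i - 1]);
}
```

The same lookup, tabulated over a fine grid of directions, could also serve as the basis for building a distribution to importance-sample the profile.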

Project 3: Single-file, zip-based project archives

- Required skills: C++

- Challenges: Some refactoring

- Primary mentor: Franz

- Secondary mentor: Esteban

Today, appleseed projects are made up of many individual files, some of them very small. This is convenient when editing the project, but not when sharing it with other users or transporting it across a network.

The idea of this project is to introduce a new format that packs an entire project into a single archive file, preferably a genuine ZIP file (in which case we suggest the .appleseedz file extension).

appleseed should be able to natively read this file format, without unpacking it first. Non-packed project files must continue to be supported.

If we decide to adopt a format other than ZIP we should also investigate whether it makes sense to provide standalone tools to pack and unpack projects.

Should we decide to go with the ZIP format, packed project files will initially be manually created using any standard ZIP archiver.

Tasks:

- Determine if we can efficiently read individual files from a ZIP archive without unpacking it.

- Determine if we can instruct OpenImageIO to read textures from an archive file.

- If the ZIP format is not suitable, design a simple (preferably LZ4-compressed) file format for packed projects.

- Refactor `.obj` and `.binarymesh` file readers to allow reading from a packed project.

- Refactor the `.appleseed` file reader to allow reading from a packed project.

- Refactor the image pipeline to allow reading textures from a packed project (with OpenImageIO).

- Implement reading packed project files (will automatically work in all appleseed tools, including appleseed.cli and appleseed.studio).
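Reading individual files from a ZIP archive without unpacking it relies on the archive's central directory, which is located through the "end of central directory" (EOCD) record at the end of the file. Below is a minimal sketch of that first step on an in-memory buffer, following the record layout from the PKWARE ZIP specification and assuming a non-ZIP64 archive; the function and struct names are our own.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

// Fields of the ZIP EOCD record needed to find the central directory.
struct EndOfCentralDirectory
{
    std::uint16_t entry_count;       // total number of entries
    std::uint32_t directory_size;    // size of the central directory in bytes
    std::uint32_t directory_offset;  // offset of the central directory from file start
};

// ZIP integers are little-endian.
static std::uint16_t read_u16(const std::vector<std::uint8_t>& b, std::size_t i)
{
    assert(i + 1 < b.size());
    return static_cast<std::uint16_t>(b[i] | (b[i + 1] << 8));
}

static std::uint32_t read_u32(const std::vector<std::uint8_t>& b, std::size_t i)
{
    assert(i + 3 < b.size());
    return static_cast<std::uint32_t>(b[i]) |
           (static_cast<std::uint32_t>(b[i + 1]) << 8) |
           (static_cast<std::uint32_t>(b[i + 2]) << 16) |
           (static_cast<std::uint32_t>(b[i + 3]) << 24);
}

// Scans backwards for the EOCD signature 0x06054b50. The record is at least
// 22 bytes long and may be followed by a comment of up to 65535 bytes.
std::optional<EndOfCentralDirectory> find_eocd(const std::vector<std::uint8_t>& bytes)
{
    constexpr std::size_t MinSize = 22;
    if (bytes.size() < MinSize)
        return std::nullopt;

    const std::size_t last = bytes.size() - MinSize;
    const std::size_t first = last > 65535 ? last - 65535 : 0;

    for (std::size_t i = last + 1; i-- > first; )
    {
        if (read_u32(bytes, i) != 0x06054b50)
            continue;
        // The comment length must account exactly for the remaining bytes.
        if (i + MinSize + read_u16(bytes, i + 20) != bytes.size())
            continue;
        return EndOfCentralDirectory{
            read_u16(bytes, i + 10),   // total number of central directory records
            read_u32(bytes, i + 12),   // central directory size
            read_u32(bytes, i + 16) }; // central directory offset
    }
    return std::nullopt;
}
```

From there, a reader would walk the central directory entries to build a name-to-offset index once, then seek directly to the local file header of any requested file; only files stored with the "stored" or "deflate" methods would need to be handled in practice.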

Possible follow-ups:

- Implement a standalone command line utility to pack/unpack projects.

- Expose packing/unpacking in appleseed.studio.

- Adapt `rendernode.py` and `rendermanager.py` scripts to work natively with packed project files.

Project 4: Volume rendering

- Required skills: C++

- Challenges: Understanding and updating the existing path tracing code

- Primary mentor: Esteban

- Secondary mentor: Franz

Volume rendering (or volumetric rendering) is one of the most requested features in appleseed. Currently, appleseed only renders the surface of objects, that is, how light bounces off objects, and treats the space between objects as a void. Volume rendering involves computing how light is absorbed and scattered by air, smoke or fog molecules, or by denser media such as milk or marble.

This is a vast topic. This project will only be scratching the surface of what volume rendering implies. Depending on the student, we may limit the project to simple homogeneous volumes and single scattering.

Tasks:

- Read about volume rendering, in particular what homogeneous volumes and single scattering are.

- Implement basic absorption and scattering formulas and validate them with a set of simple unit tests.

- Modify the path tracing code to implement basic ray marching.

- Compute attenuation during ray marching.

- Add single scattering.

- Add a new Volume entity type to appleseed and expose it in appleseed.studio.
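For a homogeneous medium, attenuation follows the Beer-Lambert law, $T(d) = e^{-\sigma_t d}$ with $\sigma_t = \sigma_a + \sigma_s$. The sketch below ray marches one segment and accumulates single scattering under strong simplifying assumptions: every point of the segment receives the same radiance from a single light (no attenuation toward the light), and the phase function is isotropic, $1/(4\pi)$. Under those assumptions the result has a closed form, $\frac{\sigma_s}{4\pi} L \frac{1 - e^{-\sigma_t d}}{\sigma_t}$, which makes a convenient unit test. Names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Homogeneous participating medium: coefficients are constant everywhere.
struct HomogeneousMedium
{
    double sigma_a;  // absorption coefficient (1/m)
    double sigma_s;  // scattering coefficient (1/m)
    double sigma_t() const { return sigma_a + sigma_s; }
};

// Beer-Lambert transmittance over a distance d.
double transmittance(const HomogeneousMedium& m, double d)
{
    return std::exp(-m.sigma_t() * d);
}

// Ray marches a segment of length `d` using `steps` midpoint samples and
// returns the single-scattered radiance reaching the segment's origin,
// assuming every point receives radiance `light_radiance` from one light
// and an isotropic phase function 1 / (4 pi).
double single_scattering(const HomogeneousMedium& m, double d, double light_radiance, int steps)
{
    assert(steps > 0 && d >= 0.0);
    const double pi = 3.14159265358979323846;
    const double phase = 1.0 / (4.0 * pi);
    const double dt = d / steps;

    double radiance = 0.0;
    for (int i = 0; i < steps; ++i)
    {
        const double t = (i + 0.5) * dt;        // midpoint of the i-th step
        const double tr = transmittance(m, t);  // attenuation back to the origin
        radiance += tr * m.sigma_s * phase * light_radiance * dt;
    }
    return radiance;
}
```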

Possible follow-ups:

- Implement multiple scattering.

- Implement (multiple?) importance sampling.

- Add support for OpenVDB, a volume description file format.

- Compare diffuse profile-based and volume-based subsurface scattering to check that the former methods are working correctly.

- Recreate the scene from the photograph above and render it with appleseed.

Project 5: Adaptive image plane sampling

- Required skills: C++, reading and understanding scientific papers

- Challenges: None in particular

- Primary mentor: Franz

- Secondary mentor: Esteban

(Illustration from Adaptive Sampling by Yining Karl Li.)

Like most renderers, appleseed has two types of "image plane samplers", i.e., two ways of allocating samples (the basic unit of work) to pixels:

- The uniform sampler allocates a fixed, equal number of samples to every pixel. This is simple and works well, but a lot of resources are wasted in "easy" areas that don't require many samples to get a smooth result, while "difficult" areas remain noisy.

- The adaptive sampler tries to allocate more samples to difficult pixels and fewer samples to easy pixels. While it may work acceptably with a bit of patience, it's hard to adjust, and it can lead to flickering in animations.

The idea of this project is to replace the existing adaptive sampler with a new one based on a more rigorous theory. For now, we have settled on a technique described in Adaptive Sampling by Yining Karl Li. However, the project should start with a quick survey of available techniques.
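To make the general idea concrete, here is a generic per-pixel stopping criterion that the survey could use as a baseline: keep a running mean and variance of each pixel's samples (Welford's online algorithm) and stop once the standard error of the mean, relative to the mean, falls below a threshold. This is not the technique from Yining Karl Li's write-up, and the names and thresholds are hypothetical. Purely per-pixel criteria like this one are also a typical source of the flickering mentioned above, which the chosen technique should address.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>

// Running mean and variance of a pixel's samples (Welford's algorithm).
struct PixelStats
{
    std::size_t count = 0;
    double mean = 0.0;
    double m2 = 0.0;  // sum of squared deviations from the mean

    void add(double value)
    {
        ++count;
        const double delta = value - mean;
        mean += delta / static_cast<double>(count);
        m2 += delta * (value - mean);
    }

    // Unbiased sample variance (zero until two samples exist).
    double variance() const { return count > 1 ? m2 / static_cast<double>(count - 1) : 0.0; }
};

// A pixel is done once the standard error of its mean, relative to the
// mean's magnitude, drops below `threshold`, or once it has received
// `max_samples`. `min_samples` guards against premature convergence.
bool pixel_converged(const PixelStats& s, double threshold,
                     std::size_t min_samples, std::size_t max_samples)
{
    assert(min_samples >= 2 && min_samples <= max_samples);
    if (s.count < min_samples)
        return false;
    if (s.count >= max_samples)
        return true;
    const double std_error = std::sqrt(s.variance() / static_cast<double>(s.count));
    return std_error <= threshold * std::max(std::abs(s.mean), 1e-3);
}
```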

Tasks:

- Survey available modern techniques to adaptively sample the image plane.

- Implement the chosen adaptive sampling algorithm.

- Make sure the new adaptive sampler works well in animation (absence of objectionable flickering).

- Determine which settings should be used as default.

- Expose adaptive sampler settings in appleseed.studio.

- Compare quality and render times with the uniform image sampler.

- Remove the old sampler, and add an automatic project migration step to maintain backward compatibility.

Project 6: Procedural assemblies

- Required skills: C++

- Challenges: Light refactoring

- Primary mentor: Esteban

- Secondary mentor: Franz

Procedural assemblies are plugins that can generate parts of scenes procedurally, as opposed to loading geometry from mesh and curve files. They are very powerful as they can easily generate repetitive or mathematical structures with code. They are commonly used in hair, fur, and procedural instancing tools to describe the scene geometry to renderers.

The idea of this project is to add support for procedural assembly plugins to appleseed. This would allow appleseed to support geometry generation tools commonly used by artists such as Yeti by peregrine*labs or Autodesk XGen.

Tasks:

- Design and implement an API for procedural geometry generation.

- Implement plugin loading and execution at scene setup time.

- Write some simple procedural geometry generators.

- Document the procedural geometry generation API in the wiki.
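One possible shape for the API, shown only to frame the design discussion: a plugin implements an abstract generator interface whose `expand()` method is called once at scene setup time, and exports a C-linkage factory function that the renderer would look up after loading the shared library (loading itself is not shown). `IProceduralGenerator`, `GeneratedMesh`, `create_generator` and the example generator are all hypothetical; a real integration would emit appleseed's own mesh objects.

```cpp
#include <cassert>
#include <cstddef>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical geometry types standing in for appleseed's mesh classes.
struct Vec3 { float x, y, z; };

struct GeneratedMesh
{
    std::vector<Vec3> vertices;
    std::vector<std::size_t> indices;  // three indices per triangle
};

// Hypothetical interface a procedural assembly plugin would implement.
class IProceduralGenerator
{
  public:
    virtual ~IProceduralGenerator() = default;
    // Called once at scene setup time; appends generated geometry to `out`.
    virtual void expand(std::vector<GeneratedMesh>& out) const = 0;
};

// Example generator: a grid of identical quads (two triangles each),
// the kind of repetitive structure procedural assemblies are good at.
class QuadGridGenerator : public IProceduralGenerator
{
  public:
    QuadGridGenerator(int nx, int ny, float spacing)
      : m_nx(nx), m_ny(ny), m_spacing(spacing)
    {
        assert(nx > 0 && ny > 0);
    }

    void expand(std::vector<GeneratedMesh>& out) const override
    {
        GeneratedMesh mesh;
        for (int j = 0; j < m_ny; ++j)
        {
            for (int i = 0; i < m_nx; ++i)
            {
                const float x = i * m_spacing, z = j * m_spacing;
                const std::size_t base = mesh.vertices.size();
                mesh.vertices.push_back({ x,        0.0f, z });
                mesh.vertices.push_back({ x + 0.5f, 0.0f, z });
                mesh.vertices.push_back({ x + 0.5f, 0.0f, z + 0.5f });
                mesh.vertices.push_back({ x,        0.0f, z + 0.5f });
                for (std::size_t k : { 0, 1, 2, 0, 2, 3 })
                    mesh.indices.push_back(base + k);
            }
        }
        out.push_back(std::move(mesh));
    }

  private:
    int m_nx, m_ny;
    float m_spacing;
};

// Hypothetical factory symbol a plugin would export; the caller takes ownership.
extern "C" IProceduralGenerator* create_generator()
{
    return new QuadGridGenerator(4, 4, 1.0f);
}
```

Using a C-linkage factory keeps the symbol name stable across compilers, which matters when plugins are built separately from appleseed.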

Possible follow-ups:

- Investigate ways to assign materials and modify other attributes of procedurally generated geometry.

Project 7: Improved many-light sampling

- Required skills: C++, reading and understanding scientific papers

- Challenges: Refactoring

- Primary mentor: Franz

- Secondary mentor: Esteban

(Illustration by Nathan Vegdahl — Source)

To maximize rendering efficiency, it is important for the renderer to choose the right light sources to sample, based on the point currently being shaded. Typically, the renderer will select a few lights at random and sample those. By repeating the process many times (over many pixel samples), an accurate estimate of the total incident light is obtained.

Today, appleseed has a pretty basic light sampling strategy:

- It always samples all "non-physical lights" (point lights, purely directional lights, spot lights).

- For area lights, it computes N "light samples". Computing a light sample involves:

  - Choosing a light-emitting triangle at random, based on its surface area (the larger the surface area, the more likely it is to be chosen).

  - Then choosing a point on the selected triangle, at random (uniformly).

While this simple algorithm works well for scenes with few lights, it leads to considerable noise when the number of lights is large.
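For reference, the area-light strategy described above can be sketched as follows: build a cumulative distribution over emitting triangles proportional to their area, pick a triangle with a binary search, then pick a uniform point on it with the standard square-root warp of barycentric coordinates. This is an illustrative reimplementation with hypothetical names, not appleseed's `LightSampler` code. Note that nothing in it depends on the shading point, which is exactly why it struggles with many lights.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { double x, y, z; };

static Vec3 sub(const Vec3& a, const Vec3& b) { return { a.x - b.x, a.y - b.y, a.z - b.z }; }
static Vec3 cross(const Vec3& a, const Vec3& b)
{
    return { a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x };
}
static double length(const Vec3& v) { return std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z); }

struct Triangle { Vec3 v0, v1, v2; };

// Selects emitting triangles with probability proportional to their area.
class AreaLightSampler
{
  public:
    explicit AreaLightSampler(const std::vector<Triangle>& tris) : m_tris(tris)
    {
        assert(!tris.empty());
        double total = 0.0;
        for (const Triangle& t : tris)
        {
            total += 0.5 * length(cross(sub(t.v1, t.v0), sub(t.v2, t.v0)));
            m_cdf.push_back(total);
        }
        for (double& c : m_cdf)
            c /= total;  // normalized cumulative distribution
    }

    // u0 picks the triangle, (u1, u2) pick a uniform point on it; all in [0, 1).
    Vec3 sample(double u0, double u1, double u2, std::size_t& tri_index) const
    {
        const auto it = std::upper_bound(m_cdf.begin(), m_cdf.end(), u0);
        tri_index = std::min(static_cast<std::size_t>(it - m_cdf.begin()), m_cdf.size() - 1);
        const Triangle& t = m_tris[tri_index];

        // Uniform barycentric coordinates via the square-root warp.
        const double s = std::sqrt(u1);
        const double b0 = 1.0 - s, b1 = u2 * s, b2 = 1.0 - b0 - b1;
        return { b0 * t.v0.x + b1 * t.v1.x + b2 * t.v2.x,
                 b0 * t.v0.y + b1 * t.v1.y + b2 * t.v2.y,
                 b0 * t.v0.z + b1 * t.v1.z + b2 * t.v2.z };
    }

  private:
    std::vector<Triangle> m_tris;
    std::vector<double> m_cdf;
};
```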

Nathan Vegdahl, author of the Psychopath experimental renderer, has come up with an interesting technique that he first described on appleseed-dev. He later posted additional details on ompf2.

The paper Stochastic Light Culling, recently published in the Journal of Computer Graphics Techniques, describes an algorithm similar to Nathan's. The two techniques are discussed and compared on appleseed-dev.

Here is some sample code from Psychopath implementing Nathan's technique: light_tree.hpp.

Tasks:

- Establish clearly the differences between Nathan's algorithm and Stochastic Light Culling. Possibly get in touch with Nathan.

- Determine the limitations of the technique.

- Determine if, and how, we need to augment/modify the `Light` and `EDF` interfaces.

- Build a simple light tree that spans all assembly instances, possibly limited to point lights and/or area lights, depending on what's easiest.

- Modify the `LightSampler` class in order to sample the light tree.
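The core of light tree sampling can be sketched as a stochastic descent: at each inner node, pick one child with probability proportional to an importance estimate relative to the shading point, multiply the probabilities along the way to get the selection pdf, and rescale the random number so it can be reused at the next level. The importance used here (power over squared distance) is a deliberately simple placeholder, not the heuristic from Nathan's write-up or from Stochastic Light Culling; the node layout and names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

struct Vec3 { double x, y, z; };

static double dist2(const Vec3& a, const Vec3& b)
{
    const double dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return dx * dx + dy * dy + dz * dz;
}

// Binary light tree node: leaves reference a light, inner nodes two children.
struct LightTreeNode
{
    Vec3   center;       // representative position of the lights below
    double power;        // total emitted power of the lights below
    int    left  = -1;   // child indices, -1 for leaves
    int    right = -1;
    int    light = -1;   // light index, leaves only
};

// Placeholder importance: power over squared distance, clamped so it stays
// finite when the shading point is very close to the node.
static double importance(const LightTreeNode& n, const Vec3& p)
{
    return n.power / std::max(dist2(n.center, p), 1e-4);
}

// Descends from the root (index 0) to a leaf, choosing one child per level
// with probability proportional to its importance and reusing `u` in [0, 1).
// Returns the chosen light index and writes its selection probability to `pdf`.
int sample_light_tree(const std::vector<LightTreeNode>& nodes, const Vec3& p, double u, double& pdf)
{
    assert(!nodes.empty());
    int index = 0;
    pdf = 1.0;
    while (nodes[index].light < 0)
    {
        const LightTreeNode& n = nodes[index];
        const double wl = importance(nodes[n.left], p);
        const double wr = importance(nodes[n.right], p);
        assert(wl + wr > 0.0);
        const double pl = wl / (wl + wr);
        if (u < pl) { index = n.left;  pdf *= pl;       u = u / pl; }
        else        { index = n.right; pdf *= 1.0 - pl; u = (u - pl) / (1.0 - pl); }
    }
    return nodes[index].light;
}
```

Dividing the light's contribution by the returned `pdf` keeps the estimator unbiased regardless of how good the importance heuristic is; a better heuristic only reduces noise.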

Possible follow-ups:

- Figure out if having one light tree per assembly makes sense, and if it does, how to implement it considering that we don't want to put light trees in assemblies as this would couple a rendering technique (light tree sampling) with a modeling technique (assemblies).

- Improve heuristics used when computing light tree node probabilities.

- Can we improve anything?

Project 8: Switch to Embree

- Required skills: C++

- Challenges: Heavy refactoring

- Primary mentor: Franz

- Secondary mentor: Esteban

(Imperial Crown of Austria, model by Martin Lubich, rendered with Intel Embree — Source)

The act of tracing rays through the scene is by far the most expensive activity performed by appleseed during rendering. Our ray tracing kernel was state-of-the-art around 2006; since then, faster algorithms (QBVH, for instance) and faster implementations based on vectorized instructions have appeared.

Intel has been developing a pure ray tracing library called Embree which offers state-of-the-art performance on Intel (and supposedly AMD) CPUs. For many years Embree was lacking essential features that prevented its adoption in appleseed, such as multi-step deformation motion blur. Today it seems that Embree offers everything we need and that a switch is finally possible.

Tasks:

- Make sure that Embree fully supports our needs (double precision ray tracing, motion blur). If it does not, determine if we can still use it for some assemblies while using the traditional intersector for others.

- Determine which parts of the ray tracing kernel (trace context, intersector, assembly trees, region trees, triangle trees) we can keep and which parts to discard.

- Add Embree to the build.

- Write a minimal integration of Embree into appleseed (no motion blur, no instancing).

- Compare performance and CPU profiles with the existing ray tracing kernel.

- Determine how to support motion blur or instancing with Embree.

Possible follow-ups:

- Add support for remaining features (motion blur or instancing, depending on what was implemented during the project).

- Run the whole test suite and investigate regressions, if any.

- Clean up the integration.

Project 9: Implicit shapes

- Required skills: C++

- Challenges: Heavy refactoring

- Primary mentor: Esteban

- Secondary mentor: Franz

Currently, appleseed only supports geometry defined by triangle meshes and curves. While this is very flexible, it's rather inefficient for simple shapes like spheres and cylinders.

The objective of this project would be to add support for ray tracing simple shapes directly without converting them into triangle meshes.

In addition, it would later allow these shapes to be used as light sources, with specialized light sampling algorithms whenever possible.

Tasks:

- Determine the best way to generalize scene intersection code to handle implicit shapes.

- Refactor existing code.

- Write intersection routines for simple shapes like spheres, disks and rectangles.

Possible follow-ups:

- Better sampling of lights defined by implicit shapes (rectangles, disks, spheres...)

- Rendering particle systems as a collection of implicit shapes.

Project 10: Unbiased Photon Gathering

- Required skills: C++, reading and understanding scientific papers

- Challenges: Getting it right

- Primary mentor: Franz

- Secondary mentor: Esteban

(Illustration from Unbiased Photon Gathering for Light Transport Simulation.)

Light transport is the central problem solved by a physically-based global illumination renderer. It involves finding ways to connect light sources to the camera with straight line segments (in the absence of scattering media such as smoke or fog), taking into account how light is reflected by objects. Efficient light transport is one of the most difficult problems in physically-based rendering.

The paper Unbiased Photon Gathering for Light Transport Simulation proposes to trace photons from light sources (as is done in the photon mapping algorithm) and use these photons to establish paths from lights to the camera in an unbiased manner. This is unlike traditional photon mapping, which performs photon density estimation.

Since appleseed already features an advanced photon tracer it should be possible to implement this algorithm, and play with it, with reasonable effort.

Tasks:

- Figure out what we need to store in photons in order to allow reconstruction of a light path.

- Add a new lighting engine, drawing inspiration from the SPPM lighting engine which also needs to trace both photons from lights, and paths from the camera.

- Trace camera paths. At the last vertex of each camera path, look up the nearest photon and establish a connection.

- Render simple scenes, such as the built-in Cornell Box, for which we know exactly what to expect, and carefully compare results of the new algorithm with ground truth images.
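For the first and third tasks, here is a hypothetical photon record and a brute-force nearest-photon lookup. The point of the sketch is that, unlike density estimation, reconstructing a full light path requires each photon to link back to the previous vertex of its light subpath (`parent` below) and to carry the probability of having generated that subpath. The exact set of fields the paper requires still has to be worked out; every name here is an assumption, and a real engine would use a spatial acceleration structure instead of a linear scan.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Vec3 { float x, y, z; };

// Hypothetical photon record with a link to the previous light-path vertex.
struct Photon
{
    Vec3          position;
    Vec3          incoming;           // direction the photon arrived from
    Vec3          flux;               // RGB power carried by the photon
    std::int32_t  parent = -1;        // previous photon on the same subpath, -1 at the light
    std::uint32_t light_index = 0;    // light the subpath started from
    float         path_pdf = 1.0f;    // pdf of generating the subpath so far
};

// Brute-force nearest photon within `max_radius` of p; returns -1 if none.
int nearest_photon(const std::vector<Photon>& photons, const Vec3& p, float max_radius)
{
    int best = -1;
    float best_d2 = max_radius * max_radius;
    for (std::size_t i = 0; i < photons.size(); ++i)
    {
        const float dx = photons[i].position.x - p.x;
        const float dy = photons[i].position.y - p.y;
        const float dz = photons[i].position.z - p.z;
        const float d2 = dx * dx + dy * dy + dz * dz;
        if (d2 <= best_d2)
        {
            best_d2 = d2;
            best = static_cast<int>(i);
        }
    }
    return best;
}

// Number of vertices on the light subpath ending at photon `index`,
// found by following parent links back toward the light.
int light_subpath_length(const std::vector<Photon>& photons, int index)
{
    int length = 0;
    for (int i = index; i >= 0; i = photons[i].parent)
    {
        assert(static_cast<std::size_t>(i) < photons.size());
        ++length;
    }
    return length;
}
```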

Project 11: Spectral rendering using tristimulus colours

- Required skills: C++, reading and understanding scientific papers

- Challenges: Refactoring

- Primary mentor: Esteban

- Secondary mentor: Franz

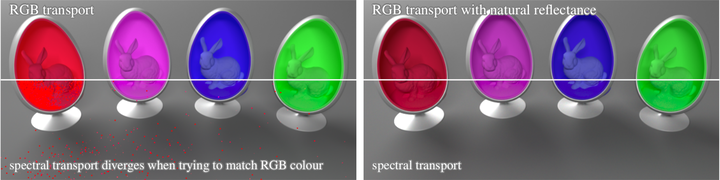

(Illustration from Physically Meaningful Rendering using Tristimulus Colours.)

One of the most distinctive features of appleseed is the ability to render in both RGB (3 bands) and spectral (31 bands) color spaces, and to mix both color representations in the same scene.

This feature requires the ability to convert colors from RGB to a spectral representation. The conversion is not well defined in a mathematical sense, since many different spectra map to the same RGB color, but there are algorithms that approximate it.

The goal of this project would be to implement an improved RGB to spectral color conversion method based on this paper: Physically Meaningful Rendering using Tristimulus Colours.

This would improve the correctness of our renders for scenes containing both RGB and spectral colors.

Tasks:

- Implement the improved RGB to spectral color conversion method.

- Add unit tests and test scenes.

- Render the whole test suite, identify differences due to the new color conversion code and update reference images as appropriate.

Project 12: Material library and browser

- Required skills: C++, Qt

- Challenges: None in particular

- Primary mentor: Franz

- Secondary mentor: Esteban

We need to allow appleseed.studio users to choose pre-made, high quality materials from a material library, as well as to save their own materials into a material library, instead of forcing them to recreate all materials in every scene. Moreover, even tiny scenes can have many materials. Artists can't rely on names to know which material is applied to an object. A material library with material previews would be a tremendous help to appleseed.studio users.

A prerequisite to this project (which may be taken care of by the mentor) is to enable import/export of all material types from/to files. This is already implemented for Disney materials (which are one particular type of material in appleseed); this support should be extended to all types of materials.

Ideally, the material library/browser would have at least the following features:

- Show a visual collection of materials, with previews of each material and some metadata (name, author, date and time of creation)

- Allow filtering materials based on metadata

- Allow dragging and dropping a material onto an object instance

- Highlight the material under the mouse cursor when clicking in the scene (material picking)

- Allow replacing an existing material from the project by a material from the library
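Filtering on metadata is the simplest piece of the browser's back end. The sketch below assumes a hypothetical `MaterialEntry` holding the metadata listed above, with creation dates stored as ISO 8601 strings so that chronological order coincides with plain string order. It filters by a case-insensitive substring on name or author, optionally restricted to recent materials; the Qt view would sit on top of something like this.

```cpp
#include <algorithm>
#include <cassert>
#include <cctype>
#include <string>
#include <vector>

// Hypothetical library entry holding the metadata listed above.
struct MaterialEntry
{
    std::string name;
    std::string author;
    std::string created;       // ISO 8601 date and time, e.g. "2017-03-01T10:00"
    std::string preview_path;  // rendered preview image
};

static std::string to_lower(std::string s)
{
    std::transform(s.begin(), s.end(), s.begin(),
                   [](unsigned char c) { return static_cast<char>(std::tolower(c)); });
    return s;
}

// Keeps entries whose name or author contains `text` (case-insensitive) and
// that were created on or after `created_after` (ISO 8601 strings compare
// chronologically as plain strings; an empty bound accepts everything).
std::vector<MaterialEntry> filter_materials(const std::vector<MaterialEntry>& library,
                                            const std::string& text,
                                            const std::string& created_after = "")
{
    const std::string needle = to_lower(text);
    std::vector<MaterialEntry> result;
    for (const MaterialEntry& m : library)
    {
        const bool text_match = to_lower(m.name).find(needle) != std::string::npos ||
                                to_lower(m.author).find(needle) != std::string::npos;
        if (text_match && m.created >= created_after)
            result.push_back(m);
    }
    return result;
}
```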

Possible follow-ups:

- Allow for adding "material library sources" (e.g., GitHub repositories) to a material library. This would allow people to publish and share their own collections of materials, in a decentralized manner.

- Integrate the material library from appleseed.studio into appleseed plugins for 3ds Max and Maya.

Project 13: Render history and render comparisons

- Required skills: C++, Qt

- Challenges: None in particular

- Primary mentor: Franz

- Secondary mentor: Esteban

Finding the right trade-off between render time and quality, lighting setup, or material parameters can involve a large number of "proof" renders. A tool for saving renders to a render history and comparing renders from that history would be very helpful to both users and developers.

When saved to the history, renders should be tagged with the date and time of the render, total render time, render settings, and possibly even the rendering log. In addition, the user should be able to attach comments to renders.

Tasks:

- Build the user interface for the render history.

- Allow for saving a render to the history.

- Allow simple comparisons between two renders from the history.

- Allow comparing a render from the history with the current render (even during rendering).

- Allow for saving the render history along with the project.
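A history entry mostly needs the tags listed above plus the pixels. The sketch below uses a hypothetical `RenderHistoryEntry` and shows two simple comparison primitives: a root-mean-square difference, which summarizes how far apart two renders are as a single number, and a per-pixel absolute difference image, which could back a "difference" display mode. Both assume the two renders have the same resolution.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical history entry with the tags listed above.
struct RenderHistoryEntry
{
    std::string timestamp;         // date and time of the render
    double render_time_seconds;
    std::string settings;          // serialized render settings
    std::string log;               // optional rendering log
    std::string comment;           // user comment
    int width, height;
    std::vector<float> rgb;        // width * height * 3 linear values
};

// Root-mean-square difference between two renders of the same resolution.
double rms_difference(const RenderHistoryEntry& a, const RenderHistoryEntry& b)
{
    assert(a.width == b.width && a.height == b.height);
    assert(a.rgb.size() == b.rgb.size() && !a.rgb.empty());
    double sum = 0.0;
    for (std::size_t i = 0; i < a.rgb.size(); ++i)
    {
        const double d = static_cast<double>(a.rgb[i]) - b.rgb[i];
        sum += d * d;
    }
    return std::sqrt(sum / static_cast<double>(a.rgb.size()));
}

// Per-channel absolute difference image, e.g. for a "difference" view.
std::vector<float> difference_image(const RenderHistoryEntry& a, const RenderHistoryEntry& b)
{
    assert(a.rgb.size() == b.rgb.size());
    std::vector<float> out(a.rgb.size());
    for (std::size_t i = 0; i < out.size(); ++i)
        out[i] = std::abs(a.rgb[i] - b.rgb[i]);
    return out;
}
```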

Possible follow-ups:

- Add more comparison modes (side-by-side, toggle, overlay, slider).

- Add a way to attach arbitrary comments to renders.

Project 14: Python scripting

- Required skills: C++, Python, Qt

- Challenges: Impacts the build system and the deployment story

- Primary mentor: Esteban

- Secondary mentor: Franz

Integrating a Python interpreter will allow users of appleseed.studio to use scripting to generate, inspect, and edit scenes, and to customize the application for their specific needs.

In addition it will allow future parts of appleseed.studio to be written in Python, speeding up the development process and making it easier to contribute.

Tasks:

- Integrate a Python interpreter in appleseed.studio.

- Import appleseed.python at application startup and write code to make the currently open scene in appleseed.studio accessible from Python.

- Add a basic script editor and console widget to appleseed.studio using Qt.

- Allow limited customization of appleseed.studio (such as custom menu items) in Python.

Project 15: OpenColorIO support

- Required skills: C++, Qt, OpenGL

- Challenges: Impacts the build system

- Primary mentor: Esteban

- Secondary mentor: Franz

OpenColorIO (OCIO) is an open source project from Sony Pictures Imageworks. Support for OCIO in appleseed.studio would allow the user to adjust gamma and exposure, and to transform the colors of rendered images using 3D LUTs, in real time.

More details can be found in this issue.

Tasks:

- Add OpenGL to the build.

- Write an OpenGL-based render widget.

- Introduce a second render buffer:

  - The first buffer holds the original, non-corrected render;

  - The second buffer holds the color-corrected render and is displayed on screen.

- Add OpenColorIO to the build.

- Apply color correction to the second buffer.
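The two-buffer structure can be illustrated without OpenColorIO itself. In the sketch below, a simple exposure (in stops) and display gamma stand in for the OCIO processor that would be built from a config in the real integration; `DisplaySettings` and `update_display_buffer` are hypothetical names, and the GPU/OpenGL path is not shown. The key property is that the original buffer is never modified, so the display buffer can be recomputed whenever a setting changes, without re-rendering.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Stand-in for OCIO color correction: exposure and display gamma only.
struct DisplaySettings
{
    float exposure_stops = 0.0f;
    float gamma = 2.2f;
};

// Recomputes the second (display) buffer from the first (original) buffer,
// which is left untouched.
void update_display_buffer(const std::vector<float>& original,
                           std::vector<float>& display,
                           const DisplaySettings& settings)
{
    assert(settings.gamma > 0.0f);
    const float scale = std::exp2(settings.exposure_stops);  // +1 stop doubles the value
    const float inv_gamma = 1.0f / settings.gamma;
    display.resize(original.size());
    for (std::size_t i = 0; i < original.size(); ++i)
    {
        const float v = std::min(std::max(original[i] * scale, 0.0f), 1.0f);
        display[i] = std::pow(v, inv_gamma);
    }
}
```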

Possible follow-ups:

- Add UI widgets to appleseed.studio to allow for adjusting gamma and exposure.

- Add UI widgets to appleseed.studio to allow for choosing the color profile to apply.

- Add UI widgets to appleseed.studio to allow for loading custom 3D LUTs.

- Required skills: C++, Win32

- Challenges: Using the 3ds Max API

- Primary mentor: Franz

- Secondary mentor: Esteban

Currently, the appleseed plugin for Autodesk 3ds Max translates native 3ds Max cameras into appleseed cameras when rendering begins or when the scene is exported. While this works and is enough for simple scenes, the native 3ds Max camera lacks many features that are required for realistic renderings, such as depth of field and custom bokeh shapes.

The idea of this project is to allow 3ds Max users to instantiate, and manipulate, an appleseed camera entity that exposes all functionalities of appleseed's native cameras.

The principal difficulty of this project will be to determine how to write a camera plugin for 3ds Max, as there appears to be little documentation on the topic. One alternative to creating a new camera plugin would be to simply inject appleseed settings into the 3ds Max camera's user interface, if that's possible. Another alternative would be to place appleseed camera settings in appleseed's render settings panel.

Tasks:

- Determine how to create a camera plugin in 3ds Max. If necessary, ask on CGTalk's 3dsMax SDK and MaxScript forum.

- Expose settings from native appleseed cameras.

Possible follow-ups:

- Add user interface widgets to adjust depth of field settings.

- Required skills: C++, Win32

- Challenges: Using the 3ds Max API

- Primary mentor: Franz

- Secondary mentor: Esteban

At the moment, the appleseed plugin for Autodesk 3ds Max only supports the "tiled" or "final" rendering mode of 3ds Max: you hit render, the render starts and appears tile after tile. Meanwhile, 3ds Max does not allow any user input apart from a way to stop the render.

With the vast amount of computational power in modern workstations, it is now possible to render a scene interactively, while moving the camera, manipulating objects and light sources or modifying material parameters.

In fact, appleseed and appleseed.studio both already have native support for interactive rendering.

The goal of this project is to enable interactive appleseed rendering inside 3ds Max via 3ds Max's ActiveShade functionality. Implementing interactive rendering in the plugin should be easier with 3ds Max 2017 thanks to new APIs.

Tasks:

- Investigate the ActiveShade API.

- Investigate the new API related to interactive rendering in 3ds Max 2017.

- Decide whether 3ds Max 2015 and 3ds Max 2016 can, or should, be supported.

- Implement a first version of interactive rendering limited to camera movements.

- Add support for live material and light adjustments.

- Add support for object movements and deformations.

- Required skills: C++, Qt

- Challenges: Building a new tool from scratch

- Primary mentor: Esteban

- Secondary mentor: Franz

The goal of this project is to implement a small standalone render viewer tool. The tool would communicate with the renderer using sockets and would display the image currently being rendered.

The viewer would be handy for appleseed users, and could also be integrated into future versions of our plugins for Maya and 3ds Max.

Tasks:

- Design a protocol that the renderer and the viewer would use to communicate.

- Write a tile callback class that sends appleseed image data to the viewer.

- Display the image as it is being rendered in the viewer.
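To give the protocol discussion something concrete to start from, here is a hypothetical wire format for one tile message: a fixed header of six 32-bit integers (a magic tag, tile position, tile size, channel count) followed by the tile's float pixels. The tile callback would call something like `encode_tile()` and write the bytes to the socket; the viewer would read a complete message and call `decode_tile()`. Everything here is an assumption, including the use of host byte order, which keeps the sketch short but should be fixed explicitly in a real protocol.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical tile message: header of six uint32 values
// (magic, x, y, width, height, channels), then float32 pixels.
struct TileMessage
{
    std::uint32_t x, y, width, height, channels;
    std::vector<float> pixels;  // width * height * channels values
};

constexpr std::uint32_t TileMagic = 0x41535449;  // arbitrary tag

static void put_u32(std::vector<std::uint8_t>& buf, std::uint32_t v)
{
    std::uint8_t bytes[4];
    std::memcpy(bytes, &v, 4);  // host byte order
    buf.insert(buf.end(), bytes, bytes + 4);
}

std::vector<std::uint8_t> encode_tile(const TileMessage& tile)
{
    assert(tile.pixels.size() == static_cast<std::size_t>(tile.width) * tile.height * tile.channels);
    std::vector<std::uint8_t> buf;
    for (std::uint32_t v : { TileMagic, tile.x, tile.y, tile.width, tile.height, tile.channels })
        put_u32(buf, v);
    if (!tile.pixels.empty())
    {
        const auto* data = reinterpret_cast<const std::uint8_t*>(tile.pixels.data());
        buf.insert(buf.end(), data, data + tile.pixels.size() * sizeof(float));
    }
    return buf;
}

// Returns false if the buffer is truncated, too long, or lacks the magic tag.
bool decode_tile(const std::vector<std::uint8_t>& buf, TileMessage& tile)
{
    constexpr std::size_t HeaderSize = 6 * 4;
    if (buf.size() < HeaderSize)
        return false;
    std::uint32_t header[6];
    std::memcpy(header, buf.data(), HeaderSize);
    if (header[0] != TileMagic)
        return false;
    tile.x = header[1];
    tile.y = header[2];
    tile.width = header[3];
    tile.height = header[4];
    tile.channels = header[5];
    const std::size_t count = static_cast<std::size_t>(tile.width) * tile.height * tile.channels;
    if (buf.size() != HeaderSize + count * sizeof(float))
        return false;
    tile.pixels.resize(count);
    if (count > 0)
        std::memcpy(tile.pixels.data(), buf.data() + HeaderSize, count * sizeof(float));
    return true;
}
```

Since TCP is a byte stream, the viewer would in practice read the fixed-size header first to learn how many pixel bytes follow before calling `decode_tile()`.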

Possible follow-ups:

- Add basic image pan and zoom controls.

- Implement simple tone mapping operators.

- Implement basic support for LUTs or OpenColorIO color management.

- Required skills: C++, reading and understanding complicated scientific papers

- Challenges: Building a new tool from scratch

- Primary mentor: Esteban

- Secondary mentor: Franz

(Illustration from Nonlinearly Weighted First-order Regression for Denoising Monte Carlo Renderings.)

appleseed, like other modern renderers, uses Monte Carlo methods to render images. Monte Carlo methods are based on repeated random sampling, which leads to visual noise, or grain. Getting rid of the noise requires a large number of samples and, consequently, can lead to extremely long render times for noise-free images (up to days for a single image).

The objective of this project is to write a denoiser tool based on image processing techniques (NL-Means or similar algorithms). This tool would allow for rendering images with a manageable number of samples in a reasonable amount of time. These images would then be "denoised" in order to obtain smooth images.
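As a baseline for the image-processing approach, here is a minimal, unoptimized sketch of basic NL-Means on a single-channel image: each output pixel is a weighted average of the pixels in a search window, where the weight decays with the mean squared difference between the patches around the two pixels. The feature-guided methods in the references below extend this with auxiliary buffers (normals, albedo, depth) and per-pixel variance estimates, which this sketch does not attempt. Parameter names are our own.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Single-channel image stored row by row.
struct Image
{
    int width, height;
    std::vector<float> data;

    // Clamped access, so windows and patches near the border stay valid.
    float at(int x, int y) const
    {
        x = std::min(std::max(x, 0), width - 1);
        y = std::min(std::max(y, 0), height - 1);
        return data[static_cast<std::size_t>(y) * width + x];
    }
};

// Basic NL-Means: average over a (2 * search + 1)^2 window (border pixels
// repeated by clamping), with weights exp(-d / h^2), where d is the mean
// squared difference between the (2 * patch + 1)^2 patches around both pixels.
Image nl_means(const Image& in, int search, int patch, float h)
{
    assert(h > 0.0f && static_cast<int>(in.data.size()) == in.width * in.height);
    Image out{ in.width, in.height, std::vector<float>(in.data.size()) };
    const float patch_area = static_cast<float>((2 * patch + 1) * (2 * patch + 1));

    for (int y = 0; y < in.height; ++y)
    for (int x = 0; x < in.width; ++x)
    {
        float sum = 0.0f, weight_sum = 0.0f;
        for (int qy = y - search; qy <= y + search; ++qy)
        for (int qx = x - search; qx <= x + search; ++qx)
        {
            float d = 0.0f;
            for (int py = -patch; py <= patch; ++py)
            for (int px = -patch; px <= patch; ++px)
            {
                const float diff = in.at(x + px, y + py) - in.at(qx + px, qy + py);
                d += diff * diff;
            }
            const float w = std::exp(-(d / patch_area) / (h * h));
            sum += w * in.at(qx, qy);
            weight_sum += w;  // never zero: the pixel itself has weight 1
        }
        out.data[static_cast<std::size_t>(y) * in.width + x] = sum / weight_sum;
    }
    return out;
}
```

The cost is proportional to the number of pixels times the window area times the patch area, which is why practical implementations rely on optimizations such as box-filtered patch distances.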

Possible references:

- Robust Denoising using Feature and Color Information

- Nonlinearly Weighted First-order Regression for Denoising Monte Carlo Renderings

Also, here is a good video introduction to denoising by Vladimir Koylazov from Chaos Group (makers of the V-Ray renderer): https://www.youtube.com/watch?v=UrOtyaf4Zx8

Lots of interesting paper references near the end of the talk!

Tasks:

- Modify appleseed to output auxiliary images needed by the denoising algorithm.

- Implement the denoiser as a shared library that can be reused.

- Implement a command line driver program that uses the shared library.

Possible follow-ups:

- Use motion vectors to extend denoising to the temporal domain.