-

Notifications

You must be signed in to change notification settings - Fork 222

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Build R-CNN with ResNet #27

Conversation

Codecov Report

@@ Coverage Diff @@

## master #27 +/- ##

==========================================

+ Coverage 48.44% 53.25% +4.81%

==========================================

Files 15 17 +2

Lines 545 569 +24

==========================================

+ Hits 264 303 +39

+ Misses 281 266 -15

Continue to review full report at Codecov.

|

|

@JihongJu Whoa! You’ve been busy! I’m excited to comment. Would you mind giving me a day or two? It’ll take some time to consider and respond. |

|

cc: @mcquin |

| else: | ||

| channel_axis = 1 | ||

|

|

||

| y = keras.layers.TimeDistributed( |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

In my opinion, this is the trickiest part of the implementation.

I foresee two problems with using TimeDistributed.

-

I suspect that in nearly every situation TimeDistributed is used to step across a temporal dimension. Unfortunately, we’re the rare exception. It’ll be a challenge to explain this in our documentation (especially if we expect users to re-implement the CNN that use for feature extraction to use TimeDistributed). I’d really like Keras to modify the name to better emphasize its utility.

-

TimeDistributed requires users to implement their feature extractor layer by layer instead of calling on a model instance directly. I’d really prefer something like:

x = keras.layers.Input((224, 224, 3))

y = keras_resnet.ResNet50(x)

y = keras.layers.TimeDistributed(y)If it worked like this, we could hide the y = keras.layers.TimeDistributed(y) statement inside the RCNN constructor. We could probably implement our own wrapper that iterates across a model’s layers and appends a TimeDistributed layer.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@0x00b1 I agree with you here. But I can hardly see how to implement such a wrapper.

For example, we have ResHead that takes feature x as input and returns some outputs y. We need to apply TimeDistributed to each of the layers in between.

Maybe you have good ideas how to do that?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Good question. 😆

A quick method might be iterating over model.layers then pop each layer and append TimeDistributed.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Hi, @JihongJu, I added a temporal module to keras-resnet. You should be able to use keras_resnet.block.temporal.basic, keras_resnet.block.temporal.bottleneck, and keras_resnet.block.temporal._shortcut.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@0x00b1 That would be great! I am a bit busy these days. I will get back to this asap.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@0x00b1 I sent a PR to keras-resnet to fix a bug for the temporal shortcut. And could you make a release (0.0.3?) afterwards?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yep :)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Released (0.0.4 because I made a dumb release mistake!)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@0x00b1 Great! Thanks!

keras_rcnn/layers/pooling.py

Outdated

| y2 = regions[:, 1] + regions[:, 3] | ||

| boxes = keras.backend.cast(boxes, keras.backend.floatx()) | ||

| boxes = boxes / self.stride | ||

| x1 = boxes[..., 0] |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

👍

| import keras_rcnn.layers | ||

| import keras_rcnn.heads | ||

|

|

||

|

|

||

| class RCNN(keras.models.Model): |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This looks great!

We should also consider adding a features argument that users would use to pass their feature extractor (e.g. ResNet50). What do you think?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@0x00b1 Actually, I think we should go for two arguments: features and heads (maybe rename these two to keep consistency).

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@JihongJu I like it! 👍

|

@JihongJu I merged the backend and pooling changes. |

|

Great. I will continue the work on weekends. |

|

@0x00b1 If you are okay with this design, I will continue with the Mask branch as in TODO with a new PR. |

|

@JihongJu Awesome! Merged! (I might do a little cleanup today or tomorrow.) |

My current implementation is:

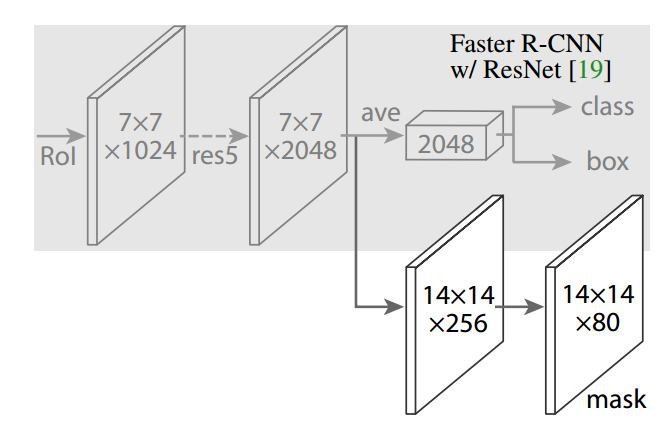

And the R-CNN heads from Mask R-CNN looks like

That becomes:

in

keras_rcnn.heads.ResHead.What do you think? @0x00b1

P.S. The API design should be discussed in #28