By Thanh-Toan Do*, Anh Nguyen*, Ian Reid, Darwin G. Caldwell, Nikos G. Tsagarakis (* equal contribution)


Caffe

- Install Caffe by following the Caffe installation instructions: http://caffe.berkeleyvision.org/installation.html

- Caffe must be built with support for Python layers.
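Caffe enables Python layers at build time through the line WITH_PYTHON_LAYER := 1 in Makefile.config, which is commented out in Caffe's Makefile.config.example. The minimal sketch below checks whether that line is active in $AffordanceNet_ROOT/caffe-affordance-net/Makefile.config. The helper names are hypothetical, and the check only inspects the config text, not the library that was actually built.

```python
import os
import re

# Hypothetical helpers, not part of the AffordanceNet repo.
# Matches an uncommented "WITH_PYTHON_LAYER := 1" line (surrounding spaces allowed).
_PYTHON_LAYER_RE = re.compile(r"^\s*WITH_PYTHON_LAYER\s*:=\s*1\s*$")


def python_layer_enabled(config_text):
    """Return True if Makefile.config text enables Caffe Python layers.

    A commented line such as '# WITH_PYTHON_LAYER := 1' does not count.
    """
    return any(_PYTHON_LAYER_RE.match(line) for line in config_text.splitlines())


def makefile_config_ok(root):
    """Check the bundled Caffe's Makefile.config; False if the file is missing."""
    path = os.path.join(root, "caffe-affordance-net", "Makefile.config")
    if not os.path.isfile(path):
        return False
    with open(path) as f:
        return python_layer_enabled(f.read())
```

Run makefile_config_ok with your $AffordanceNet_ROOT before make -j8 && make pycaffe; if it returns False, uncomment the line and rebuild.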


Hardware

- To train a full AffordanceNet, you'll need a GPU with ~11 GB of memory (e.g. Titan, K20, K40, Tesla, ...).

- To test a full AffordanceNet, you'll need a GPU with ~6 GB of memory.

- A smaller network will be available soon.
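To see which of your GPUs meet the approximate memory figures above, you can query total memory per GPU with nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits, which prints one value in MiB per GPU. The sketch below parses that output and filters GPUs by requirement. The 0.95 slack factor (cards often report slightly less than their nominal size) and all function names are assumptions, not part of AffordanceNet.

```python
import subprocess

# Approximate requirements quoted in this README, in GB.
TRAIN_GB = 11
TEST_GB = 6


def parse_total_mib(nvidia_smi_output):
    """Parse memory.total values (MiB), one per line, as printed by nvidia-smi."""
    return [int(line.strip()) for line in nvidia_smi_output.splitlines() if line.strip()]


def gpus_meeting(required_gb, totals_mib, slack=0.95):
    """Return indices of GPUs with at least ~required_gb of memory.

    'slack' is an assumed tolerance for cards reporting a bit less than nominal.
    """
    need_mib = required_gb * 1024 * slack
    return [i for i, mib in enumerate(totals_mib) if mib >= need_mib]


def query_gpus():
    """Run nvidia-smi and return the total memory of each GPU in MiB."""
    out = subprocess.check_output(
        ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
        universal_newlines=True,
    )
    return parse_total_mib(out)
```

For example, gpus_meeting(TRAIN_GB, query_gpus()) lists the GPU ids you could pass as [GPU_ID] when training.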


[Optional] For robotic demo


Clone the AffordanceNet repository into your $AffordanceNet_ROOT folder.

Build Caffe and pycaffe:

cd $AffordanceNet_ROOT/caffe-affordance-net

Now follow the Caffe installation instructions: http://caffe.berkeleyvision.org/installation.html. If you're experienced with Caffe and have all of the requirements installed and your Makefile.config in place, then simply run:

make -j8 && make pycaffe


Build the Cython modules:

cd $AffordanceNet_ROOT/lib

make


Download the pretrained weights. These weights were trained on the training set of the IIT-AFF dataset:

- Extract the downloaded file to $AffordanceNet_ROOT.

- Make sure the caffemodel file is located at:

$AffordanceNet_ROOT/pretrained/AffordanceNet_200K.caffemodel

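A quick way to confirm the extraction step is to check for the caffemodel at the path given above. The minimal sketch below assumes $AffordanceNet_ROOT is available as an environment variable (falling back to the current directory); the helper names are hypothetical.

```python
import os

# Relative location of the pretrained weights, as stated in this README.
WEIGHTS_RELPATH = os.path.join("pretrained", "AffordanceNet_200K.caffemodel")


def weights_path(root=None):
    """Expected caffemodel path under root (defaults to $AffordanceNet_ROOT, else '.')."""
    if root is None:
        root = os.environ.get("AffordanceNet_ROOT", ".")
    return os.path.join(root, WEIGHTS_RELPATH)


def weights_present(root=None):
    """True if a non-empty caffemodel file exists at the expected path."""
    path = weights_path(root)
    return os.path.isfile(path) and os.path.getsize(path) > 0
```

This only checks that the file is where the demos expect it; it does not validate the file's contents.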

After successfully completing installation, you'll be ready to run the demo.


Export pycaffe path:

export PYTHONPATH=$AffordanceNet_ROOT/caffe-affordance-net/python:$PYTHONPATH
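If you launch scripts from another Python program rather than a shell, the export above can be mirrored inside the process by prepending the bundled pycaffe directory to sys.path. The sketch below is a minimal illustration; the function name is hypothetical, and import caffe will only succeed afterwards if make pycaffe completed.

```python
import os
import sys


def add_pycaffe_to_path(root=None):
    """Prepend <root>/caffe-affordance-net/python to sys.path (current process only).

    Hypothetical helper mirroring the PYTHONPATH export; root defaults to
    $AffordanceNet_ROOT, else '.'.
    """
    if root is None:
        root = os.environ.get("AffordanceNet_ROOT", ".")
    pycaffe_dir = os.path.join(root, "caffe-affordance-net", "python")
    if pycaffe_dir not in sys.path:  # avoid duplicate entries on repeated calls
        sys.path.insert(0, pycaffe_dir)
    return pycaffe_dir
```

Putting the directory first means this pycaffe build takes precedence over any other Caffe installed on the system.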


Demo on static images:

cd $AffordanceNet_ROOT/tools

python demo_img.py

- You should see the detected objects and their affordances.


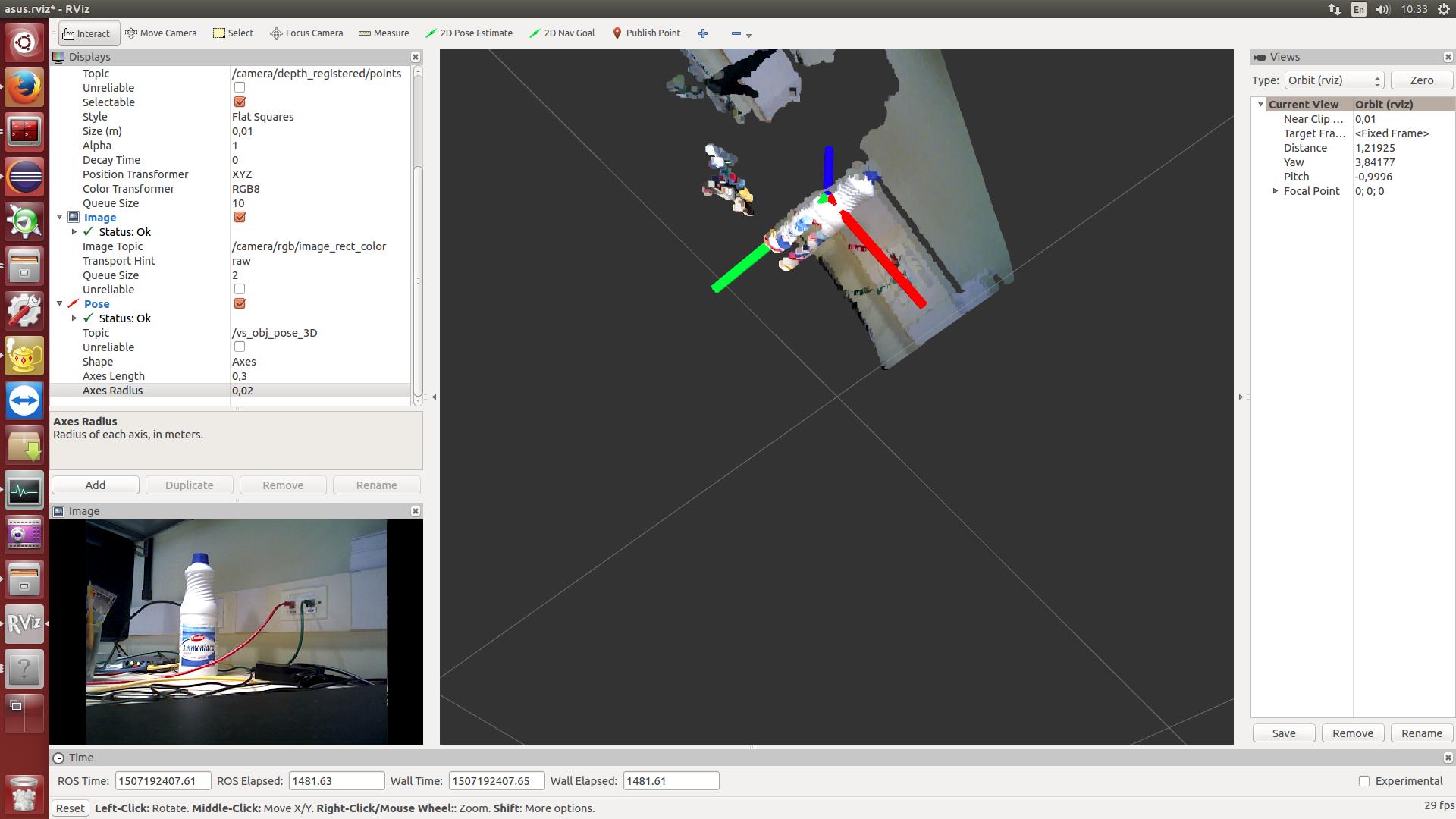

(Optional) Demo on depth camera (such as Asus Xtion):

- With AffordanceNet and a depth camera, you can easily select the object of interest and its affordances for robotic applications such as grasping and pouring.

- First, launch your depth camera with ROS, OpenNI, etc.

cd $AffordanceNet_ROOT/tools

python demo_asus.py

- You may want to change the object ID and/or affordance ID (lines 380 and 381 in demo_asus.py). Currently, we select the bottle and its grasp affordance.

- The 3D grasp pose can be visualized with rviz. You should see something like this:
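The README does not describe how demo_asus.py turns the selected affordance into a 3D grasp pose. One common approach, sketched below under stated assumptions, back-projects the pixels labeled with the chosen affordance into camera coordinates using the pinhole model (X = (u - cx) Z / fx, Y = (v - cy) Z / fy) and takes their centroid as a grasp position. The per-pixel label map aligned with the depth image, depth in meters, the intrinsics fx, fy, cx, cy, and all function names are hypothetical; this is not the demo's actual code.

```python
def backproject(u, v, z, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) with depth z (meters) to camera XYZ."""
    return ((u - cx) * z / fx, (v - cy) * z / fy, z)


def affordance_centroid_3d(label_map, depth, affordance_id, fx, fy, cx, cy):
    """Mean 3D point of pixels labeled affordance_id that have valid depth.

    Hypothetical sketch: label_map and depth are row-major lists of lists of the
    same size; depth is in meters, with 0 or NaN meaning "no measurement".
    Returns None if no valid pixel carries the requested affordance.
    """
    sum_x = sum_y = sum_z = 0.0
    count = 0
    for v, (label_row, depth_row) in enumerate(zip(label_map, depth)):
        for u, (label, z) in enumerate(zip(label_row, depth_row)):
            # "not (z > 0)" is True for 0, negatives and NaN, so those are skipped.
            if label != affordance_id or not (z > 0):
                continue
            x, y, z3 = backproject(u, v, z, fx, fy, cx, cy)
            sum_x += x
            sum_y += y
            sum_z += z3
            count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count, sum_z / count)
```

With intrinsics taken from your camera's calibration (for example, the camera_info topic published by the ROS camera driver), the returned point could be published as a pose and inspected in rviz. This sketch estimates only a position, not a grasp orientation.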


We train AffordanceNet on the IIT-AFF dataset.

- For training, the IIT-AFF dataset needs to be formatted like the Pascal-VOC dataset.

- For your convenience, we have already done this: just download this file and extract it into your $AffordanceNet_ROOT folder.

- The extracted folder should contain three sub-folders: $AffordanceNet_ROOT/data/cache, $AffordanceNet_ROOT/data/imagenet_models, and $AffordanceNet_ROOT/data/VOCdevkit2012.
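Before starting a long training run, it can help to confirm that the extracted archive produced the three sub-folders listed above. The minimal sketch below reports which ones are missing; the function name is hypothetical and it checks only that the directories exist, not their contents.

```python
import os

# Sub-folders of $AffordanceNet_ROOT/data that the extracted archive should provide.
REQUIRED_DIRS = ("cache", "imagenet_models", "VOCdevkit2012")


def missing_data_dirs(root):
    """Return the required data/ sub-folders that do not exist under root.

    Hypothetical helper; an empty list means the expected layout is in place.
    """
    data_dir = os.path.join(root, "data")
    return [d for d in REQUIRED_DIRS if not os.path.isdir(os.path.join(data_dir, d))]
```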


Train AffordanceNet:

cd $AffordanceNet_ROOT

./experiments/scripts/faster_rcnn_end2end.sh [GPU_ID] [NET] [DATASET] [--set ...]

- For example:

./experiments/scripts/faster_rcnn_end2end.sh 0 VGG16 pascal_voc

- We use the pascal_voc dataset alias even though we train on the IIT-AFF dataset.
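If you script training runs, the command can be assembled as an argument list and launched from $AffordanceNet_ROOT. The sketch below assumes the positional order GPU_ID NET DATASET used in the example above, and that extra config overrides follow --set as KEY VALUE pairs (the usage line shows [--set ...] but does not list valid keys). The function names and the set_cfgs parameter are hypothetical.

```python
import subprocess

SCRIPT = "./experiments/scripts/faster_rcnn_end2end.sh"


def train_command(gpu_id, net="VGG16", dataset="pascal_voc", set_cfgs=None):
    """Build the argument list for faster_rcnn_end2end.sh.

    set_cfgs is a hypothetical dict of overrides appended after --set as
    KEY VALUE pairs; which keys the script accepts is an assumption.
    """
    cmd = [SCRIPT, str(gpu_id), net, dataset]
    if set_cfgs:
        cmd.append("--set")
        for key, value in set_cfgs.items():
            cmd.extend([key, str(value)])
    return cmd


def run_training(root, **kwargs):
    """Run the script with root ($AffordanceNet_ROOT) as the working directory."""
    return subprocess.call(train_command(**kwargs), cwd=root)
```

Passing a list (rather than one shell string) avoids quoting problems with values that contain spaces.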

- AffordanceNet vs. Mask R-CNN: AffordanceNet can be seen as a generalization of Mask R-CNN to the case where each object instance contains multiple classes (its affordances).

- The current network architecture is slightly different from the one in the paper, but it achieves the same accuracy.

- Train AffordanceNet on your data:

- Format your images as in the Pascal-VOC dataset (see the $AffordanceNet_ROOT/data/VOCdevkit2012 folder).

- Prepare the affordance masks (see the $AffordanceNet_ROOT/data/cache folder): for each object in the image, create a mask and save it as a .sm file. See $AffordanceNet_ROOT/utils for details.

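The difference between a Mask R-CNN mask and an AffordanceNet mask can be made concrete: Mask R-CNN keeps a binary foreground mask per instance, while AffordanceNet assigns each pixel inside an instance one of several affordance labels. The sketch below illustrates only that labeling step, as a per-pixel argmax over class score maps with class 0 assumed to be background. It is not AffordanceNet's actual mask branch, and it does not write .sm files, whose format is defined by the scripts in $AffordanceNet_ROOT/utils.

```python
def instance_affordance_mask(scores):
    """Multi-class mask for one instance via per-pixel argmax.

    Illustrative sketch: scores[c][y][x] is the score of class c at pixel (x, y)
    of the instance's mask grid, with c = 0 assumed to be background and
    c = 1..K the affordances. Ties go to the lower class id.
    """
    num_classes = len(scores)
    height, width = len(scores[0]), len(scores[0][0])
    labels = []
    for y in range(height):
        row = []
        for x in range(width):
            row.append(max(range(num_classes), key=lambda c: scores[c][y][x]))
        labels.append(row)
    return labels


def binary_instance_mask(fg_prob, threshold=0.5):
    """Mask R-CNN-style special case: threshold a single foreground probability map."""
    return [[1 if p >= threshold else 0 for p in row] for row in fg_prob]
```

With K = 1 affordance, the argmax reduces to comparing foreground against background, which is the binary case handled by binary_instance_mask.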

If you find AffordanceNet useful in your research, please consider citing:

@article{AffordanceNet17,

title={AffordanceNet: An End-to-End Deep Learning Approach for Object Affordance Detection},

author={Do, Thanh-Toan and Nguyen, Anh and Reid, Ian and Caldwell, Darwin G and Tsagarakis, Nikos G},

journal={arXiv:1709.07326},

year={2017}

}

If you use IIT-AFF dataset, please consider citing:

@inproceedings{Nguyen17,

title={Object-Based Affordances Detection with Convolutional Neural Networks and Dense Conditional Random Fields},

author={Nguyen, Anh and Kanoulas, Dimitrios and Caldwell, Darwin G and Tsagarakis, Nikos G},

booktitle = {IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

year={2017},

}

MIT License

This repo uses a large amount of source code from Faster-RCNN.

If you have any questions or comments, please send us an email: thanh-toan.do@adelaide.edu.au and anh.nguyen@iit.it