
Image filter (Vite)

In this example we're going to build a web app that applies a filter to an image. We'll create two versions: one that processes the image in the UI thread and another that offloads the task to web workers.

First, we'll create the basic skeleton:

npm create vite@latest

Need to install the following packages:

create-vite@5.2.2

Ok to proceed? (y) y

✔ Project name: … filter

✔ Select a framework: › React

✔ Select a variant: › JavaScript + SWC

cd filter

npm install

npm install --save-dev rollup-plugin-zigar

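mkdir zig img

Add the plugin in vite.config.js:

import { defineConfig } from 'vite'

import react from '@vitejs/plugin-react-swc'

import zigar from 'rollup-plugin-zigar'

// https://vitejs.dev/config/

export default defineConfig({

plugins: [react(), zigar({ topLevelAwait: false })],

})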

Replace the code in App.jsx with the following:

import { useCallback, useEffect, useRef, useState } from 'react';

import SampleImage from '../img/sample.png';

import './App.css';

function App() {

const srcCanvasRef = useRef();

const dstCanvasRef = useRef();

const fileInputRef = useRef();

const [ bitmap, setBitmap ] = useState();

const [ intensity, setIntensity ] = useState(0.3);

const onOpenClick = useCallback(() => {

fileInputRef.current.click();

}, []);

const onFileChange = useCallback(async (evt) => {

const [ file ] = evt.target.files;

if (file) {

const bitmap = await createImageBitmap(file);

setBitmap(bitmap);

}

}, []);

const onRangeChange = useCallback((evt) => {

setIntensity(parseFloat(evt.target.value));

}, [])

useEffect(() => {

// load initial sample image

(async () => {

const img = new Image();

img.src = SampleImage;

await img.decode();

const bitmap = await createImageBitmap(img);

setBitmap(bitmap);

})();

}, []);

useEffect(() => {

// update bitmap after user has selected a different one

if (bitmap) {

const srcCanvas = srcCanvasRef.current;

srcCanvas.width = bitmap.width;

srcCanvas.height = bitmap.height;

const ctx = srcCanvas.getContext('2d', { willReadFrequently: true });

ctx.drawImage(bitmap, 0, 0);

}

}, [ bitmap ]);

useEffect(() => {

// update the result when the bitmap or intensity parameter changes

if (bitmap) {

const srcCanvas = srcCanvasRef.current;

const dstCanvas = dstCanvasRef.current;

const srcCTX = srcCanvas.getContext('2d', { willReadFrequently: true });

const { width, height } = srcCanvas;

const srcImageData = srcCTX.getImageData(0, 0, width, height);

dstCanvas.width = width;

dstCanvas.height = height;

const dstCTX = dstCanvas.getContext('2d');

dstCTX.putImageData(srcImageData, 0, 0);

}

}, [ bitmap, intensity ]);

return (

<div className="App">

<div className="nav">

<span className="button" onClick={onOpenClick}>Open</span>

<input ref={fileInputRef} type="file" className="hidden" accept="image/*" onChange={onFileChange}/>

</div>

<div className="contents">

<div className="pane align-right">

<canvas ref={srcCanvasRef}></canvas>

</div>

<div className="pane align-left">

<canvas ref={dstCanvasRef}></canvas>

<div className="controls">

Intensity: <input type="range" min={0} max={1} step={0.0001} value={intensity} onChange={onRangeChange}/>

</div>

</div>

</div>

</div>

)

}

export default App

Basically, we have two HTML canvases in our app. We load the initial image with the first useEffect hook, placing the resulting bitmap into the state variable bitmap:

useEffect(() => {

// load initial sample image

(async () => {

const img = new Image();

img.src = SampleImage;

await img.decode();

const bitmap = await createImageBitmap(img);

setBitmap(bitmap);

})();

}, []);

The async IIFE is necessary here because useEffect expects its callback to return either nothing or a cleanup function. An async callback would return a promise instead.
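To see why the wrapper matters, here is a small browser-free sketch. runEffect is a hypothetical stand-in for React's effect runner that throws when the callback returns something other than undefined or a function (React itself reports this as an error in development rather than throwing at this point). The cancelled flag in the IIFE version is an optional addition, not part of the app above, that keeps a late result from being applied after the effect has been torn down.

```javascript
// Hypothetical stand-in for React's effect runner: the callback's return
// value must be undefined or a cleanup function.
function runEffect(effect) {
  const cleanup = effect();
  if (cleanup !== undefined && typeof cleanup !== 'function') {
    throw new TypeError('An effect must return nothing or a cleanup function');
  }
  return cleanup;
}

// Passing an async function directly: it always returns a promise.
function asyncEffect() {
  return runEffect(async () => {
    await Promise.resolve();
  });
}

// The pattern used in App.jsx: the callback stays synchronous and starts
// the async work in an immediately invoked async function expression.
function iifeEffect(onLoaded) {
  return runEffect(() => {
    let cancelled = false;
    (async () => {
      // Stands in for img.decode() and createImageBitmap().
      const value = await Promise.resolve(42);
      if (!cancelled) {
        onLoaded(value); // stands in for setBitmap()
      }
    })();
    // Optional cleanup: ignore results that arrive after teardown.
    return () => {
      cancelled = true;
    };
  });
}
```

Returning a cleanup function like this is allowed, so the IIFE version satisfies the contract while the async version does not.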

The second useEffect hook, activated when bitmap changes, draws the bitmap on the first canvas:

useEffect(() => {

// update bitmap after user has selected a different one

if (bitmap) {

const srcCanvas = srcCanvasRef.current;

srcCanvas.width = bitmap.width;

srcCanvas.height = bitmap.height;

const ctx = srcCanvas.getContext('2d', { willReadFrequently: true });

ctx.drawImage(bitmap, 0, 0);

}

}, [ bitmap ]);

The third useEffect hook then obtains an ImageData object from the first canvas and draws it on the second canvas:

useEffect(() => {

// update the result when the bitmap or intensity parameter changes

if (bitmap) {

const srcCanvas = srcCanvasRef.current;

const dstCanvas = dstCanvasRef.current;

const srcCTX = srcCanvas.getContext('2d', { willReadFrequently: true });

const { width, height } = srcCanvas;

const srcImageData = srcCTX.getImageData(0, 0, width, height);

dstCanvas.width = width;

dstCanvas.height = height;

const dstCTX = dstCanvas.getContext('2d');

dstCTX.putImageData(srcImageData, 0, 0);

}

}, [ bitmap, intensity ]);

We need a new index.css:

:root {

font-family: Inter, system-ui, Avenir, Helvetica, Arial, sans-serif;

line-height: 1.5;

font-weight: 400;

color-scheme: light dark;

color: rgba(255, 255, 255, 0.87);

background-color: #242424;

font-synthesis: none;

text-rendering: optimizeLegibility;

-webkit-font-smoothing: antialiased;

-moz-osx-font-smoothing: grayscale;

}

body {

margin: 0;

display: flex;

flex-direction: column;

place-items: center;

min-width: 320px;

min-height: 100vh;

}

And App.css:

#root {

flex: 1 1 100%;

width: 100%;

}

.App {

display: flex;

position: relative;

flex-direction: column;

width: 100%;

height: 100%;

}

.App .nav {

position: fixed;

width: 100%;

color: #000000;

background-color: #999999;

font-weight: bold;

flex: 0 0 auto;

padding: 2px 2px 1px 2px;

}

.App .nav .button {

padding: 2px;

cursor: pointer;

}

.App .nav .button:hover {

color: #ffffff;

background-color: #000000;

padding: 2px 10px 2px 10px;

}

.App .contents {

display: flex;

width: 100%;

margin-top: 2em;

}

.App .contents .pane {

flex: 1 1 50%;

padding: 5px 5px 5px 5px;

}

.App .contents .pane CANVAS {

border: 1px dotted rgba(255, 255, 255, 0.10);

max-width: 100%;

max-height: 90vh;

}

.App .contents .pane .controls INPUT {

vertical-align: middle;

width: 50%;

}

@media screen and (max-width: 600px) {

.App .contents {

flex-direction: column;

}

.App .contents .pane {

padding: 1px 2px 1px 2px;

}

.App .contents .pane .controls {

padding-left: 4px;

}

}

.hidden {

position: absolute;

visibility: hidden;

z-index: -1;

}

.align-left {

text-align: left;

}

.align-right {

text-align: right;

}

Finally, download the following image into img as sample.png (or choose an image of your own):

With everything in place, we can start Vite in dev mode:

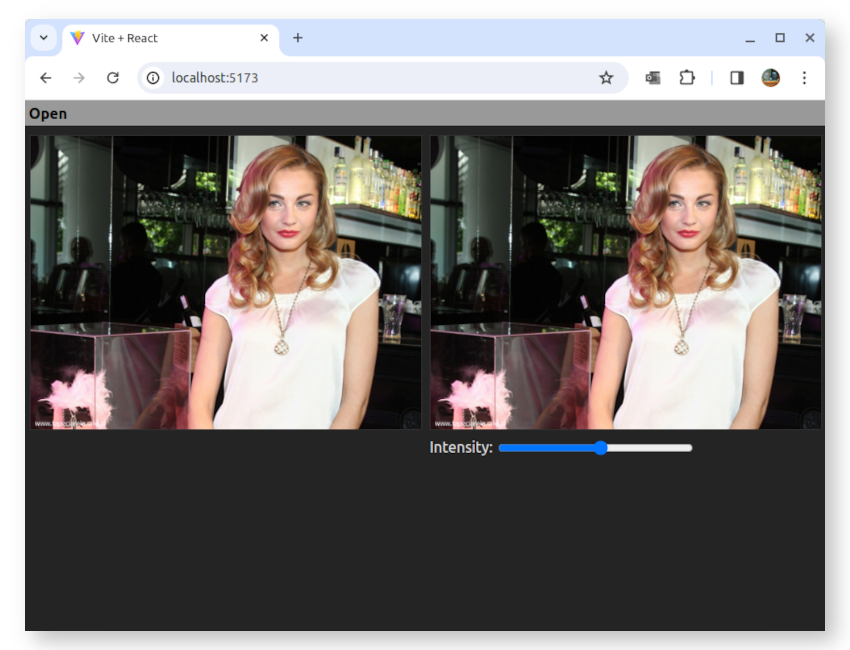

npm run devYou should see the following in the browser:

Nothing will happen when you move the slider, as we haven't yet implemented the filtering functionality. We'll proceed with doing so now that we see that the basic code for our app is working.

First, we add our Zig file, zig/sepia.zig:

// Pixel Bender kernel "Sepia" (translated using pb2zig)

const std = @import("std");

pub const kernel = struct {

// kernel information

pub const namespace = "AIF";

pub const vendor = "Adobe Systems";

pub const version = 2;

pub const description = "a variable sepia filter";

pub const parameters = .{

.intensity = .{

.type = f32,

.minValue = 0.0,

.maxValue = 1.0,

.defaultValue = 0.0,

},

};

pub const inputImages = .{

.src = .{ .channels = 4 },

};

pub const outputImages = .{

.dst = .{ .channels = 4 },

};

// generic kernel instance type

fn Instance(comptime InputStruct: type, comptime OutputStruct: type, comptime ParameterStruct: type) type {

return struct {

params: ParameterStruct,

input: InputStruct,

output: OutputStruct,

outputCoord: @Vector(2, u32) = @splat(0),

// output pixel

dst: @Vector(4, f32) = undefined,

// functions defined in kernel

pub fn evaluatePixel(self: *@This()) void {

const intensity = self.params.intensity;

const src = self.input.src;

const dst = self.output.dst;

self.dst = @splat(0.0);

var rgbaColor: @Vector(4, f32) = undefined;

var yiqaColor: @Vector(4, f32) = undefined;

const YIQMatrix: [4]@Vector(4, f32) = .{

.{

0.299,

0.596,

0.212,

0.0,

},

.{

0.587,

-0.275,

-0.523,

0.0,

},

.{

0.114,

-0.321,

0.311,

0.0,

},

.{ 0.0, 0.0, 0.0, 1.0 },

};

const inverseYIQ: [4]@Vector(4, f32) = .{

.{ 1.0, 1.0, 1.0, 0.0 },

.{

0.956,

-0.272,

-1.1,

0.0,

},

.{

0.621,

-0.647,

1.7,

0.0,

},

.{ 0.0, 0.0, 0.0, 1.0 },

};

rgbaColor = src.sampleNearest(self.outCoord());

yiqaColor = @"M * V"(YIQMatrix, rgbaColor);

yiqaColor[1] = intensity;

yiqaColor[2] = 0.0;

self.dst = @"M * V"(inverseYIQ, yiqaColor);

dst.setPixel(self.outputCoord[0], self.outputCoord[1], self.dst);

}

pub fn outCoord(self: *@This()) @Vector(2, f32) {

return .{ @as(f32, @floatFromInt(self.outputCoord[0])) + 0.5, @as(f32, @floatFromInt(self.outputCoord[1])) + 0.5 };

}

};

}

// kernel instance creation function

pub fn create(input: anytype, output: anytype, params: anytype) Instance(@TypeOf(input), @TypeOf(output), @TypeOf(params)) {

return .{

.input = input,

.output = output,

.params = params,

};

}

// built-in Pixel Bender functions

fn @"M * V"(m1: anytype, v2: anytype) @TypeOf(v2) {

const ar = @typeInfo(@TypeOf(m1)).Array;

var t1: @TypeOf(m1) = undefined;

inline for (m1, 0..) |column, c| {

comptime var r = 0;

inline while (r < ar.len) : (r += 1) {

t1[r][c] = column[r];

}

}

var result: @TypeOf(v2) = undefined;

inline for (t1, 0..) |column, c| {

result[c] = @reduce(.Add, column * v2);

}

return result;

}

};

pub const Input = KernelInput(u8, kernel);

pub const Output = KernelOutput(u8, kernel);

pub const Parameters = KernelParameters(kernel);

// support both 0.11 and 0.12

const enum_auto = if (@hasField(std.builtin.Type.ContainerLayout, "Auto")) .Auto else .auto;

pub fn createOutput(allocator: std.mem.Allocator, width: u32, height: u32, input: Input, params: Parameters) !Output {

return createPartialOutput(allocator, width, height, 0, height, input, params);

}

pub fn createPartialOutput(allocator: std.mem.Allocator, width: u32, height: u32, start: u32, count: u32, input: Input, params: Parameters) !Output {

var output: Output = undefined;

inline for (std.meta.fields(Output)) |field| {

const ImageT = @TypeOf(@field(output, field.name));

@field(output, field.name) = .{

.data = try allocator.alloc(ImageT.Pixel, count * width),

.width = width,

.height = height,

.offset = start * width,

};

}

var instance = kernel.create(input, output, params);

if (@hasDecl(@TypeOf(instance), "evaluateDependents")) {

instance.evaluateDependents();

}

const end = start + count;

instance.outputCoord[1] = start;

while (instance.outputCoord[1] < end) : (instance.outputCoord[1] += 1) {

instance.outputCoord[0] = 0;

while (instance.outputCoord[0] < width) : (instance.outputCoord[0] += 1) {

instance.evaluatePixel();

}

}

return output;

}

const ColorSpace = enum { srgb, @"display-p3" };

pub fn Image(comptime T: type, comptime len: comptime_int, comptime writable: bool) type {

return struct {

pub const Pixel = @Vector(4, T);

pub const FPixel = @Vector(len, f32);

pub const channels = len;

data: if (writable) []Pixel else []const Pixel,

width: u32,

height: u32,

colorSpace: ColorSpace = .srgb,

offset: usize = 0,

fn constrain(v: anytype, min: f32, max: f32) @TypeOf(v) {

const lower: @TypeOf(v) = @splat(min);

const upper: @TypeOf(v) = @splat(max);

const v2 = @select(f32, v > lower, v, lower);

return @select(f32, v2 < upper, v2, upper);

}

fn pbPixelFromFloatPixel(pixel: Pixel) FPixel {

if (len == 4) {

return pixel;

}

const mask: @Vector(len, i32) = switch (len) {

1 => .{0},

2 => .{ 0, 3 },

3 => .{ 0, 1, 2 },

else => @compileError("Unsupported number of channels: " ++ len),

};

return @shuffle(f32, pixel, undefined, mask);

}

fn floatPixelFromPBPixel(pixel: FPixel) Pixel {

if (len == 4) {

return pixel;

}

const alpha: @Vector(1, T) = if (len == 1 or len == 3) .{1} else undefined;

const mask: @Vector(len, i32) = switch (len) {

1 => .{ 0, 0, 0, -1 },

2 => .{ 0, 0, 0, 1 },

3 => .{ 0, 1, 2, -1 },

else => @compileError("Unsupported number of channels: " ++ len),

};

return @shuffle(T, pixel, alpha, mask);

}

fn pbPixelFromIntPixel(pixel: Pixel) FPixel {

const numerator: FPixel = switch (@hasDecl(std.math, "fabs")) {

// Zig 0.12.0

false => switch (len) {

1 => @floatFromInt(@shuffle(T, pixel, undefined, @Vector(1, i32){0})),

2 => @floatFromInt(@shuffle(T, pixel, undefined, @Vector(2, i32){ 0, 3 })),

3 => @floatFromInt(@shuffle(T, pixel, undefined, @Vector(3, i32){ 0, 1, 2 })),

4 => @floatFromInt(pixel),

else => @compileError("Unsupported number of channels: " ++ len),

},

// Zig 0.11.0

true => switch (len) {

1 => .{

@floatFromInt(pixel[0]),

},

2 => .{

@floatFromInt(pixel[0]),

@floatFromInt(pixel[3]),

},

3 => .{

@floatFromInt(pixel[0]),

@floatFromInt(pixel[1]),

@floatFromInt(pixel[2]),

},

4 => .{

@floatFromInt(pixel[0]),

@floatFromInt(pixel[1]),

@floatFromInt(pixel[2]),

@floatFromInt(pixel[3]),

},

else => @compileError("Unsupported number of channels: " ++ len),

},

};

const denominator: FPixel = @splat(@floatFromInt(std.math.maxInt(T)));

return numerator / denominator;

}

fn intPixelFromPBPixel(pixel: FPixel) Pixel {

const max: f32 = @floatFromInt(std.math.maxInt(T));

const multiplier: FPixel = @splat(max);

const product: FPixel = constrain(pixel * multiplier, 0, max);

const maxAlpha: @Vector(1, f32) = .{std.math.maxInt(T)};

return switch (@hasDecl(std.math, "fabs")) {

// Zig 0.12.0

false => switch (len) {

1 => @intFromFloat(@shuffle(f32, product, maxAlpha, @Vector(4, i32){ 0, 0, 0, -1 })),

2 => @intFromFloat(@shuffle(f32, product, undefined, @Vector(4, i32){ 0, 0, 0, 1 })),

3 => @intFromFloat(@shuffle(f32, product, maxAlpha, @Vector(4, i32){ 0, 1, 2, -1 })),

4 => @intFromFloat(product),

else => @compileError("Unsupported number of channels: " ++ len),

},

// Zig 0.11.0

true => switch (len) {

1 => .{

@intFromFloat(product[0]),

@intFromFloat(product[0]),

@intFromFloat(product[0]),

maxAlpha[0],

},

2 => .{

@intFromFloat(product[0]),

@intFromFloat(product[0]),

@intFromFloat(product[0]),

@intFromFloat(product[1]),

},

3 => .{

@intFromFloat(product[0]),

@intFromFloat(product[1]),

@intFromFloat(product[2]),

maxAlpha[0],

},

4 => .{

@intFromFloat(product[0]),

@intFromFloat(product[1]),

@intFromFloat(product[2]),

@intFromFloat(product[3]),

},

else => @compileError("Unsupported number of channels: " ++ len),

},

};

}

fn getPixel(self: @This(), x: u32, y: u32) FPixel {

const index = (y * self.width) + x - self.offset;

const src_pixel = self.data[index];

const pixel: FPixel = switch (@typeInfo(T)) {

.Float => pbPixelFromFloatPixel(src_pixel),

.Int => pbPixelFromIntPixel(src_pixel),

else => @compileError("Unsupported type: " ++ @typeName(T)),

};

return pixel;

}

fn setPixel(self: @This(), x: u32, y: u32, pixel: FPixel) void {

if (comptime !writable) {

return;

}

const index = (y * self.width) + x - self.offset;

const dst_pixel: Pixel = switch (@typeInfo(T)) {

.Float => floatPixelFromPBPixel(pixel),

.Int => intPixelFromPBPixel(pixel),

else => @compileError("Unsupported type: " ++ @typeName(T)),

};

self.data[index] = dst_pixel;

}

fn pixelSize(self: @This()) @Vector(2, f32) {

_ = self;

return .{ 1, 1 };

}

fn pixelAspectRatio(self: @This()) f32 {

_ = self;

return 1;

}

inline fn getPixelAt(self: @This(), coord: @Vector(2, f32)) FPixel {

const left_top: @Vector(2, f32) = .{ 0, 0 };

const bottom_right: @Vector(2, f32) = .{ @floatFromInt(self.width - 1), @floatFromInt(self.height - 1) };

if (@reduce(.And, coord >= left_top) and @reduce(.And, coord <= bottom_right)) {

const ic: @Vector(2, u32) = switch (@hasDecl(std.math, "fabs")) {

// Zig 0.12.0

false => @intFromFloat(coord),

// Zig 0.11.0

true => .{ @intFromFloat(coord[0]), @intFromFloat(coord[1]) },

};

return self.getPixel(ic[0], ic[1]);

} else {

return @splat(0);

}

}

fn sampleNearest(self: @This(), coord: @Vector(2, f32)) FPixel {

return self.getPixelAt(coord);

}

fn sampleLinear(self: @This(), coord: @Vector(2, f32)) FPixel {

const c = coord - @as(@Vector(2, f32), @splat(0.5));

const c0 = @floor(c);

const f0 = c - c0;

const f1 = @as(@Vector(2, f32), @splat(1)) - f0;

const w: @Vector(4, f32) = .{

f1[0] * f1[1],

f0[0] * f1[1],

f1[0] * f0[1],

f0[0] * f0[1],

};

const p00 = self.getPixelAt(c0);

const p01 = self.getPixelAt(c0 + @as(@Vector(2, f32), .{ 0, 1 }));

const p10 = self.getPixelAt(c0 + @as(@Vector(2, f32), .{ 1, 0 }));

const p11 = self.getPixelAt(c0 + @as(@Vector(2, f32), .{ 1, 1 }));

var result: FPixel = undefined;

comptime var i = 0;

inline while (i < len) : (i += 1) {

const p: @Vector(4, f32) = .{ p00[i], p10[i], p01[i], p11[i] };

result[i] = @reduce(.Add, p * w);

}

return result;

}

};

}

pub fn KernelInput(comptime T: type, comptime Kernel: type) type {

const input_fields = std.meta.fields(@TypeOf(Kernel.inputImages));

comptime var struct_fields: [input_fields.len]std.builtin.Type.StructField = undefined;

inline for (input_fields, 0..) |field, index| {

const input = @field(Kernel.inputImages, field.name);

const ImageT = Image(T, input.channels, false);

const default_value: ImageT = undefined;

struct_fields[index] = .{

.name = field.name,

.type = ImageT,

.default_value = @ptrCast(&default_value),

.is_comptime = false,

.alignment = @alignOf(ImageT),

};

}

return @Type(.{

.Struct = .{

.layout = enum_auto,

.fields = &struct_fields,

.decls = &.{},

.is_tuple = false,

},

});

}

pub fn KernelOutput(comptime T: type, comptime Kernel: type) type {

const output_fields = std.meta.fields(@TypeOf(Kernel.outputImages));

comptime var struct_fields: [output_fields.len]std.builtin.Type.StructField = undefined;

inline for (output_fields, 0..) |field, index| {

const output = @field(Kernel.outputImages, field.name);

const ImageT = Image(T, output.channels, true);

const default_value: ImageT = undefined;

struct_fields[index] = .{

.name = field.name,

.type = ImageT,

.default_value = @ptrCast(&default_value),

.is_comptime = false,

.alignment = @alignOf(ImageT),

};

}

return @Type(.{

.Struct = .{

.layout = enum_auto,

.fields = &struct_fields,

.decls = &.{},

.is_tuple = false,

},

});

}

pub fn KernelParameters(comptime Kernel: type) type {

const param_fields = std.meta.fields(@TypeOf(Kernel.parameters));

comptime var struct_fields: [param_fields.len]std.builtin.Type.StructField = undefined;

inline for (param_fields, 0..) |field, index| {

const param = @field(Kernel.parameters, field.name);

const default_value: ?*const anyopaque = get_def: {

const value: param.type = if (@hasField(@TypeOf(param), "defaultValue"))

param.defaultValue

else switch (@typeInfo(param.type)) {

.Int, .Float => 0,

.Bool => false,

.Vector => @splat(0),

else => @compileError("Unrecognized parameter type: " ++ @typeName(param.type)),

};

break :get_def @ptrCast(&value);

};

struct_fields[index] = .{

.name = field.name,

.type = param.type,

.default_value = default_value,

.is_comptime = false,

.alignment = @alignOf(param.type),

};

}

return @Type(.{

.Struct = .{

.layout = enum_auto,

.fields = &struct_fields,

.decls = &.{},

.is_tuple = false,

},

});

}

The above code was translated from a Pixel Bender filter using pb2zig. Consult the intro page for an explanation of how it works.
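For readers who want to check the math, here is a plain-JavaScript sketch of what evaluatePixel() computes for one pixel. It is not part of the app. It assumes 8-bit RGBA input as in the Zig code, and because it uses 64-bit floats instead of f32, a channel may occasionally differ by one from the WASM output. The matrices are stored as arrays of columns, and mulMatVec() reproduces the product that @"M * V" computes after transposing them.

```javascript
// Matrices copied from sepia.zig. Each inner array is a column,
// matching the layout that @"M * V" expects.
const YIQMatrix = [
  [0.299, 0.596, 0.212, 0.0],
  [0.587, -0.275, -0.523, 0.0],
  [0.114, -0.321, 0.311, 0.0],
  [0.0, 0.0, 0.0, 1.0],
];
const inverseYIQ = [
  [1.0, 1.0, 1.0, 0.0],
  [0.956, -0.272, -1.1, 0.0],
  [0.621, -0.647, 1.7, 0.0],
  [0.0, 0.0, 0.0, 1.0],
];

// Column-major matrix * vector, like @"M * V":
// result[r] = sum over c of m[c][r] * v[c].
function mulMatVec(m, v) {
  const result = [0, 0, 0, 0];
  for (let c = 0; c < 4; c++) {
    for (let r = 0; r < 4; r++) {
      result[r] += m[c][r] * v[c];
    }
  }
  return result;
}

// One pixel through the kernel; rgba holds four 0..255 values.
function sepiaPixel(rgba, intensity) {
  // Normalize to 0..1, as pbPixelFromIntPixel() does.
  const rgbaColor = rgba.map((v) => v / 255);
  const yiqaColor = mulMatVec(YIQMatrix, rgbaColor);
  yiqaColor[1] = intensity; // I (orange-blue axis) set by the slider
  yiqaColor[2] = 0.0; // Q (purple-green axis) removed
  const dst = mulMatVec(inverseYIQ, yiqaColor);
  // Scale, clamp to 0..255 and truncate, as intPixelFromPBPixel() does.
  return dst.map((v) => Math.trunc(Math.min(Math.max(v * 255, 0), 255)));
}
```

With intensity at 0 only the luma survives, so the output is grayscale; raising intensity shifts red up and blue down, which produces the sepia tint.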

At the top of App.jsx, insert the following import statement:

import { createOutput } from '../zig/sepia.zig';

At the bottom, add this function:

async function createImageData(width, height, source, params) {

const input = { src: source };

const output = await createOutput(width, height, input, params);

const ta = output.dst.data.typedArray;

const clampedArray = new Uint8ClampedArray(ta.buffer, ta.byteOffset, ta.byteLength);

return new ImageData(clampedArray, width, height);

}

The Zig function createOutput() has the following declaration:

pub fn createOutput(

allocator: std.mem.Allocator,

width: u32,

height: u32,

input: Input,

params: Parameters,

) !Output

The allocator argument is provided automatically by Zigar. We get width and height from the source canvas. params contains a single f32, intensity, which we initialize from our state variable of the same name; that variable changes when we move the slider.

Input is a parameterized type:

pub const Input = KernelInput(u8, kernel);

This expands to:

pub const Input = struct {

src: Image(u8, 4, false),

};

Then further to:

pub const Input = struct {

src: struct {

pub const Pixel = @Vector(4, u8);

pub const FPixel = @Vector(4, f32);

pub const channels = 4;

data: []const Pixel,

width: u32,

height: u32,

colorSpace: ColorSpace = .srgb,

offset: usize = 0,

},

};

Image was purposely defined so that it is compatible with the browser's ImageData. Its data field is []const @Vector(4, u8), a slice that accepts a Uint8ClampedArray as its target without casting. We can therefore simply pass { src: source } to createOutput as input.
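Because both sides use four bytes per pixel, pixel k of an ImageData occupies bytes 4k to 4k + 3, and element k of the Zig slice covers exactly those bytes. The sketch below uses hypothetical helper names. It mirrors the index calculation in Image.getPixel(), including the offset that createPartialOutput() sets when an image holds only a band of rows, and it shows that wrapping a Uint8Array in a Uint8ClampedArray creates a second view over the same memory rather than a copy.

```javascript
// Index of pixel (x, y), as computed in Image.getPixel()/setPixel().
// offset is the number of pixels that precede the first stored row.
function pixelIndex(x, y, width, offset = 0) {
  return y * width + x - offset;
}

// Read the RGBA bytes of pixel (x, y) from a flat byte array.
function readPixel(bytes, x, y, width, offset = 0) {
  const start = pixelIndex(x, y, width, offset) * 4;
  return Array.from(bytes.subarray(start, start + 4));
}

// Reinterpret a Uint8Array as a Uint8ClampedArray without copying:
// both views share the same region of the same ArrayBuffer.
function asClamped(ta) {
  return new Uint8ClampedArray(ta.buffer, ta.byteOffset, ta.byteLength);
}
```

For example, in a 2x2 image whose bytes are 0 to 15, readPixel(bytes, 1, 1, 2) returns the last four bytes.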

Like Input, Output is a parameterized type. It too can potentially contain multiple images. In this case (and most cases), there's only one:

pub const Output = struct {

dst: struct {

pub const Pixel = @Vector(4, u8);

pub const FPixel = @Vector(4, f32);

pub const channels = 4;

data: []Pixel,

width: u32,

height: u32,

colorSpace: ColorSpace = .srgb,

offset: usize = 0,

},

};

The typedArray property of output.dst.data gives us a Uint8Array. ImageData wants a Uint8ClampedArray, so we need to convert it before passing it to the constructor:

const ta = output.dst.data.typedArray;

const clampedArray = new Uint8ClampedArray(ta.buffer, ta.byteOffset, ta.byteLength);

return new ImageData(clampedArray, width, height);

Now it's just a matter of inserting a call into our useEffect hook:

useEffect(() => {

// update the result when the bitmap or intensity parameter changes

(async() => {

if (bitmap) {

const srcCanvas = srcCanvasRef.current;

const dstCanvas = dstCanvasRef.current;

const srcCTX = srcCanvas.getContext('2d', { willReadFrequently: true });

const { width, height } = srcCanvas;

const srcImageData = srcCTX.getImageData(0, 0, width, height);

const dstImageData = await createImageData(width, height, srcImageData, { intensity });

dstCanvas.width = width;

dstCanvas.height = height;

const dstCTX = dstCanvas.getContext('2d');

dstCTX.putImageData(dstImageData, 0, 0);

}

})();

}, [ bitmap, intensity ]);We have to wrap everything in an async iife due to createImageData being async, which in turn is

due to our disabling of top-level await to accommodate older browsers.

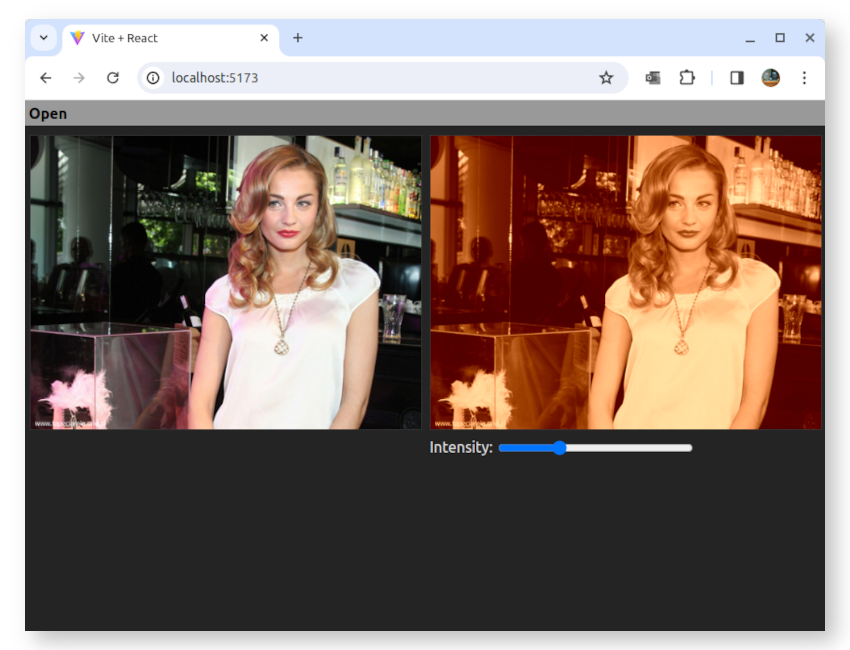

Now our app does what it's supoosed to:

If you open a large image in the app, you might notice the slider becoming "sticky". This happens because the image filter blocks the UI thread for an extended amount of time. To keep the UI responsive, we want to offload the work to web workers.

To begin, create worker.js in the src folder and move createImageData() into it, along with the import statement for our Zig filter:

import { createOutput } from '../zig/sepia.zig';

async function createImageData(width, height, source, params) {

const input = { src: source };

const output = await createOutput(width, height, input, params);

const ta = output.dst.data.typedArray;

const clampedArray = new Uint8ClampedArray(ta.buffer, ta.byteOffset, ta.byteLength);

return new ImageData(clampedArray, width, height);

}

Then add the following communication plumbing:

onmessage = async (evt) => {

const [ name, jobId, ...args ] = evt.data;

try {

const [ result, transfer ] = await runFunction(name, args);

postMessage([ name, jobId, result ], { transfer });

} catch (err) {

postMessage([ 'error', jobId, err ]);

}

};

async function runFunction(name, args) {

switch (name) {

case 'createImageData': {

const output = await createImageData(...args);

// hand the pixel buffer back to the UI thread instead of copying it

const transfer = [ output.data.buffer ];

return [ output, transfer ];

}

default:

throw new Error(`Unknown function: ${name}`);

}

}

postMessage('ready');

When our worker receives a message, it calls createImageData() and sends a message back with the result. The message contains a job id, which allows the UI thread to find the right promise to resolve. The transfer option of postMessage() is used to avoid unnecessary copying of memory. The final postMessage('ready') lets the UI thread know that the worker has finished loading.
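The job-id handshake can be tried outside the browser. In this sketch, FakeWorker is a hypothetical stand-in for a real Worker: it handles messages on a later tick and copies them with structuredClone(), which is available in browsers and in Node 17+ and applies the same transfer-list semantics as postMessage(). Pending jobs are kept in a Map keyed by id, a small variation on the array that the App.jsx code below uses.

```javascript
// Hypothetical stand-in for a Worker running the plumbing from worker.js.
class FakeWorker {
  constructor(functions) {
    this.functions = functions;
    this.onmessage = null;
  }

  postMessage(message, { transfer = [] } = {}) {
    // Like postMessage(), structuredClone() detaches transferred buffers.
    const [name, jobId, ...args] = structuredClone(message, { transfer });
    setTimeout(async () => {
      try {
        const result = await this.functions[name](...args);
        this.onmessage({ data: [name, jobId, result] });
      } catch (err) {
        this.onmessage({ data: ['error', jobId, err] });
      }
    }, 0);
  }
}

// UI-thread side: remember each job's resolve/reject under its id.
const jobs = new Map();
let nextJobId = 1;

function startJob(worker, name, args = [], transfer = []) {
  const id = nextJobId++;
  const promise = new Promise((resolve, reject) => {
    jobs.set(id, { resolve, reject });
  });
  worker.postMessage([name, id, ...args], { transfer });
  return promise;
}

// Route each reply to the promise that belongs to its job id.
function handleMessage(evt) {
  const [name, jobId, result] = evt.data;
  const job = jobs.get(jobId);
  jobs.delete(jobId);
  if (name === 'error') {
    job.reject(result);
  } else {
    job.resolve(result);
  }
}
```

Right after startJob() returns, a transferred buffer has a byteLength of 0 on the sending side, which is how transfer avoids a copy: ownership moves instead.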

In App.jsx we add the following code:

async function createImageData(width, height, source, params) {

const args = [ width, height, source, params ];

const transfer = [ source.data.buffer ];

return startJob('createImageData', args, transfer);

}

function purgeQueue() {

pendingRequests.splice(0);

}

let keepAlive = true;

let maxCount = navigator.hardwareConcurrency;

const activeWorkers = [];

const idleWorkers = [];

const pendingRequests = [];

const jobs = [];

let nextJobId = 1;

async function acquireWorker() {

let worker = idleWorkers.shift();

if (!worker) {

if (maxCount < 1) {

throw new Error(`Unable to start worker because maxCount is ${maxCount}`);

}

if (activeWorkers.length < maxCount) {

// start a new one

worker = new Worker(new URL('./worker.js', import.meta.url), { type: 'module' });

// wait for start-up message from worker

await new Promise((resolve, reject) => {

worker.onmessage = resolve;

worker.onerror = reject;

});

worker.onmessage = handleMessage;

worker.onerror = (evt) => console.error(evt);

} else {

// wait for the next worker to become available again

return new Promise(resolve => pendingRequests.push(resolve));

}

}

activeWorkers.push(worker);

return worker;

}

async function startJob(name, args = [], transfer = []) {

const worker = await acquireWorker();

const job = {

id: nextJobId++,

promise: null,

resolve: null,

reject: null,

worker,

};

job.promise = new Promise((resolve, reject) => {

job.resolve = resolve;

job.reject = reject;

});

jobs.push(job);

worker.onmessageerror = () => job.reject(new Error('Message error'));

worker.postMessage([ name, job.id, ...args], { transfer });

return job.promise;

}

function handleMessage(evt) {

const [ name, jobId, result ] = evt.data;

const jobIndex = jobs.findIndex(j => j.id === jobId);

const job = jobs[jobIndex];

jobs.splice(jobIndex, 1);

const { worker, resolve, reject } = job;

if (name !== 'error') {

resolve(result);

} else {

reject(result);

}

// work on pending request if any

const next = pendingRequests.shift();

if (next) {

next(worker);

} else {

const workerIndex = activeWorkers.indexOf(worker);

if (workerIndex !== -1) {

activeWorkers.splice(workerIndex, 1);

}

if (keepAlive && idleWorkers.length < maxCount) {

idleWorkers.push(worker);

}

}

}

The code above implements a rudimentary job queue. The new version of createImageData() submits a job and waits for it to be completed. At the bottom of startJob(), you can see that the transfer option is used here as well, handing ownership of the ImageData's backing buffer to the worker:

worker.postMessage([ name, job.id, ...args], { transfer });

return job.promise;

}

Start up the app in dev mode again. The slider should now move more smoothly.
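The pooling policy in acquireWorker() and handleMessage() can also be studied apart from the Worker API. In the sketch below, createPool() and its createWorker factory are hypothetical names; in the app, the factory would construct a Worker and wait for its 'ready' message. At most maxCount workers are busy at once, extra requests wait in a FIFO queue, and a released worker goes straight to the oldest waiting request before it is returned to the idle list.

```javascript
function createPool(maxCount, createWorker) {
  const idle = []; // workers waiting for a job
  const pending = []; // resolvers of requests waiting for a worker
  let busy = 0; // workers currently running a job

  async function acquire() {
    if (idle.length > 0) {
      busy++;
      return idle.shift();
    }
    if (busy < maxCount) {
      busy++;
      return createWorker();
    }
    // Every worker is busy: wait until release() hands one over.
    return new Promise((resolve) => pending.push(resolve));
  }

  function release(worker) {
    const next = pending.shift();
    if (next) {
      next(worker); // the worker stays busy, serving the queued request
    } else {
      busy--;
      idle.push(worker);
    }
  }

  const stats = () => ({ busy, idle: idle.length, pending: pending.length });
  return { acquire, release, stats };
}
```

Handing a released worker directly to a waiting request, as handleMessage() does with next(worker), avoids a round trip through the idle list and keeps requests in arrival order.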

You will run into an error when you try to create a production build in the usual manner:

npm run build

vite v5.2.7 building for production...

✓ 19 modules transformed.

x Build failed in 446ms

error during build:

RollupError: Expression expected

at getRollupError (file:///.../node_modules/rollup/dist/es/shared/parseAst.js:376:41)

at ParseError.initialise (file:///.../node_modules/rollup/dist/es/shared/node-entry.js:11175:28)

at convertNode (file:///.../node_modules/rollup/dist/es/shared/node-entry.js:12915:10)

at convertProgram (file:///.../node_modules/rollup/dist/es/shared/node-entry.js:12235:12)

at Module.setSource (file:///.../node_modules/rollup/dist/es/shared/node-entry.js:14074:24)

at async ModuleLoader.addModuleSource (file:///.../node_modules/rollup/dist/es/shared/node-entry.js:18713:13)

As noted in the Vite documentation, "config.plugins only applies to workers in dev". In a production build, our existing config therefore leaves the Zig code imported by worker.js unprocessed, and Rollup erroneously tries to parse it as JavaScript.

To fix that, open vite.config.js and add a new worker section:

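import { defineConfig } from 'vite'

import react from '@vitejs/plugin-react-swc'

import zigar from 'rollup-plugin-zigar'

// https://vitejs.dev/config/

export default defineConfig({

plugins: [react(), zigar({ topLevelAwait: false })],

worker: {

format: 'es',

plugins: () => [zigar({ topLevelAwait: false })],

},

})

Now you're ready to build and preview: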

npm run build

npm run preview

Without the overhead of Zig runtime safety checks, the app should be much snappier. Note that nothing stops you from adding optimize: 'ReleaseSmall' to the plugin options, so that you get full performance from the WASM code even during development.

A major advantage of using Zig for a task like image processing is that the same code can be deployed both on the browser and on the server. After a user has made some changes to an image on the frontend, the backend can apply the exact same effect using the same code. Consult the Node version of this example to learn how to do it.

The image filter employed for this example is very rudimentary. Check out pb2zig's project page to see more advanced code.

That's it for now. I hope this tutorial is enough to get you started with using Zigar. The plan is to add more examples in the future. Stay tuned!