Can't optimize HighLevelGraph (delayed object) #6219

Comments

|

The functions in Apologies, but I don't have time right now to respond to your actual issue of fusing these tasks, but if you get stuck ping again and I can try to take a look. |

Thanks for the tip. I think I figured out some version of this in my last code example. But what I don't understand is how to go back to a Delayed object from the raw optimized graph:

dsk_fused = fuse(dask.utils.ensure_dict(store_future.dask))

dask.compute(dsk_fused)  # doesn't compute, just returns the same dict |

|

@rabernat

In [1]: from dask.delayed import Delayed

In [2]: def inc(x): return x + 1

In [3]: dsk = {'data': 0, 'result': (inc, 'data')}

In [4]: r = Delayed("result", dsk)

In [5]: r.compute()

Out[5]: 1Looking into why it doesn't fuse now. I have a guess. Edit: For "why doesn't this fuse", I think it's because we don't call diff --git a/dask/delayed.py b/dask/delayed.py

index 2d9ba5fdd..eba1da8c7 100644

--- a/dask/delayed.py

+++ b/dask/delayed.py

@@ -13,7 +13,7 @@ from .compatibility import is_dataclass, dataclass_fields

from .core import quote

from .context import globalmethod

-from .optimization import cull

+from .optimization import cull, fuse

from .utils import funcname, methodcaller, OperatorMethodMixin, ensure_dict, apply

from .highlevelgraph import HighLevelGraph

@@ -456,8 +456,9 @@ def right(method):

def optimize(dsk, keys, **kwargs):

dsk = ensure_dict(dsk)

- dsk2, _ = cull(dsk, keys)

- return dsk2

+ dsk2, deps = cull(dsk, keys)

+ dsk3, deps = fuse(dsk2, keys, dependencies=deps)

+ return dsk3

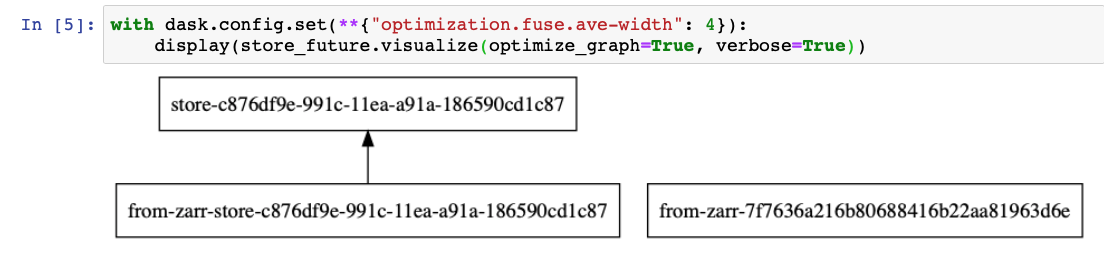

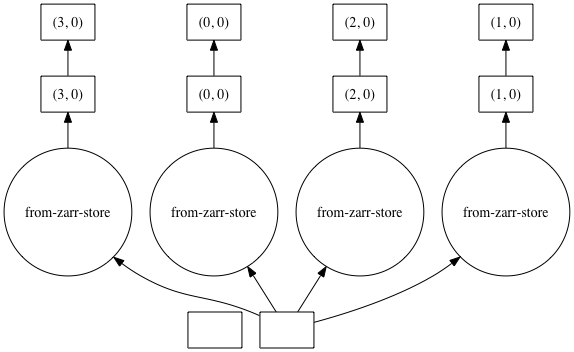

def rebuild(dsk, key, length):Then we get fusion, assuming the with dask.config.set(**{"optimization.fuse.ave-width": 4}):

display(store_future.visualize(optimize_graph=True, verbose=True))Should we be fusing |

|

Thanks for the tip @TomAugspurger! Seems obvious in retrospect, and is probably well documented. With that hint, I got to

from dask.delayed import Delayed

delayed_fused = Delayed("read-and-store", dsk_fused)

delayed_fused.compute()

However, this brought me a new error:

I have concluded that the way forward for many of my problems is to become more hands-on with dask optimizations, so this issue represents me trying to figure out how to apply them. Sorry if the issue tracker is not the ideal place for this to play out. I do feel that this reveals some opportunities for improving the documentation. |

|

I'm not sure this is the graph I want though. I want to fuse vertically, not horizontally. I don't want all my reads in one task and all my writes in the next task. Although this would reduce the total number of tasks, it would make my memory and communication problems worse. Instead, I want four distinct

Does this make sense? |

|

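To your error, fuse returns a tuple of dsk, dependencies, and the key passed to Delayed needs to be in the task graph. Try

dsk_fused, deps = fuse(dask.utils.ensure_dict(store_future.dask))

delayed_fused = Delayed(store_future._key, dsk_fused)

delayed_fused.compute()

I'm thinking more about your desired task graph. |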
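The round trip described above (low-level graph, then `fuse`, then back to a `Delayed`) can be sketched on a toy graph. This is a minimal illustration, not the code from the issue: `read_chunk`, `store_chunk`, and the keys `"read"` and `"store"` are hypothetical stand-ins for the real zarr read and store tasks.

```python
from dask.delayed import Delayed
from dask.optimization import fuse


def read_chunk(start):
    # Hypothetical stand-in for reading one chunk from a source store.
    return list(range(start, start + 3))


def store_chunk(data):
    # Hypothetical stand-in for writing a chunk; returns the item count.
    return len(data)


# A two-task linear chain in raw dict form: read -> store.
dsk = {"read": (read_chunk, 10), "store": (store_chunk, "read")}

# fuse returns a (graph, dependencies) tuple, so unpack both values.
# Passing keys=["store"] tells fuse that this output key must be preserved.
dsk_fused, deps = fuse(dsk, keys=["store"])

# The key handed to Delayed has to be present in the fused graph.
delayed_fused = Delayed("store", dsk_fused)
result = delayed_fused.compute()
print(result)
```

Passing the output key explicitly is the design choice that matters here: without it, nothing guarantees that the key you later hand to `Delayed` survives optimization.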

|

Do we have a sense for why a workaround is needed here? If possible I

think it would be best to focus efforts there first if people have the

time. That way users less sophisticated than Ryan will also benefit.

…On Mon, May 18, 2020 at 8:43 AM Tom Augspurger ***@***.***> wrote:

To your error, fuse returns a tuple of dsk, dependencies, and the key

passed to Delayed needs to be in the task graph. Try

dsk_fused, deps = fuse(dask.utils.ensure_dict(store_future.dask))

delayed_fused = Delayed(store_future._key, dsk_fused)

delayed_fused.compute()

I'm thinking more about your desired task graph.


|

My 2 cents: I think we should leave fuse off for delayed (in my experience long linear chains of

The issue was the configuration, if you drop setting the width, you get a single fused chain for each branch. |

Rethinking this - most workloads using |
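To see how the fusion width affects a graph shaped like the one discussed here, the following sketch builds four independent read/store branches that feed one final task, then fuses it with the default settings and with `ave_width=4`. The function names and keys are hypothetical, and the resulting task counts depend on the installed Dask version and its configuration, so the sketch prints them rather than assuming particular values.

```python
import dask
from dask.optimization import fuse


def read_chunk(i):
    # Hypothetical stand-in for reading chunk i.
    return [i, i]


def store_chunk(data):
    # Hypothetical stand-in for storing a chunk; returns a checksum.
    return sum(data)


def collect(results):
    # Final task that depends on every store task.
    return sum(results)


n_branches = 4
dsk = {}
for i in range(n_branches):
    dsk[f"read-{i}"] = (read_chunk, i)
    dsk[f"store-{i}"] = (store_chunk, f"read-{i}")
dsk["done"] = (collect, [f"store-{i}" for i in range(n_branches)])

# Default width: only linear (vertical) chains are candidates for fusion.
fused_default, _ = fuse(dsk, keys=["done"])

# A larger average width also lets fuse merge across branches (horizontally).
fused_wide, _ = fuse(dsk, keys=["done"], ave_width=4)

# Compare graph sizes; aliases created by key renaming are counted too.
print(len(dsk), len(fused_default), len(fused_wide))

# Every variant must compute the same answer with the synchronous scheduler.
expected = dask.get(dsk, "done")
got_default = dask.get(fused_default, "done")
got_wide = dask.get(fused_wide, "done")
```

Checking that all three graphs give the same result is a cheap way to confirm that an optimization changed only the graph structure, not its meaning.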

|

@jcrist to make sure I understand: you'll explicitly call fuse in da.store before creating the Delayed that's returned? If so, then +1. |

|

Rather than call fuse perhaps we want to call `dask.optimize` so that all

array optimizations are called? I think that we do this in other

situations where we cross collection type boundaries.

…On Mon, May 18, 2020 at 8:59 AM Tom Augspurger ***@***.***> wrote:

@jcrist <https://github.com/jcrist> to make sure I understand: you'll

explicitly call fuse in da.store before creating the Delayed that's

returned? If so, then +1.
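As a rough sketch of the `dask.optimize` suggestion above: the collection-level optimizations can be applied to an array first, and only then is the array converted to `Delayed` objects. This assumes `dask.array` and NumPy are installed; the small `ones` array is only a placeholder for the real zarr-backed data, and this is not the change that was made inside `da.store`.

```python
import dask
import dask.array as da

# Placeholder array: four 2x2 chunks, each with one elementwise operation.
x = da.ones((4, 4), chunks=(2, 2)) + 1

# dask.optimize returns a tuple of optimized collections, one per input.
(x_opt,) = dask.optimize(x)

# Cross the collection boundary only after optimizing: one Delayed per chunk.
blocks = x_opt.to_delayed().ravel()

# Compute all chunks together and reduce them by hand.
parts = dask.compute(*blocks)
total = float(sum(part.sum() for part in parts))
print(total)
```

Optimizing while the object is still an array keeps the array-specific passes available, which a plain `Delayed` would not get by default.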


|

|

There are two fixes here:

|

I am trying to optimize a simple read / store pipeline by fusing the read and write tasks into a single operation.

Here is a concrete example:

I would like to fuse the from-zarr and store tasks into one task.

However, I can't apply any optimizations to my graph, because of this error:

raises

(Something similar was seen in radix-ai/graphchain#36.)

As a workaround, I tried to do the optimization on the low level graph:

However, I don't know how to go from this back to a delayed object that I can compute. The documentation on optimization does not really explain how to go back and forth between Dask's various datatypes and this low-level graph.

I feel I am missing some important concept here, but I can't figure out what.

Dask version 2.10.1.
