Replies: 18 comments 33 replies

-

I honestly was not on board with this at all until this line:

In practice, I see so many people screw themselves up trying to split up projects for the wrong reasons. They quickly blow up the great unifying ability of dbt to create a single source of truth, and lose the most positive effect of dbt's current limitations in this area, which is forcing you to collaborate across functions, create unified definitions, and break down silos (it's a feature 🦋 not a 🐛!). The easier we make this, the more likely analytics teams are to end up with two different orders models for two different teams and find themselves hating life.

That said! -- housing these projects in a unified repo would help mitigate a lot of the worst effects of separation while gaining the benefits of increased modularity. This actually is the idea of the modern monorepo, and what you're trying to break down is the monolith -- so I would say your alternative title should be 'Down with monolith, up with the monorepo'.

-

@jtcohen6, this makes a lot of sense and fits nicely into the whole #DataMesh concept that is emerging. If you want multiple domain teams to each be responsible for a part of the code, those individual code bases should still work together nicely and know of each other's existence and dependencies. By doing this, dbt Labs could also start selling dbt as a tool that fits nicely into the 'Data Mesh hype train', which will for sure attract new customers! :-)

-

More thoughts on model versioning, after a great conversation this afternoon with some interested folks. There are two options here:

-

I am extremely interested in this topic. I think there are very few topics being discussed right now that are more impactful for the long-term experience of dbt project development. We have always wanted to provide the modularity of software development when it comes to building knowledge graphs, but dbt in its current form does not yet provide this. Being able to take large chunks of functionality and call them

There are a million other things that are important in this as well (e.g. how does all of this get represented in a visual DAG? I don't believe the correct answer is always just "explode everything and show all of the detail!!"), but I think the above two things are the biggest items, and other stuff must fall out of the approaches we take there.

-

@jtcohen6, what if we treat cross-project objects just like a source? My colleague's model is a source. (I think, at least, separate project/repo by team.)

For all development purposes they act like a source: you can't (shouldn't) schedule them, and you don't need to rebuild them for your development or PR checks. Only when visualizing the lineage or building the dbt docs would dbt need to extract metadata from the other project and get the mapping between database object name and model name to show the upstream lineage. The core of dbt could stay the same.

It makes sense to use the database object name as the interface between teams rather than the dbt model name, because most (95%+) of the downstream users are analysts using various reporting tools. Also, if teams are using alias in models, then you don't have to conform to individual model names. Whereas, if you use the model name for references between dbt projects, then the model name will also be tied to dependencies along with the database object name.

The main advantage for customers with existing data transformations who are gradually switching to dbt is: as other teams migrate their code to dbt, your lineage would automatically grow. You don't have to update your code to switch from source() to ref(). If your database has a mix of tables built using dbt (from source data already replicated into your database server) and other external integrations, then other teams using your tables don't need to know how individual tables are built beforehand. Lineage or dbt docs can highlight such tables differently depending on whether they exist in a dbt project or not.

Regarding getting the downstream dependencies between projects: like you said, there should be a purpose-built "one big docs" job that combines metadata from all projects. It is safe to assume that this job will have access to metadata from all projects in an account.

Versioning has always been tricky with data analytics because you have to continually refresh data in old and new versions. As long as the grain of a table stays the same, it is efficient to manage it within a single model (by adding a new column for a change in logic or, if you have to drop a column, waiting for all downstream users to remove their dependencies). So I think versioning would be a separate topic on its own.
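A minimal sketch of the docs-time lookup described in this comment, assuming a combined docs job can collect, for each project, a mapping from database object name to model name. The names SourceTable and annotate_lineage, and the shape of the metadata, are hypothetical and are not part of dbt.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class SourceTable:
    """A table declared as a source in the downstream project."""
    name: str
    relation: str  # fully qualified database object name


def annotate_lineage(
    sources: List[SourceTable],
    project_relations: Dict[str, Dict[str, str]],
) -> Dict[str, Optional[str]]:
    """Map each source to the upstream dbt model that builds it, if any.

    project_relations is hypothetical metadata gathered by a combined
    docs job: {project_name: {relation_name: model_name}}.
    Sources that no known project builds map to None (external tables).
    """
    # Invert the per-project metadata into one relation -> "project.model" index.
    index: Dict[str, str] = {}
    for project, relations in project_relations.items():
        for relation, model in relations.items():
            index[relation.lower()] = f"{project}.{model}"

    return {s.name: index.get(s.relation.lower()) for s in sources}


sources = [
    SourceTable("orders", "analytics.finance.orders"),
    SourceTable("raw_events", "raw.segment.events"),
]
# Only the finance team has migrated to dbt so far.
metadata = {"finance": {"analytics.finance.orders": "fct_orders"}}
lineage = annotate_lineage(sources, metadata)
```

Here the downstream project never changes its source() declarations. As more teams publish metadata, more entries in lineage stop being None, which is the "lineage grows automatically" behaviour the comment argues for.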

-

I'm happy to own the blueprints and mechanical discovery of how exactly this can be a reality in dbt. Going to draft my ideas and work internally at dbt Labs before sharing complete thoughts in this discussion 🧃

-

Okay Community! This is going to be a juicy two-part post. The first part is philosophical, and the second has code snippets to crystallize the philosophy with something tangible to discuss!

Goals:

Note: Portions of this are thematically consistent with @jtcohen6's original post. I do this to prevent you scrolling up and down to understand the full story :)

Part 1: Old Problems with New Paradigms

The Problems

Having a monorepo is overwhelming to newcomers and maintainers of a dbt project.

Using a multirepo approach is less overwhelming when reasoning about files, but brittle when building lineage and confident dependencies across repos.

Both approaches in their current state hit a ceiling and require tech lead heroes to roll up their sleeves and offer themselves as PR babysitter tributes.

New Paradigms

Data Contracts are the API analogy for data

Working with data is very different from APIs

Values of this system

Use Cases/ Who is this for?

-

In the 'exposing a model' interface, you should be able to specify who (e.g. a Google group) you want to expose your model to. Ideally, we would also automatically grant that group permission to access the table.

-

I'm not sure if you should allow an 'outsider' to trigger an upstream model to build

-

Thanks for laying this out @sungchun12! It definitely helps to visualize it. I concede that others may be more “in the thick of it” than I ever have been, but here are my thoughts:

Setup

Upgrading contracts

Invoking upstream contract models

Limiting the use of models

There’s no questions to answer here, but this section has satisfied the use case of the downstream contracts thought above. I wonder if you could also just reference a folder path here, as I’m imagining a scenario where you want to share most models except a few. Maybe something like:

from models:
  # Model folder level
  +shared: true
  +except:
    - ref('my_model')
  # Sub-folder level
  marts:
    +shared: true

or sharing from the dbt_project.yml level after defining contracts (which might be a messy idea):

from models:
  marts:
    +share_with: [contract('finance-only'), contract('marketing-only')]

@_@ big brain thinking with too smol brain
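To make the folder-level +shared / +except idea concrete, here is a rough sketch of how such a config might be evaluated. It assumes the config has been loaded into a nested dict, that +except holds plain model names rather than ref() calls, and that the most specific folder setting wins. None of this is existing dbt behaviour, and is_shared is a hypothetical helper.

```python
from typing import Any, Dict, List


def is_shared(model_path: List[str], model_name: str, config: Dict[str, Any]) -> bool:
    """Decide whether a model is shared, walking from the root folder down.

    The most specific '+shared' setting wins. A model listed in any
    '+except' met along the way is never shared.
    """
    shared = False
    node: Any = config
    # None stands for the root level; the rest are sub-folder names.
    for folder in [None] + list(model_path):
        if folder is not None:
            node = node.get(folder)
            if not isinstance(node, dict):
                break  # no config for this sub-folder: inherit what we have
        if "+shared" in node:
            shared = bool(node["+shared"])
        if model_name in node.get("+except", []):
            return False
    return shared


# Hypothetical config, mirroring the YAML idea in the comment.
models_config = {
    "+shared": True,
    "+except": ["my_model"],
    "marts": {"+shared": True},
    "staging": {"+shared": False},
}
```

With this config, everything is shared by default, my_model is excluded wherever it lives, and the staging folder opts out as a whole.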

-

Quick and Dirty Thoughts

-

Hey Everyone! Please follow progress here: https://github.com/orgs/dbt-labs/projects/24

-

In conversation with dbt users:

Background:

Downside:

Considerations:

-

Hey @jtcohen6, I agree with your assessment of the problem you are trying to solve.

Context and problem

To add more context, I work in an organisation where we have thousands of nodes in a single dbt DAG. This is a new and natural phenomenon because of how dbt enables data analysts and analytics engineers to easily contribute models to the dbt project DAG. #power-to-the-people. And yes, at some point, those DAGs get large and unwieldy, resulting in long build times. So the next logical option is to break the big project down into sub-projects, effectively creating a DAG of DAGs. That's where the nightmare begins:

I have summarised these issues and more in my blog post.

Clarifying question

I like your proposed solution of having cross-project dependencies using refs:

This implies that there would need to be two dbt run modes in the future:

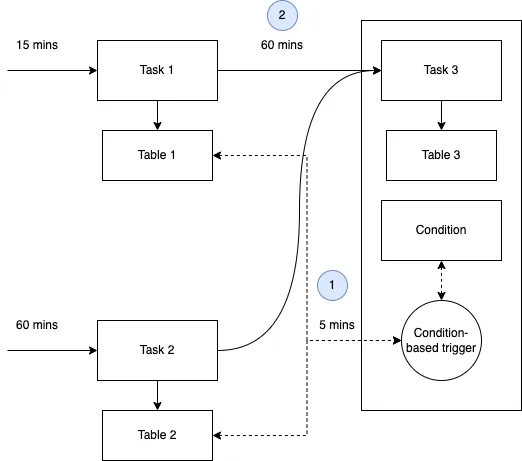

dbt run would be executed at the root of some kind of folder that sits outside of a dbt project folder. Are you thinking along the same lines too?

My proposed solution

An alternative and adjacent approach I would like to suggest is to de-couple the DAG and have each node of the DAG execute independently based on a freshness or condition-based trigger. I have written a blog to propose this approach here. The key highlights are:

Create an event-loop that checks every 5 minutes to see if the run conditions are

This is similar to the approach of a distribution centre. Packages arrive from multiple sources, however each package is not shipped to a customer immediately. Instead, the distribution centre waits for more packages to arrive before sending a batch of packages at once to maximise delivery. In our context, each node is its own distribution centre, and checks for upstream dependencies before triggering itself.

Benefits:

Prevents joins on stale data

Loose coupling and independent sync frequency

This means that each node has its own execution frequency, which allows each node to be executed independently from other nodes at varying intervals. This is in stark contrast to the mono-dag where the entire DAG is triggered on one schedule (e.g. 00:00 daily).

Faster build and test times

Uses critical path

I am open to anyone's thoughts and critique of this approach.
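A minimal sketch of the condition-based trigger described in this comment, under one assumed run condition: a node runs only once every upstream node has completed a run more recent than the node's own last run. Node, ready_to_run, and tick are hypothetical names, and a real scheduler would call tick on an interval (for example every 5 minutes) and build the model instead of only recording a timestamp.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Dict, List, Optional


@dataclass
class Node:
    name: str
    upstream: List[str] = field(default_factory=list)
    last_run: Optional[datetime] = None


def ready_to_run(node: Node, nodes: Dict[str, Node]) -> bool:
    """True when every upstream node is fresher than this node's last run."""
    if not node.upstream:
        return False  # assumption: root nodes are refreshed by an external loader
    for name in node.upstream:
        parent = nodes[name]
        if parent.last_run is None:
            return False  # upstream has never been built
        if node.last_run is not None and parent.last_run <= node.last_run:
            return False  # upstream has nothing new since our last run
    return True


def tick(nodes: Dict[str, Node], now: datetime) -> List[str]:
    """One pass of the event loop: trigger every node whose condition holds."""
    triggered = []
    for node in nodes.values():
        if ready_to_run(node, nodes):
            node.last_run = now  # stand-in for actually building the model
            triggered.append(node.name)
    return triggered


t0 = datetime(2023, 1, 1, 0, 0)
nodes = {
    "orders": Node("orders", last_run=t0),
    "customers": Node("customers"),  # not loaded yet
    "orders_by_customer": Node("orders_by_customer", upstream=["orders", "customers"]),
}

first = tick(nodes, t0 + timedelta(minutes=5))    # customers missing: nothing runs
nodes["customers"].last_run = t0 + timedelta(minutes=6)
second = tick(nodes, t0 + timedelta(minutes=10))  # both parents fresh: node runs
third = tick(nodes, t0 + timedelta(minutes=15))   # no new upstream data: nothing runs
```

Waiting for all parents before running is what avoids joining fresh data against stale data. Each node's cadence then follows from its own upstream arrivals rather than from one global schedule.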

-

Hey Folks! I’m here to wrap up this month-long R&D effort for dbt Contracts. There’s been a lot of work tracked across slack, github, demo videos, draft PRs that deserve to be consolidated and digestible for you all. Here it goes!

TLDR: This work was so rewarding because in the midst of navigating this giant ocean of a problem, the community rose to the occasion to provide constructive feedback, and solidified this problem is worth solving for large data teams. Together, we parsed what building block efforts are needed to make dbt contracts work cohesively. These are efforts that are a win in the short term AND re-usable for contracts work in the long term. Although this research phase is over (at least Sung leading it), the work continues with statically typed SQL coming to dbt (think: enforce data types on columns). Read below to see more :)

Goals:

Relevant Videos: https://loom.com/share/folder/30c6ce127a6143e0b28a6720ffe1ca9b

Github Project: https://github.com/orgs/dbt-labs/projects/24/views/1

Relevant Docs:

Relevant Slack Threads:

Draft PRs as Research:

ref works across two projects: #5966

ref permissions within a monorepo: #6007

Exposures as dbt Contracts configs: #5944

Building Block Efforts: These are necessary components to make dbt Contracts function as a whole, integrated experience. Instead of boiling the ocean at once, these should be built and then reused for dbt Contracts when the time comes!

Next Steps:

-

Hi team, want to pass on feedback I'm hearing from customers (incl. an organization that could have 100+ developers contributing and where they need to share code across teams while maintaining control on who can modify that code, when and where.)

-

Hi all, hope I'm not late to the party but wanted to bring up some suggestions for the contract feature: In the context of data privacy / retention, I think it'd be very useful if the contracts allowed for different column / row level permissions, depending on the consumer. That way we could very easily ensure that people within the company don't have access to data they don't need. Is this something you're looking at? Thanks in advance!

-

Hi @jtcohen6 and dbt team,

-

Newer discussion: #6725

Projects should be smaller.

Cross-project lineage should just work.

Alt title: "Down with the monorepo."

Context:

This isn't something we can make immediate progress on—but it's something I've been thinking a lot about, and I want to share that thinking to hear more folks' thoughts. Hence a discussion for now, with issues to follow when we're ready.

Strong premises, loosely stated

dbt run and see 1 of 5000 START

ref.

What is it?

There's a lot more to say here, and many implementation details I'm still trying to figure out, but I think it comes down to:

.yml) files for the same-named model. That property disambiguation is work we should still pursue, and we should also think about namespacing support for two models with the same names. Those aren't blockers for this work in particular, though, so they're outside scope for this proposal.

ref() for models from other projects. dbt-core skips resolving those refs at parse time, and resolves them as the first step of execution instead.

dbt deps to access their source code. dbt-core gets access to a limited amount of metadata, which is more 1:1 mapping table than it is big bad manifest.json. This metadata could be extracted from those other projects, and provided as some sort of artifact.

What might it look like?

In my project code:

There's no package named another_package in my packages.yml, and there's no file dim_users in the dbt_packages directory. That's ok; dbt observes this at parse time, and leaves itself a reminder for later.

At runtime, dbt gets access to a "mapping" artifact, akin to:

{
  "upstream_project": {
    "model.upstream_project.dim_users": "analytics.acme.dim_users",
    "model.upstream_project.fct_activity": "analytics.acme.fct_activity",
  },
  "upstream_project_two": {
    "seed.upstream_project_two.country_codes": "seed_db.seed_schema.iso2_country_codes",
    ...
  },
  ...
}

And dbt compiles accordingly:

Considerations

CODEOWNERS), slim CI even slimmer.

dbt-utils, respectively). Within an org, private packages will continue to serve the purpose of configuring.

ref'ing a model, dbt will tell you where it lives in the database. That database location could include a version specifier in its schema/alias. As these cross-team and cross-project relationships mature, "public" models will naturally constitute a contracted API, and it will be important to retain the ability to make breaking and backwards-compatible changes. As the maintainer of downstream project B, I could continue referencing a versioned deployment of upstream project A, and then migrate to the new version at my own readiness (within some acceptable window).
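As a rough illustration of the deferred resolution proposed under "What might it look like?", the sketch below looks up a cross-project ref in a mapping artifact shaped like the example there (project name, then unique ID, then database location). resolve_ref and UnresolvedRef are hypothetical names, not dbt-core functions, and trying the model, seed, and snapshot prefixes in turn is an assumption about how unique IDs would be matched.

```python
from typing import Dict

# {project_name: {unique_id: database_location}}, as in the proposal's example.
Mapping = Dict[str, Dict[str, str]]


class UnresolvedRef(Exception):
    """Raised when a cross-project ref is missing from the mapping artifact."""


def resolve_ref(mapping: Mapping, project: str, name: str) -> str:
    """Return the database location for ref(project, name).

    Called at the start of execution, not at parse time, so the
    upstream project's source code is never needed.
    """
    relations = mapping.get(project, {})
    for resource_type in ("model", "seed", "snapshot"):
        relation = relations.get(f"{resource_type}.{project}.{name}")
        if relation is not None:
            return relation
    raise UnresolvedRef(f"{project}.{name} is not in the mapping artifact")


mapping = {
    "upstream_project": {
        "model.upstream_project.dim_users": "analytics.acme.dim_users",
        "model.upstream_project.fct_activity": "analytics.acme.fct_activity",
    },
    "upstream_project_two": {
        "seed.upstream_project_two.country_codes": "seed_db.seed_schema.iso2_country_codes",
    },
}

compiled = f"select * from {resolve_ref(mapping, 'upstream_project', 'dim_users')}"
```

Because the downstream project only ever sees the resolved location, an upstream team could publish a versioned schema or alias in the artifact and downstream projects would pick it up without code changes.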
