
Add tutorials to walk through key use cases.


Showing 7 changed files with 614 additions and 0 deletions.


118 changes: 118 additions & 0 deletions


docs/core/diagnostics/app_is_leaking_memory_eventual_crash.md

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,118 @@ | ||

| --- | ||

| title: Debugging a memory leak - .NET Core | ||

| description: A tutorial walk-through, debugging a memory leak in .NET Core. | ||

| author: sdmaclea | ||

| ms.author: stmaclea | ||

| ms.date: 08/27/2019 | ||

| --- | ||

| # Debugging a memory leak | ||

|

|

||

| In this scenario, the endpoint will slowly start leaking memory and eventually will result in an out of memory exception. To diagnose this scenario, we need several key pieces of diagnostics data. | ||

|

|

||

| ## Memory counters | ||

|

|

||

| Before we dig into collecting diagnostics data to help us root cause this scenario, we need to convince ourselves that what we are actually seeing is a memory leak (memory growth). We can use the [dotnet-counters](dotnet-counters.md) tool to get at this information. | ||

|

|

||

| Let's run the [Sample debug target](sample-debug-target.md). | ||

|

|

||

| ```bash | ||

| dotnet run | ||

| ``` | ||

|

|

||

| Then find the process ID using: | ||

|

|

||

| ```bash | ||

| dotnet-trace list-processes | ||

| ``` | ||

|

|

||

| Before causing the leak, let's check our managed memory counters: | ||

|

|

||

| ```bash | ||

| dotnet-counters monitor --refresh-interval 1 -p 4807 | ||

| ``` | ||

|

|

||

| 4807 is the process ID that was found using `dotnet-trace list-processes`. The refresh-interval is the number of seconds between refreshes. | ||

|

|

||

| The output should be similar to: | ||

|

|

||

| ``` | ||

| Press p to pause, r to resume, q to quit. | ||

| System.Runtime: | ||

| CPU Usage (%) 4 | ||

| Working Set (MB) 66 | ||

| GC Heap Size (MB) 4 | ||

| Gen 0 GC / second 0 | ||

| Gen 1 GC / second 0 | ||

| Gen 2 GC / second 0 | ||

| Number of Exceptions / sec 0 | ||

| ``` | ||

|

|

||

| Here we can see that right after startup, the managed heap memory is 4 MB. | ||

|

|

||

| Now, let's hit the URL (http://localhost:5000/api/diagscenario/memleak/200000) | ||

|

|

||

| Rerun the dotnet-counters command. We should see an increase in memory usage as shown below: | ||

|

|

||

| ``` | ||

| Press p to pause, r to resume, q to quit. | ||

| System.Runtime: | ||

| CPU Usage (%) 4 | ||

| Working Set (MB) 353 | ||

| GC Heap Size (MB) 258 | ||

| Gen 0 GC / second 0 | ||

| Gen 1 GC / second 0 | ||

| Gen 2 GC / second 0 | ||

| Number of Exceptions / sec 0 | ||

| ``` | ||

|

|

||

| Memory has now grown to 258 MB. | ||

|

|

||

| We can safely say that memory is growing or leaking. The next step is to collect the right data for memory analysis. | ||
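
The before/after comparison above can also be scripted. The following is a minimal sketch, assuming the counter output was copied from the terminal into two text files in the same layout shown above, and that the values are whole numbers as displayed. The helper names `gc_heap_mb` and `heap_growth_mb` are hypothetical and are not part of `dotnet-counters`.

```bash
# Print the "GC Heap Size (MB)" value from dotnet-counters text on stdin.
# Assumes the value is the last whitespace-separated field on that line.
# If the text holds several refreshes, the most recent value wins.
gc_heap_mb() {
    awk '/GC Heap Size \(MB\)/ { value = $NF } END { if (value != "") print value }'
}

# Print the heap growth in MB between two saved snapshots.
# $1 = snapshot taken before the request, $2 = snapshot taken after it.
# Runs in a subshell so the temporary variables do not leak.
heap_growth_mb() (
    before=$(gc_heap_mb < "$1")
    after=$(gc_heap_mb < "$2")
    echo $((after - before))
)
```

With the two listings above saved as `before.txt` and `after.txt` (hypothetical file names), `heap_growth_mb before.txt after.txt` prints `254`.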

|

|

||

| ### Core dump generation | ||

|

|

||

| When analyzing possible memory leaks, we need access to the app's memory heap. We then analyze the memory contents. Looking at relationships between objects, we create theories on why memory isn't being freed. A common diagnostics data source is a memory dump (Windows) or the equivalent core dump (Linux). To generate a core dump of a .NET Core application, we can use the [dotnet-dump](dotnet-dump.md) tool. | ||

|

|

||

| Using the previous [Sample debug target](sample-debug-target.md) started above, run the following command to generate a core dump: | ||

|

|

||

| ```bash | ||

| sudo ./dotnet-dump collect -p 4807 | ||

| ``` | ||

|

|

||

| 4807 is the process ID that can be found using `dotnet-trace list-processes`. The result is a core dump located in the same folder. | ||
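
When several dumps accumulate in the folder, it helps to pick the most recent one automatically. The following is a small sketch, assuming dumps use the `core_YYYYMMDD_HHMMSS` naming that appears in this tutorial, so the name that sorts last is also the newest. The helper name `latest_core_dump` is hypothetical.

```bash
# Print the newest core dump in a directory (default: current directory).
# Relies on the shell expanding the glob in sorted order, which for
# core_YYYYMMDD_HHMMSS names is also chronological order.
latest_core_dump() {
    dir=${1:-.}
    latest=""
    for f in "$dir"/core_*; do
        # The glob stays unexpanded when nothing matches, so check existence.
        if [ -e "$f" ]; then
            latest=$f
        fi
    done
    if [ -n "$latest" ]; then
        echo "$latest"
    else
        return 1
    fi
}
```

For example, `dotnet-dump analyze "$(latest_core_dump)"` would open the most recent dump in the current folder.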

|

|

||

| > [!NOTE] | ||

| > To generate core dumps, `dotnet-dump` requires sudo. | ||

| ### Analyzing the core dump | ||

|

|

||

| Now that we have a core dump generated, use the [dotnet-dump](dotnet-dump.md) tool to analyze the dump: | ||

|

|

||

| ```bash | ||

| dotnet-dump analyze core_20190430_185145 | ||

| ``` | ||

|

|

||

| Where `core_20190430_185145` is the name of the core dump you want to analyze. | ||

|

|

||

| > [!NOTE] | ||

| > If you see an error complaining that libdl.so cannot be found, you may have to install the libc6-dev package. | ||

| You'll be presented with a prompt where you can enter SOS commands. Commonly, the first thing we want to look at is the overall state of the managed heap: | ||

|

|

||

| ```bash | ||

| dumpheap -stat | ||

| ``` | ||

|

|

||

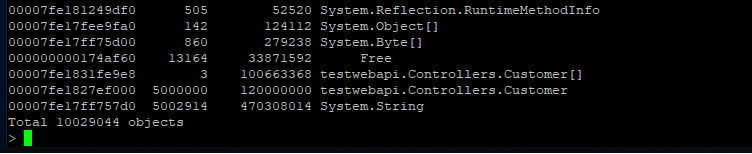

| The (partial) output can be seen below: | ||

|

|

||

|  | ||

|

|

||

| Here we can see that we have quite a few strings lying around (also instances of Customer and Customer[]). We can now use the gcroot command on one of the string instances to see how/why the object is rooted: | ||

|

|

||

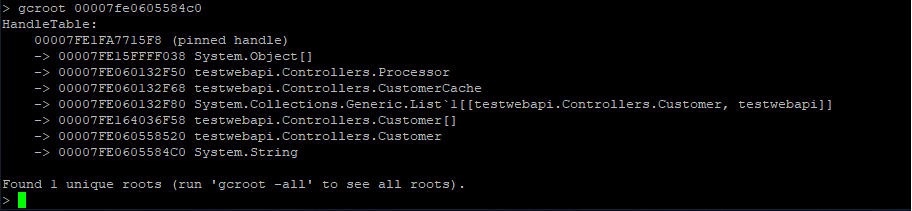

|  | ||

|

|

||

| The string instance appears to be rooted from a top-level Processor object, which in turn references a cache. We can continue dumping out objects to see how much the cache is holding on to: | ||

|

|

||

|  | ||

|

|

||

| From here, we can now try to back-track (from code) why the cache seems to be growing in an unbounded fashion. |

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,114 @@ | ||

| --- | ||

| title: Debugging high CPU usage - .NET Core | ||

| description: A tutorial walk-through, debugging high CPU usage in .NET Core. | ||

| author: sdmaclea | ||

| ms.author: stmaclea | ||

| ms.date: 08/27/2019 | ||

| --- | ||

| # Debugging high CPU usage | ||

|

|

||

| In this scenario, the [sample debug target](sample-debug-target.md) will consume excessive CPU. To diagnose this scenario, we need several key pieces of diagnostics data. | ||

|

|

||

| ## CPU counters | ||

|

|

||

| Before we dig into collecting diagnostics data, we need to convince ourselves that what we are actually seeing is a high CPU condition. | ||

|

|

||

| Let's run the [sample debug target](sample-debug-target.md). | ||

|

|

||

| ```bash | ||

| dotnet run | ||

| ``` | ||

|

|

||

| Then find the process ID using: | ||

|

|

||

| ```bash | ||

| dotnet-trace list-processes | ||

| ``` | ||

|

|

||

| Before hitting the URL that will cause the high CPU condition, let's check our CPU counters using the [dotnet-counters](dotnet-counters.md) tool: | ||

|

|

||

| ```bash | ||

| dotnet-counters monitor --refresh-interval 1 -p 22884 | ||

| ``` | ||

|

|

||

| 22884 is the process ID that was found using `dotnet-trace list-processes`. The refresh-interval is the number of seconds between refreshes. | ||

|

|

||

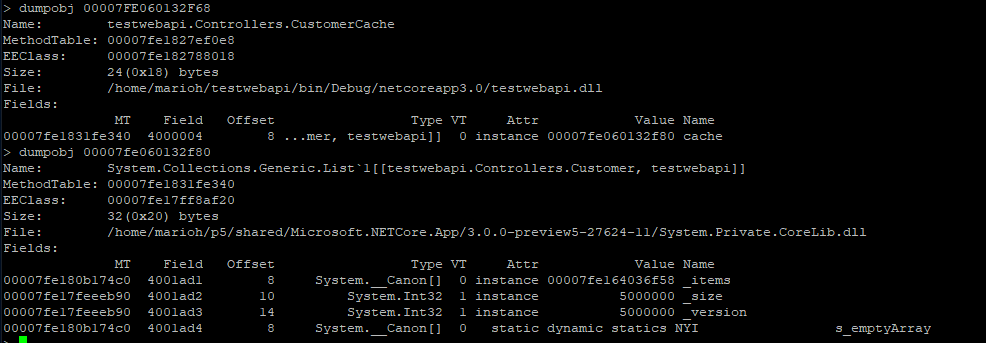

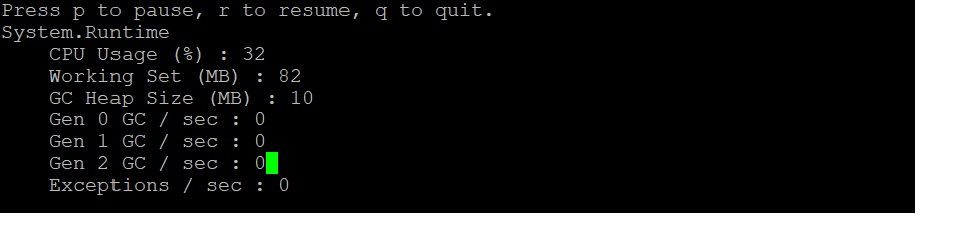

| The output should be similar to the below: | ||

|

|

||

|  | ||

|

|

||

| Here we can see that right after startup, the CPU isn't being consumed at all (0%). | ||

|

|

||

| Now, let's hit the URL (http://localhost:5000/api/diagscenario/highcpu/60000) | ||

|

|

||

| Rerun the [dotnet-counters](dotnet-counters.md) command. We should see an increase in CPU usage as shown below: | ||

|

|

||

|  | ||

|

|

||

| Throughout the execution of that request, CPU hovers at around 30%. | ||

|

|

||

| ```bash | ||

| dotnet-counters monitor System.Runtime[cpu-usage] -p 22884 --refresh-interval 1 | ||

| ``` | ||

|

|

||

| At this point, we can safely say the CPU is running hotter than we expect. | ||
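
Judging "hotter than we expect" by eye works for a single request, but averaging several refreshes gives a steadier number. The following is a rough sketch, assuming the counter text was captured from the terminal and that each `CPU Usage (%)` line ends with its value. The helper names `average_cpu_usage` and `cpu_is_hot` are hypothetical, and the threshold is whatever you consider abnormal for your app.

```bash
# Average every "CPU Usage (%)" sample found in dotnet-counters text on stdin.
# Prints one decimal place, or nothing when no sample is present.
average_cpu_usage() {
    awk '/CPU Usage \(%\)/ { sum += $NF; n++ }
         END { if (n > 0) printf "%.1f\n", sum / n }'
}

# Succeed when the average of the samples on stdin is at or above
# the threshold percentage given as $1.
cpu_is_hot() {
    threshold=$1
    avg=$(average_cpu_usage)
    [ -n "$avg" ] && awk -v a="$avg" -v t="$threshold" 'BEGIN { exit !(a >= t) }'
}
```

For example, `cpu_is_hot 25 < samples.txt && echo "high CPU"` flags a capture whose average is at least 25%, where `samples.txt` is a hypothetical file holding the captured counter text.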

|

|

||

| ## Trace generation | ||

|

|

||

| When analyzing a slow request, we need a diagnostics tool that can give us insight into what our code is doing. The usual choice is a profiler. There are a few different profiler options. | ||

|

|

||

| ### Profile with `dotnet-trace` then view with Windows `PerfView` | ||

|

|

||

| We can use the [dotnet-trace](dotnet-trace.md) tool. Using the previous [sample debug target](sample-debug-target.md), hit the URL (http://localhost:5000/api/diagscenario/highcpu/60000) again and while it's running within the 1-minute request, run: | ||

|

|

||

| ```bash | ||

| dotnet-trace collect -p 22884 --providers Microsoft-DotNETCore-SampleProfiler | ||

| ``` | ||

|

|

||

| 22884 is the process ID that was found using `dotnet-trace list-processes`. | ||

|

|

||

| Let [dotnet-trace](dotnet-trace.md) run for about 20-30 seconds and then hit enter to exit the collection. The result is a `nettrace` file located in the same folder. `nettrace` files are a great way to use existing analysis tools on Windows. | ||

|

|

||

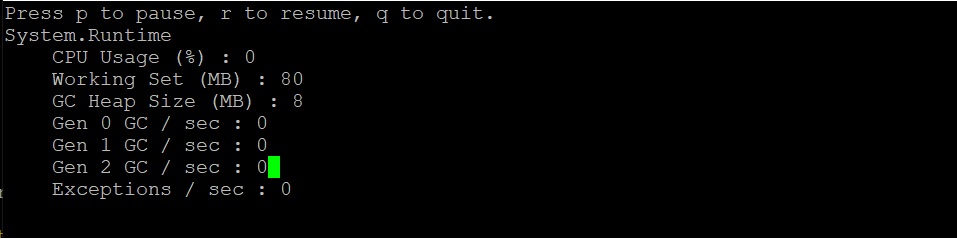

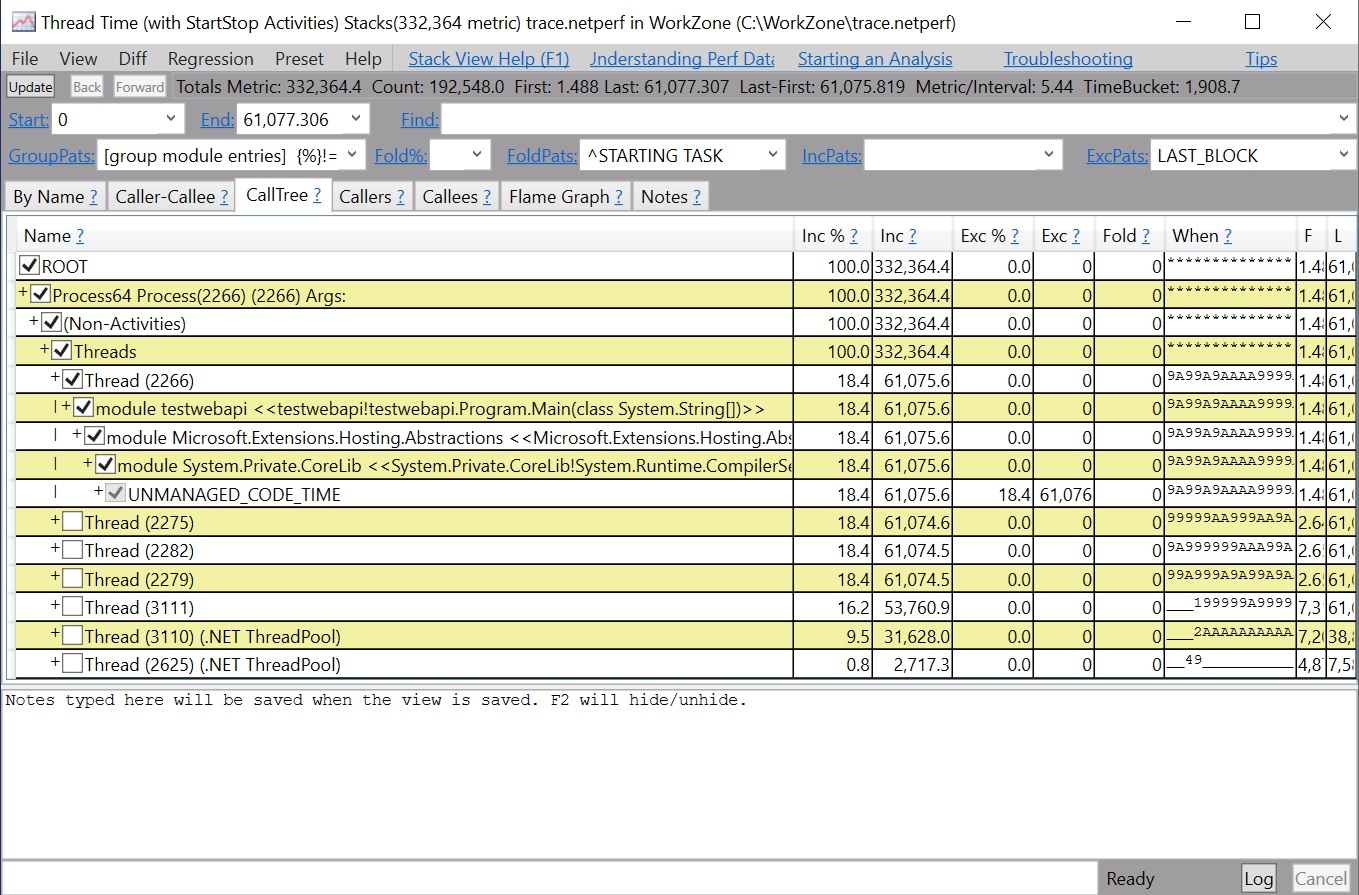

| Open the `nettrace` with `PerfView` as shown below. | ||

|

|

||

|  | ||

|

|

||

| ### Profile and view with Linux `perf` | ||

|

|

||

| Here, we'll demonstrate the Linux `perf` tool to generate .NET Core app profiles. | ||

|

|

||

| Exit the previous instance of the [sample debug target](sample-debug-target.md). | ||

|

|

||

| Set the `COMPlus_PerfMapEnabled` environment variable to cause the .NET Core app to create a `map` file in the `/tmp` directory. This `map` file is used by `perf` to map CPU addresses to JIT-generated functions by name. | ||

|

|

||

| Run the [sample debug target](sample-debug-target.md) in the same terminal session. | ||

|

|

||

| ```bash | ||

| export COMPlus_PerfMapEnabled=1 | ||

| dotnet run | ||

| ``` | ||
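
Before recording, it can be worth confirming that the `map` file was actually written, since without it `perf` cannot resolve JIT-generated frames to names. The following is a small sketch, assuming the usual `perf` convention of naming the file `perf-<PID>.map` under `/tmp`. The helper names `perf_map_path` and `has_perf_map` are hypothetical.

```bash
# Print the map file path that perf looks up for a given process ID.
# $1 = process ID, $2 = optional directory (defaults to /tmp).
perf_map_path() {
    echo "${2:-/tmp}/perf-$1.map"
}

# Succeed when the map file for the process exists and is non-empty.
has_perf_map() {
    [ -s "$(perf_map_path "$1" "$2")" ]
}
```

For example, with 2266 as the process ID of the restarted app, `has_perf_map 2266 || echo "no perf map yet"` reports a missing file before you start `perf record`.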

|

|

||

| Hit the URL (http://localhost:5000/api/diagscenario/highcpu/60000) again and while it's running within the 1-minute request, run: | ||

|

|

||

| ```bash | ||

| sudo perf record -p 2266 -g | ||

| ``` | ||

|

|

||

| This command will start the perf collection process. Let it run for about 20-30 seconds and then hit CTRL-C to exit the collection process. | ||

|

|

||

| You can use the same perf command to see the output of the trace. | ||

|

|

||

| ```bash | ||

| sudo perf report -f | ||

| ``` | ||

|

|

||

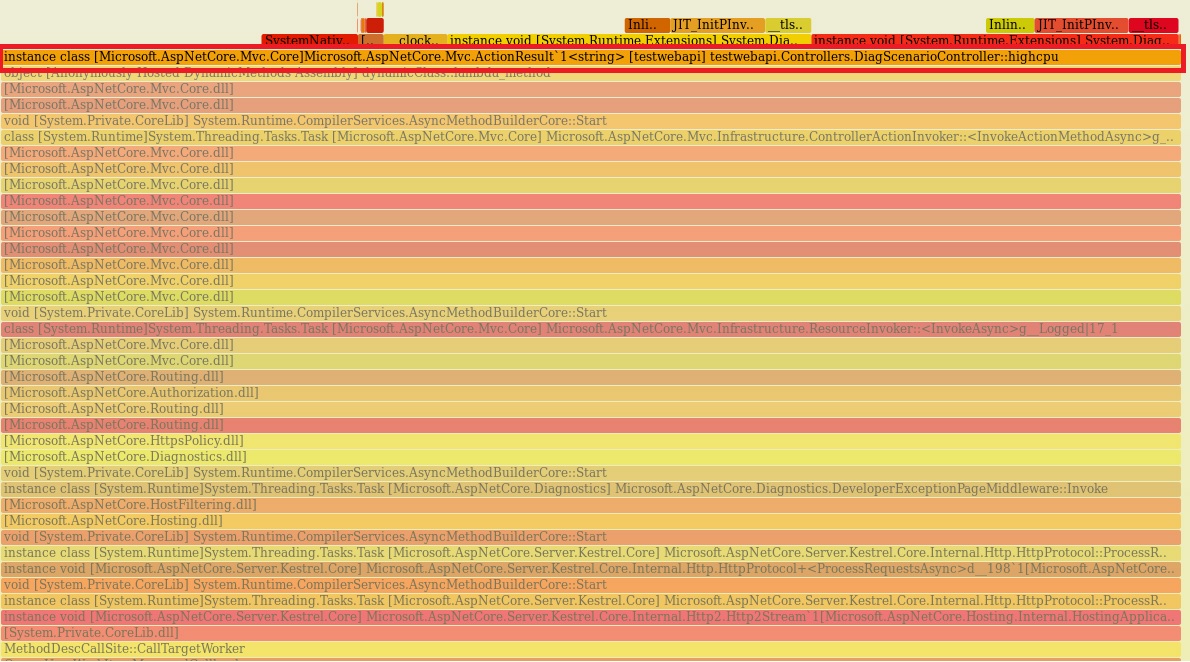

| You can also generate a flame-graph by using the following commands: | ||

|

|

||

| ```bash | ||

| git clone --depth=1 https://github.com/BrendanGregg/FlameGraph | ||

| sudo perf script | FlameGraph/stackcollapse-perf.pl | FlameGraph/flamegraph.pl > flamegraph.svg | ||

| ``` | ||

|

|

||

| This command will generate a `flamegraph.svg` that you can view in the browser to investigate the performance problem: | ||

|

|

||

|  |
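
The folded stacks that feed the flame graph can also be summarized as text, which is handy on a machine without a browser. The following is a minimal sketch, assuming the folded format emitted by `stackcollapse-perf.pl`: one line per unique stack, with frames separated by semicolons and a trailing sample count. The helper name `top_leaf_frames` is hypothetical.

```bash
# Sum sample counts per leaf (innermost) frame from folded stacks on stdin,
# then print the N hottest frames as "count frame" (default N = 10).
top_leaf_frames() {
    awk '{
        count = $NF                      # last field is the sample count
        stack = $0
        sub(/ [0-9]+$/, "", stack)       # drop the trailing count
        n = split(stack, frames, ";")    # frames are separated by semicolons
        totals[frames[n]] += count       # credit the innermost frame
    }
    END { for (f in totals) print totals[f], f }' |
        sort -k1,1nr |
        head -n "${1:-10}"
}
```

For example, `sudo perf script | FlameGraph/stackcollapse-perf.pl | top_leaf_frames 5` lists the five functions where the most samples landed.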

| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,48 @@ | ||

| --- | ||

| title: Diagnostics walk-throughs - .NET Core | ||

| description: | ||

| author: sdmaclea | ||

| ms.author: stmaclea | ||

| ms.date: 08/27/2019 | ||

| ms.topic: overview | ||

| #Customer intent: As a .NET Core developer I want to find the best tutorial to help me debug my scenario. | ||

| --- | ||

| # .NET Core diagnostics walk-throughs | ||

|

|

||

| .NET Core has a wide range of diagnostics capabilities. To learn more about production diagnostics in .NET Core 3, we'll run through a set of diagnostics scenarios using the `dotnet` tools. | ||

|

|

||

| > [!NOTE] | ||

| > The walk-throughs were all run on Ubuntu 16.04 and using the .NET Core 3 preview 5 bits. | ||

| > | ||

| > The tools/APIs that are used are based on preview 5 and are subject to change. The tutorial will be updated to account for later previews and the final release. | ||

| > | ||

| > Please note that you have to be using at least preview 5 for most of the capabilities to work. | ||

| ## Production diagnostics | ||

|

|

||

| Before we jump in, let's take a look at production diagnostic methodology. When an outage occurs, the goal is getting services fully restored quickly. This goal often means restarting the app or node(s). | ||

|

|

||

| To improve service reliability, root cause analysis of failures is key. To get to root cause, we need to collect as much diagnostics data as we can **before** restarting. | ||

|

|

||

| The diagnostics data collected can then be analyzed postmortem to determine root cause and possible fixes. | ||

|

|

||

| ## Preparing | ||

|

|

||

| Each of the scenarios: | ||

|

|

||

| - Uses the [Sample debug target](sample-debug-target.md) to trigger the scenario. | ||

| - Uses the [.NET Core dotnet diagnostic global command-line tools](index.md#net-core-dotnet-diagnostic-global-tools). | ||

|

|

||

| ## The scenarios | ||

|

|

||

| ### Debugging a memory leak | ||

|

|

||

| [Debugging a memory leak](app_is_leaking_memory_eventual_crash.md) walks through finding a memory leak. The [dotnet-counters](dotnet-counters.md) tool is used to confirm the leak. Then the [dotnet-dump](dotnet-dump.md) tool is used to diagnose the leak. | ||

|

|

||

| ### Debugging a slow running application | ||

|

|

||

| [Debugging high CPU usage](app_running_slow_highcpu.md) walks through investigating high CPU usage. It uses the [dotnet-counters](dotnet-counters.md) tool to confirm the high CPU usage. It then walks through using [Trace for performance analysis utility (`dotnet-trace`)](dotnet-trace.md) or Linux `perf` to collect and view a CPU usage profile. | ||

|

|

||

| ### Debugging deadlock | ||

|

|

||

| The [debugging deadlock](hung_app.md) tutorial explores using the [dotnet-dump](dotnet-dump.md) tool to investigate threads and locks. |
