Introduction to MMU in ARC Processors

Skip to the next section if you are familiar with how an MMU works at a high level.

A Processor Memory Management Unit or MMU is responsible for enabling virtual to physical address translation, assisted by the OS (to varying degrees).

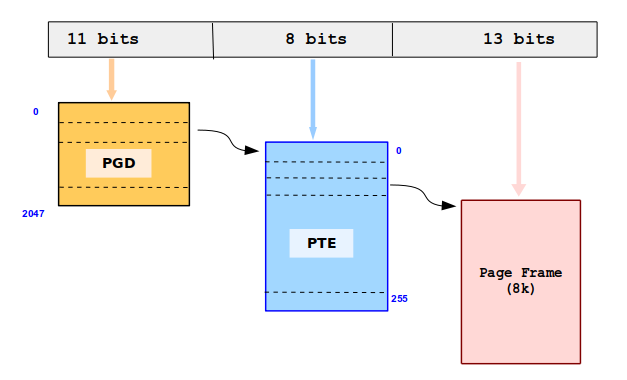

OS-managed Page Tables are data structures that hold the actual address translation information, one entry per MMU page. They are organized as multi-level lookup tables: a top-level table per process (pgd in Linux parlance) has entries that point to the next level, and so on (generic Linux MM code permits up to 5 table levels as of today), terminating in a leaf-level entry (pte in Linux) that points to the physical page. Different bits of the virtual address are used to index into the tables at successive levels. This is referred to as a Page Table Walk. For example, ARC HS4x Linux uses the following arrangement (8K MMU page). Note that the last 13 bits of the virtual address are not used in the page table walk itself; they form the offset of the final address within the page.
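As a rough illustration of how virtual address bits drive the walk, the sketch below splits a 32-bit address for a two-level table with 8K pages. The 13-bit page offset comes from the text above; the 11/8 split between the pgd and pte indices and the helper name `split_vaddr` are assumptions made for illustration.

```python
PAGE_SHIFT = 13                        # 8K page: low 13 bits are the in-page offset
PTE_BITS = 8                           # assumed: bits that index the leaf (pte) table
PGD_BITS = 11                          # assumed: bits that index the top-level (pgd) table
PGDIR_SHIFT = PAGE_SHIFT + PTE_BITS    # first bit position covered by the pgd index


def split_vaddr(vaddr):
    """Return (pgd_index, pte_index, page_offset) for a 32-bit virtual address."""
    vaddr &= 0xFFFFFFFF
    pgd_index = vaddr >> PGDIR_SHIFT
    pte_index = (vaddr >> PAGE_SHIFT) & ((1 << PTE_BITS) - 1)
    page_offset = vaddr & ((1 << PAGE_SHIFT) - 1)
    return pgd_index, pte_index, page_offset
```

Under these assumptions, `split_vaddr(0x00402004)` selects pgd entry 2, pte entry 1 and byte offset 4 within the page.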

Additional per-page information, such as Access Control and Permission Flags (read, write, execute for kernel / user mode), is also stored in the Page Tables, either directly or indirectly (via an auxiliary table).

Page Tables usually reside in relatively slow system memory (DRAM), so each level of the walk can cost hundreds of cycles if the entry is not already in one of the CPU data caches. Given the tendency of programs to exhibit locality of reference (the very reason CPU instruction and data caches are present), the address translation information per page is also typically cached in on-chip high-speed RAMs called the Translation Look-aside Buffer or TLB. A TLB is a fully (or set) associative table, looked up by virtual address, whose entries hold the corresponding physical address, Permission Flags, etc.
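A toy model can make the set-associative organization concrete. The class below is only a sketch: the class name, the default of 64 sets and 4 ways, the FIFO eviction policy and the 8K page size are all assumptions chosen for illustration, not a description of any particular hardware.

```python
PAGE_SHIFT = 13  # assumed 8K pages
PAGE_MASK = (1 << PAGE_SHIFT) - 1


class SetAssocTLB:
    """Toy set-associative TLB: the virtual page number selects a set,
    then every way in that set is compared against the tag."""

    def __init__(self, num_sets=64, ways=4):
        self.num_sets = num_sets
        self.ways = ways
        # Each set holds up to `ways` entries of (vpn, pfn, perms).
        self.sets = [[] for _ in range(num_sets)]

    def lookup(self, vaddr):
        """Return (paddr, perms) on a hit, or None on a miss."""
        vpn = vaddr >> PAGE_SHIFT
        for tag, pfn, perms in self.sets[vpn % self.num_sets]:
            if tag == vpn:
                return (pfn << PAGE_SHIFT) | (vaddr & PAGE_MASK), perms
        return None

    def insert(self, vpn, pfn, perms):
        """Install a translation, evicting the oldest entry if the set is full."""
        entries = self.sets[vpn % self.num_sets]
        entries[:] = [e for e in entries if e[0] != vpn]  # drop a stale mapping
        if len(entries) == self.ways:
            entries.pop(0)  # assumed FIFO replacement
        entries.append((vpn, pfn, perms))
```

A fully associative TLB is the special case `num_sets=1`, where every entry is compared on each lookup.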

In classical CISC MMUs, given a load/store request for a virtual address, hardware initiates the Page-Table walk to try and find the address translation. If found, the translation is used to complete the access and is also cached in the on-chip TLB in anticipation of reuse, given the locality that programs exhibit. If the lookup doesn't succeed, either because the physical page is missing (not yet allocated by the OS) or because intermediate-level tables are missing, the MMU triggers a “Page Fault” Exception, transferring control to the OS. The OS handles the exception, completing the lookup hierarchy and wiring up the final page if needed, before transferring execution control back to hardware, which retires the access. Note that the TLB is a micro-architecture optimization: the system would be functional without it, just slower. The OS is not aware of the actual TLB details and only deals with it through an occasional TLB flush when changing translation entries (hence Hardware Managed TLB).

Typical RISC MMUs are simpler and avoid the complexity of hardware walks. They have the TLB as the primary and ONLY translation entity in hardware. Given a virtual address (memory read/write), the MMU looks up the TLB. If the translation entry doesn’t exist, it triggers a “TLB Miss” Exception, which is semantically similar to the classical Page Fault and handled by the OS similarly, except that installing the TLB entry is mandatory for hardware to make forward progress. This can also be referred to as a “Software Managed TLB” or “Software Page Walked” architecture.
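The division of labour in a software-managed TLB can be sketched as follows. This is a simplified model, not the ARC Linux handler: all names (`mmu_translate`, `tlb_miss_handler`, `load`), the flat dictionary standing in for the multi-level page tables, and the 8K page size are assumptions for illustration.

```python
PAGE_SHIFT = 13  # assumed 8K pages
PAGE_MASK = (1 << PAGE_SHIFT) - 1


class TLBMiss(Exception):
    """Raised by the modeled hardware when the TLB has no matching entry."""

    def __init__(self, vaddr):
        super().__init__(hex(vaddr))
        self.vaddr = vaddr


class PageFault(Exception):
    """Raised by the modeled OS when no page table entry exists either."""


tlb = {}                  # hardware side: vpn -> pfn
page_table = {0x2: 0x80}  # OS side: flat stand-in for the multi-level tables


def mmu_translate(vaddr):
    """Hardware: consult only the TLB and raise an exception on a miss."""
    vpn = vaddr >> PAGE_SHIFT
    if vpn not in tlb:
        raise TLBMiss(vaddr)
    return (tlb[vpn] << PAGE_SHIFT) | (vaddr & PAGE_MASK)


def tlb_miss_handler(vaddr):
    """OS: walk the page table in software and install the TLB entry."""
    vpn = vaddr >> PAGE_SHIFT
    if vpn not in page_table:
        raise PageFault(hex(vaddr))  # a real OS would enter its page fault path
    tlb[vpn] = page_table[vpn]


def load(vaddr):
    """Model one memory access: translate, handle a miss, then retry."""
    try:
        return mmu_translate(vaddr)
    except TLBMiss as miss:
        tlb_miss_handler(miss.vaddr)
        return mmu_translate(vaddr)  # the retry only succeeds once the entry is installed
```

The first `load` of an address in a mapped page takes the miss path and fills the TLB; later accesses to the same page hit directly without involving the handler.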

The simplicity of a Software Managed TLB MMU translates to greater power and area efficiency (gate count), as seen in ARCv2 ISA based processors, but it does have some limitations. The TLB Miss handlers are part of the OS (such as the Linux kernel) and are typically written in carefully crafted assembly code, yet their execution time is nondeterministic because of instruction dependencies, pipeline stalls, instruction cache misses on the handler code, etc. A hardware walker implemented in CPU micro-code would not suffer from such issues. Moreover, a hardware walker is a hard requirement and a stepping stone for the more complex virtualization environments of the future.

- Software Managed TLB Architecture: the Linux kernel programs the TLB entries, hardware only reads them.

- 2-level hardware TLB

  - 4-way set-associative Joint-TLB (code and data), configurable as 256, 512 or 1K entries.

  - Fully associative, independent Micro-TLBs for code and data (uITLB 4 entries, uDTLB 8 entries)

- ASID (Address Space ID) tag allowing concurrent entries with the same vaddr to co-exist (different processes with the same vaddr mapping to different paddr, or different permissions for the same page, etc.) without the need to flush the TLB on every context switch.

- 32-bit address space split into 2G translated (lower, @ 0x0) / 2G untranslated (upper, @ 0x8000_0000). The MMU is not involved when running in untranslated space. The kernel runs out of the untranslated address space, hence it is linked at 0x8000_0000.
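The ASID tag and the translated/untranslated split listed above can be modeled together in a few lines. This is a sketch under stated assumptions: the function names, the dictionary-based TLB, the 8K page size and the identity mapping used for untranslated addresses are illustrative choices, not hardware behaviour taken from a manual.

```python
PAGE_SHIFT = 13                   # assumed 8K pages
PAGE_MASK = (1 << PAGE_SHIFT) - 1
UNTRANSLATED_BASE = 0x8000_0000   # upper 2G: MMU not involved

tlb = {}  # (asid, vpn) -> pfn; the ASID is part of the lookup key


def tlb_insert(asid, vaddr, paddr):
    """Install a translation tagged with the owning address space."""
    tlb[(asid, vaddr >> PAGE_SHIFT)] = paddr >> PAGE_SHIFT


def translate(asid, vaddr):
    """Return the physical address, or None to signal a TLB miss."""
    if vaddr >= UNTRANSLATED_BASE:
        return vaddr  # assumed identity mapping for untranslated space
    pfn = tlb.get((asid, vaddr >> PAGE_SHIFT))
    if pfn is None:
        return None
    return (pfn << PAGE_SHIFT) | (vaddr & PAGE_MASK)
```

Because the ASID is part of the key, two processes can keep entries for the same virtual page at the same time, and a context switch only needs to change the current ASID rather than flush the table.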

Page Tables are purely software constructs, and Linux has full control and flexibility in specifying them as it deems necessary for function and performance. Hardware is simply not aware of page tables at all. At some point in the past, each of the following has been modified or tweaked in the ARC Linux kernel:

- Actual encoding of Page Table entries (e.g. exact bit layout of permission flags)

- Geometry (size of each table)

- Number of paging levels needed (implying number of vaddr bits used to index one/more levels)

- All of the above
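To see how these knobs interact, the sketch below derives the size of each table from the page size and the number of index bits per level. The function name, the 4-byte entry size and the example 11/8 split are assumptions for illustration only.

```python
def table_geometry(page_shift, level_bits, entry_bytes=4, va_bits=32):
    """Return a list of (entries, bytes) per table level, top level first.

    page_shift  -- log2 of the page size (13 for 8K pages)
    level_bits  -- vaddr bits used to index each level, top level first
    entry_bytes -- assumed size of one table entry
    """
    if page_shift + sum(level_bits) != va_bits:
        raise ValueError("index bits plus page offset must cover the vaddr")
    return [(1 << bits, (1 << bits) * entry_bytes) for bits in level_bits]
```

With 8K pages and an assumed 11/8 split, `table_geometry(13, [11, 8])` gives a 2048-entry top-level table occupying 8192 bytes (exactly one page) and 256-entry leaf tables of 1024 bytes each. Changing the page size or the number of levels shifts these numbers, which is why the geometry and the level count tend to be adjusted together.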

- Support for Super pages alongside regular pages (maps to Linux kernel Transparent Huge Page, THP, support)

- Optional 40-bit Physical Address (PAE40) support

- New commands to insert and delete TLB entries more efficiently

- Support for 64-bit Virtual Address Space (MMUv48 or MMUv52) or 32-bit Virtual Address Space (MMUv32)

- Hardware page-walked with TLB just being a cache (vs. software page-walked with TLB as the primary table of translations)

- Hardware mandated Page Table Layout and Geometry (vs. total software flexibility)

- Kernel Address Space is also translated (vs. dedicated upper 2 GB Untranslated Address Space for kernel and peripherals)

- Multiple concurrent Page Sizes (vs. only 2 for prior variants: Normal and Super Page)

Note that all of these have significant Linux kernel porting implications.