
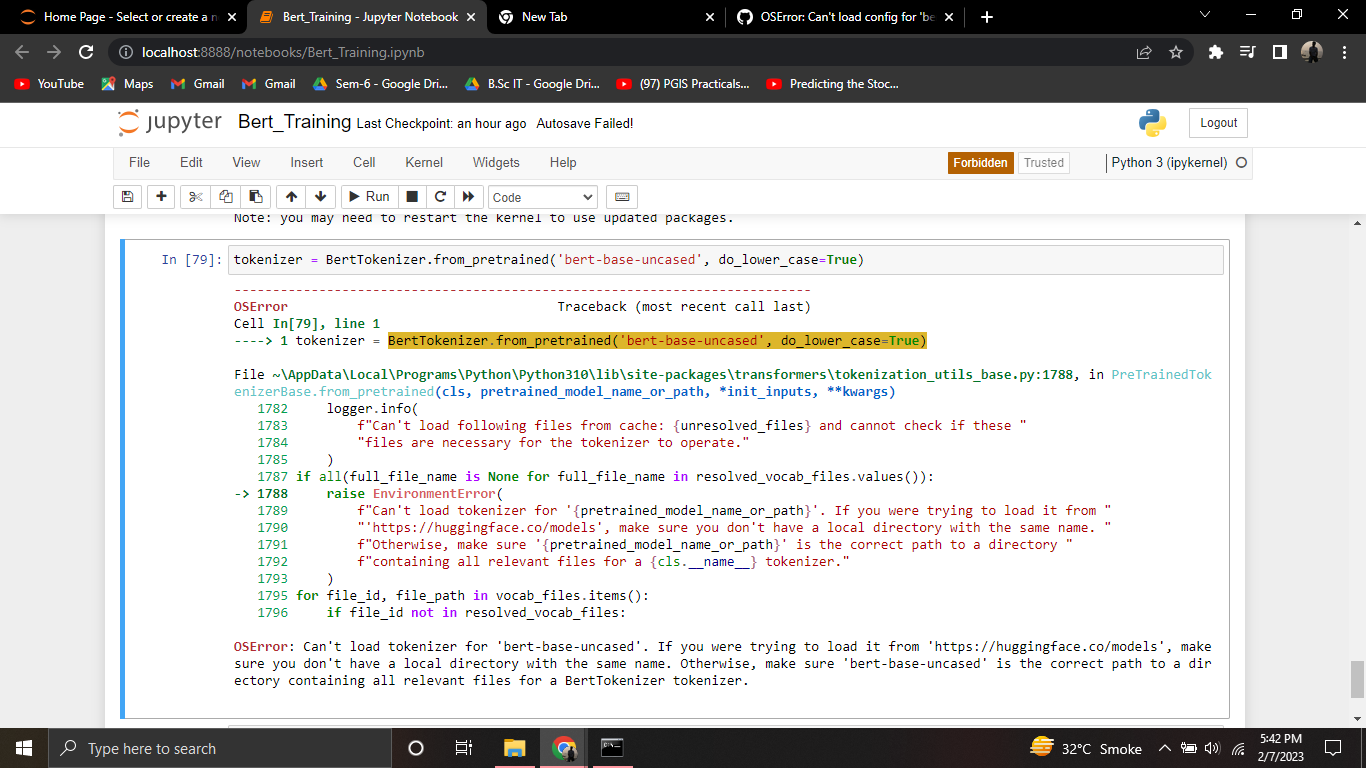

OSError: Can't load config for 'bert-base-uncased' #12941

Comments

Was it just a fluke, or is the issue still happening? On Colab I have no problem downloading that model.

@sgugger Hi, it is still happening now, and not just for me: many people I know are affected. I can access the config file from a browser, but not through the code. Thanks!

Still not working online, but I managed to do it locally:

```shell
git clone https://huggingface.co/bert-base-uncased
```

```python
#model = AutoModelWithHeads.from_pretrained("bert-base-uncased")
#tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased")
adapter_name = model2.load_adapter(localpath, config=config, model_name=BERT_LOCAL_PATH)
```
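The local workaround works because `from_pretrained` accepts either a Hub model identifier or a path to a local directory that contains `config.json`; the `OSError` message at the end of this issue lists exactly those two cases. Below is a minimal, standard-library-only sketch of that decision. The helper `resolve_config_source` is hypothetical (it is not part of `transformers`), and it only reports where the config would come from; it does not download anything.

```python
import os
from typing import Tuple

HUB_URL = "https://huggingface.co"


def resolve_config_source(name_or_path: str, revision: str = "main") -> Tuple[str, str]:
    """Hypothetical helper: decide where a config.json would be read from.

    Mirrors the two cases named in the OSError message: a local directory
    containing config.json, or a model identifier on huggingface.co.
    """
    local_config = os.path.join(name_or_path, "config.json")
    if os.path.isdir(name_or_path) and os.path.isfile(local_config):
        # A local clone (e.g. made with `git clone`) needs no network access.
        return ("local", local_config)
    # Otherwise the string is treated as a Hub model identifier, using the
    # same resolve URL pattern that appears in the traceback in this issue.
    return ("hub", f"{HUB_URL}/{name_or_path}/resolve/{revision}/config.json")
```

After running the `git clone` above in the current directory, `resolve_config_source("bert-base-uncased")` returns the `"local"` case, which is why the clone sidesteps the 403 on the resolve URL.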

@WinMinTun Could you share a small Colab notebook that reproduces the bug? I'd like to have a look at it.

With additional testing, I've found that this issue only occurs with adapter-transformers, the AdapterHub.ml modified version of the transformers module. With the HuggingFace module, we can pull pretrained weights without issue. Using adapter-transformers, this is now working again from Google Colab, but it is still failing locally and from servers running in AWS.

Interestingly, with adapter-transformers I get a 403 even if I try to load a nonexistent model (e.g. `fake-model-that-should-fail`). I would expect this to fail with a 401, as there is no corresponding config.json on huggingface.co. The fact that it fails with a 403 seems to indicate that something in front of the web host is rejecting the request before the web host has a chance to respond with a not-found error.
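To reproduce this comparison on an affected machine, one can request the same `resolve/main/config.json` URL directly and look at the raw HTTP status, bypassing both `transformers` and adapter-transformers. A minimal sketch using only the standard library; `config_url` and `probe_status` are hypothetical helper names, and the status codes you see will depend on your network and on the Hub's behavior at the time.

```python
import urllib.error
import urllib.request


def config_url(model_id: str, revision: str = "main") -> str:
    # Same URL pattern as in the traceback in this issue.
    return f"https://huggingface.co/{model_id}/resolve/{revision}/config.json"


def probe_status(url: str, timeout: float = 10.0) -> int:
    """Send a HEAD request to `url` and return the final HTTP status code."""
    req = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses are raised as HTTPError; the code is what we want.
        return err.code
```

For example, comparing `probe_status(config_url("bert-base-uncased"))` with `probe_status(config_url("fake-model-that-should-fail"))` shows whether both requests are being rejected with the same 403 before the model lookup happens.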

Thanks so much @jason-weddington. This will help us pinpoint the issue. (@n1t0 @Pierrci)

Your token for

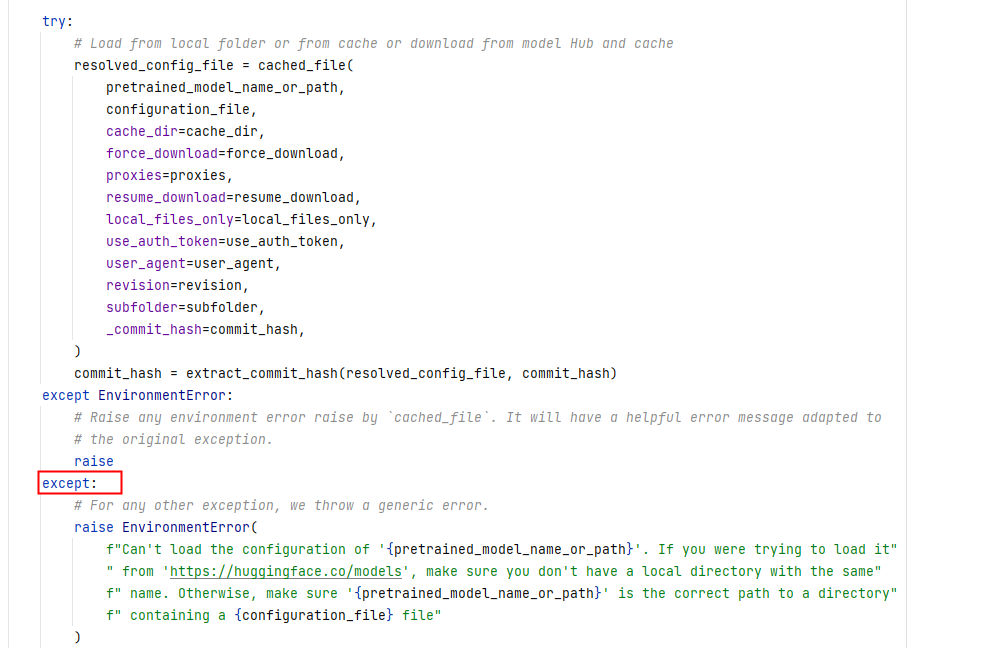

Yes, I have come across this as well. I have tracked it down to this line

It's because the

I've added a pull request to which I think will fix this issue. You can get round it for now by adding

A fix was proposed in huggingface/transformers#13205 for the error reported in huggingface/transformers#12941, which was preventing the pretrained path from being accessed when the model is private.

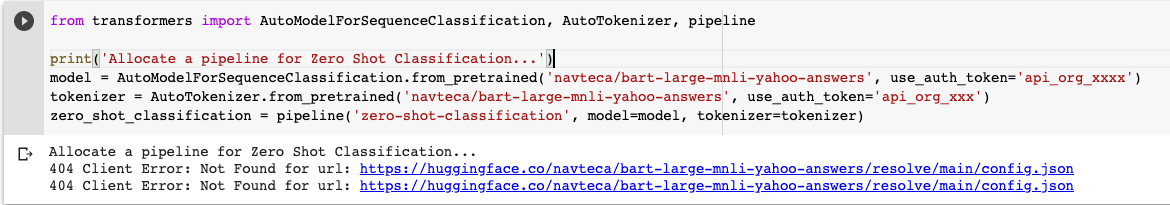

Still getting the same error.

Error:

I have transformers version:

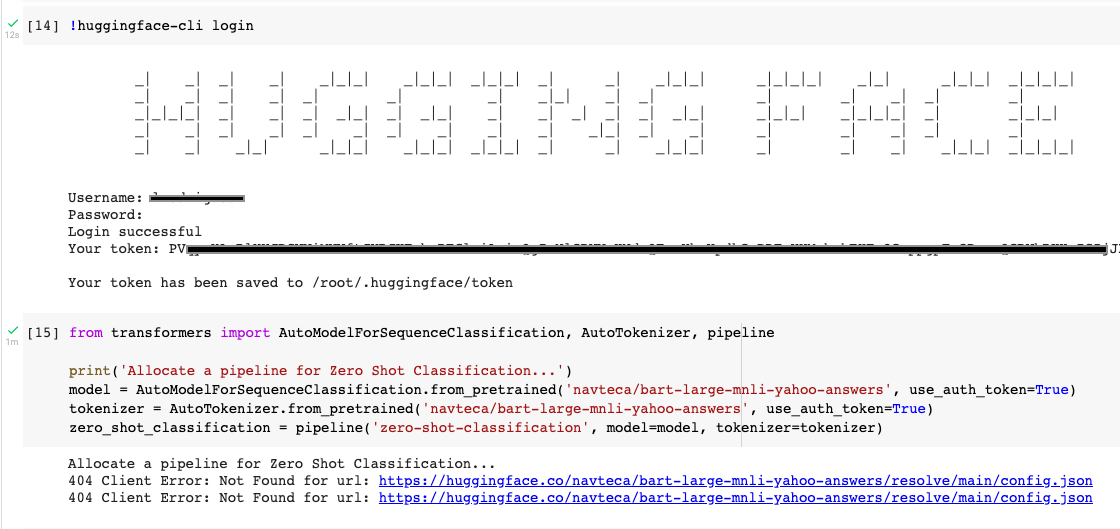

Running this command and authenticating solved the issue:

Just ask chatGPT LOL...😂😂

I don't understand. What do you mean?

Hello! Thanks for sharing. I wonder in

Environment info

It happens on my local machine and on Colab, and to my colleagues as well.

transformers version:

Who can help

@LysandreJik It has to do with 'bert-base-uncased'.

Information

Hi, I suddenly started getting this error this afternoon. Everything had been working fine for days before that. It happens on my local machine, on Colab, and to my colleagues as well. I can open this file in a browser with no problem: https://huggingface.co/bert-base-uncased/resolve/main/config.json. By the way, I'm in Singapore. Any urgent help would be appreciated, because I'm rushing a project and am stuck.

Thanks

403 Client Error: Forbidden for url: https://huggingface.co/bert-base-uncased/resolve/main/config.json

```
HTTPError                                 Traceback (most recent call last)
/usr/local/lib/python3.7/dist-packages/transformers/configuration_utils.py in get_config_dict(cls, pretrained_model_name_or_path, **kwargs)
    505                 use_auth_token=use_auth_token,
--> 506                 user_agent=user_agent,
    507             )

6 frames

HTTPError: 403 Client Error: Forbidden for url: https://huggingface.co/bert-base-uncased/resolve/main/config.json

During handling of the above exception, another exception occurred:

OSError                                   Traceback (most recent call last)
/usr/local/lib/python3.7/dist-packages/transformers/configuration_utils.py in get_config_dict(cls, pretrained_model_name_or_path, **kwargs)
    516                 f"- or '{pretrained_model_name_or_path}' is the correct path to a directory containing a {CONFIG_NAME} file\n\n"
    517             )
--> 518             raise EnvironmentError(msg)
    519
    520         except json.JSONDecodeError:

OSError: Can't load config for 'bert-base-uncased'. Make sure that:

- 'bert-base-uncased' is a correct model identifier listed on 'https://huggingface.co/models'

- or 'bert-base-uncased' is the correct path to a directory containing a config.json file
```
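The `use_auth_token` argument visible in the traceback controls whether `transformers` sends credentials with the download request; at the HTTP level, an authenticated request to the Hub carries the token in an `Authorization: Bearer <token>` header. A hedged, standard-library-only sketch that builds (but does not send) such a request; `build_config_request` is a hypothetical helper, and where the token comes from is left to the caller as an assumption.

```python
import urllib.request
from typing import Dict, Optional


def build_config_request(model_id: str, token: Optional[str] = None) -> urllib.request.Request:
    """Hypothetical helper: build a request for a model's config.json without sending it."""
    url = f"https://huggingface.co/{model_id}/resolve/main/config.json"
    headers: Dict[str, str] = {}
    if token:
        # Attach credentials only when a token is actually available;
        # a stale or invalid token may itself be rejected by the server.
        headers["Authorization"] = f"Bearer {token}"
    return urllib.request.Request(url, headers=headers)
```

Passing the returned request to `urllib.request.urlopen` would perform the download; keeping construction separate makes it easy to check exactly which headers are sent with and without a token.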
