New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

[Large PR] Entire rework of pipelines. #13308

Conversation

| self.assertEqual( | ||

| inputs["input_ids"].tolist(), [[31373, 50256, 17250, 612, 0, 50256, 4919, 389, 345, 5633, 50256]] | ||

| ) | ||

|

|

||

| inputs = conversation_agent._parse_and_tokenize([conversation_1, conversation_2]) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Not doable anymore, simple function can only handle single items at once

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

fine by me!

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This is a great refactor and I love how clean the code becomes! I've left a few comments suggestions. Some of it is linked to older code moved around, but let's take this opportunity to make it cleaner!

src/transformers/pipelines/base.py

Outdated

| @@ -50,6 +55,11 @@ | |||

| logger = logging.get_logger(__name__) | |||

|

|

|||

|

|

|||

| def collate_fn(items): | |||

| assert len(items) == 1, "This collate_fn is meant to be used with batch_size=1" | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Nit: we are moving away from asserts in the code base, so please use and if xxx, raise ValueError.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Got it ! Any reason why ? (Just curious if there's a background on that decision)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

See internal slack. The main gist is: asserts are more meant for debugging and will be ignored if you use python in optimized mode. This is not a debugging statement, so it should not use an assert.

| if self.call_count > 10 and self.framework == "pt" and self.device.type == "cuda": | ||

| warnings.warn( | ||

| "You seem to be using the pipelines sequentially on GPU. In order to maximize efficiency please use a dataset", | ||

| UserWarning, | ||

| ) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Nice check!

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Could eventually link to a doc showing how to use with a Dataset

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes that would be ideal.

cd6e659

to

11e832a

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This looks great! I'm not approving yet because I want to take a bit of time to play with the new pipeline.

| return super().__call__(inputs, **kwargs) | ||

|

|

||

| def set_parameters(self, top_k=None, **kwargs): | ||

| # No parameters on this pipeline right now |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There are some parameters on this pipeline

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

From what I understand this method is going to be called in __init__ and __call__; therefore I see no point in having the user call it themselves. I would put that method as private to clarify its role!

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I understand why you would want it to be private. I have something in the back of my head so it should stay public, but not solid argument right now.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Okay, reflecting on this maybe forward should also become _forward too.

The reason is, the mother class defines infer_forward that would be a better target for users to call themselves.

It takes care of torch.no_grad, training=False, sending back and from the GPU.

It wouldn't break the logic that preprocess, _forward, postprocess should work out of the box for the pipelines writers/maintainers but would at least hint users to not use directly these functions. Maybe documenting that might be a good thing.

| def collate_fn(items): | ||

| if len(items) != 1: | ||

| raise ValueError("This collate_fn is meant to be used with batch_size=1") | ||

| return items[0] |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Would it be possible to have its name be a bit more explicit? I understand where it's used thanks to its name, but I don't understand what it does

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

So no_collate_fn ? :d

src/transformers/pipelines/base.py

Outdated

| return model_outputs | ||

|

|

||

| def get_iterator(self, inputs, num_workers: int): | ||

| os.environ["TOKENIZERS_PARALLELISM"] = "false" |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

(nitpick) This has potentially undesirable side effects in the user's setup as it sets this environment variable for the duration of the runtime

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

True, which way would you recommend, only modify it if not set, otherwise don't touch it ?

Or never touch it ?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Or unset it sometime later

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think unsetting it sometime later is fine - if it's not obvious how to do it in a clean way I would ignore my comment and just let it be :)

| if self.call_count > 10 and self.framework == "pt" and self.device.type == "cuda": | ||

| warnings.warn( | ||

| "You seem to be using the pipelines sequentially on GPU. In order to maximize efficiency please use a dataset", | ||

| UserWarning, | ||

| ) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Could eventually link to a doc showing how to use with a Dataset

54f2eb0

to

81cbbbd

Compare

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This is great, tested extensively! I think it would be great to do two things before merging:

- I see no tests are added for the introduced changes. I think it's great that the past behavior still works, but it would be nice to test the added functionalities as well; for example the

preprocess,forward,set_parameters,postprocessmethods, as well as the batching ability, the possibility to consume aDataset, etc. - A feature without docs is a feature no-one knows about :) Would be nice to add a doc explaining how it works. The PR description can definitely be re-used!

| if top_k is not None: | ||

| self.top_k = top_k | ||

| if self.top_k > self.model.config.num_labels: | ||

| self.top_k = self.model.config.num_labels |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The only issue I'm seeing with this method is that it has side effects on the pipeline state, which is surprising in some cases (e.g., on __call__).

For example here:

>>> pp = pipeline("text-generation", max_length=2)You would expect all generations coming from this pipeline to have a max_length of 2. And this works well.

When using the same pipeline for inference, with a max_length override, this too works as one would expect:

>>> pp(["ok", "nice"], max_length=8)

Setting `pad_token_id` to `eos_token_id`:50256 for open-end generation.

Setting `pad_token_id` to `eos_token_id`:50256 for open-end generation.

[[{'generated_text': 'ok\n\nAce is my friend'}], [{'generated_text': "nice will always tell you, 'I"}]What is unexpected is that now the default max length changed to 8; when this should IMO be an override and not set:

>>> pp(["ok", "nice"])

Setting `pad_token_id` to `eos_token_id`:50256 for open-end generation.

Setting `pad_token_id` to `eos_token_id`:50256 for open-end generation.

[[{'generated_text': 'ok and Aarhus in Copenhagen.'}], [{'generated_text': 'nice for a while at first. I'}]There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

You are entirely right ! I think we need to change that, and hopefully this will also help improve the cleanliness there.

Thanks I think pointing that out gave me an idea on how to dramatically improve this.

src/transformers/pipelines/base.py

Outdated

| return model_outputs | ||

|

|

||

| def get_iterator(self, inputs, num_workers: int): | ||

| os.environ["TOKENIZERS_PARALLELISM"] = "false" |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think unsetting it sometime later is fine - if it's not obvious how to do it in a clean way I would ignore my comment and just let it be :)

| dataloader = DataLoader(dataset, num_workers=num_workers, batch_size=1, collate_fn=collate_fn) | ||

| model_iterator = PipelineIterator(dataloader, self.infer_forward) | ||

| final_iterator = PipelineIterator(model_iterator, self.postprocess) | ||

| return final_iterator |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This could also return list(final_iterator) to return the computed values

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

That would defeat the principle of having an iterator (as calling list for force to generate all outputs first leading to RAM issues on very large datasets at the very least).

It would also be great to have infinite generators be supported (like waiting on a queue that would be great in an API :))

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The idea is to return something you can iterate on, on an item basis.

for item in iterator

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ah, my bad :)

src/transformers/pipelines/base.py

Outdated

| final_iterator = self.get_iterator(inputs, num_workers) | ||

| outputs = [output for output in final_iterator] | ||

| return outputs |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

With above comment this would be simplified to return self.get_iterator(inputs, num_workers) (with a potential name change to the get_iterator method)

| inputs: dict holding all the keyword arguments for required by the model forward method. | ||

| return_tensors: Whether to return native framework (pt/tf) tensors rather than numpy array | ||

| @abstractmethod | ||

| def preprocess(self, input_: Any, **preprocess_parameters: Dict) -> Dict[str, GenericTensor]: |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I would make this method private as well -> why should we keep it public if forward shouldn't be called?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

forward should be called. It's just that forward is defined in base vs subclasses, just because of the ensure_on_device + no_grad wrappers that are important.

_forward on the other hand does not contain those guards.

preprocess > forward > postprocess : valid and recommended

preprocess > _forward > postprocess : valid but slower (no grad guards) + no GPU out of the box. (Important to keep readability within pipeline to a max.)

src/transformers/pipelines/base.py

Outdated

| return self._ensure_tensor_on_device(inputs) | ||

|

|

||

| def _ensure_tensor_on_device(self, inputs): | ||

| if isinstance(inputs, dict): |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

why not:

| if isinstance(inputs, dict): | |

| if isinstance(inputs, dict) or isinstance(inputs, list): | |

| return self.ensure_tensor_on_cpu(inputs) |

?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

why do dict and list always have to be on CPU? Why is that?

Also do we really need that function? It's not really intuitive for me that ensure_tensor_on_device puts all lists and dicts on CPU and only tensors to self.device.

Couldn't we just have a:

if isinstance(inputs, dict) or isinstance(inputs, list):

return self.ensure_tensor_on_cpu(inputs)

elif isinstance(inputs, torch.Tensor):

return inputs.to(self.device)

else:

return inputs

instead of having this function at all?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Bad copy&past, it should just be recursive.

| return model_outputs | ||

|

|

||

| def get_iterator(self, inputs, num_workers: int, preprocess_params, forward_params, postprocess_params): | ||

| if "TOKENIZERS_PARALLELISM" not in os.environ: |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

maybe add a else that if it's true there could be problems with dataloader multithreading?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It's it's already set, and problems do occur, tokenizers will already yell. Definitely could yell too...

| preprocess_params, forward_params, postprocess_params = self._sanitize_parameters(**kwargs) | ||

|

|

||

| # Fuse __init__ params and __call__ params without modifying the __init__ ones. | ||

| preprocess_params = {**self._preprocess_params, **preprocess_params} |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

this means that the call params would everwrite the init params no? Think this is good! Does it maybe make sense to add a warning though when this happens? E.g. something like:

if self._preprocess_params.keys() & preprocess_params.keys():

logger.warning(f"The parameters {self._preprocess_params.keys() & preprocess_params.keys()} have been overwritten by the passed parameters")

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, it will (but it will only locally override, contrary to the previous implementation).

pipe = pipeline("text-generation", max_length=20)

pipe("Something") # Using max_length=20

pipe("else", max_length=100) # using max_length=100

pipe("again") # using max_length=20There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I am not a big fan of high verbosity personally but it definitely could be added.

IMO it's expected behavior, so no reason to yell.

|

|

||

| if "max_length" in generate_kwargs: | ||

| forward_params["max_length"] = generate_kwargs["max_length"] | ||

| # self.max_length = generate_kwargs.get("max_length", self.model.config.max_length) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

delete the comment?

| @@ -186,7 +186,7 @@ def run_pipeline_test(self, model, tokenizer, feature_extractor): | |||

| ], | |||

| ) | |||

|

|

|||

| outputs = fill_masker([f"This is a {tokenizer.mask_token}", f"Another {tokenizer.mask_token}"]) | |||

| outputs = fill_masker([f"This is a {tokenizer.mask_token}", f"Another {tokenizer.mask_token} great test."]) | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

why is this added here?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

But the test only checks for type so should be fine

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Because the length was too small I guess.

I don't like having this change in there though.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Amazing work @Narsil ! I really like that all pipelines have to obey the "preprocess", "forward", "postprocess" design now!

I think we should also update the docs now to showcase how to use an iterator pipeline in practice no?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This looks great! LGTM.

Feel free to merge when ready.

src/transformers/pipelines/base.py

Outdated

| return predictions | ||

| model_inputs = self._ensure_tensor_on_device(model_inputs, device=self.device) | ||

| model_outputs = self._forward(model_inputs, **forward_params) | ||

| model_inputs = self._ensure_tensor_on_device(model_outputs, device=torch.device("cpu")) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Unnecessary variable init

| model_inputs = self._ensure_tensor_on_device(model_outputs, device=torch.device("cpu")) | |

| self._ensure_tensor_on_device(model_outputs, device=torch.device("cpu")) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I changed to model_outputs and I would like to keep the code as mutable free as possible even though the variables are actually mutated to it is redundant to reaffect the variable:

| dataloader = DataLoader(dataset, num_workers=num_workers, batch_size=1, collate_fn=collate_fn) | ||

| model_iterator = PipelineIterator(dataloader, self.infer_forward) | ||

| final_iterator = PipelineIterator(model_iterator, self.postprocess) | ||

| return final_iterator |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ah, my bad :)

| max_length = self.max_length | ||

| if self.framework == "pt": | ||

| model_inputs = self.ensure_tensor_on_device(**model_inputs) | ||

| n = model_inputs["input_ids"].shape[1] |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Agree

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Nice doc additions!

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thank you for adding a very welcome documentation!

docs/source/add_new_pipeline.rst

Outdated

| :obj:`_sanitize_parameters` exists to allow users to pass any parameters whenever they wish, be it at initialization | ||

| time `pipeline(...., maybe_arg=4)` or at call time `pipe = pipeline(...); output = pipe(...., maybe_arg=4)`. | ||

|

|

||

| The returns of `_sanitize_parameters` are the 3 dicts of kwargs that will be passed directly to :obj:`preprocess`, | ||

| :obj:`_forward` and :obj:`postprocess`. Don't fill anything if the caller didn't call with any extra parameter. That | ||

| allows to keep the default arguments in the function definition which is always more "natural". | ||

|

|

||

| Try to keep the inputs/outputs very simple and ideally JSON-serializable as it makes the pipeline usage very easy | ||

| without requiring users to understand new kind of objects. It's also relatively common to support many different types | ||

| of arguments for ease of use (audio files, can be filenames, URLs or pure bytes) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I don't exactly understand how this is supposed to be done for _sznitize_parameters - is there a pipeline that could be linked to show an example?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I added an example on how to add a default parameters + edit _sanitize_parameters.

Enabling dataset iteration on pipelines. Unifying parameters under `set_parameters` function. Small fix. Last fixes after rebase Remove print. Fixing text2text `generate_kwargs` No more `self.max_length`. Fixing tf only conversational. Consistency in start/stop index over TF/PT. Speeding up drastically on TF (nasty bug where max_length would increase a ton.) Adding test for support for non fast tokenizers. Fixign GPU usage on zero-shot. Fix working on Tf. Update src/transformers/pipelines/base.py Co-authored-by: Sylvain Gugger <35901082+sgugger@users.noreply.github.com> Update src/transformers/pipelines/base.py Co-authored-by: Sylvain Gugger <35901082+sgugger@users.noreply.github.com> Small cleanup. Remove all asserts + simple format.

Instead of overriding implicitly internal state, we moved to real named arguments on every `preprocess`, `_forward`, `postprocess` function. Instead `_sanitize_parameters` will be used to split all kwargs of both __init__ and __call__ into the 3 kinds of named parameters.

0a00f60

to

63e6ed5

Compare

| A classic example would be a :obj:`top_k` argument in the post processing in classification tasks. | ||

|

|

||

| .. code-block:: | ||

|

|

||

| >>> pipe = pipeline("my-new-task") | ||

| >>> pipe("This is a test") | ||

| [{"label": "1-star", "score": 0.8}, {"label": "2-star", "score": 0.1}, {"label": "3-star", "score": 0.05} | ||

| {"label": "4-star", "score": 0.025}, {"label": "5-star", "score": 0.025}] | ||

|

|

||

| >>> pipe("This is a test", top_k=2) | ||

| [{"label": "1-star", "score": 0.8}, {"label": "2-star", "score": 0.1}] | ||

|

|

||

| In order to achieve that, we'll update our :obj:`postprocess` method with a default parameter to :obj:`5`. and edit | ||

| :obj:`_sanitize_parameters` to allow this new parameter. | ||

|

|

||

|

|

||

| .. code-block:: | ||

|

|

||

|

|

||

| def postprocess(self, model_outputs, top_k=5) | ||

| best_class = model_outputs["logits"].softmax(-1) | ||

| # Add logic to handle top_k | ||

| return best_class | ||

|

|

||

| def _sanitize_parameters(self, **kwargs) | ||

| preprocess_kwargs = {} | ||

| if "maybe_arg" in kwargs: | ||

| preprocess_kwargs["maybe_arg"] = kwargs["maybe_arg"] | ||

|

|

||

| postprocess_kwargs = {} | ||

| if "top_k" in kwargs: | ||

| preprocess_kwargs["top_k"] = kwargs["top_k"] | ||

| return preprocess_kwargs, {}, postprocess_kwargs |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

|

What about integrating this idea into this rework? |

|

@xegulon It would be a great addition, we already have similar functionnality within HF. The code is not open source just because it's messy and wouldn't fit If we can find something clean enough, it's probable it would be a welcome addition. Few caveats to mention:

With those caveats in mind, feel free to add a PR, it would be a welcome addition if we manage to make it readable and orthogonal (the new refactor should help for sure). |

|

Hey, it's really great to see work on general code organisation to any degree. Thanks for your work. It looks like this PR introduced a bug around completing empty prompts: |

|

🤑💪🏻 |

|

how to use my own dataset, that is txt file ,per line is the input for NER model |

the example scripts want itin jsonlines or csv. https://huggingface.co/docs/transformers/run_scripts#use-a-custom-dataset . you can use a tool to convert to jsonlines. it takes some patience to figure out a way to do each step, and then it works. |

What does this PR do?

tl;dr: Make pipeline code much more consistent and enable large speedups with GPU inference.

GPU pipeline

Currently the way pipeline are setup, it's kind of hard to keep the GPU busy 100% because we're not enabling the use of DataLoader (on pytorch), which is necessary to keep CPU working on next items to tokenize, while processing an item on GPU.

We cannot realistically use the current API to maximize utilization:

So we need to change up the API to something closer to what

DataLoaderdoes, which is use an iterable, which enables to have worker CPU threads process next items while the GPU is busy on the current one, meaning we're now using 100% of the GPU.In order to make that change possible, we need to separate better what happens on the CPU vs the GPU.

The proposed way is to split the call of pipeline into 3 distinct function calls

preprocess: in charge of taking the original pipeline input, and output a dict of everything necessary to domodel(**model_inputs)for instance (or ageneratecall, but stuff that will really involve the GPU.forward: In most cases it's a simple function call to the model forward method, but can be more complex depending on the pipeline. It needs to be separate from the other 2 because this is where the GPU might be used. so we can encapsulate more logic around this in the base class (no_grad, sending and retrieving tensors to/from GPU etc..)postprocess: Usually links to processing the logits into something more user-friendly for the task at hand, again usually pretty fast and should happen on CPU (but should be so fast it does not matter really to have a separate thread for this).In order to increase consistency across pipelines, ALL pipelines will have to implement the 3 methods, and should have a

__call__method (with exceptions discussed in consistency).They should be readable on their own too, meaning, the outputs of

preprocessshould be exactly what is sent toforwardand what is returned byforwardexactly the inputs ofpreprocess. So:will always be perfectly valid, even if not the most efficient.

Consistency of pipelines

Right now, pipelines are quite inconsistent in their returned outputs.

batch_sizeparameter in thepipelineobject #13141, Add batching in TokenClassificationPipeline #11251, Add batching in TokenClassificationPipeline #11251)Batching on GPU seems like what is speeding up, things, but really it's not at inference times, batching in ML is used because of gradients and it's necessary for the gradient descent to be smooth, the speed part of the GPU is really linked to overall GPU usage, using

DataLoaderis the key part here. Nonetheless, sometimes, depending on actual hardware, pipeline, and input data, batching can be used efficiently, so the new design should enable that. However, it shouldn't be done the way it's currently setup, which is some pipelines do, some don't and no consistency overall, it should be done on a different layer than dataprocessing part of the pipeline.Because of the inconsitencies mentionned above, this refactor will include some

__call__methods to change the return type based on what was previously there so (preprocess,forwardandpostprocessare mostly pure, while__call__will handle backwards compatibilty)Parameter handling

Another cause of concern for pipelines was parameter handling. Most parameters were sent to

__call__method, but some where sent to__init__. Some in both.That meant that you would have to look though the docs to guess if you needed to to

The goal in this PR, was to make that explicit, so BOTH will be supported and have the exact same behavior.

In order to do that, we introduced a new mandatory method

set_parameterswhich would be called both in__call__and__init__in the same way so that it would always work.set_parametersis a standard method, we can use it to properly discard unexpected keyword with a real errors instead of just ignoring it.__init__and__call__are now base class only (roughly), we can capture parameters much better, meaning we don't have extra layer of parameter guessing (is it tokenization param, model param, pipeline param ?). Each method will capture everything it needs and pass on the rest, the ultimate method in the chain isset_parameterswhich might be specific parameters, or accept everything (like **generate_kwargs, so utlimatelygeneratewill have the final word).set_parameterswill be called at least 2 times and we don't know which one will have actual real values, it needs to be done in a somewhat odd way. The ways most pipelines will do, is simply have a default argument toNone, so if the argument isNonewe know that the caller didn't supply this argument so we don't override it (the default one is defined in the__init__if dynamic or directly in the class if static. This however does not work whenNoneis a valid choice for some parameter, this is true only forzero-shot-classificationtest, where we specially test that we raise some error when passingNoneas a value (so it can probably be changed, but will be backward incompatible regarding tests). For those, more complex logic is required.selfas the holder for parameters that means that using threading mecanisms to run the pipelines might lead to some oddities (but people most likely aren't using 1 pipeline on different threads, most likely shouldn't be at least). Other options are possible but would passing them though all 3 functionspreprocess,forwardandpostprocessreducing readability IMHO, for debattable gains.Results

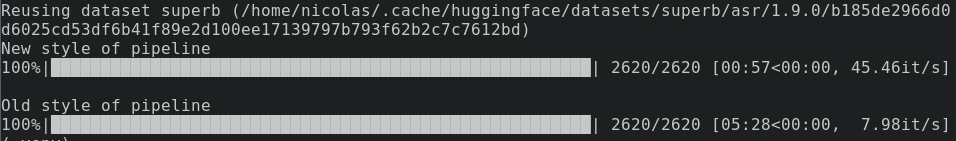

Currently we're sitting here performance wise

bench code

Speed (done on old suffering GTX 970):

Backward compatibility

We're currently sitting at 100% backward compatibility regarding tests. We're not however 100% backward compatible.

By fixing the inconsistencies of pipelines, we will break any code that was using parameters wrong (as they will suddenly start working or crashing because they're invalid).

Tensorflow

I mentionned

DataLoaderwhich will be used to great effectiveness on Pytorch +listinputs orDatasetinput. (on single inference on GPU + pt, you will get a warning, prompting you to use more efficient methods)On tensorflow however, more work is needed to make it faster there too. At the very least we shouldn't degrade performance too much, this has to be checked (both GPU and CPU). Ideally we would have a similar mecanism than

DataLoaderto maximise efficiency on GPU tensorflow.Fixes # (issue)

Before submitting

Pull Request section?

to it if that's the case.

documentation guidelines, and

here are tips on formatting docstrings.

Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.