Istio is picking up new virtualservice slowly #25685

Comments

|

How is pilot doing? Is the workload properly distributed to each pilot instance? Also, can you describe your scenario and how fast you are applying these VSs? And can you disable telemetry v2 if you are not using it? |

|

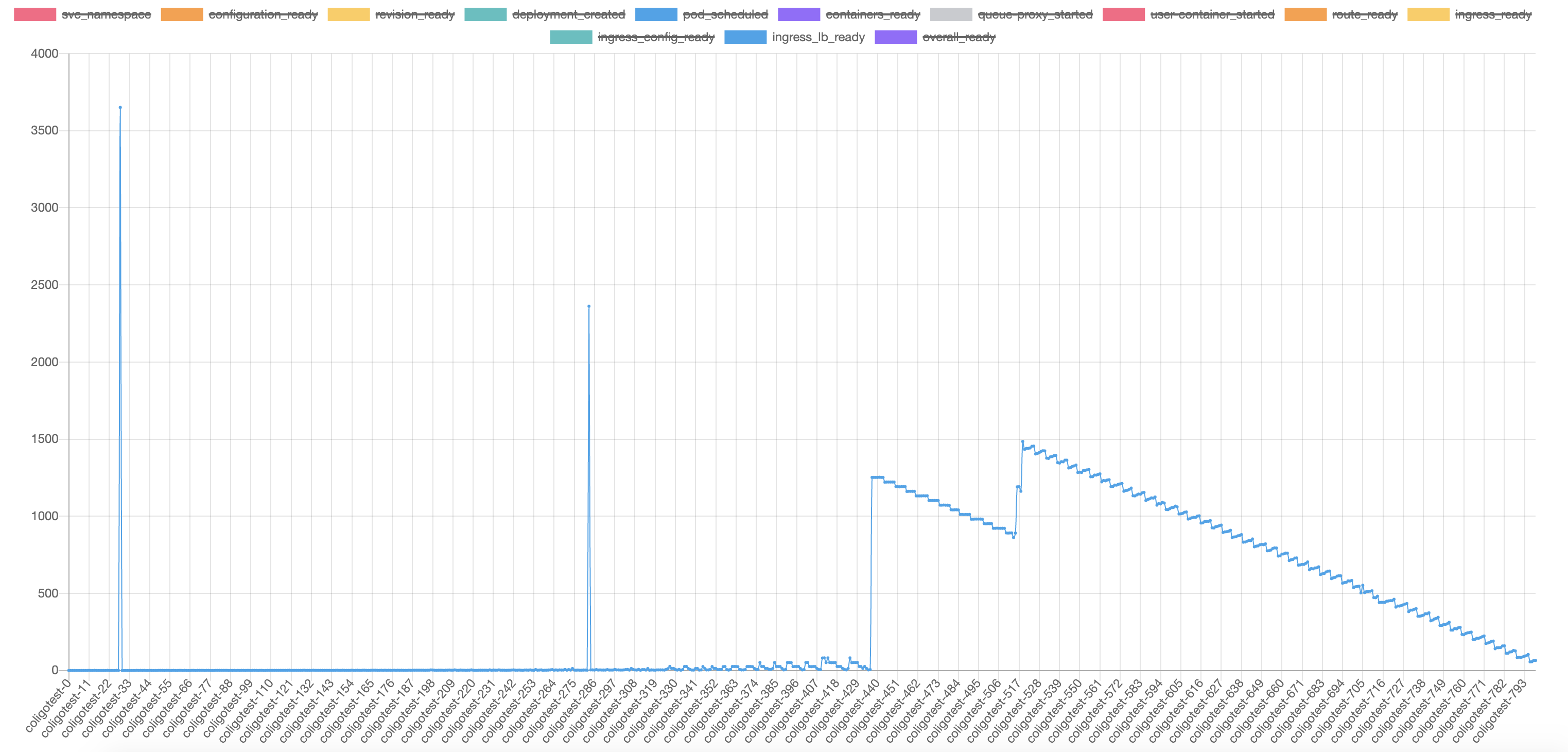

@linsun How can we check whether pilot is doing well? In our case, as the diagram shows (blue line), the delay increases continually as we create Knative services; each Knative service creates one VS to configure a route in the Istio gateway. And the output is of For telemetry, I do not think we have enabled it; you can check the install script we are using. Or how can we check whether telemetry v2 is enabled? |

|

@linsun we tested this case on istio 1.6.5, the behavior is same. Thank you. |

|

@ZhuangYuZY I have not personally run a systemwide profile (yet) with your use case - however, usually kubeapi is the culprit in this scenario. I have run systemwide profiles of creating a service entry CR, and have seen very poor performance in past versions. The diagnosed problem in the SE scenario is that kubeapi rate-limits incoming CR creation, which makes it appear as if "Istio" is slow. Istio's discovery mechanism works by reading K8s's CR list. K8s CR creation is slow. Therefore, Istio is often blamed for K8s API scalability problems. Kubernetes can only handle so many creations of any type of object per second, and the count is low. I'll take a look early next week at this particular problem for you and verify this is not just how Kubernetes "works"... Cheers, |

|

cc / @mandarjog |

|

Basic tooling to test with and without Istio in the path: https://github.com/sdake/knative-perf |

|

Running the manifest created by the vs tool in #25685 (comment), but changing the hub to a valid hub, the following results are observed: System = VM on vsphere7 with 128gb ram + 8 cores, istio-1.6.5 |

|

Running with just an application of |

|

(edit - first run of this had an error, re-ran - slightly slower results) With The results were close to baseline (vs-crd.yaml): |

|

@lanceliuu - is the use case here that knative uses a pattern of creating a large amount of virtual services, all at about the same time? Attempting to replicate your benchmark - it feels synthetic, and I am curious if it represents a real-world use case. Cheers, |

|

ON IKS: Running with just an application of vs-crd.yaml and not istio.yaml, nearly two extra minutes to create the VS in the remote cluster: |

|

ON IKS: Running with istio.yaml and applying vs.yaml from the test repo, the time to create 1000 VS in the remote cluster: |

|

Yes, we create 800 kn services (which triggers 800 VS creations). We saw a scalability problem with configuration setup in the gateway: the time continues to increase, from 1 sec to > 1 min. |

|

@ZhuangYuZY I am attempting to reproduce your problem, although I have to write my own tools to do so as you haven't published yours. Here is the question: Are you creating 800 vs. then pausing for 5-10 minutes. Then measuring the creation of that last VS at 1 minute? Or are you measuring the registration of the VS in the ingress gateway after each creation in the kubeapi? Cheers, |

|

@sdake From the logs of knative we can confirm that the failed probes are always caused by a 404 response code. It is not related to DNS, as the probe forces the domain to resolve to the pod IP of the istio ingress gateway. IIRC with 800 ksvcs present, when we create a new vs, we can see logs for istiod pilot popping up quickly like following So I think it is not related to the kube API, and the informers could cache and reflect the changes quickly. There is another problem which might also affect the performance. For services created by knative, it will also create the corresponding kubernetes service and endpoints. And istio will also create rules like following even when the virtualservice is not created. I wonder whether the EDS might also stress the ingress gateway. |

|

Thank you for the detail. The first problem I spotted is in this VS. gateways may not contain a slash. The validation for Cheers,

|

|

Feels environmental. My system is very fast - 8000 virtual services representing the 1000 services (and their endpoints) are created more quickly than I can run the debug commands. Please check my performance testing repo and see if you can reproduce in your environment. You will need istioctl-1.7.0a2. You need this version because the route command was added to proxy-config in istioctl in 1.7 and doesn't do much useful work in 1.6. istio.yaml was created using istioctl-1.6.5 and the IOP you provided above. Here are my results on a single-node virtual machine with 128gb of RAM and 8 cores of Xeon CPU: The last two commands run the curl command above - sort of - to determine the endpoints and virtual service routes. Please note I noticed one of the ingresses is not used. Also, please double-check that my methodology represents what is happening in knative. Cheers, |

|

|

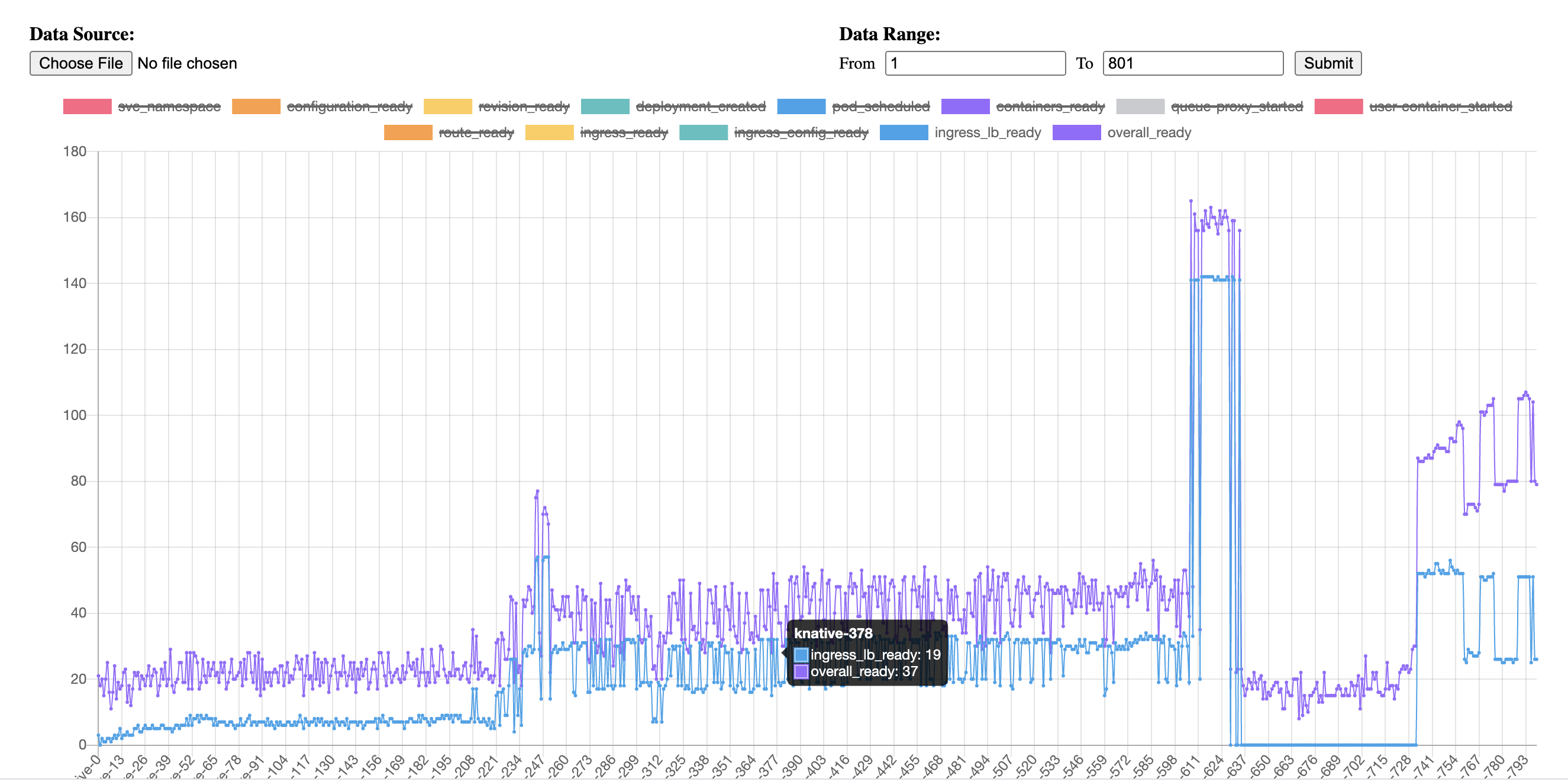

Per offline discussion with @sdake, we're not using the IKS ALB mentioned above. So no traffic will go through IKS ingress; it will go to the Istio ingress gateway instead. During Knative service creation, the ingress_lb_ready duration basically means Knative has applied an Istio Virtual Service and will then send an HTTP request as below to make sure the Istio config works. |
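To make the measurement concrete, here is a minimal sketch of a readiness timer in the spirit of ingress_lb_ready: poll a probe until it stops failing and report the elapsed time. This is not Knative's actual prober. The function name `time_until_ready`, the assumption that a 200 means the route is programmed, and the stand-in probe are all hypothetical; the 404s mirror the failed probes reported earlier in this thread.

```python
import time
from typing import Callable


def time_until_ready(
    probe: Callable[[], int],
    interval: float = 0.5,
    timeout: float = 120.0,
    sleep: Callable[[float], None] = time.sleep,
) -> float:
    """Poll `probe` until it returns 200; return the elapsed seconds.

    `probe` is any callable returning an HTTP status code. Treating 200 as
    "route is programmed" is an assumption made for this sketch.
    """
    start = time.monotonic()
    while True:
        if probe() == 200:
            return time.monotonic() - start
        if time.monotonic() - start >= timeout:
            raise TimeoutError("route not ready before timeout")
        sleep(interval)


# Stand-in probe: two 404s (route not yet programmed), then a 200.
responses = iter([404, 404, 200])
calls = []


def fake_probe() -> int:
    status = next(responses)
    calls.append(status)
    return status


# Skip real sleeping so the demonstration finishes immediately.
elapsed = time_until_ready(fake_probe, sleep=lambda _: None)
print(f"ready after {len(calls)} probes, {elapsed:.3f}s")
```

In a real measurement the probe would issue the HTTP request against the gateway pod IP; it is injected here so the timing logic can be exercised without a cluster.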

|

As I understand, NLB uses keepalived on IKS. As a result, there are still processes running to keep the packets moving in a keepalived scenario within the cluster. My theory is that when you run your 1k processes, it overloads the cluster. You start to see this overload at about 330 processes, and then it increases sharply at 340 processes. Kubernetes is designed to run 30 pods per node. At maximum - with configuration - Kubernetes on a very fast machine such as bare metal can run 100 pods per node. If you have 10-15 nodes, you will see the above results, because you have overloaded the K8s platform and the scheduler will begin to struggle. It would be interesting to take a look at dmesg output during this ramp-up to see if processes are killed as a result of OOM, or other kernel failures are occurring. Cheers, |

|

We are testing using a cluster with 12 worker nodes of the following configurations So I think it should be able to handle 800 knative services (also 800 pods if we set minscale = 1). The test application is just a basic hello world go example. I noticed that even if we set minscale = 0, which means that the deployment will scale to zero pods once it is ready and no traffic goes through, we get a similar result. |
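As a quick sanity check on the density argument, the arithmetic can be sketched as follows. It only divides the 800 pods by the 12 worker nodes mentioned here and compares the result with the 30 and 100 pods-per-node figures quoted in the previous comment; it ignores system pods and uneven scheduling, and the helper name `pods_per_node` is made up for illustration.

```python
import math


def pods_per_node(pods: int, nodes: int) -> int:
    """Worst-case pods on a node if pods are spread as evenly as possible."""
    return math.ceil(pods / nodes)


density = pods_per_node(800, 12)
print(density)          # 67
print(density > 30)     # above the 30 pods/node figure quoted earlier
print(density <= 100)   # within the 100 pods/node figure quoted earlier
```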

|

@sdake FYI, here is the benchmark tool we're using which will help to generate the Knative Service with different intervals as @lanceliuu mentioned above and can get the ingress_lb_ready duration time and dashboard. |

|

@sdake It seems that the host in the virtualservice is recognized and the upstream cluster config is also configured correctly. But envoy treats it as unhealthy. I can confirm that DNS for the upstream host resolves in the ingress-gateway pods when the error occurs. And no health checkers are specified in the upstream cluster config. The envoy documentation says that

I wonder why envoy reports it as unhealthy and takes several minutes to recover. |

|

Can you check out https://karlstoney.com/2019/05/31/istio-503s-ucs-and-tcp-fun-times/index.html and see if there is more you can find out from these 503s? Also, you can turn on access logs https://istio.io/docs/tasks/telemetry/logs/access-log/ and there is a "Response Flag" field that will give more details about the 503 (like DC, UC, etc). |
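For reading that field, a small lookup like the one below can help. It is only a sketch: the table covers a handful of common Envoy response flags rather than the full list, the descriptions are paraphrased, and `explain_flags` is a hypothetical helper, not part of Istio or Envoy. It assumes multiple flags arrive comma-separated and that `-` means no flag was set.

```python
from typing import List

# Partial table of Envoy access-log response flags (paraphrased descriptions).
RESPONSE_FLAGS = {
    "UH": "no healthy upstream hosts",
    "UF": "upstream connection failure",
    "UO": "upstream overflow (circuit breaking)",
    "NR": "no route configured for the request",
    "URX": "upstream retry limit or max connect attempts reached",
    "UC": "upstream connection termination",
    "UR": "upstream remote reset",
    "UT": "upstream request timeout",
    "DC": "downstream connection termination",
    "LR": "connection local reset",
}


def explain_flags(field: str) -> List[str]:
    """Translate a response-flag field such as 'UF,URX' into descriptions."""
    if field in ("", "-"):
        return []
    return [
        RESPONSE_FLAGS.get(flag, f"unknown flag {flag!r}")
        for flag in field.split(",")
    ]


print(explain_flags("UH"))
print(explain_flags("UF,URX"))
```

A 503 carrying UH would match the "treated as unhealthy" symptom described above, while UC or UF would point at connection handling instead.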

|

Hi gang. With PR: #27687, Istio performs much better. Running the master branch with the 800 services test case on K8s 1.19: I found this PR has improved the default case, and explains the massive jump at 237+ without flow control. I think flow control is still necessary, although without modifying this value, flow control fails in various ways. Here is how I set I displayed some of the various send metric times .. Note even though the FLOW_CONTROL flag is enabled in the command, it is unimplemented in master so no flow control is running at present. Envoy SIGTERMed (restarted) in the big spike here: The Hilarious exit code - actually my license plate... During the test I displayed the push time in milliseconds: |

|

clicked wrong button ^^ |

|

I am not sure how send timeout is helping here - are we saying some times the config is lost/dropped because of low timeouts? |

|

Yes, that is what I am saying. When the connection is dropped because of the 5 second send timeout, the system falls over. Under heavy services churn, the proxy enters a super-overloaded state where it can take 200-800 seconds to recover - see: #25685 (comment). |

Fixes: istio#25685 Istio suffers from a problem at large scale (800+ services sequentially created) with significant churn: Envoy becomes overloaded and produces a zigzag pattern in acking results. This slows Istio down by using a semaphore to signal when a receive has occurred and waiting for the semaphore prior to new pushes. Co-Authored-By: John Howard <howardjohn@google.com>
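The semaphore idea in this commit message can be sketched roughly as below. This is an illustration in Python, not the Go code from the PR: `GatedPusher`, `push`, and `on_receive` are hypothetical names, and the transport is reduced to a callback.

```python
import threading
from typing import Callable


class GatedPusher:
    """Allow one push at a time; the next push waits for a receive signal."""

    def __init__(self, send: Callable[[str], None]) -> None:
        self._send = send
        # One permit: the first push may go out immediately.
        self._ready = threading.Semaphore(1)

    def push(self, config: str, timeout: float = 1.0) -> bool:
        """Wait for the permit, then send. Returns False if the wait timed out."""
        if not self._ready.acquire(timeout=timeout):
            return False
        self._send(config)
        return True

    def on_receive(self) -> None:
        """Called when the proxy's response arrives; frees the permit."""
        self._ready.release()


sent = []
pusher = GatedPusher(sent.append)
first = pusher.push("config-v1")                  # goes out immediately
second = pusher.push("config-v2", timeout=0.01)   # blocked: nothing received yet
pusher.on_receive()                               # proxy responded
third = pusher.push("config-v2")                  # permit is free again
print(first, second, third, sent)
```

The trade-off of this blocking design is that a push waits (or times out) instead of being coalesced with later updates.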

|

These results are better - although ADS continues to disconnect and Envoy OOMs: #28192. I do feel like this is the first attempt of a PR that manages the numerous constraints of the protocol implementation |

|

Got a profile of envoy during high XDS pushes: 20% of time is spent in MessageUtil::validate |

Fixes: istio#25685 At large scale, Envoy suffers from overload of XDS pushes, and there is no backpressure in the system. Other control planes, such as any based on go-control-plane, outperform Istio in config update propagation under load as a result. This change adds a backpressure mechanism to ensure we do not push more configs than Envoy can handle. By slowing down the pushes, the propagation time of new configurations actually decreases. We do this by keeping note of, but not sending, any push requests where that TypeUrl has an un-ACKed request in flight. When we get an ACK, if there is a pending push request we will immediately trigger it. This effectively means that in a high churn environment, each proxy will always have exactly 1 outstanding push per type, and when the ACK is received we will immediately send a new update. This PR is co-authored by Steve, who did a huge amount of work in developing this into the state it is today, as well as finding and testing the problem. See istio#27563 for much of this work. Co-Authored-By: Steven Dake sdake@ibm.com
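The mechanism described in this commit message can be sketched as a small state machine. This is a Python illustration under stated assumptions, not the Go implementation in istiod: `ProxyConnection`, `push`, and `ack` are hypothetical names, the transport is a plain callback, and short labels such as "RDS" stand in for full TypeUrls.

```python
from dataclasses import dataclass, field
from typing import Callable, Set


@dataclass
class ProxyConnection:
    """Per-proxy push state: at most one un-ACKed push per TypeUrl."""

    send: Callable[[str], None]                      # transport callback (assumed)
    in_flight: Set[str] = field(default_factory=set)
    pending: Set[str] = field(default_factory=set)

    def push(self, type_url: str) -> bool:
        """Send now if nothing is un-ACKed for this type; otherwise defer."""
        if type_url in self.in_flight:
            self.pending.add(type_url)   # keep note of it, but do not send
            return False
        self.in_flight.add(type_url)
        self.send(type_url)
        return True

    def ack(self, type_url: str) -> None:
        """On ACK, free the slot and immediately trigger any deferred push."""
        self.in_flight.discard(type_url)
        if type_url in self.pending:
            self.pending.discard(type_url)
            self.push(type_url)


sent = []
conn = ProxyConnection(send=sent.append)
conn.push("RDS")   # sent
conn.push("RDS")   # deferred: an RDS push is still un-ACKed
conn.push("RDS")   # coalesced with the deferred push
conn.push("CDS")   # different type, sent immediately
conn.ack("RDS")    # ACK arrives, so the deferred RDS push goes out
print(sent)        # ['RDS', 'CDS', 'RDS']
```

Because deferred requests for the same type collapse into one, three RDS updates under churn result in two sends rather than three, which is where the reduced load on the proxy comes from.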


|

@howardjohn It is great to have a PR to fix the issue. What is the target release for this PR? Thank you. |


test Remove time.Sleep hacks for fast tests/non-flaky (#27741) * Remove time.Sleep hacks for fast tests * fix flake Add grafana templating query for DS_PROMETHEUS and add missing datasource (#28320) * Add grafana templating query for DS_PROMETHEUS and add missing datasource * make extension dashboard viewonly skip ingress test in multicluster (#28321) E2E test for trust domain alias client side secure naming. (#28206) * trust domain alias secure naming e2e test * add dynamic certs and test options * move under ca_custom_root test folder * trust domain alias secure naming e2e test * add dynamic certs and test options * move under ca_custom_root test folder * fix host to address * update script * refactor based on comments * updated comments * add build constraints * lint fix * fixes based on comments Samples: use more common images and delete useless samples (#28215) Signed-off-by: Xiang Dai <long0dai@foxmail.com> Wait until ACK before sending additional pushes (#28261) * Wait until ACK before sending additional pushes Fixes: #25685 At large scale, Envoy suffers from overload of XDS pushes, and there is no backpressure in the system. Other control planes, such as any based on go-control-plane, outperform Istio in config update propagation under load as a result. This change adds a backpressure mechanism to ensure we do not push more configs than Envoy can handle. By slowing down the pushes, the propagation time of new configurations actually decreases. We do this by keeping note of, but not sending, any push requests where that TypeUrl has an un-ACKed request in flight. When we get an ACK, if there is a pending push request we will immediately trigger it. This effectively means that in a high churn environment, each proxy will always have exactly 1 outstanding push per type, and when the ACK is received we will immediately send a new update.
This PR is co-authored by Steve, who did a huge amount of work in developing this into the state it is today, as well as finding and testing the problem. See #27563 for much of this work. Co-Authored-By: Steven Dake sdake@ibm.com * Refactor and cleanup tests * Add test istioctl: fix failure when passing flags to `go test` (#28332) add xds proxy metrics (#28267) * add xds proxy metrics Signed-off-by: Rama Chavali <rama.rao@salesforce.com> * lint Signed-off-by: Rama Chavali <rama.rao@salesforce.com> * fix description Signed-off-by: Rama Chavali <rama.rao@salesforce.com> remove-from-mesh: skip system namespace to remove sidecar (#28187) * remove-from-mesh: skip system namespace to remove sidecar * check for -i Remove accidentally merged debug logs (#28331) update warning message for upgrading istio version (#28303) * update warning message for upgrading istio version * add use before istioctl analyze Update README.md (#28272) * Update README.md * Update README.md Xds proxy improve (#28307) * Prevent goroutine leak * Accelerate by splitting upstream request and response handling * fix lint fix manifestpath for verify install.
(#28345) Fix ADSC race (#28342) * Fix ADSC race * fix * fix ut * Update pkg/istio-agent/local_xds_generator.go Co-authored-by: Shamsher Ansari <shaansar@redhat.com> * Update pkg/adsc/adsc_test.go Co-authored-by: Shamsher Ansari <shaansar@redhat.com> * Update pilot/pkg/xds/lds_test.go Co-authored-by: Shamsher Ansari <shaansar@redhat.com> * Apply shamsher's suggestions from code review Co-authored-by: Shamsher Ansari <shaansar@redhat.com> Co-authored-by: Shamsher Ansari <shaansar@redhat.com> List Istio injectors (#27849) * Refactor and rename command * Code nits * Fix typo * Print message if no namespaces have injection * Code nits * Case where an injected namespace does not yet have pods * Code cleanup kube-inject: hide namespace flag in favour of istioNamespace (#28067) Automator: update proxy@master in istio/istio@master (#28355) Fix test race in FilterGatewayClusterConf (#28330) Example failure https://prow.istio.io/view/gs/istio-prow/logs/unit-tests_istio_postsubmit/4087 In general, we need a better way to mutate feature flags in tests. Maybe a conditionally compiled mutex.
Will open an issue to track Networking: Add scaffold of tunnel EDS builder (#28244) * add endpoint tunnel supportablity field Signed-off-by: Yuchen Dai <silentdai@gmail.com> * edsbtswip Signed-off-by: Yuchen Dai <silentdai@gmail.com> * fix import Signed-off-by: Yuchen Dai <silentdai@gmail.com> * make gen Signed-off-by: Yuchen Dai <silentdai@gmail.com> * EndpointsByNetworkFilter refactor Signed-off-by: Yuchen Dai <silentdai@gmail.com> * fix pkg/pilot/ tests Signed-off-by: Yuchen Dai <silentdai@gmail.com> * ep builder decide build out tunnel type Signed-off-by: Yuchen Dai <silentdai@gmail.com> * add basic tunnel eds test Signed-off-by: Yuchen Dai <silentdai@gmail.com> * make gen Signed-off-by: Yuchen Dai <silentdai@gmail.com> * fix proxy metadata access Signed-off-by: Yuchen Dai <silentdai@gmail.com> * make gen Signed-off-by: Yuchen Dai <silentdai@gmail.com> * fix endpoint COW, h2support bitfield bug Signed-off-by: Yuchen Dai <silentdai@gmail.com> * make gen without fmt Signed-off-by: Yuchen Dai <silentdai@gmail.com> * add errgo Signed-off-by: Yuchen Dai <silentdai@gmail.com> * address comment Signed-off-by: Yuchen Dai <silentdai@gmail.com> * fmt Signed-off-by: Yuchen Dai <silentdai@gmail.com> * make gen Signed-off-by: Yuchen Dai <silentdai@gmail.com> cni:fix order dependent test failures (#28349) `interceptRuleMgrType` is declared in main.go as "iptables" and is changed to "mock" in func resetGlobalTestVariables(). When a single test is run, it stays "iptables" and the test fails. Signed-off-by: Xiang Dai <long0dai@foxmail.com> fix uninstall test (#28335) * fix uninstall test * revert prow change * address comment * add logic to deduplicate Adding Route Specific RateLimiting Test Signed-off-by: gargnupur <gargnupur@google.com> remove debug info Signed-off-by: gargnupur <gargnupur@google.com> fix failure Signed-off-by: gargnupur <gargnupur@google.com>

Signed-off-by: gargnupur <gargnupur@google.com> fix Signed-off-by: gargnupur <gargnupur@google.com> add yaml Signed-off-by: gargnupur <gargnupur@google.com> fix comments Signed-off-by: gargnupur <gargnupur@google.com> fix comments Signed-off-by: gargnupur <gargnupur@google.com> fix build Signed-off-by: gargnupur <gargnupur@google.com> fix build Signed-off-by: gargnupur <gargnupur@google.com> Delete reference to old ISTIO_META_PROXY_XDS_VIA_AGENT (istio#28203) * update expose istiod * add https sample * fix tab * update host + domain * fix lint * fix lint * tweak host * fix lint * use tls port * name port correctly * change default to tls * Update samples/multicluster/expose-istiod.yaml Co-authored-by: Iris <irisding@apache.org> * Update samples/multicluster/expose-istiod.yaml Co-authored-by: Iris <irisding@apache.org> * Revert "Update samples/multicluster/expose-istiod.yaml" This reverts commit 7feb468. * Revert "Update samples/multicluster/expose-istiod.yaml" This reverts commit 98209a0. * use istiod-remote since pilot is still enabled on remote cluster * loose up on host name * adding notes * clean up this in preview profile Co-authored-by: Iris <irisding@apache.org> Avoid telemetry cluster metadata override (istio#28171) * fix cluster metadata override * test * fix * fix * fix again * clean add telemetry test for customize metrics (istio#27844) * add test for customize metrics * address comments * add remove tag check * fix test Delete istiod pods on cleanup (istio#28205) Otherwise they stay around and can cause other tests to fail. In a concrete example, deployment "istiod-canary" stays live and interferes in pilot's TestMultiRevision test, which also deploys a "istiod-canary", but, since a deployment with that name already exists, operator doesn't redeploy it, because it's already there. 
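The backpressure scheme described in the "Wait until ACK before sending additional pushes" commit message can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not Istio's implementation: `proxyConn`, `pushRequest`, and the choice to keep only the newest deferred request are hypothetical names and simplifications made for the example.

```go
package main

import "fmt"

// pushRequest is a hypothetical stand-in for a pending config update.
type pushRequest struct {
	version int
}

// proxyConn tracks, per xDS type URL, whether a push is still awaiting an ACK
// and whether a newer push was deferred in the meantime.
type proxyConn struct {
	inFlight map[string]bool
	pending  map[string]*pushRequest
	sent     []string // record of what was actually sent, for illustration only
}

func newProxyConn() *proxyConn {
	return &proxyConn{
		inFlight: map[string]bool{},
		pending:  map[string]*pushRequest{},
	}
}

// push sends req unless a previous push of the same type is un-ACKed.
// In that case the request is only noted (assumption: newest one wins).
func (c *proxyConn) push(typeURL string, req *pushRequest) {
	if c.inFlight[typeURL] {
		c.pending[typeURL] = req
		return
	}
	c.send(typeURL, req)
}

// ack clears the in-flight marker and immediately triggers any deferred push.
func (c *proxyConn) ack(typeURL string) {
	c.inFlight[typeURL] = false
	if req, ok := c.pending[typeURL]; ok {
		delete(c.pending, typeURL)
		c.send(typeURL, req)
	}
}

func (c *proxyConn) send(typeURL string, req *pushRequest) {
	c.inFlight[typeURL] = true
	c.sent = append(c.sent, fmt.Sprintf("%s@v%d", typeURL, req.version))
}

func main() {
	c := newProxyConn()
	c.push("RDS", &pushRequest{version: 1}) // sent immediately
	c.push("RDS", &pushRequest{version: 2}) // deferred: v1 is un-ACKed
	c.push("RDS", &pushRequest{version: 3}) // deferred, replaces v2
	c.push("CDS", &pushRequest{version: 1}) // sent: types are tracked independently
	c.ack("RDS")                            // ACK for v1 triggers the deferred v3
	fmt.Println(c.sent)
}
```

With this shape, each proxy has at most one outstanding push per type, so a burst of VirtualService changes collapses into fewer, larger updates instead of queuing behind one another.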

Affected product area (please put an X in all that apply)

[ ] Configuration Infrastructure

[ ] Docs

[ ] Installation

[X] Networking

[X] Performance and Scalability

[ ] Policies and Telemetry

[ ] Security

[ ] Test and Release

[ ] User Experience

[ ] Developer Infrastructure

Affected features (please put an X in all that apply)

[ ] Multi Cluster

[ ] Virtual Machine

[ ] Multi Control Plane

Version (include the output of `istioctl version --remote` and `kubectl version` and `helm version` if you used Helm)

How was Istio installed?

Environment where bug was observed (cloud vendor, OS, etc)

IKS

When we create ~1k VirtualServices in a single cluster, the ingress gateway picks up new VirtualServices slowly.

The blue line in the chart shows the total time until a probe to the gateway pod succeeds (200 response code and the expected `K-Network-Hash` header). The stepped increases are caused by the exponential retry backoff of the probe. The overall trend, however, appears to grow linearly: with 800 virtual services present, it takes ~50s for a new virtual service to be picked up.

I also tried to dump and grep the configs in the istio-ingress-gateway pod after the virtual service was created.

Initially the output was empty, and it took about 1 min for the result to show up.

There is no memory/CPU pressure on the Istio components.

Below is a typical virtual service created by Knative.
