Networking breaks when using cilium with strict kube-proxy replacement #27619

Comments


This essentially bypasses Istio load balancing. We do not support routing "direct to pod IP" traffic like they are doing.


@howardjohn Thanks for the quick reply! It makes sense that it works this way. Unfortunately, I've lost a lot of time debugging this issue 😔 Maybe this info will be useful to other Istio users who face similar issues or have similar setups.


@StefanCenusa I think that makes sense. We should add the "direct to pod IP" case to https://istio.io/latest/docs/ops/deployment/requirements/ and the Cilium-specific part (not sure where). I wasn't aware that this is what Cilium's no-kube-proxy mode does, so good to know.


Regarding Cilium, I wasn't aware either. But now I found this in their docs:

Thanks again! Should I close this issue or leave it open for the docs improvement part?


Sorry, I added the wrong label. Let's keep this open.


Context: I'm a Cilium developer. We have just been made aware of this. We can definitely add to our docs to disable …


@tgraf Istio routing is based on:

So HTTP traffic (only when explicitly declared) will work, both in theory and as I confirmed, because its routing doesn't take the IP into account. Everything else is not going to hit the appropriate configuration.


@tgraf Does disabling host services translation significantly reduce the functionality provided by Cilium?

No, it does not. When implemented at the network level, the functionality is the same, just with much higher overhead, because each network packet has to be altered. We will point out in the Cilium documentation that host services are currently not compatible with Istio due to the way Istio does its redirect. On that note, what if Cilium did the redirect of all traffic? Just as host services can currently bypass Istio, the same mechanism could also be used in Istio's favor to redirect traffic to the sidecar.

Does this then mean that headless services are not compatible either, if DNS directly returns pod IPs?

Good point: for headless services specifically, we create listeners for each pod IP. The assumption we depend on here, and the reason we do this only for headless services, is that users will not have massive headless services, as these would lead to scalability bottlenecks.
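A sketch of why that only scales for small headless services: assuming one listener per ready pod IP for a headless service versus a single ClusterIP:port listener otherwise, the listener count grows linearly with the number of endpoints. The `service` type and the listener-address format are hypothetical simplifications, not Istio's data model.

```go
package main

import (
	"fmt"
	"net"
	"strconv"
)

// service is a hypothetical, simplified view of a Kubernetes Service and
// its ready endpoints.
type service struct {
	clusterIP string   // "None" for headless services
	port      int      // assumed equal to the pods' target port here
	podIPs    []string // ready endpoint addresses
}

// outboundListeners returns the listener addresses this sketch assumes
// are generated: one per pod IP for a headless service, one
// ClusterIP:port listener otherwise.
func outboundListeners(s service) []string {
	if s.clusterIP != "None" {
		return []string{net.JoinHostPort(s.clusterIP, strconv.Itoa(s.port))}
	}
	addrs := make([]string, 0, len(s.podIPs))
	for _, ip := range s.podIPs {
		addrs = append(addrs, net.JoinHostPort(ip, strconv.Itoa(s.port)))
	}
	return addrs
}

func main() {
	pods := []string{"10.0.1.7", "10.0.2.9", "10.0.3.4"}
	regular := service{clusterIP: "10.96.0.20", port: 5432, podIPs: pods}
	headless := service{clusterIP: "None", port: 5432, podIPs: pods}
	fmt.Println(outboundListeners(regular))  // [10.96.0.20:5432]
	fmt.Println(outboundListeners(headless)) // [10.0.1.7:5432 10.0.2.9:5432 10.0.3.4:5432]
}
```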


How would Cilium redirect all traffic to Istio? We do need some metadata, like the original DST:port, which we get using a system call. We have discussed different ways to get this metadata, like a 'proxy protocol' prefix or tunneling it in H2 CONNECT, but it'll take some time.

We have already built this using TPROXY, so you will see the original network headers. This is also how Cilium redirects packets to Envoy when Istio is not in play. In the past, we have also shared metadata with Envoy and have built an eBPF metadata reader in Envoy to share arbitrary data between the Cilium datapath and Envoy.


@tgraf Is this available in OSS, and does it require CAP_NET_ADMIN to read eBPF metadata?

Yes, all of this is available in Cilium today.

Yes, when using TPROXY with IP_TRANSPARENT, it requires CAP_NET_ADMIN. We also used to have code to redirect to a known listener port on Envoy, which does not require CAP_NET_ADMIN.


Not stale

On Thu, Jan 7, 2021, 9:05 PM Istio Policy Bot wrote:

🧭 This issue or pull request has been automatically marked as stale because it has not had activity from an Istio team member since 2020-10-09. It will be closed on 2021-01-22 unless an Istio team member takes action. Please see this wiki page <https://github.com/istio/istio/wiki/Issue-and-Pull-Request-Lifecycle-Manager> for more information. Thank you for your contributions.

*Created by the issue and PR lifecycle manager*.


Just a heads-up: we released the fix (cilium/cilium#17154) in Cilium v1.10.5, which allows Cilium's kube-proxy replacement (KPR) to cooperate with Istio's dataplane. The cilium-agent option is named …

Applied the above configuration on our clusters; everything works like a charm 🚀


Maybe we can add a check to …

👍 We could make the setting accessible through Cilium's Config API. |


Any news on this?


@alfsch The only thing Istio can ever do about this is warn that it's enabled. The option fundamentally breaks Istio. There is no news on a warning being added.


@howardjohn Is there an easy way to detect when Cilium runs with Istio?


Statically, we could try to read Cilium's config and parse the ConfigMap for this value, but I don't know how reliable (or stable) that is. I do wonder if we could add a runtime check. We have a validation container that tests that our iptables rules actually work; I wonder if we could extend it, something like sending a request to … One issue with that is that we want to remove the validation container, but I think there will always be a place we can run it; it just may move.
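A minimal sketch of the static check: given the `data` of Cilium's ConfigMap (fetched however the caller likes), flag configurations where service translation happens at the socket level inside pod namespaces. The key names `kube-proxy-replacement` and `bpf-lb-sock-hostns-only` and their accepted values are assumptions about the `cilium-config` ConfigMap that vary between Cilium releases, which is exactly the reliability and stability concern raised above.

```go
package main

import "fmt"

// Assumed cilium-config ConfigMap keys; verify them against the Cilium
// version you run, as names and accepted values change between releases.
const (
	kprKey        = "kube-proxy-replacement"
	hostnsOnlyKey = "bpf-lb-sock-hostns-only"
)

// socketLBMayBypassSidecar reports whether the ConfigMap data suggests
// socket-level service translation inside pod namespaces, i.e. before a
// sidecar's iptables redirect sees the traffic. It only recognizes the
// "strict"/"true" replacement modes and ignores other modes that may
// also enable socket LB.
func socketLBMayBypassSidecar(data map[string]string) bool {
	switch data[kprKey] {
	case "strict", "true":
		return data[hostnsOnlyKey] != "true"
	default:
		return false
	}
}

func main() {
	cfg := map[string]string{kprKey: "strict"}
	fmt.Println(socketLBMayBypassSidecar(cfg)) // true
	cfg[hostnsOnlyKey] = "true"
	fmt.Println(socketLBMayBypassSidecar(cfg)) // false
}
```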


I'm more interested in detecting the presence of Istio from Cilium's point of view. I'd like to add a warning if KPR / Socket LB are enabled when running with Istio.


Ah, got it. At install time you probably have Cilium before Istio, so I would imagine you want it at runtime. Some possible detection mechanisms:

I am not sure what kind of data you have where you would run this, but I'd imagine we could find an approach that works. One note: this would break all sidecar service meshes, so you might want a more generic approach.
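One possible runtime heuristic from the Cilium side is to inspect pod specs for signs of Istio injection. This sketch assumes two signals: the injector's `sidecar.istio.io/status` annotation and a container named `istio-proxy` (checked in init containers too, where native sidecars run). The `pod` type is a hypothetical trimmed-down view, and, per the note above that every sidecar mesh is affected, this covers Istio only.

```go
package main

import "fmt"

// pod is a hypothetical, trimmed-down view of a Pod spec.
type pod struct {
	annotations    map[string]string
	containers     []string // container names
	initContainers []string // init container names (native sidecars live here)
}

// hasIstioSidecar applies the two assumed heuristics: the injection status
// annotation, or a container named "istio-proxy".
func hasIstioSidecar(p pod) bool {
	if _, ok := p.annotations["sidecar.istio.io/status"]; ok {
		return true
	}
	for _, names := range [][]string{p.containers, p.initContainers} {
		for _, n := range names {
			if n == "istio-proxy" {
				return true
			}
		}
	}
	return false
}

func main() {
	injected := pod{containers: []string{"app", "istio-proxy"}}
	plain := pod{containers: []string{"app"}}
	fmt.Println(hasIstioSidecar(injected), hasIstioSidecar(plain)) // true false
}
```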


For everyone who has trouble with Cilium in strict mode without kube-proxy: from my point of view, the root cause of our issues was a Cilium version older than 1.12.x combined with a Linux kernel older than 5.7, because we used Ubuntu 20.04 as the Kubernetes node image. We saw the same issues as described here in HTTP(S) communication across namespaces, using Services whose ports map to different destination ports. Using the same ports mitigated the problem for some pods, but not for all.

After updating to Cilium 1.12.6 we still faced the issues, but when looking into the logs of the Cilium pods, I found warnings in the startup section about possible problems with Istio on kernels older than 5.7. We updated the cluster nodes to a current Ubuntu 22.04 with kernel 5.15.x and voilà, all our issues were gone. We don't even need the port-mapping change to use the same destination ports anymore.

The command line that works for us to render the Cilium chart is: …

Kubernetes node: …

I found this because I saw a warning during startup. @brb Is the feature you want already implemented?
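Since the fix came down to the node kernel, a quick pre-flight check can compare a node's kernel release (for example the output of `uname -r`) against 5.7, the version named in the Cilium startup warning described above. This is a minimal sketch: the release strings in `main` are examples, and a plain version-number comparison cannot see fixes a distribution may have backported to an older kernel.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// leadingInt parses the decimal digits at the start of s ("15-rc1" -> 15).
func leadingInt(s string) (int, error) {
	end := 0
	for end < len(s) && s[end] >= '0' && s[end] <= '9' {
		end++
	}
	return strconv.Atoi(s[:end])
}

// kernelAtLeast reports whether a release string such as
// "5.15.0-56-generic" is at least major.minor.
func kernelAtLeast(release string, major, minor int) (bool, error) {
	parts := strings.SplitN(release, ".", 3)
	if len(parts) < 2 {
		return false, fmt.Errorf("unexpected kernel release %q", release)
	}
	gotMajor, err := leadingInt(parts[0])
	if err != nil {
		return false, err
	}
	gotMinor, err := leadingInt(parts[1])
	if err != nil {
		return false, err
	}
	return gotMajor > major || (gotMajor == major && gotMinor >= minor), nil
}

func main() {
	for _, r := range []string{"5.4.0-135-generic", "5.15.0-56-generic"} {
		ok, err := kernelAtLeast(r, 5, 7)
		if err != nil {
			panic(err)
		}
		fmt.Printf("%s >= 5.7: %v\n", r, ok)
	}
}
```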


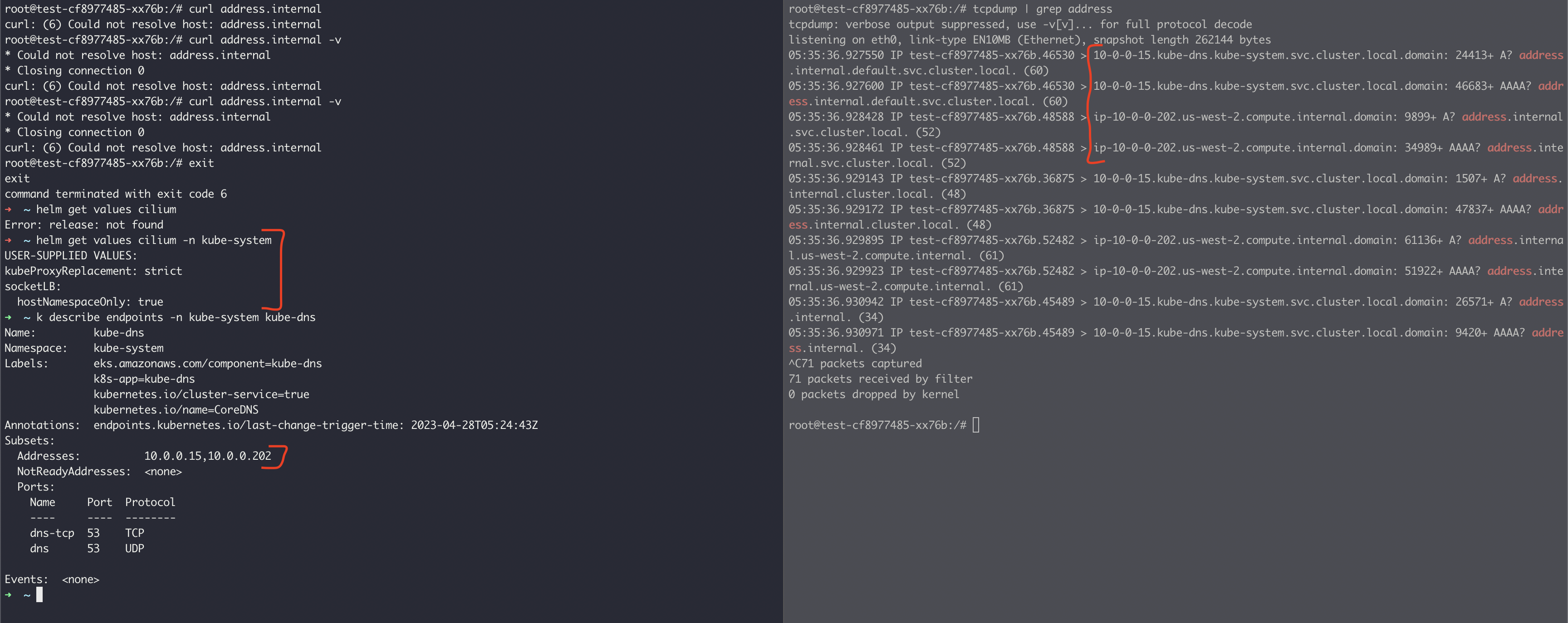

Resolved: kernel 5.7 or later is needed.

Still seeing this issue on AWS EKS 1.23. Minimal install: …

On the left-hand side is the application pod and on the right-hand side is the istio-proxy sidecar of that pod. tcpdump on istio-proxy shows requests going to the CoreDNS endpoints instead of the kube-dns Service. Prior to replacing kube-proxy we were seeing correct calls: … After replacing kube-proxy: …

Any thoughts on what else to try? After installing Cilium, nodes were terminated to ensure all configuration was applied correctly.


Kernel v5.7 includes the patch from torvalds/linux@f318903.


Seems like we can close this?


Thanks @christian-posta @gyutaeb, I confirmed with EKS 1.24 (kernel v5.10) that this issue is resolved.


Closing

Bug description

This issue is related to a thread I started on Istio's Slack channel.

It affects inter-service telemetry, but it might impact other features as well, because traffic is not treated as HTTP where it should be.

My setup is a Kubernetes 1.18 cluster with Cilium 1.8 configured with kubeProxyReplacement=strict. This means that the kube-proxy component from Kubernetes is replaced and Cilium handles its duties. I'm not an expert in how Cilium works, but this mode should improve service-to-service communication (and networking in general) by leveraging eBPF functionality.

I have noticed (using tcpdump) that when this mode is enabled, if I make requests from one pod to another service (e.g. curl http://servicename.namespace), the connections are "magically" made directly to the destination pod (pod-ip:target-port), rather than going through the ClusterIP of the destination service. I don't know the internals of how istio-proxy is configured or how the metadata filter works, but this behaviour seems to make istio-proxy think that requests go directly to pod-ip:container-port, so no route from the Istio config is matched and the traffic goes through some default TCP path.

[ ] Docs

[ ] Installation

[X] Networking

[ ] Performance and Scalability

[X] Extensions and Telemetry

[ ] Security

[ ] Test and Release

[ ] User Experience

[ ] Developer Infrastructure

Expected behavior

Istio metadata headers should be added to requests even when using Cilium's kube-proxy replacement.

Steps to reproduce the bug

To test this bug, I installed kube-proxy and changed Cilium's config to kubeProxyReplacement=partial, and inter-service telemetry started to work, with no changes to the Istio setup at all. Moreover, when inspecting traffic between pods using tcpdump, I was able to see some packets being sent to the ClusterIP of the destination service (this didn't happen previously).

Version (include the output of istioctl version --remote and kubectl version --short and helm version if you used Helm)

How was Istio installed?

istioctl and istio-operator

Environment where bug was observed (cloud vendor, OS, etc)

AWS, kops cluster

I am not able to share a config dump of my proxies, but this issue can be reproduced on any test cluster with Cilium configured as described.
