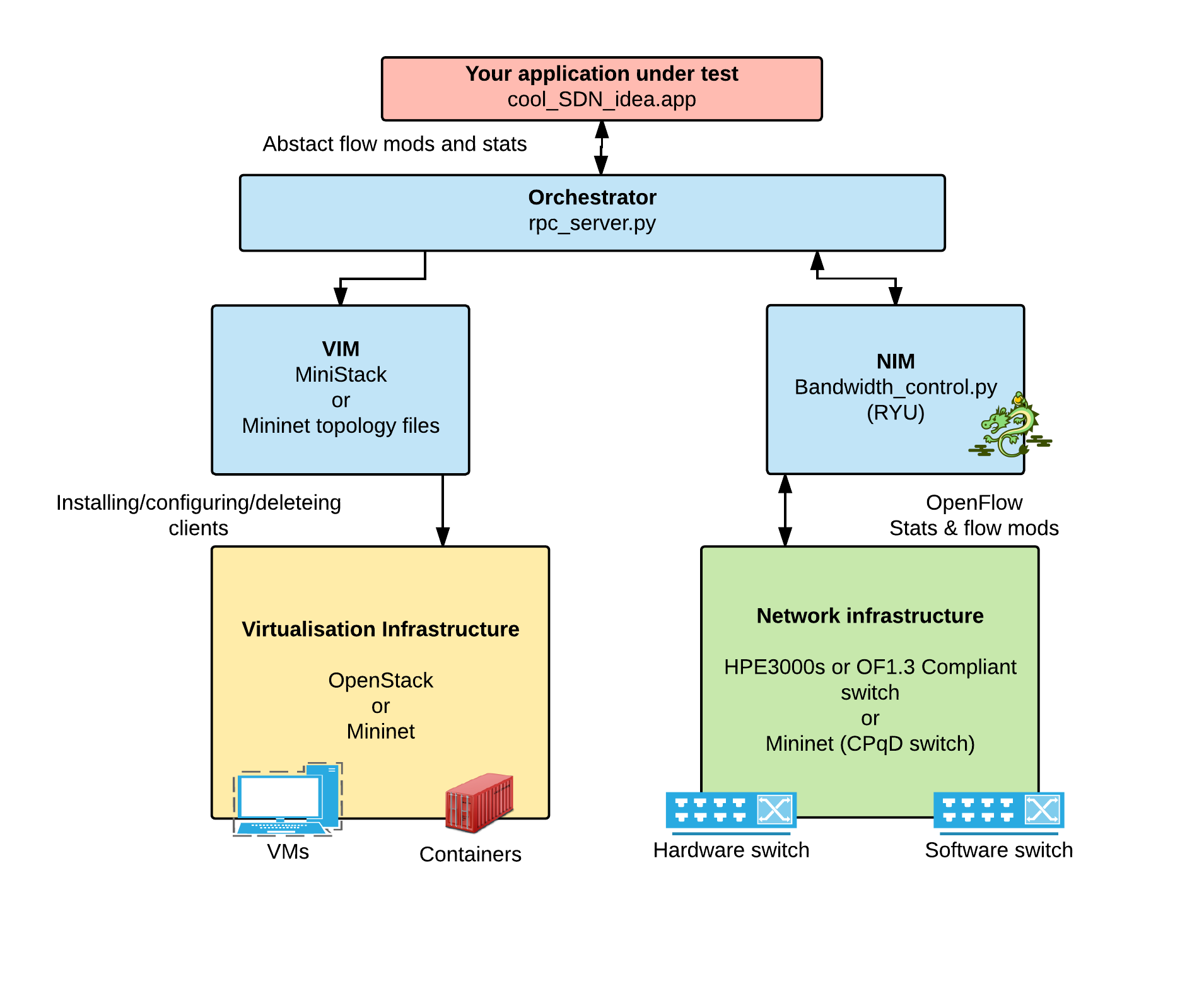

Online video streaming and the Internet of Things (IoT) are becoming the main consumers of future networks, generating high-throughput and highly dynamic traffic from large numbers of heterogeneous user devices. This places significant pressure on the underlying networks and degrades performance, efficiency and fairness. To address this issue, future networks must incorporate contextual network designs that recognize application and user-level requirements. However, new designs of network management components such as resource provisioning models are often tested within simulation environments, which lack the subtleties of how network protocols and relevant specifications are executed by network equipment in practice. This paper contributes the design and operational guidelines for a software-defined networking (SDN) experimentation framework (REF), which enables rapid evaluation of contextual networking designs using real network infrastructures. A use case study of a QoE-aware resource allocation model demonstrates the effectiveness of REF in facilitating design and validation of SDN-assisted networking.

To get started with REF, a few dependencies are required: infrastructure control, client automation, and the REF controller. It is recommended that you use all of the tools and packages provided in this repository, as their versions have been frozen for use with REF. Newer versions of other tools may offer more features, but we cannot guarantee that they will work.

Install python and pip using your package manager.

% sudo apt-get install python

% sudo apt-get install python-pip

Install all pip requirements using the requirements.txt file provided (this includes the ryu controller):

% sudo pip install -r requirements.txt

This option uses MiniStack with OpenStack to automate VM creation and environment cleanup at scale.

Need some help getting OpenStack together? Check out our single-command OpenStack build scripts: https://github.com/hdb3/openstack-build

Visit the readme here for usage instructions: https://github.com/lyndon160/REF/tree/master/ministack

After OpenStack is set up (with VLAN configurations), connect it to your OpenFlow 1.3 compliant switches. If not already configured, configure the switches to point to the controller (the IP address that REF is running on).

With this solution, you will be limited in scale because of the limited throughput available with compliant soft switches (CPqD). This solution uses Mininet to bring up and destroy clients at scale.

Clone mininet

$ git clone https://github.com/mininet/mininet

Build mininet with the CPqD soft switch

$ sudo ./install.sh -n3fxw

To use this option you must use the CPqD switch because, as of yet, OvS does not support the extended features used in this framework.

Use cluster command to automate your clients across the network.

TODO add installation details for this

Visit the readme here for further usage details: https://github.com/lyndon160/REF/tree/master/cluster_command/

TODO

The REF controller is a modified version of the Ryu controller (http://osrg.github.io/ryu/); it can be found in the /openflow_bandwidth folder. The application supports the basic forwarding applications available in Ryu and offers control of the network through an RPC interface. It reports context in the network by monitoring all flows, meters and ports, and it keeps track of the maximum throughput seen on the switch and on any single flow, port, or meter. The controller runs a JSON-RPC server for interfacing (see below for API).

Install the dependencies: Python, pip, and the Ryu controller.

Next, to install the REF controller, clone this repository and change directory to bandwidth_control/:

% git clone http://github.com/lyndon160/REF

Assuming the dependencies are correctly installed, you can run the controller with:

% ryu-manager bandwidth_control_simple_switch_13.py

Copy the sample skeleton program provided in this repository under samples (samples/skeleton.py).

Run bandwidth_control_simple_switch_13.py as a Ryu app

% ryu-manager bandwidth_control_simple_switch_13.py

Make sure that any switches you have are configured to use a controller at the IP address on which you're running bandwidth_control_simple_switch_13.py.

By default, the RPC server runs at http://localhost:4000/jsonrpc

Now run your REF application (your utility application)

% python ./my_REF_app.py

The default application maintains a periodic state-transfer with the controller. In this simple example, the total bandwidth observed by all switches on the network is reported.
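A periodic state transfer of this kind can be sketched as below. This is not the contents of samples/skeleton.py; it is a minimal illustration that assumes the result shape documented for report_all_ports in the API section, and the names `total_bandwidth`, `poll` and `fetch_all_ports` are hypothetical. It is written for Python 3 and uses only the standard library.

```python
import time


def total_bandwidth(all_ports):
    """Sum upload and download B/s over every port of every switch.

    `all_ports` is assumed to look like the report_all_ports result:
    {switch_id: {port_no: [upload_Bps, download_Bps]}}.
    """
    total_up = 0
    total_down = 0
    for ports in all_ports.values():
        for upload, download in ports.values():
            total_up += upload
            total_down += download
    return total_up, total_down


def poll(fetch_all_ports, interval=5.0, rounds=3, sleep=time.sleep):
    """Call `fetch_all_ports` every `interval` seconds and collect the totals.

    `fetch_all_ports` is any zero-argument callable returning the
    report_all_ports result (hypothetical hook; supply your own RPC call).
    `rounds` bounds the loop so that the sketch always terminates.
    """
    history = []
    for i in range(rounds):
        history.append(total_bandwidth(fetch_all_ports()))
        if i < rounds - 1:
            sleep(interval)
    return history
```

Passing the fetch function in as an argument keeps the loop independent of the RPC client, so it can be exercised with canned data before a controller is running.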

For other features, look at the API available on the JSON RPC server's interface (see below).

The JSON-RPC server is an HTTP server. The following examples show how to develop a Python application using the python-jsonrpc library (https://pypi.python.org/pypi/python-jsonrpc), but in principle the procedures can be called from any JSON-RPC client.

Install

% pip install python-jsonrpc

Import

import pyjsonrpc

Connection

http_client = pyjsonrpc.HttpClient(url = "http://localhost:4000/jsonrpc")

Procedure calling.

# Direct call

result = http_client.call("<procedure>", arg1, arg2)

# Use the *method* name as *attribute* name

result = http_client.procedure(arg1, arg2)

# Notifications send messages to the server without expecting a reply

http_client.notify("<procedure>", arg1, arg2)
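If you would rather not depend on python-jsonrpc, the same calls can be made with a plain HTTP POST. The following is a sketch under assumptions: it targets Python 3 and the standard library, the helper names `build_request` and `call` are hypothetical, and it assumes the server answers with a standard JSON-RPC 2.0 reply.

```python
import json
import urllib.request

RPC_URL = "http://localhost:4000/jsonrpc"  # default address given in this README


def build_request(method, params, request_id=None):
    """Build a JSON-RPC 2.0 message; leaving out the id makes it a notification."""
    message = {"jsonrpc": "2.0", "method": method, "params": list(params)}
    if request_id is not None:
        message["id"] = request_id
    return message


def call(method, *params, url=RPC_URL, request_id=1, timeout=5.0):
    """POST a request and return the "result" member of the reply."""
    body = json.dumps(build_request(method, params, request_id)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        reply = json.loads(response.read().decode("utf-8"))
    if reply.get("error") is not None:
        raise RuntimeError(reply["error"])
    return reply["result"]
```

With a controller running, `call("report_all_ports")` would then return the decoded result, and `build_request(...)` without an id produces the body of a notification.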

report_port

Reports the maximum seen throughput of a specific port on a specific switch.

Params: [<switch_id>, <port_no>]

Result: [<upload B/s>, <download B/s>]

--> {"jsonrpc": "2.0", "method": "report_port", "params": [<switch_id>, <port_no>], "id": 1}

<-- {"jsonrpc": "2.0", "result": [<upload B/s>, <download B/s>], "id": 1}
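A reply of this form can be decoded as sketched below. The `PortRate` tuple and both helper names are hypothetical, and the conversion assumes decimal megabits (1 Mbit = 1,000,000 bits).

```python
import json
from collections import namedtuple

# Hypothetical container for the two values returned by report_port.
PortRate = namedtuple("PortRate", ["upload_Bps", "download_Bps"])


def parse_report_port(reply_text):
    """Decode a raw report_port reply into a PortRate (values in bytes/s)."""
    reply = json.loads(reply_text)
    if reply.get("error") is not None:
        raise RuntimeError(reply["error"])
    upload, download = reply["result"]
    return PortRate(upload, download)


def to_mbit_per_s(bytes_per_s):
    """Convert bytes per second to megabits per second."""
    return bytes_per_s * 8 / 1e6
```

Converting at the edge like this keeps the rest of an application working in B/s, which is the unit the API uses throughout.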

report_flow - Not implemented

Reports the throughput of a specific flow on a specific switch.

Params: [<switch_id>, <flow_id>]

Result: <B/s>

report_switch_ports

Reports the throughput of all ports on a specific switch.

Params: <switch_id>

Result: JSON formatted port list

{

<port_no>:[<upload B/s>, <download B/s>],

...

<port_no>:[<upload B/s>, <download B/s>]

}

report_switch_flows - Not implemented

Reports the throughput of all flows on a specific switch.

Params: <switch_id>

Result: JSON formatted flow list

{

<flow_id>:<B/s>,

...

<flow_id>:<B/s>

}

report_all_ports

Report the throughput of all ports on all switches under the control of the controller.

Result: JSON formatted switch & port list

{

<switch_id>:{

<port_no>:[<upload B/s>, <download B/s>],

...

<port_no>:[<upload B/s>, <download B/s>]

},

...

<switch_id>:{

<port_no>:[<upload B/s>, <download B/s>],

...

<port_no>:[<upload B/s>, <download B/s>]

}

}
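As an illustration of working with this nested structure, the sketch below picks the busiest port on each switch. The function name is hypothetical, and "busiest" is taken to mean the largest combined upload and download rate, which is an assumption made for the example.

```python
def busiest_ports(all_ports):
    """Return {switch_id: (port_no, combined_Bps)} for each switch.

    `all_ports` is assumed to follow the report_all_ports result shape:
    {switch_id: {port_no: [upload_Bps, download_Bps]}}.
    Switches that report no ports are skipped.
    """
    result = {}
    for switch_id, ports in all_ports.items():
        if not ports:
            continue
        # Rank ports by upload + download throughput.
        port_no, rates = max(ports.items(), key=lambda item: sum(item[1]))
        result[switch_id] = (port_no, sum(rates))
    return result
```

Note that once the reply has been decoded from JSON, the switch and port identifiers arrive as strings, since JSON object keys are always strings.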

report_all_flows

Report the throughput of all flows on all switches under the control of the controller.

Result: JSON formatted switch & flow list

{

<switch_id>:{

<flow_id>:<B/s>,

...

<flow_id>:<B/s>

},

...

<switch_id>:{

<flow_id>:<B/s>,

...

<flow_id>:<B/s>

}

}
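The flow list can be flattened to find the heaviest flows across the whole network. This is a small sketch with a hypothetical function name, assuming the result shape shown above.

```python
def top_flows(all_flows, n=3):
    """Return the n highest-throughput flows as (switch_id, flow_id, Bps) tuples.

    `all_flows` is assumed to follow the report_all_flows result shape:
    {switch_id: {flow_id: Bps}}.
    """
    flat = [
        (switch_id, flow_id, rate)
        for switch_id, flows in all_flows.items()
        for flow_id, rate in flows.items()
    ]
    # Highest rate first; ties keep their original order (sorted is stable).
    return sorted(flat, key=lambda entry: entry[2], reverse=True)[:n]
```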

reset_port - Notification

Resets the recorded throughput of a specific port so that it is recalculated.

Params: [<switch_id>, <port_no>]

reset_flow - Notification - Not implemented

Resets the recorded throughput of a specific flow so that it is recalculated.

Params: [<switch_id>, <flow_id>]

reset_switch_ports - Notification

Resets all recorded throughputs of all ports on a specific switch.

Params: <switch_id>

reset_switch_flows - Notification

Resets all recorded throughputs of all flows on a specific switch.

Params: <switch_id>

reset_all_ports - Notification

Resets all recorded throughputs of all ports on all switches under the control of the controller.

reset_all_flows - Notification

Resets all recorded throughputs of all flows on all switches under the control of the controller.

Enforce procedures in progress

enforce_port_outbound - Notification

Enforces an outbound bandwidth restriction on a specific port. Any previous enforcements will be replaced.

Params: [<switch_id>, <port_no>, <speed B/s>]

enforce_port_inbound - Notification

Enforces an inbound bandwidth restriction on a specific port. Any previous enforcements will be replaced.

Params: [<switch_id>, <port_no>, <speed B/s>]

enforce_flow - Notification

Enforces a bandwidth restriction on an existing flow. Any previous enforcements will be replaced.

Params: [<switch_id>, <flow_id>]

enforce_service

Enforces a bandwidth restriction on a service denoted by the source and destination address pair.

Params: [<switch_id>, <src_addr>, <dst_addr>, <speed B/s>]

block_flow

Blocks a flow for a set duration on the specified switch.

Params: [<switch_id>, <src_addr>, <dst_addr>, <duration seconds>]
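Because the enforcement procedures take speeds in B/s, it can help to build the parameter lists through small helpers that convert units and reject bad values. The helpers below are hypothetical and only prepare the positional parameters in the order documented above; they do not contact the controller.

```python
def mbit_to_bytes_per_s(mbit_per_s):
    """Convert decimal megabits per second to whole bytes per second."""
    return int(mbit_per_s * 1000000 / 8)


def service_limit_params(switch_id, src_addr, dst_addr, limit_mbit):
    """Parameters for a per-service limit: [switch, src, dst, speed B/s]."""
    if limit_mbit <= 0:
        raise ValueError("limit must be positive")
    return [switch_id, src_addr, dst_addr, mbit_to_bytes_per_s(limit_mbit)]


def block_flow_params(switch_id, src_addr, dst_addr, duration_s):
    """Parameters for block_flow: [switch, src, dst, duration in seconds]."""
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    return [switch_id, src_addr, dst_addr, duration_s]
```

The resulting list can be unpacked into whichever client call you use, for example as the positional arguments after the procedure name.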

Show everything

% python report_throughput.py -a

Show all ports on a switch

% python report_throughput.py -s <switch_id>

Show a specific port on a switch

% python report_throughput.py -s <switch_id> -p <port_no>

enforce_throughput_port.py

% python enforce_throughput_port.py <switch_id> <port_no> <speed B/s>

enforce_throughput_service.py

% python enforce_throughput_service.py <switch_id> <src_ip> <dst_ip> <speed B/s>

block_flow.py

% python block_flow.py <switch_id> <src_ip> <dst_ip> <duration seconds>

An experimental MPEG-DASH request engine with support for accurate logging. This is used on the clients for the QoE use case.

This is a simple bridge SDN controller that rate-limits on a per-port basis. It is only used when the target OpenFlow hardware does not support metering on multiple tables within a single pipeline.

There are a number of additional scripts I used in the experimentation. For example, the /tools/reboot.sh script is used to reboot all the nodes via the nova interface. It could be used again (assuming we name the hosts and servers the same). This naming shouldn't be an issue if we use the /tools/specmuall.py script in conjunction with Nic's ministack tools, which will recreate the exact same configuration of VMs and networks that we used in the experiment. The only thing missing is the configuration of the HP switches and the VLAN trickery used to ensure VMs appear on particular ports on each of the virtual switches present on the physical HP switch.

There are two bash scripts used during the experiments. These were run simultaneously on each of the VMs using cluster SSH. The /tools/pre.sh script was run before the experiments started and basically ensured that there was connectivity between each of the hosts and the server. It prints a nice green message if there is, and a big red [FAIL] if not. This helped debug connectivity issues before we even started. The /tools/go.sh script was run in a similar manner (using cluster SSH) to start the tests. The one included is for the background traffic generation using wget; evidently, it will be different on the hosts playing video (I forgot to retrieve that one, but it basically started Scootplayer pointing at the server and playing the desired version of Big Buck Bunny). I just called it the same so that you could simply hit $ ./go.sh and start the damned thing!
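For readers who prefer Python to bash, a connectivity pre-check in the spirit of pre.sh could look like the sketch below. It is not a port of /tools/pre.sh: the TCP probe on port 80, the message format and all names are assumptions made for illustration.

```python
import socket

GREEN = "\033[32m"
RED = "\033[31m"
RESET = "\033[0m"


def tcp_probe(host, port=80, timeout=2.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def connectivity_report(hosts, probe=tcp_probe):
    """Return one coloured status line per host: green OK or red [FAIL]."""
    lines = []
    for host in hosts:
        if probe(host):
            lines.append(f"{GREEN}[ OK ] {host}{RESET}")
        else:
            lines.append(f"{RED}[FAIL] {host}{RESET}")
    return lines
```

Printing each returned line on every VM before a run gives the same at-a-glance signal; the `probe` argument can be swapped for a ping-based check if TCP is not appropriate for your setup.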

An alternative to cssh was created because cssh had a tendency to close sessions or fail to open some. The experiments can be run using cluster command, a simple RPC program which contains scripts specific to this experiment. The server is already installed and running on the clients. (It is included in the REF repository as /cluster_command.)

I included a sample output from the integration code in /samples/debug.log. This is basically so that you know what the output will be like. This data can then be transformed and plotted. The /samples/history.txt file is a dump of the bash history; it serves as an example of how to run the experiment.

This glues together the various elements. It includes both my integration code and Mu's QoE code, and can be found in the /UFair folder. The code to run is the /UFair/integration.py script. This will do the talking between the OpenFlow controller, Mu's QoE code and the REF framework. Try python integration.py -h to check out the parameters you can pass. To install the required packages, run pip install -r requirements.txt; that should install everything.

The configuration file used in the experiments (/UFair/config.json) is a tree structure, describing the nodes (hosts, servers and switches) and the connections between them (the edges). The config is fairly self-explanatory, but is rather tedious to build.

This uses the REF framework to get information about the throughput of all current flows in the network. Then, using a whitelist, it determines how much bandwidth each node in the network should be delegated. Furthermore, it uses information about meters from REF, including the drop rate, to determine whether an attack is taking place; with this information it can decide whether or not to block the flow. This is highly dependent on the amount of available bandwidth. https://github.com/lyndon160/Smart-ACL

The UFair paper has been published and is available to read. The Smart-ACL paper is currently in submission. The REF paper is currently in submission.