
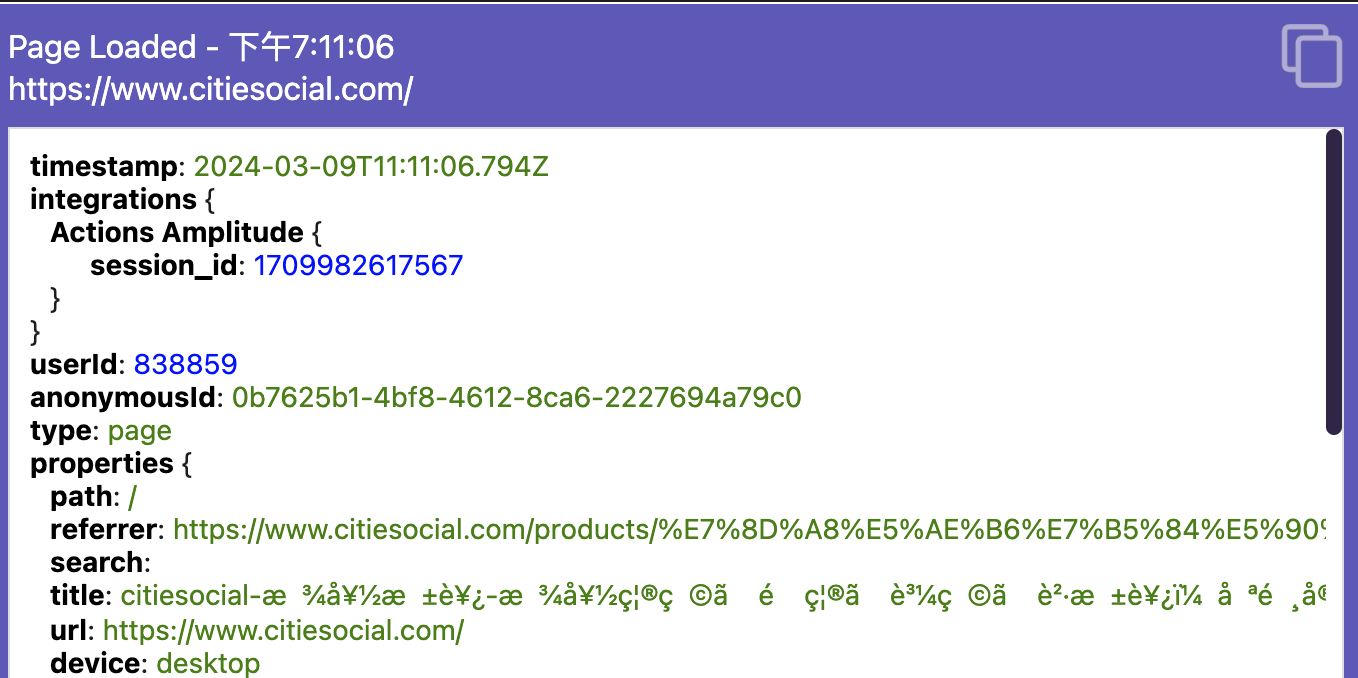

Problem:

Currently, the application uses `String.fromCharCode.apply(null, new Uint8Array(...))` to convert binary data from network requests into strings. This approach is unreliable for UTF-8 encoded text: `String.fromCharCode` treats every byte as a separate UTF-16 code unit, so any character encoded as more than one byte in UTF-8 (for example, Chinese characters) is split into several unrelated characters and the output is garbled.
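The failure can be reproduced in isolation. The following is a minimal sketch, not the code from `background.js`; the helper name `decodeWithFromCharCode` and the sample bytes are assumptions chosen for illustration.

```javascript
// Hypothetical helper mirroring the pattern described above.
function decodeWithFromCharCode(buffer) {
  return String.fromCharCode.apply(null, new Uint8Array(buffer));
}

// UTF-8 bytes for "中文": each character takes three bytes.
const utf8Bytes = new Uint8Array([0xe4, 0xb8, 0xad, 0xe6, 0x96, 0x87]);

const garbled = decodeWithFromCharCode(utf8Bytes.buffer);

// Each byte became its own character, so six characters come back
// instead of two, and none of them is the intended text.
console.log(garbled.length);      // 6
console.log(garbled === "中文");  // false
```

ASCII-only responses are unaffected because every ASCII character is a single byte, which is why the problem only shows up with non-ASCII text.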

Proposed Solution:

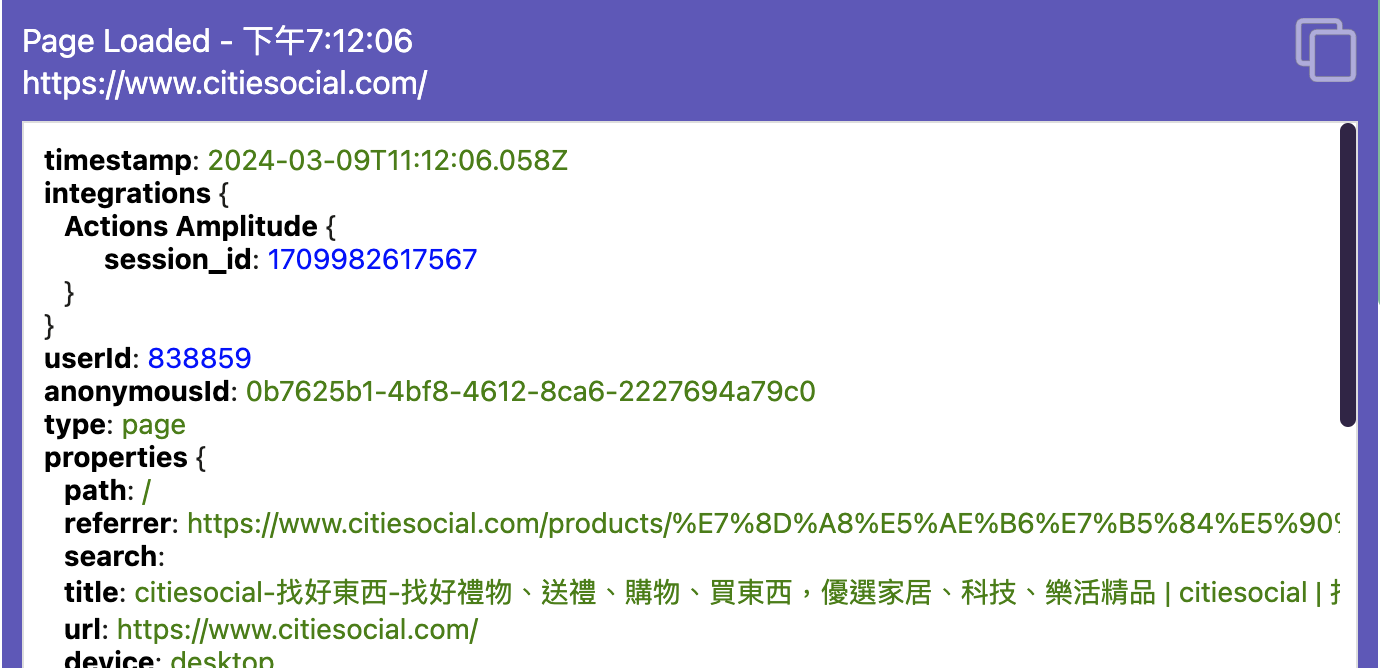

I suggest switching to the `TextDecoder` API for decoding binary data. `TextDecoder` decodes the byte sequence as UTF-8 rather than mapping each byte to a character, so all characters are represented correctly, including those outside the ASCII range.
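As a rough illustration of the proposed approach, here is a minimal sketch. It is not the actual change to `background.js`; the helper name `decodeUtf8` and the sample bytes are assumptions.

```javascript
// Hypothetical helper: decode an ArrayBuffer (or typed array) as UTF-8.
function decodeUtf8(buffer) {
  return new TextDecoder("utf-8").decode(buffer);
}

// UTF-8 bytes for "中文": each character takes three bytes.
const responseBytes = new Uint8Array([0xe4, 0xb8, 0xad, 0xe6, 0x96, 0x87]);

const decoded = decodeUtf8(responseBytes.buffer);

console.log(decoded);         // 中文
console.log(decoded.length);  // 2
```

`TextDecoder` also avoids spreading every byte into a function argument, which `String.fromCharCode.apply` does and which can fail on very large responses.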

For Example:

In `background.js`, line 109, change to

Results

If you have any questions, please let me know. Thank you!
