Improve admin upgrade page UX when upgrade service fails to update containers #7859

Comments

This is ready for AT for self-hosted on:

To AT:

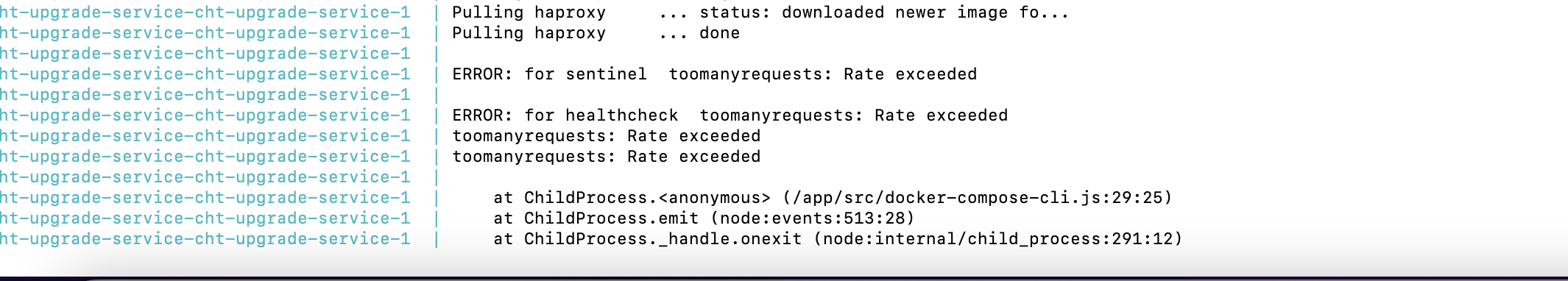

Seeing these errors, @dianabarsan. Maybe we need another branch to upgrade to that has this fix. API logs (quite chatty):

Files I am using: cht-core.yml, cht-couchdb.yml

Hi @ngaruko, it seems that you're hitting the pull rate limit :) This is not related to this change.

Environment:

Steps to reproduce:

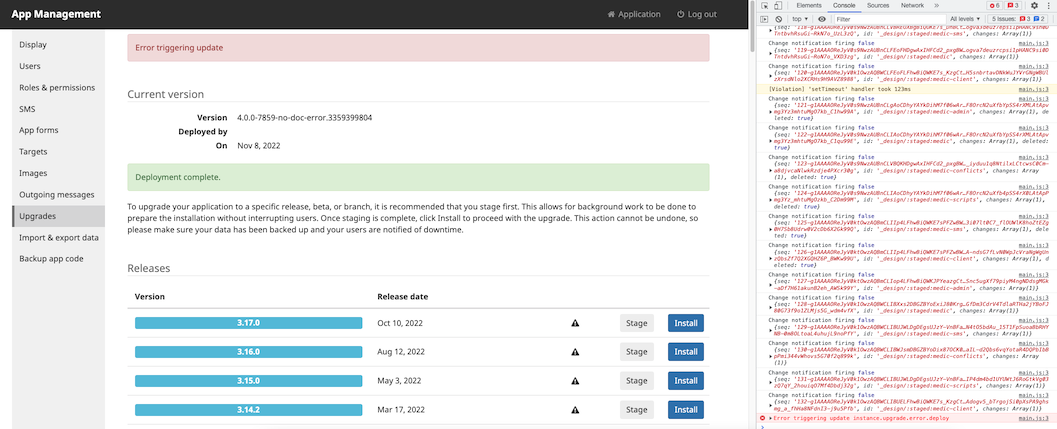

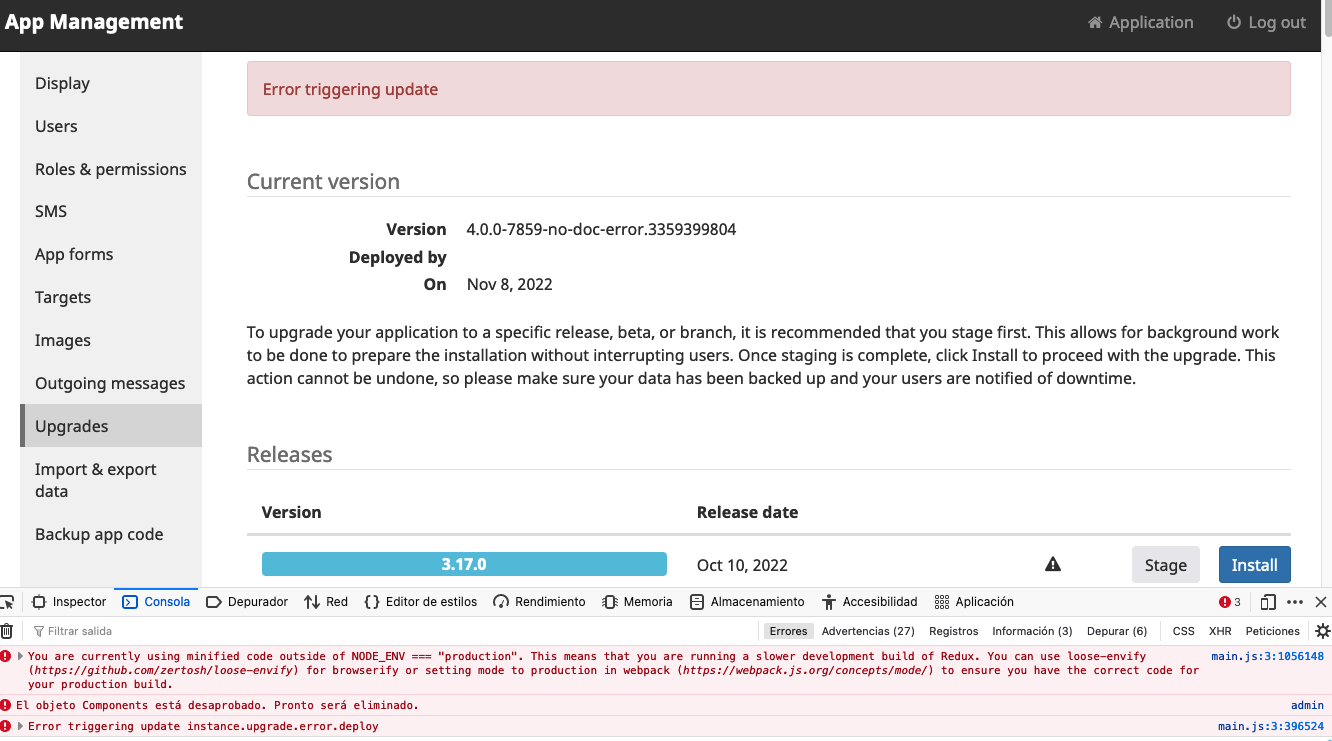

I'm a bit intrigued as to why you're seeing BOTH "deployment complete" and "error triggering update". I'll have to look into that.

Oh, you are right! Good catch, sorry about that. I focused on verifying the version and did not notice the green success message. Let me know what you discover, and I will test it again.

I'm not seeing the "deployment complete" message locally. Could you please try again? Just open the upgrade page and directly try to upgrade to a different branch.

Retested.

Steps to reproduce:

cht-upgrade-service log:

Thanks so much, @lorerod! I'll create the issue about clearing the success status shortly.

Thanks to you, @dianabarsan. I will follow up on that issue.

Merged to

* add e2e tests to messages tab breadcrumbs
* fix breatfeeding typo for delivery form in default config (#7890) #7884
* add GH Action to replace placeholders with staging urls (#7860)
* add GH Action for staging urls per #7848
* trying to fix path...how I love how you have to push to test a 1 line change...
* Clean up readme and template changes in prep for PR
* 2 spaces > 4 spaces per feedback
* make search tokens more unique per feedback
* test committ to trigger replace action
* revert pinning to this branch for testing
* collapse decleration of updateme object per feedback
* un-collapse decleration of updateme object against feedback
* fix typo in branch nane
* revert pin to branch
* collapse more objects per feedback
* unping from branch
* Update .github/actions/update-staging-url-placeholders/README.md

  Co-authored-by: Gareth Bowen <gareth@medic.org>
  Co-authored-by: Gareth Bowen <gareth@medic.org>
* Change staging server db #7879
* apply review recommendations
* minor changes
* Log error when no compose files were overwritten on upgrade complete (#7863) #7859
* Update deep staging link: builds -> builds_4
* add missing opening quote on couch readme (#7881)

Co-authored-by: Gareth Bowen <gareth@medic.org>
Co-authored-by: Ashley <8253488+mrjones-plip@users.noreply.github.com>
Co-authored-by: Diana Barsan <35681649+dianabarsan@users.noreply.github.com>

What feature do you want to improve?

If an upgrade goes well, we expect the API to be down for a couple of seconds while the new container boots up. This means that the admin upgrade page expects to receive HTTP errors while requesting upgrade progress.
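The expected-downtime handling described above can be sketched as a polling loop that treats HTTP errors as normal while the new API container boots, and only gives up after several consecutive failures. This is a minimal sketch under assumptions, not the admin app's actual code: `pollUpgradeProgress`, `fetchProgress`, `maxConsecutiveErrors` and the `UpgradeProgress` shape are hypothetical names chosen for illustration.

```typescript
// ASSUMPTION: hypothetical shape of one upgrade-progress response from the API.
interface UpgradeProgress {
  complete: boolean;
  version?: string;
}

// Hypothetical poller. HTTP errors are expected while the new API container
// boots, so they are counted instead of being treated as fatal right away.
async function pollUpgradeProgress(
  fetchProgress: () => Promise<UpgradeProgress>,
  sleep: (ms: number) => Promise<void>,
  intervalMs = 2000,
  maxConsecutiveErrors = 10,
): Promise<UpgradeProgress> {
  let consecutiveErrors = 0;
  for (;;) {
    try {
      const progress = await fetchProgress();
      consecutiveErrors = 0; // the API answered, so any restart is over
      if (progress.complete) {
        return progress;
      }
    } catch (err) {
      consecutiveErrors += 1;
      if (consecutiveErrors >= maxConsecutiveErrors) {
        // Unreachable for too long: more than the expected short restart.
        throw new Error(`API unreachable after ${consecutiveErrors} attempts: ${String(err)}`);
      }
    }
    await sleep(intervalMs);
  }
}
```

Resetting the error counter on every successful response means a short restart is tolerated, while an API that never comes back is eventually reported instead of being polled forever.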

At the same time, when we call the upgrade endpoint to finalize the upgrade and the request succeeds, we check whether the currently installed version is the version we requested. If it is not, we display an error:

The browser logs will only display an error without any details:

API logs will not report an error:

In this case, the successful upgrade call actually hides an error. The most common cause is that the docker compose files were not updated (because of a name mismatch; see medic/cht-upgrade-service#9).
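The version comparison that exposes this case can be sketched as a check that does not treat a successful HTTP response as proof that the upgrade happened. This is a hedged sketch: `checkUpgradedVersion` and the `UpgradeCheck` type are hypothetical, and the real admin page may read and compare versions differently.

```typescript
// Hypothetical result of the post-upgrade check on the admin upgrade page.
type UpgradeCheck =
  | { ok: true }
  | { ok: false; message: string };

// Compares the version reported after the upgrade call succeeds with the
// version that was requested. A 2xx response alone is not proof of an upgrade.
function checkUpgradedVersion(requested: string, installed: string | undefined): UpgradeCheck {
  if (installed === undefined) {
    return { ok: false, message: "Could not determine the installed version." };
  }
  if (installed !== requested) {
    return {
      ok: false,
      message: `Upgrade call succeeded, but installed version is ${installed} instead of ${requested}.`,
    };
  }
  return { ok: true };
}
```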

Describe the improvement you'd like

Analyze the response from the upgrade call to cht-upgrade-service.
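Analyzing that response could look roughly like the sketch below. The shape of the cht-upgrade-service response is an assumption here: the sketch treats it as a map from compose file name to a hypothetical `{ ok: boolean }` status. `checkComposeFilesUpdated`, the `Logger` interface and the default `cht-core.yml` file name are illustrative, not the actual cht-core API code. It logs both when no compose files were overwritten at all and when the `cht-core` file specifically was not.

```typescript
// ASSUMPTION: hypothetical response shape from cht-upgrade-service, mapping
// each docker compose file name to whether that file was overwritten.
type UpgradeServiceResponse = Record<string, { ok: boolean }>;

// Minimal logger interface so the sketch does not depend on a real logger.
interface Logger {
  error(message: string): void;
}

// Returns the compose files reported as overwritten and logs an error when
// none were, or when the expected cht-core file was not among them. In both
// cases the upgrade call "succeeds" but the containers are not upgraded.
function checkComposeFilesUpdated(
  response: UpgradeServiceResponse,
  logger: Logger,
  expectedFile = "cht-core.yml", // hypothetical default file name
): string[] {
  const updated = Object.keys(response).filter((file) => {
    const status = response[file];
    return status !== undefined && status.ok;
  });

  if (updated.length === 0) {
    logger.error("No docker compose files were overwritten; check that the compose file names match.");
  } else if (updated.indexOf(expectedFile) === -1) {
    logger.error(`The ${expectedFile} compose file was not updated.`);
  }
  return updated;
}
```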

If the `cht-core` compose file was not updated, display an error in the API logs to indicate this.

Describe alternatives you've considered

None.

