-

-

Notifications

You must be signed in to change notification settings - Fork 2.7k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Advanced Machine Learning #231

Comments

|

Some idea: categorize images in the database into "pure", "single-JPG", "double-JPG", "multi-JPG" (JPG as in JPG compression). |

waifu2x is implemented in LuaJIT/Torch, not Caffe. Torch already seems to outdated, it is good to switch to PyTorch, but for now I don't have resource to do it.

ResNet model is already found in dev branch.

It has already been realized. waifu2x can specify JPEG quality and compression times for real-time data augmentation at training. The dataset has been constructed with images that is not JPEG compressed. |

Maybe reduce the size of the ResNet by using less modules? And compare that with VGG5/7/9/16/19 to create a graph of epoch training speed compared to total training time and accuracy?

what about auto-detection of JPEG quality? Could that be implemented as well? |

Using shallow network, the accuracy is downgraded. I think it is related to the receptive field size (it depends on the number of layers and the filter size when use fully convolutional network). I think it may be solved with dilated convolution or progressive approach.

I already implemented it, but it is not an open source activity. JPEG noise level can be predicted with classification task, with sets of image patches. |

|

@nagadomi what about using expert systems for JPEG noise level detection? |

|

Looks like the resnet version is 2.3 times slower than upconv version. But get better quallity than the upcov with TTA (8 times slower). Which means it faster than the upcov with TTA but better quality. So it make sence to replace the normal TTA option. BTW, is there any plain to train an resnet art version model? |

|

Generally, in super resolution task, pooling layer can not be used. |

|

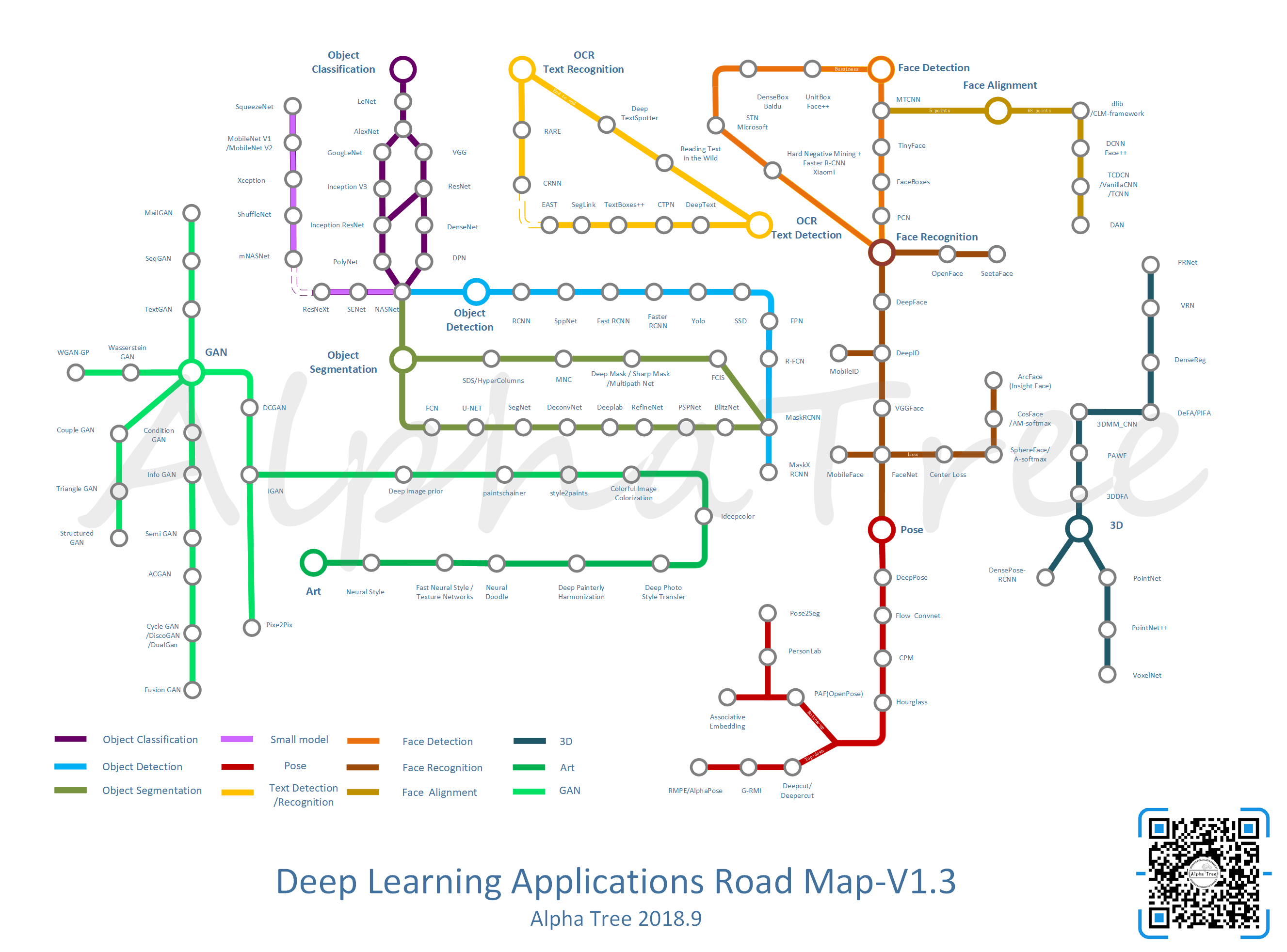

@nagadomi is it possible to see this graph (the purple parts) and see if there are alternatives for Waifu2x? |

|

@DonaldTsang Edit: |

|

@nagadomi There is a paper using atrous conv to segment small objects on satellite images. The model increase the atrous rates and then decrease them. I code a similar model on my manga text segmentation project and find a clear improvement on accuracy. I am rewriting and testing a similar model on image up-scaling. The preliminary result seems acceptable, and I plan to train it thoroughly on a server. |

|

@yu45020 I also develop OCR Engine for Manga, it is a closed source product so I can not describe the details, but there is a result on P59~ of this slide (Japanese). |

|

@nagadomi Your project seems to complete what I desire. It is very interesting and seems to be comparable to the ABBYSS's engine. My project's in sample prediction achieves similar result, but my goal is to segment all text pixels only. Back to your product. I notice the slices come from a seminar. Do you plan to publish a technical report ? |

The text was updated successfully, but these errors were encountered: