
How to work with depth cameras?

Most of the depth cameras we work with have three output channels: RGB, depth, and point cloud.

- The RGB channel is the color video stream from the camera (RGB stands for red, green, and blue). Each frame is represented as a 3D array of size w x h x 3, with one red, one green, and one blue value per pixel.

- The depth channel is the corresponding video stream that gives the distance from the camera to the object at each pixel. Each frame is usually a 2D array of size w x h, where each value is a distance.

- The point cloud channel is a collection of 3D positions (x, y, z) of the pixels relative to the camera. Combined with the color channel, it gives a colored 3D representation of the environment.

- Some cameras also provide an infrared channel.
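The three channels are closely related: a point cloud can be computed from a depth image and the camera's intrinsic parameters (focal lengths fx, fy and principal point cx, cy), then colored using the RGB image. Below is a minimal sketch in plain Python, assuming a pinhole camera model, depth values in millimeters (0 meaning no reading), and depth and color images that are already aligned pixel-for-pixel. The function name and example values are hypothetical. The images are stored as lists of rows (height first), a common convention, so pixel (u, v) is read as image[v][u].

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
Point = Tuple[float, float, float, RGB]  # (x, y, z) in meters plus the pixel's color


def depth_to_point_cloud(depth_mm: List[List[int]], rgb: List[List[RGB]],
                         fx: float, fy: float, cx: float, cy: float) -> List[Point]:
    """Back-project each valid depth pixel (u, v) into a colored 3D point.

    Assumes depth and color are registered (same size, pixel-aligned) and
    that depth values are in millimeters, with 0 meaning "no measurement".
    """
    points: List[Point] = []
    for v, row in enumerate(depth_mm):        # v: row index (image y)
        for u, d in enumerate(row):           # u: column index (image x)
            if d <= 0:
                continue                      # skip pixels without a reading
            z = d / 1000.0                    # millimeters -> meters
            x = (u - cx) * z / fx             # pinhole model, solved for x
            y = (v - cy) * z / fy             # pinhole model, solved for y
            points.append((x, y, z, rgb[v][u]))
    return points


if __name__ == "__main__":
    # Tiny 2-row x 3-column frame with made-up intrinsics.
    depth = [[1000, 0, 1000],
             [2000, 2000, 2000]]
    color = [[(255, 0, 0)] * 3,
             [(0, 255, 0)] * 3]
    for point in depth_to_point_cloud(depth, color, fx=1.0, fy=1.0, cx=1.0, cy=0.0):
        print(point)
```

The pixel with depth 0 is skipped, so this example yields five points, each carrying the color of the pixel it came from.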

Because we use ROS, we need ROS packages that support each camera. Most of these packages are open source and maintained by the community. Installation and usage differ between packages, but they generally publish similar ROS topics that we can subscribe to for the RGB, depth, and point cloud data. Many topics look alike but use different optimization algorithms or have different resolutions (hd versus sd), so read the package documentation or try the topics out to find the one that suits your needs.

Below is the list of cameras we have (newest at the top):

This section shows how to use the Kinect camera with ROS. Other cameras work similarly, but their topic names and launch arguments may differ. Before you continue, install the packages needed to use your camera.

To list the available ROS topics, run

rostopic list

Kinect ROS topics start with /kinect2, for example:

- /kinect2/sd/image_depth_rect

- /kinect2/hd/image_color

- /kinect2/hd/points

If no Kinect topics appear, check whether the roslaunch command that starts the camera driver is printing errors.
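The example topic names above follow a /kinect2/<resolution>/<stream> pattern. When the output of rostopic list is long, a small helper can sort the Kinect topics by resolution. The sketch below is hypothetical (group_kinect_topics is not part of ROS); it only parses topic-name strings, so you can paste the command output into it, and it assumes the namespace is kinect2.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def group_kinect_topics(topics: Iterable[str], namespace: str = "kinect2") -> Dict[str, List[str]]:
    """Group topic names of the form /<namespace>/<resolution>/<stream>... by resolution."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for topic in topics:
        parts = topic.strip().strip("/").split("/")
        # Expect [namespace, resolution, stream, ...]; ignore anything else.
        if len(parts) < 3 or parts[0] != namespace:
            continue
        groups[parts[1]].append("/".join(parts[2:]))
    return dict(groups)


if __name__ == "__main__":
    # Example input, e.g. pasted from the output of `rostopic list`.
    listing = """/kinect2/sd/image_depth_rect
/kinect2/hd/image_color
/kinect2/hd/points
/rosout"""
    print(group_kinect_topics(listing.splitlines()))
```

Here /rosout is ignored, sd maps to ['image_depth_rect'], and hd maps to ['image_color', 'points'].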

To record the point cloud data to a bag file, run

rosbag record /kinect2/sd/points

To play back the data, create a launch file called playback.launch with the following content:

<launch>

<!-- Creates a command line argument called file -->

<arg name="file"/>

<!-- Run rosbag play as a node, looping over the bag given by the file argument -->

<node name="rosbag" pkg="rosbag" type="play" args="--loop $(arg file)" output="screen"/>

</launch>

Run the launch file, passing the full path to the bag file:

roslaunch playback.launch file:=/ROS.bag

Open rviz to display the data:

rosrun rviz rviz

In the Displays panel, click Add, open the By topic tab, and add the PointCloud2 topic (/kinect2/sd/points). Then change the Fixed Frame (under Global Options) to kinect2_ir_optical_frame.

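Camera calibration is primarily about estimating the camera's internal (intrinsic) parameters that affect the imaging process, such as focal length, principal point, and lens distortion. For a depth camera with separate depth and color sensors, calibration also determines how the two sensors are positioned relative to each other, which controls how well depth and RGB line up. Calibration is an important step towards getting a highly accurate representation of the real world in the captured images. Below, you can see the quality differences between uncalibrated and calibrated data: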

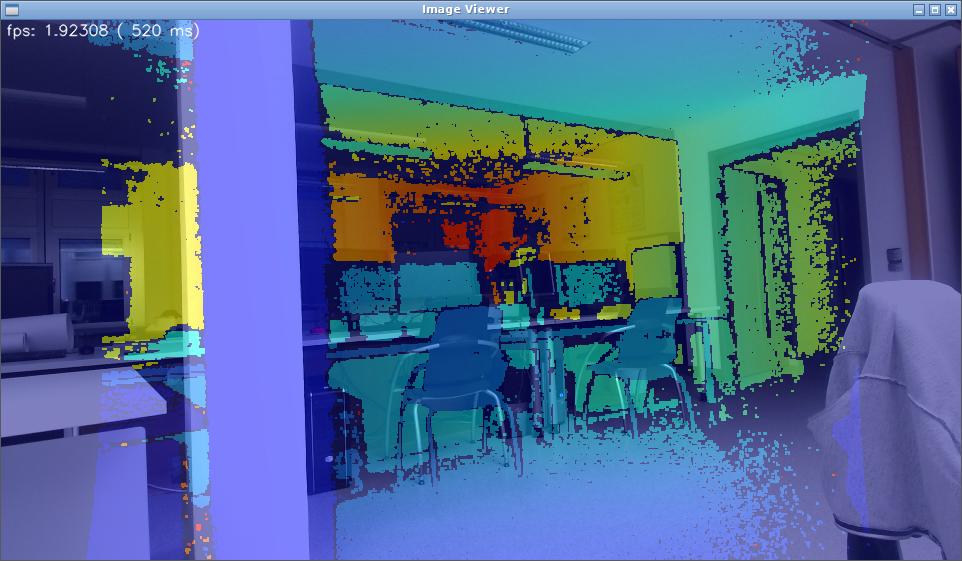

Uncalibrated rectified images (depth and RGB superimposed):

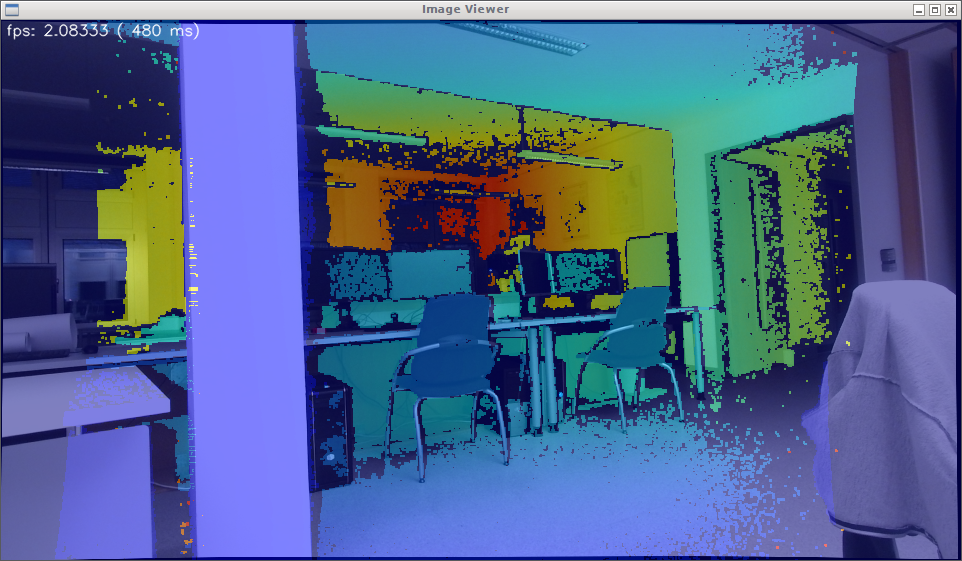

Calibrated rectified images (depth and RGB superimposed):
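Superimposing depth on RGB, as in the images above, means mapping each depth pixel into the color image. Below is a minimal sketch of that mapping, assuming an undistorted pinhole model for both cameras and a rigid transform (rotation R, translation t) from the depth camera frame to the color camera frame. The function name and all numbers are hypothetical. If t or the intrinsics are wrong, every depth pixel lands in the wrong place in the color image, which is the kind of misalignment calibration is meant to remove.

```python
from typing import List, Sequence, Tuple

Intrinsics = Tuple[float, float, float, float]  # (fx, fy, cx, cy)


def depth_pixel_to_color_pixel(u: float, v: float, depth_m: float,
                               depth_k: Intrinsics, color_k: Intrinsics,
                               rotation: Sequence[Sequence[float]],
                               translation: Sequence[float]) -> Tuple[float, float]:
    """Map pixel (u, v) of the depth image, with depth in meters, into the color image."""
    fx_d, fy_d, cx_d, cy_d = depth_k
    fx_c, fy_c, cx_c, cy_c = color_k
    # 1. Back-project the depth pixel to a 3D point in the depth camera frame.
    p = ((u - cx_d) * depth_m / fx_d, (v - cy_d) * depth_m / fy_d, depth_m)
    # 2. Express the point in the color camera frame: q = R * p + t.
    q: List[float] = [sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
                      for i in range(3)]
    if q[2] <= 0:
        raise ValueError("point is behind the color camera")
    # 3. Project the point into the color image (no lens distortion).
    return fx_c * q[0] / q[2] + cx_c, fy_c * q[1] / q[2] + cy_c


if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    depth_k = (365.0, 365.0, 256.0, 212.0)     # hypothetical depth intrinsics
    color_k = (1000.0, 1000.0, 960.0, 540.0)   # hypothetical color intrinsics
    good = depth_pixel_to_color_pixel(256, 212, 1.0, depth_k, color_k, identity, (0.05, 0.0, 0.0))
    off = depth_pixel_to_color_pixel(256, 212, 1.0, depth_k, color_k, identity, (0.06, 0.0, 0.0))
    print(good, off)
```

With these made-up numbers, a 1 cm error in the translation shifts the overlay by about 10 pixels for a point 1 m away.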

Each camera has its own calibration tool. For the Kinect for Xbox One, the calibration procedure is described in the kinect2_calibration package: https://github.com/olinrobotics/iai_kinect2/tree/master/kinect2_calibration
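To see what the intrinsic parameters do, here is a minimal sketch of the pinhole camera model with a simplified radial distortion term (only k1 and k2). This is an illustration under assumptions: real calibration tools, including the one linked above, may use a fuller distortion model, and the function name and parameter values below are hypothetical.

```python
from typing import Tuple


def project(point: Tuple[float, float, float],
            fx: float, fy: float, cx: float, cy: float,
            k1: float = 0.0, k2: float = 0.0) -> Tuple[float, float]:
    """Project a 3D point in the camera frame (z pointing forward) to pixel (u, v)."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    xn, yn = x / z, y / z                     # normalized image coordinates
    r2 = xn * xn + yn * yn                    # squared distance from the optical axis
    scale = 1.0 + k1 * r2 + k2 * r2 * r2      # simplified radial distortion factor
    return fx * xn * scale + cx, fy * yn * scale + cy


if __name__ == "__main__":
    intrinsics = dict(fx=365.0, fy=365.0, cx=256.0, cy=212.0)  # hypothetical values
    print(project((0.0, 0.0, 1.0), **intrinsics))          # lands on the principal point
    print(project((0.5, 0.0, 1.0), **intrinsics))          # no distortion
    print(project((0.5, 0.0, 1.0), **intrinsics, k1=0.1))  # same point with radial distortion
```

A point on the optical axis always lands on the principal point (cx, cy). Points further from the axis are moved more by a nonzero k1 (here from u = 438.5 to about 443.06). Estimating these numbers is the job of the calibration step.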

Read more:

If you need help, come find Merwan Yeditha (myeditha@olin.edu) or Audrey Lee (alee2@olin.edu)!