Zipkin 2.16

Zipkin 2.16 includes revamps of two components, our Lens UI and our Elasticsearch storage implementation. Thanks in particular to @tacigar and @anuraaga who championed these two important improvements. Thanks also to all the community members who gave time to guide, help with and test this work! Finally, thanks to the Armeria project whose work much of this layers on.

If you are interested in joining us, or have any questions about Zipkin, join our chat channel!

Lens UI Revamp

As so much has improved in the Zipkin Lens UI since 2.15, let's look at the top 5, in order of top of screen to bottom. The lion's share of the effort below is thanks to @tacigar, who has put hundreds of hours of effort into this release.

To understand these, you can refer to the following images, which annotate the number discussed. The first image in each section refers to 2.15 and the latter to 2.16.2 (the latest patch at the time).

1. Default search to 15 minutes, not 1 hour, and pre-can a 5 minute search

Before, we had a one hour search default, which unnecessarily hammers the backend. Interviewing folks, we realized that more often it is a 5-15 minute window that is of interest when searching for traces. By changing this, we give back a lot of performance with zero tuning on the back end. Thanks @zeagord for the help implementing this.
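To make the difference concrete, here is a minimal sketch of how a search window maps onto a query against the v2 HTTP API. It relies on the `endTs`, `lookback` and `limit` query parameters of `/api/v2/traces`, which are expressed in epoch milliseconds. The base URL and the helper name `trace_query_url` are assumptions for illustration, not code taken from Lens.

```python
from urllib.parse import urlencode

# Assumption: a Zipkin server listening locally; adjust for your site.
ZIPKIN_BASE_URL = "http://localhost:9411"


def trace_query_url(end_ts_millis, lookback_minutes=15, limit=10):
    """Build a /api/v2/traces URL covering `lookback_minutes` before `end_ts_millis`."""
    params = {
        "endTs": end_ts_millis,
        # The API takes the lookback in milliseconds.
        "lookback": lookback_minutes * 60 * 1000,
        "limit": limit,
    }
    return f"{ZIPKIN_BASE_URL}/api/v2/traces?{urlencode(params)}"
```

Calling `trace_query_url(now_millis)` asks the backend to scan a quarter of the time range that `trace_query_url(now_millis, lookback_minutes=60)` would, and `lookback_minutes=5` corresponds to the pre-canned 5 minute search.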

2. Global search parameters apply to the dependency diagram

One feature of Expedia Haystack we really enjoy is the global search. This is where you can re-use context added by the user for trace queries, for other screens such as network diagrams. Zipkin 2.16 is the first version to share this, as before the feature was stubbed out with different controls.
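As a rough illustration of the idea, the sketch below keeps one time window and derives both a trace query and a dependency query from it. It is not how Lens is implemented; the `SearchWindow` class and its method names are hypothetical. The only facts it leans on are that `/api/v2/traces` and `/api/v2/dependencies` both accept `endTs` and `lookback` in epoch milliseconds.

```python
from dataclasses import dataclass
from urllib.parse import urlencode


@dataclass(frozen=True)
class SearchWindow:
    """A time range chosen once by the user, in epoch milliseconds."""

    end_ts: int
    lookback: int

    def traces_path(self, service_name=None, limit=10):
        # Trace search adds its own criteria on top of the shared window.
        params = {"endTs": self.end_ts, "lookback": self.lookback, "limit": limit}
        if service_name:
            params["serviceName"] = service_name
        return "/api/v2/traces?" + urlencode(params)

    def dependencies_path(self):
        # The dependency diagram reuses the same window unchanged.
        params = {"endTs": self.end_ts, "lookback": self.lookback}
        return "/api/v2/dependencies?" + urlencode(params)
```

Because both paths come from the same object, switching from the trace list to the dependency diagram keeps the range the user already picked.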

3. Single-click into a trace

Before, we had a feature to preview traces by clicking on them. The presumed use case was to compare multiple traces. However, this didn't really work, as you can't guarantee traces will be near each other in a list. Moreover, large traces are not comparable this way. We dumped the feature for a simpler single-click into the trace, similar to what we had before Lens. This is notably better when combined with the network improvements described in 5. below.

4. So much better naming

Before, in both the trace list and also detail, names focused on the trace ID as opposed to what most are interested in (the top-level span name). By switching this out, and generally polishing the display, we think the user interface is a lot more intuitive than before.

5. Fast switching between trace search and detail screen

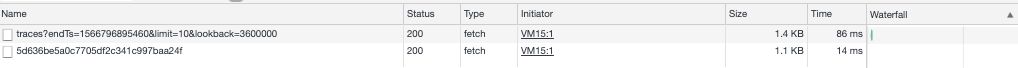

You cannot see 5 unless you are recording, because the 5th is about network performance. Lens now shares data between the trace search and the trace detail screen, allowing you to quickly move back and forth with no network requests and reduced rendering overhead.

Elasticsearch client refactor

Our first Elasticsearch implementation allowed requests to multiple HTTP endpoints to failover on error. However, it did not support multiple HTTPS endpoints, nor any load balancing features such as round-robin or health-checked pools.

For over two years, Zipkin sites have asked us to support sending data to an Elasticsearch cluster of multiple HTTPS endpoints. While folks have been patient, workarounds such as "set up a load balancer" or "change your hostnames and certificates" have not been received well. It was beyond clear we needed to do the work client-side. Now, ES_HOSTS can take a list of HTTPS endpoints.
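For a feel of what such a list looks like, here is a minimal sketch that splits an ES_HOSTS value into individual endpoints. It assumes the value is a comma-separated list of base URLs. The hostnames are placeholders, and the default ports used when none is given (443 for https, 9200 for http) are assumptions of this sketch rather than a statement of what the server does.

```python
from urllib.parse import urlsplit


def parse_es_hosts(es_hosts):
    """Split a comma-separated ES_HOSTS value into (scheme, host, port) tuples."""
    endpoints = []
    for entry in es_hosts.split(","):
        entry = entry.strip()
        if not entry:
            continue  # tolerate trailing commas and stray whitespace
        if "://" not in entry:
            entry = "http://" + entry  # assumption: bare host names mean plain HTTP
        url = urlsplit(entry)
        # Assumption: fall back to 443 for https and 9200 for http.
        port = url.port or (443 if url.scheme == "https" else 9200)
        endpoints.append((url.scheme, url.hostname, port))
    return endpoints
```

For example, `parse_es_hosts("https://es1.example.com:9243,https://es2.example.com")` yields two HTTPS endpoints, each of which can then be health checked and load balanced independently.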

Behind the scenes, any endpoints listed receive periodic requests to /_cluster/health. Endpoints that pass this check receive traffic in a round-robin fashion, while those that don't are marked bad. You can see detailed status from the Prometheus endpoint:

$ curl -sSL localhost:9411/prometheus|grep ^armeria_client_endpointGroup_healthy

armeria_client_endpointGroup_healthy{authority="search-zipkin-2rlyh66ibw43ftlk4342ceeewu.ap-southeast-1.es.amazonaws.com:443",ip="52.76.120.49",name="elasticsearch",} 1.0

armeria_client_endpointGroup_healthy{authority="search-zipkin-2rlyh66ibw43ftlk4342ceeewu.ap-southeast-1.es.amazonaws.com:443",ip="13.228.185.43",name="elasticsearch",} 1.0

Note: If you wish to disable health checks for any reason, set zipkin.storage.elasticsearch.health-check.enabled=false using any mechanism supported by Spring Boot.
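The health-checked round-robin behaviour described above can be pictured with a small sketch. This is not Armeria's implementation: the class name `HealthCheckedRoundRobin` is hypothetical, and the health check is passed in as a plain function so the example stays free of network calls. In the real client the check is a periodic request to /_cluster/health.

```python
class HealthCheckedRoundRobin:
    """Rotate through endpoints, skipping any that failed the last health check."""

    def __init__(self, endpoints, check_health):
        self._endpoints = list(endpoints)
        self._check_health = check_health  # callable: endpoint -> bool
        self._next = 0
        self._healthy = set()
        self.refresh()  # assumption: check once up front, then periodically

    def refresh(self):
        """Run one round of health checks; a scheduler would call this periodically."""
        self._healthy = {e for e in self._endpoints if self._check_health(e)}

    def select(self):
        """Return the next healthy endpoint in round-robin order."""
        for _ in range(len(self._endpoints)):
            candidate = self._endpoints[self._next % len(self._endpoints)]
            self._next += 1
            if candidate in self._healthy:
                return candidate
        raise RuntimeError("no healthy endpoints")
```

An endpoint that fails a check simply drops out of the rotation until a later `refresh()` sees it pass again, which is the property that makes a client-side list of HTTPS endpoints usable without an external load balancer.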

The mammoth effort here is thanks to @anuraaga. Even though he doesn't use Elasticsearch anymore, he volunteered a massive amount of time to ensure everything works end-to-end, all the way to Prometheus metrics and client-side health checks. A fun fact is Rag also wrote the first Elasticsearch implementation! Thanks also to the brave who tried early versions of this work, including @jorgheymans, @jcarres-mdsol and stanltam.

If you have any feedback on this feature, or more questions about us, please reach out on Gitter.

Test refactoring

Keeping the project going is not automatic. Over time, things take longer because we are doing more, testing more, and testing more dimensions. We ran into a timeout problem in our CI server. Basically, Travis has an absolute time limit of 45 minutes for any task. When running certain integration tests and publishing at the same time, we were routinely coming close to that, especially if the build cache was purged. @anuraaga did a couple of things to fix this. First, he ported the test runtime from classic JUnit to Jupiter, which allows more flexibility in how things are wired. Then, he scrutinized some expensive cleanup code, which was unnecessary considering the containers were throwaway. At the end of the day, this bought back 15 minutes for us to.. later fill up again 😄 Thanks, Rag!

Small changes

- We now document instructions for custom certificates in Elasticsearch.

- We now document the behaviour of in-memory storage, thanks to @jorgheymans

- We now have consistent COLLECTOR_X_ENABLED variables to turn off collectors explicitly, thanks to @jeqo

Background on Elasticsearch client migration

The OkHttp Java library is everywhere in Zipkin.. the first HTTP instrumentation in Brave, the encouraged way to report spans, and relevant to this topic, even how we send data to Elasticsearch!

For years, the reason we didn't support multiple HTTPS endpoints was that the feature we needed was on the OkHttp backlog. This is no criticism of OkHttp, as it is an edge case feature and there are workarounds, including layering a client-side load balancer on top. This stalled out for lack of volunteers to implement the OkHttp side or an alternative. Yet, people kept asking for the feature!

We recently moved our server to Armeria, resulting in increased stake, experience and hands to do work. Even though its client-side code is much newer than OkHttp, it was designed for advanced features such as client-side load balancing. The idea of re-using Armeria as an Elasticsearch client was interesting to @anuraaga, who volunteered both ideas and time to implement them. The result was a working implementation complete with client-side health checking, supported by over a month of Rag's time.

The process of switching off OkHttp taught us more about its elegance, and directly influenced improvements in Armeria. For example, Armeria's test package now includes utilities inspired by OkHttp's MockWebServer.

What we want to say is.. thanks OkHttp! Thanks for the formative years of our Elasticsearch client and years ahead as we use OkHttp in other places in Zipkin. Keep up the great work!